From our book “Mobile Unleashed”, this is a detailed history of Qualcomm:

Chapter 9: Press ‘Q’ to Connect

Unlike peer firms who started in other electronics segments and moved into communications devices later, Qualcomm has always focused on wireless technology to connect data between destinations reliably. Its CDMA technology was a leap ahead for mobile devices – if it could be made small enough, and if carriers could be persuaded to change from D-AMPS and GSM.

Qualcomm’s roots trace to some of the brightest minds in academia at the foremost technology universities in the US, first brought together working on the US space program as young engineers. A depth of technical expertise gained through serving demanding clients with digital communication systems established a foundation from which patents, chips, and devices grew.

Actual Rocket Scientists

The seminal article “A Mathematical Theory of Communication” published by Claude Shannon of Bell Labs in 1948 established information theory. Along with the invention of the transistor and advances in digital coding and computing, Shannon’s theorem and his tenure at the Massachusetts Institute of Technology (MIT) inspired an entire generation of mathematicians and scientists.

In June 1957, Andrew Viterbi, a graduate from MIT with a master’s degree in electrical engineering, joined the staff at the Jet Propulsion Laboratory (JPL) in Pasadena, California. At the time, JPL was owned by the California Institute of Technology, but worked under the auspices and funding of the US Army Ballistic Missile Command.

Viterbi was in JPL’s Communications Section 331, led by Solomon Golomb. They were developing telemetry payloads for missile and satellite programs. Golomb pioneered shift-register sequence theory, used to encode digital messages for reliability in high background noise. Viterbi was working on phase locked loops, a critical element in synchronizing a digital radio receiver with a transmitter so a stream of information can be decoded.

On October 4, 1957, the Russians launched Sputnik I. The next day, the Millstone Hill Radar at MIT Lincoln Labs (MITLL) – where researcher Irving Reed, best known for Reed-Solomon codes, was on staff – detected Sputnik in low-earth orbit. A young Ph.D. candidate at Purdue University, William Lindsey, used a ham radio to monitor a signal broadcast by Sputnik that rose and faded every 96 minutes as the satellite orbited.

The Space Race was officially underway. The US Navy rushed its Vanguard program to respond. On December 6, 1957, Test Vehicle 3 launched with a 1.3kg satellite. It flew an embarrassing 1.2m, crashing back to its pad just after liftoff and exploding. Its payload landed nearby in the Cape Canaveral underbrush, still transmitting. “And that was our competition,” said Golomb.

On January 31, 1958, JPL’s Project Deal – known to the outside world as Explorer I – achieved orbit. Life magazine featured a photo of Golomb and Viterbi in the JPL control room during the flight. On July 29, 1958, President Eisenhower signed the National Aeronautics and Space Act, creating NASA. JPL requested and received a move into the NASA organization in December 1958.

Viterbi enrolled at the University of Southern California (USC), the only school that would allow him to continue working full time at JPL, to pursue his Ph.D. He finished in 1962 and went to teach at the University of California, Los Angeles (UCLA). He recommended Golomb join the USC faculty in 1963. That started an influx of digital communications talent to USC that included Reed (who had moved to the Rand Corporation in Santa Monica in 1960), Lindsey (who joined JPL in 1962), Eberhardt Rechtin, Lloyd Welch, and others.

Lindsey would quip years later, “I think God made this group.” Rechtin would say that together, this group achieved more in digital communications than any of them could have done alone. They influenced countless others.

Linked to San Diego

At the 1963 National Electronics Conference in Chicago, best paper awards went to Viterbi and Irwin Jacobs, a professor at MIT whose office was a few doors down from that of Claude Shannon. Jacobs and Viterbi had crossed paths briefly in 1959 while Jacobs visited JPL for an interview, and they knew of each other’s work from ties between JPL and MIT.

Reacquainted at the 1963 conference, Jacobs suggested to Viterbi that he had a sabbatical coming up, and asked if JPL was a good place to work. Viterbi said that indeed it was. Jacobs’ application was rejected, but Viterbi interceded with division chief Rechtin, and Jacobs was finally hired as a research fellow and headed for Pasadena. Viterbi was teaching at UCLA and consulting at JPL, and the two became friends in the 1964-65 academic year Jacobs spent working there.

After publishing the landmark text “Principles of Communication Engineering” with John Wozencraft in 1965, Jacobs migrated to the West in 1966. He was lured by one of his professors at Cornell, Henry Booker, to join the faculty in a new engineering department at the University of California, San Diego (UCSD). Professors were valuable, and digital communication consultants were also in high demand. One day in early 1967, Jacobs took a trip to NASA’s Ames Research Center for a conference. He found himself on a plane ride home with Viterbi and another MIT alumnus, Len Kleinrock, who had joined the UCLA faculty in 1963 and became friends with Viterbi. The three started chatting, with Jacobs casually mentioning he had more consulting work than he could handle himself.

Viterbi was finishing his masterpiece. He sought a simplification to the theory of decoding faint digital signals from strong noise, one that his UCLA students could grasp more easily than the complex curriculum in place. Arriving at a concept in March 1966, he refined the idea for a year before publication. In April 1967, Viterbi described his approach in an article in the IEEE Transactions on Information Theory under the title “Error bounds for convolutional codes and an asymptotically optimum decoding algorithm.”

The Viterbi Algorithm leverages “soft” decisions. A hard decision on a binary 0 or 1 can be made by observing each noisy received bit (or group of bits encoded into a symbol), with significant chance of error. Viterbi considered probabilistic information contained in possible state transitions known from how symbols are encoded at the transmitter. Analyzing a sequence of received symbols and state transitions with an add-compare-select (ACS) operation identifies a path of maximum likelihood, more accurately matching the transmitted sequence.
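The add-compare-select idea can be sketched in a few lines of Python. This is only an illustrative toy, not Viterbi's published formulation or any Linkabit design: it assumes a small rate ½, constraint length 3 code with generators 7 and 5 (octal), symbols sent as +1.0 or -1.0, and a squared-distance metric on the soft received values. The names `encode` and `decode` are hypothetical.

```python
# Toy soft-decision Viterbi decoder. The code parameters below are
# illustrative assumptions, not taken from the text.
G = (0b111, 0b101)        # generator polynomials 7 and 5 (octal)
K = 3                     # constraint length
N_STATES = 1 << (K - 1)   # 4 states here; K = 7 would give 64


def parity(x):
    return bin(x).count("1") & 1


def encode(bits):
    """Encode bits plus K-1 flush zeros into symbols: 0 -> +1.0, 1 -> -1.0."""
    state, out = 0, []
    for b in list(bits) + [0] * (K - 1):
        reg = (b << (K - 1)) | state
        out.extend(1.0 - 2.0 * parity(reg & g) for g in G)
        state = reg >> 1
    return out


def decode(symbols):
    """Return the most likely bit sequence for noisy soft symbols."""
    inf = float("inf")
    metric = [0.0] + [inf] * (N_STATES - 1)   # encoder starts in state 0
    paths = [[] for _ in range(N_STATES)]
    for i in range(0, len(symbols), 2):
        received = symbols[i:i + 2]
        new_metric = [inf] * N_STATES
        new_paths = [None] * N_STATES
        for state in range(N_STATES):
            if metric[state] == inf:
                continue                      # state not reachable yet
            for b in (0, 1):
                reg = (b << (K - 1)) | state
                expected = [1.0 - 2.0 * parity(reg & g) for g in G]
                # Add: extend the path metric by this branch's distance
                cand = metric[state] + sum(
                    (x - e) ** 2 for x, e in zip(received, expected))
                nxt = reg >> 1
                # Compare and select: keep the best path into each state
                if cand < new_metric[nxt]:
                    new_metric[nxt] = cand
                    new_paths[nxt] = paths[state] + [b]
        metric, paths = new_metric, new_paths
    # The flush zeros force the encoder back to state 0; drop them
    return paths[0][:-(K - 1)]


message = [1, 0, 1, 1, 0, 0, 1]
noisy = encode(message)
noisy[3] *= -0.2          # one symbol arrives weak and with the wrong sign
decoded = decode(noisy)
```

A hard decision on the corrupted symbol alone would get it wrong, while the path search weighs it against its neighbors. The work per received symbol pair grows with the number of states, which gives a feel for why the register count mattered so much in early hardware.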

It was just theory, or so Viterbi thought at first. The algorithm reduced computations and error compared to alternatives, but was still intense to execute in real-time, and thought to require “several thousand registers” to produce low error rates. Several other researchers picked up the work, notably Jim Massey, David Forney, and Jim Omura. They were convinced it was optimum. Jerry Heller, one of Jacobs’ doctoral students at MIT who had come with him to San Diego, was working at JPL. He decided to run some simulations during 1968 and 1969 and found Viterbi had been too pessimistic; 64 registers yielded a significant coding gain. That was still a rather large rack of computing equipment at the time.

The entrepreneurial thought Jacobs planted for a consulting firm had stuck. With an investment of $1500 (each man contributing $500) Linkabit was born in October 1968 with an address of Kleinrock’s home in Brentwood. Soon, offices moved to a building in Westwood near UCLA. At first, it was a day-a-week effort for Jacobs, Kleinrock, and Viterbi, who all kept their day jobs as professors.

There was even more business than anticipated. Linkabit’s first new-hire engineer in September 1969 was Jerry Heller, soon followed by engineers Andrew Cohen, Klein Gilhousen, and Jim Dunn. Len Kleinrock stepped aside for a few months to pursue his dream project, installing the first endpoints of the ARPANET and sending its first message in October 1969. According to him, when he tried returning to Linkabit he was promptly dismissed, receiving a percentage of the firm as severance. With Kleinrock out and Viterbi not ready to relocate for several more years, Jacobs moved the Linkabit office to Sorrento Valley – the tip of San Diego’s “Golden Triangle” – in 1970. His next hire was office manager Dee Coffman (née Turpie), fresh out of high school.

Programming the Modem

“Coding is dead.” That was the punchline for several speakers at the IEEE Communication Theory Workshop in 1970 in St. Petersburg, Florida. Irwin Jacobs stood up in the back of the room, holding a 14-pin dual-in-line package – a simple 4-bit shift register, probably a 7495 in TTL families. “This is where it’s at. This new digital technology out there is going to let us build all this stuff.”

Early on, Linkabit was a research think tank, not a hardware company. Its first customers were NASA Ames Research Center and JPL, along with the Naval Electronics Laboratory in Point Loma, and DARPA. Linkabit studies around Viterbi decoding eventually formed the basis of deep space communications links used in JPL’s Voyager and other programs. However, compact hardware implementations of Viterbi decoders and other signal processing would soon make Linkabit and its successor firm legendary.

Heller and Jacobs disclosed a 2 Mbps, 64 state, constraint length 7, rate ½ Viterbi decoder in October 1971. It was based on a commercial unit built for the US Army Satellite Communications Agency. The Linkabit Model 7026, or LV7026, used about 360 TTL chips on 12 boards in a 19 inch rackmount, 4.5U (7.9”) high, 22” deep enclosure. Compared to refrigerator-sized racks of equipment previously needed for the Viterbi Algorithm, it was a breakthrough.

Speed was also a concern. Viterbi tells of an early Linkabit attempt to integrate one ACS state of the decoder on a chip of only 100 gates – medium scale integration, or MSI. In his words, the effort “almost bankrupted the company” through a string of several supplier failures. Almost bankrupt? It sounds like an exaggeration until considering the available alternatives to TTL. From hints in a 1971 Linkabit report and a 1974 Magnavox document, Linkabit was playing with fast but finicky emitter-coupled logic (ECL) in attempts to increase clock speeds in critical areas. Many companies failed trying things with ECL. Viterbi omitted names to protect the guilty, but the ECL fab suspects would be Fairchild, IBM, Motorola, and Signetics.

A different direction led to more success. Klein Gilhousen started tinkering with a concept for a Linkabit Microprocessor (LMP), a microcoded architecture for all the functions of a satellite modem. Gilhousen, Sheffie Worboys, and Franklin Antonio completed a breadboard of the LMP using mostly TTL chips with some higher performance MSI and LSI commercial parts in May 1974. It ran at 3 MIPS. There were 32 instructions and four software stacks, three for processing and one for control. It was part RISC (before there was such a thing), part DSP.

Jacobs began writing code and socializing the LMP, giving lectures at MITLL and several other facilities about the ideas behind digital signal processing for a satellite modem. The US Air Force invited Linkabit to demonstrate their technology for the experimental LES-8/9 satellites. TRW had a multi-year head start on the spread spectrum modem within the AN/ASC-22 K-Band SATCOM system, but their solution was expensive and huge.

Linkabit stunned the MITLL team by setting up their relatively small system of several 19” rackmount boxes and acquiring full uplink in about an hour, a task the lab staff was sure would take several days just to get a basic mode running. In about three more hours, they found an error in the MITLL design specifications, fixed the error through reprogramming, and had the downlink working. Despite TRW’s certification and production readiness, the USAF general in charge of the program funded Linkabit – a company that had never built production hardware in volumes for a defense program – to complete its modem development.

Besides the fact that the LMP worked so well, the reason for the intense USAF interest became clear in 1978. The real requirement was for a dual modem on airborne command platforms such as the Boeing EC-135 and Strategic Air Command aircraft including the Boeing B-52. The solution evolved into the Command Post Modem/Processor (CPM/P), using several LMPs to implement dual full-duplex modems and red/black messaging and control, ultimately reduced to three rugged ½ ATR boxes.

Linkabit was growing at 60% a year. Needing further capital to expand, they considered going public before being approached by another firm with expertise in RF technology, M/A-COM. In August 1980, the acquisition was completed. It radically altered the Linkabit culture from a freewheeling exchange of thoughts across the organization to a control-oriented, hierarchical structure. It didn’t stop innovation. Several significant commercial products debuted. One was Very Small Aperture Terminal (VSAT), a small satellite communications system using a 4 to 8 foot dish for businesses. Its major adopters included 7-11, Holiday Inn, Schlumberger, and Wal-Mart. Another was VideoCipher, the satellite TV encryption system that went to work at HBO and other broadcasters. Jerry Heller oversaw VideoCipher through its life as the technology grew.

Jacobs and Viterbi had negotiated the acquisition with M/A-COM CEO Larry Gould. As Jacobs put it, “We got along very well, but [Gould] went through a mid-life crisis.” Gould wanted to make management changes, or merge with other firms – none of it making a lot of sense. The M/A-COM board of directors instead replaced Gould (officially, “retired”) as CEO in 1982. Jacobs was an M/A-COM board member but was travelling in Europe and was unable to have the input he wanted in the decision or the new organizational structure. He subsequently tried to split the firm and take the Linkabit pieces back, going as far as vetting the deal with investment bankers. At the last moment, the M/A-COM board got cold feet and reneged on allowing Linkabit to separate. After finishing the three chips for the consumer version of the VideoCipher II descrambler, Jacobs abruptly “retired” on April 1, 1985. Within a week Viterbi left M/A-COM as well and others quickly followed suit.

“Let’s Do It Again”

Retirement is far from all it’s cracked up to be. For someone who hadn’t wanted to run Linkabit day-to-day, Irwin Jacobs had done a solid job running it. Shortly after his M/A-COM departure, one of his associates asked, “Hey, why don’t we try doing this again?” Jacobs took his family, whom he had promised to spend more time with, on a car tour of Europe to think about it.

On July 1, 1985, six people reconvened in Jacobs’ den – all freshly ex-Linkabit. Besides Jacobs, there were Franklin Antonio, Dee Coffman, Andrew Cohen, Klein Gilhousen, and Harvey White. Tribal legend says there were seven present; Andrew Viterbi was there in spirit, though actually on a cruise in Europe until mid-July, having agreed on a direction with Jacobs before departing. This core team picked the name Qualcomm, shorthand for quality communications, for a new company. They would combine elements of digital communications theory with practical design knowledge into refining code division multiple access, or CDMA.

In his channel capacity theorem, Shannon illustrated spread spectrum techniques could reliably transmit more digital data in a wider bandwidth with lower signal-to-noise ratios. CDMA uses a pseudorandom digital code to spread a given data transmission across the allocated bandwidth.

Different code assignments allow creation of multiple CDMA data channels sharing the same overall bandwidth. To any single channel, its neighbors operating on a different code look like they are speaking another language and do not interfere with the conversation. To outsiders without the codes, the whole thing is difficult to interpret and looks like background noise. This makes CDMA far more secure from eavesdropping or jamming compared to the primitive ideas of frequency hopping postulated by Nikola Tesla and later patented in 1942 by actress and inventor Hedy Lamarr and her composer friend George Antheil.
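A small Python sketch shows how two conversations can share one band and still be separated by code. It is a simplification under stated assumptions: it uses short Walsh codes, which are exactly orthogonal, in place of the long pseudorandom codes described above, and it ignores noise, timing, and RF effects. The function names `walsh`, `spread`, and `despread` are hypothetical.

```python
def walsh(n):
    """Return n mutually orthogonal +1/-1 codes of length n (n a power of two)."""
    codes = [[1]]
    while len(codes) < n:
        codes = ([row + row for row in codes] +
                 [row + [-c for c in row] for row in codes])
    return codes


def spread(bits, code):
    """Map bits to +1/-1 (0 -> +1, 1 -> -1) and multiply by every chip."""
    return [(1 - 2 * b) * chip for b in bits for chip in code]


def despread(signal, code):
    """Correlate each code-length block with the code, then slice to bits."""
    n = len(code)
    bits = []
    for i in range(0, len(signal), n):
        corr = sum(s * c for s, c in zip(signal[i:i + n], code))
        bits.append(0 if corr > 0 else 1)
    return bits


codes = walsh(8)
user_a, user_b = [1, 0, 1], [0, 0, 1]

# Both users transmit at once; the channel simply adds their chips.
channel = [a + b for a, b in zip(spread(user_a, codes[1]),
                                 spread(user_b, codes[2]))]

recovered_a = despread(channel, codes[1])
recovered_b = despread(channel, codes[2])
```

Correlating against the right code makes the wanted signal add up across the chips while the other user's contribution cancels, which is the "another language" effect in miniature.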

Unlike a TDMA system using fixed channels determining exactly how many simultaneous conversations a base station can carry in an allocated bandwidth, CDMA opens up capacity substantially. With sophisticated encoding and decoding techniques – enter Reed-Solomon codes and Viterbi decoding – a CDMA system can handle many more users up to an acceptable limit of bit error probability and cross-channel interference. In fact, CDMA reuses capacity during pauses in conversations, an ideal characteristic for mobile voice traffic.

Coding techniques also gave rise to a solution for multipath in spread spectrum applications. The RAKE receiver, developed by Bob Price and Paul Green of MITLL originally for radar applications, used multiple correlators like fingers in a rake that could synchronize to different versions of a signal and statistically combine the results. RAKE receivers made CDMA practically impervious to noise between channels.
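The finger-and-combine idea can be sketched as follows. This is a toy model, not the Price and Green design: it assumes a random +1/-1 spreading code, a two-path channel whose delays and gains are already known to the receiver, no noise, and maximal-ratio weighting. All names and parameter values are illustrative.

```python
import random


def pn_sequence(n, seed=1):
    """Random +1/-1 chips standing in for a pseudorandom spreading code."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(n)]


def multipath(chips, taps):
    """Sum delayed, scaled copies of the signal; taps maps delay -> gain."""
    out = [0.0] * (len(chips) + max(taps))
    for delay, gain in taps.items():
        for i, chip in enumerate(chips):
            out[i + delay] += gain * chip
    return out


def finger(received, code, delay):
    """One correlator: align the code at the given delay and average."""
    return sum(received[i + delay] * c for i, c in enumerate(code)) / len(code)


def rake(received, code, taps):
    """Weight each finger by its path gain and add the results."""
    return sum(gain * finger(received, code, delay)
               for delay, gain in taps.items())


code = pn_sequence(127)
bit = -1                                  # one data bit, sent as -1
transmitted = [bit * chip for chip in code]
taps = {0: 0.7, 3: 0.5}                   # direct path plus one echo
received = multipath(transmitted, taps)

combined = rake(received, code, taps)
decision = 1 if combined > 0 else -1
```

Each finger locks onto one arrival of the signal, so energy that would otherwise act as self-interference is collected and added to the decision instead.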

USAF SATCOM planners were the first to fall in love with CDMA for all its advantages, but it required intense digital compute resources to keep up with data in real-time. Jacobs and Viterbi realized they had some very valuable technology, proven with the digital signal processing capability of the LMP and the dual modem that had handled CDMA for satellite communications reliably. Could Qualcomm serve commercial needs?

Two things were obvious right from the beginning: cost becomes a much bigger issue in commercial products, and regulators like the FCC enter the picture for non-defense communication networks. So Qualcomm found itself picking up right where they left off at Linkabit – working government communication projects, trying to make solutions smaller and faster.

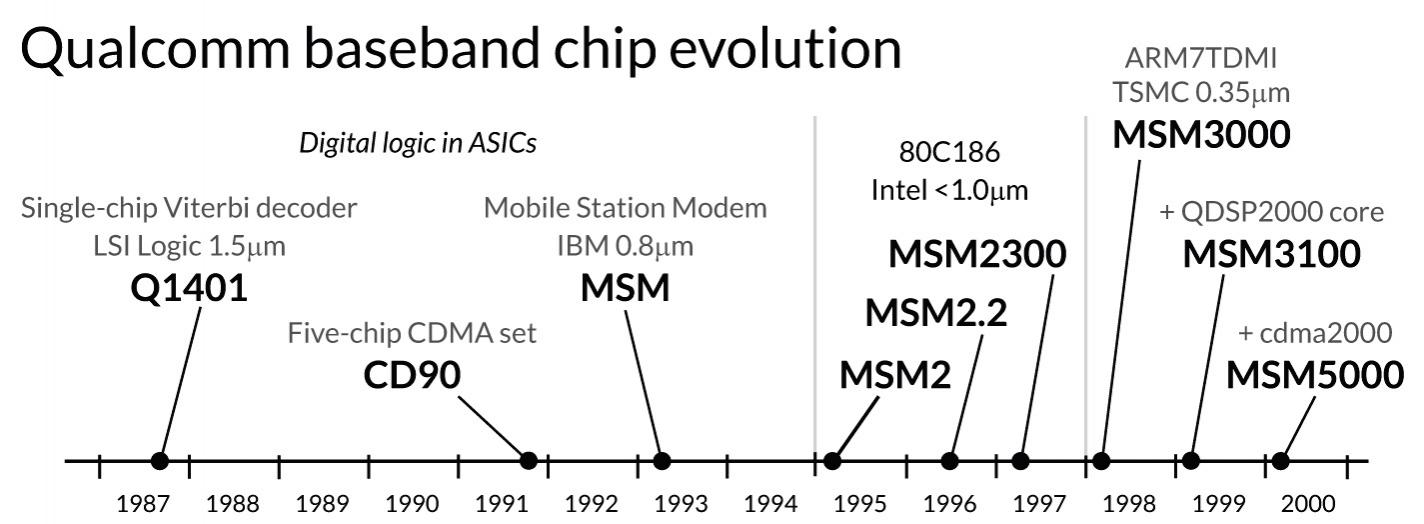

Those government projects spawned a single-chip Viterbi decoder. Finally, CMOS ASIC technology had caught up, ending the need for hundreds of TTL chips and exotic measures like ECL. Qualcomm had its first chip design ready in September 1987: the Q1401, a 17 Mbps, 80 state, K=7, rate ½ decoder. It was fabbed by LSI Logic in 1.5 micron, estimated to be a 169 mm² die in a 155-pin ceramic PGA. It was available in both commercial and military grades, slightly downgraded in speed at the wider military temperatures.

Space Truckin’

Just before Qualcomm opened for business, Viterbi received an interesting phone call. It was Allen Salmasi – who left JPL to start OmniNet in 1984 – asking if his firm could work with Qualcomm on a new location system for trucks.

The FCC allocated frequencies for RDSS (radiodetermination satellite service) in 1984. OmniNet held one RDSS license, competitor Geostar the other. Geostar’s concept had position reporting and messaging from a truck to an L-band satellite, relayed to the trucking company. If OmniNet could deliver RDSS with a link from the trucking company back to the truck, it could be a huge opportunity.

Qualcomm wasn’t too sure. Salmasi gave them $10,000 to study the situation – he had no customers, no venture capital (nobody believed it would work, not even Geostar who refused a partnership offer), and only “family and friends” money. OmniNet had to commercialize to survive, and Qualcomm was the best hope.

L-band satellites were scarce and expensive, partly because they used a processing payload that had to be mission customized. Ku-band satellites used for VSATs and other applications were ample, less expensive, allowed for ground signal processing, and could provide both uplink and downlink capability, but there was a catch. The FCC had licensed Ku-band for fixed terminals, with large ground parabolic dish antennae that had to be pointed within a degree or two. Secondary use permitted mobile if and only if it did not interfere with primary uses. A smaller ground dish antenna, especially one on a moving truck, would have both pointing and aperture issues almost certain to cause interference. Then Klein Gilhousen said, “We’re using CDMA.”

In theory CDMA and spread spectrum would solve any interference issues on the transmit side, and if antennae pointing were accurate enough, the receive side would work. Now the FCC were the ones not so sure. Qualcomm convinced the FCC to grant an experimental license, one that would cover 600 trucks. Jacobs and his teams created a unique directional antenna system that was compact at 10” in diameter and 6” tall, but highly accurate. A Communication Unit measuring 4”x8”x9” did the processing, and a Display Unit had a 40 character by four line readout with a small keyboard and indicators for the driver. By January 1988, the system began limited operational testing on a cross-country drive.

Still without a customer, Salmasi was out of capital – so Qualcomm bought him, his company, and the entire system, launching it as OmniTRACS in August 1988. With zero reports of interference, the FCC granted broader operating authority for the system. By October, Qualcomm had their first major customer in Schneider with about 10,000 trucks. OmniTRACS was on its way, with some 1.5 million trucks using the system today. This first important win provided income for Qualcomm to contemplate the next big market for CDMA.

Just Keep Talking

Gilhousen bent both Jacobs’ and Viterbi’s ears with the suggestion that Qualcomm go into cellular phones with CDMA. Viterbi found the idea familiar, having presented it in a 1982 paper on spread spectrum. Moving from defense satellite networks for several hundred B-52s and EC-135s to private satellite networks with 10,000 trucks and more had been straightforward, but a public cellular network presented a well-known problem.

While CDMA signals reduced interference between digital channels, there were still RF characteristics to consider with many transmitters talking to one terrestrial base station simultaneously. For satellite communication systems, every terminal on the Earth is relatively far away and should have roughly the same signal strength under normal operating conditions.

In a cellular grid with low power handsets, distance matters and the near-far problem becomes significant. Near-far relates to the dynamic range of a base station receiver. If all handsets transmit at the same power, the closest one captures the receiver and swamps handsets transmitting farther away from the cell tower, making them inaudible in the noise.

Viterbi, Jacobs, Gilhousen, and Butch Weaver set off to figure out the details. While they ran CDMA simulations, the Telecommunications Industry Association (TIA) met in January 1989 and chose TDMA in D-AMPS as the 2G standard for the US. D-AMPS was evolutionary to AMPS, and some say there was a nationalistic agenda to adopt an alternative to the European-dominated GSM despite its head start. FDMA was seen as a lower-risk approach (favored by Motorola, AT&T, and others), but TDMA had already shown its technical superiority in GSM evaluations.

Few in the industry took CDMA seriously. The Cellular Telecommunication Industry Association (CTIA) pushed for user performance recommendations in a 2G standard with at least 10 times the capacity of AMPS, but also wanted a smooth transition path. D-AMPS did not meet the UPR capacity goals, but was regarded as the fastest path to 2G.

Capacity concerns gave Qualcomm its opening. Jacobs reached out to the CTIA to present the CDMA findings and after an initial rebuff got an audience at a membership meeting in Chicago in June 1989. He waited for the assembled experts to shoot his presentation full of holes. It didn’t happen.

One reason their presentation went so well was that they had been test driving it with PacTel Cellular since February 1989. After the TIA vote, Jacobs and Viterbi started asking for meetings with regional carriers. “All of a sudden, one day, Irwin Jacobs and Andy Viterbi showed up in my office. Honestly, I don’t even know how they got there,” said PacTel Cellular CEO Jeff Hultman.

However, William C. Y. Lee, PacTel Cellular chief scientist, knew why they had come. PacTel Cellular was experiencing rapid subscriber growth in its Los Angeles market, and was about to experience a capacity shortfall. Lee had been studying digital spread spectrum efficiency and capacity issues for years, comparing FDMA and TDMA.

What he saw with CDMA – with perhaps 20 times improvement over analog systems – and the risks in developing TDMA were enough to justify a $1M bet on research funding for Qualcomm. Lee, like many others, needed to see a working solution for the near-far problem and other issues.

Just under six months later on November 7, 1989, Qualcomm had a prototype system. A CDMA “phone” – actually 30 pounds of equipment – was stuffed in the back of a van ready to drive around San Diego. There were two “base stations” set up so call handoff could be demonstrated.

Image 9-2: Qualcomm team including Andrew Viterbi (left), Irwin Jacobs (center), Butch Weaver, and Klein Gilhousen (right) with CDMA van, circa 1989

Photo credit: Qualcomm

Before a gathering of cellular industry executives, at least 150 and by some reports as many as 300, William C. Y. Lee made a presentation, Jacobs made his presentation, and Gilhousen described what visitors were about to see. Just before dismissing the group for the demonstration, Jacobs noticed Butch Weaver waving frantically. A GPS glitch took out base station synchronization. Jacobs improvised, and kept talking about CDMA for some 45 minutes until Weaver and the team got the system working.

Many attendees at the demonstration were thrilled at what they had seen. The critics said CDMA would never work, that the theory would not hold up under full-scale deployment and real-world conditions, and it “violated the laws of physics” according to one pundit. Additionally, there was the small problem of getting it to fit in a handset – a problem Qualcomm was prepared to deal with. Beyond a need for miniaturization and the basics of direct sequence spread spectrum and channelization, Qualcomm was developing solutions to three major CDMA issues.

First was the near-far problem. Dynamic power control changes power levels to maintain adequate signal-to-noise. CDMA handsets closer to base stations typically use less transmit power, and ones farther away use more. The result is all signals arrive at the base station at about the same signal-to-noise ratio. Lower transmit power also lowered interference and saved handset battery power. Qualcomm used aggressive open loop and closed loop power control making adjustments at 800 times per second (later increased to 1500), compared to just a handful of times per second in GSM.
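The closed loop half of that scheme can be illustrated with a short Python sketch. It is a simplified model under assumptions that do not come from the text: the base station compares each handset's received power against a target and sends a one-bit up or down command, the step is 1 dB, the target is -100 dBm, and path loss stays fixed. The function name and all default values are hypothetical.

```python
def closed_loop_power_control(path_loss_db, target_rx_dbm=-100.0,
                              start_tx_dbm=0.0, step_db=1.0, commands=800):
    """Return each handset's transmit power after a series of up/down commands.

    commands=800 corresponds to one second of adjustments at the
    800-per-second rate mentioned in the text.
    """
    tx = [start_tx_dbm] * len(path_loss_db)
    for _ in range(commands):
        for i, loss in enumerate(path_loss_db):
            received = tx[i] - loss
            # Too weak at the base station: step up. Otherwise step down.
            tx[i] += step_db if received < target_rx_dbm else -step_db
    return tx


# Three handsets: near, mid-range, and far from the base station.
losses = [80.0, 100.0, 120.0]
tx_power = closed_loop_power_control(losses)
rx_power = [t - loss for t, loss in zip(tx_power, losses)]
```

All three handsets start at the same transmit power, yet each ends up hovering within one step of the target at the receiver, with the nearest one transmitting least. That is the behavior that keeps a nearby phone from drowning out a distant one.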

Second was soft handoffs. In a TDMA system, dropped calls often happened when users transitioned from one base station to another due to a hard handoff. CDMA cells establish a connection at the next base station while still connected to the current one.

Third was a variable rate vocoder. Instead of on-off encoding used in GSM, a variable rate encoder adapts rapidly as speech naturally pauses and resumes, reducing the number of bits transmitted by handsets and effectively increasing overall capacity at the base station. This feature was not present in TDMA, since channels are fixed and unsharable.

Get In and Hang On

If CDMA could be productized, Hultman had promised PacTel Cellular’s support but other deals would be needed to reach critical mass. PacTel helped introduce Qualcomm to higher-level executives at the other Baby Bells and the major cellular infrastructure vendors, looking for markets where CDMA would be welcome. Qualcomm leadership also made a fateful decision on their business model: instead of building all the equipment themselves, they would license their CDMA intellectual property to manufacturers.

Another cellular market with looming capacity headaches was New York, home to NYNEX. Qualcomm carted its CDMA prototypes to Manhattan for field trials during February 1990. NYNEX already had AT&T looking at next-generation infrastructure specifics, and by early July, AT&T and Qualcomm had a license agreement for CDMA base station technology. On July 31, 1990, Qualcomm published the first version of the CDMA specifications for industry comments – the Common Air Interface. On August 2, NYNEX announced it would spend $100M to build “a second cellular telephone network” in Manhattan by the end of 1991, mostly to provide time for frequency allocation and base station construction. $3M would go to Qualcomm to produce CDMA phones.

Others held back. The two largest cellular infrastructure vendors, Ericsson and Motorola, had plans for TDMA networks. Motorola hedged its bet in a September 1990 CDMA infrastructure cross licensing agreement with Qualcomm, but publicly expressed technical concerns. Carriers like McCaw Cellular (the forerunner of AT&T Wireless) and Ameritech were trying to postpone any major commitments to CDMA. Internationally, Europe was all in on GSM based on TDMA, and Japan was developing its own TDMA-based cellular network.

In the uncommitted column was Korea, without a digital solution. Salmasi leveraged introductions from PacTel’s Lee in August 1990 into rounds of discussion culminating in the May 1991 ETRI CDMA joint development agreement (see Chapter 8 for more). Although a major funding commitment with a lucrative future royalty stream, the program would take five years to unfold.

Even with these wins, Qualcomm was hanging on the financial edge. Every dollar of income was plowed back into more employees – numbering about 600 at the close of 1991 – and CDMA R&D.

PacTel continued with its CDMA plans, leading to the CAP I capacity trial in November 1991 – using commercial-ready Qualcomm CDMA chipsets. Five ASICs were designed in a two-year program. Three were for a CDMA phone: a modulator, a demodulator, and an enhanced Viterbi decoder. Two more were created for a base station, also used with the Viterbi decoder. These chipsets interfaced with an external microprocessor. The trials proved CDMA technology was viable on a larger scale, and could produce the capacity gains projected.

Image 9-3: Qualcomm CDMA chipset circa 1991

Photo credit: Qualcomm

On the heels of disclosing the CAP I trial success and the ASICs at a CTIA technology forum, Qualcomm proceeded with its initial public offering of 4 million shares, raising $68M in December 1991. PacTel bought a block of shares on the open market, and kicked in an additional $2.2M to buy warrants for 390,000 more shares, assuring CDMA R&D would continue uninterrupted.

Along with the Korean ETRI joint development deal, four manufacturers were onboard with Qualcomm and CDMA entering 1992: AT&T, Motorola, Oki, and Nortel Networks. Licensee number five in April 1992 was none other than Nokia, the climax of a year and a half of negotiations directly between Jacobs and Jorma Ollila. Nokia had been observing the PacTel trials with keen interest, and had set up their own R&D center in San Diego to be close to the action with CDMA. One of the sticking points was the royalty: Nokia is thought to have paid around 3% of handset ASPs under its first 15-year agreement.

On March 2, 1993, Qualcomm introduced the CD-7000, a dual-mode CDMA/AMPS handheld phone powered by a single chip baseband: the Mobile Station Modem (MSM). The phone was a typical candy bar, 178x57x25mm weighing a bit over 340g. The first customer was US West, with a commitment for at least 36,000 phones. Also in March 1993, plans for CDMA phones and infrastructure in Korea were announced with four manufacturers: Goldstar, Hyundai, Maxon, and Samsung.

Qualcomm provided details of the new MSM baseband chip at Hot Chips in August 1993. The three basic CDMA functions of modulator, demodulator, and Viterbi decoder were on a single 0.8 micron, 114 mm² chip. It had 450,000 transistors and consumed 300mW, still requiring an external processor and RF circuitry to complete a handset. Qualcomm indicated a multi-foundry strategy, but didn’t disclose suppliers – later reports named IBM as the source.

The TIA finally relented, endorsing CDMA with first publication of the IS-95 specification in July 1993, known commercially as cdmaOne. Cellular markets now had their choice of 2G digital standards in CDMA, D-AMPS, and GSM.

Roadblock at Six Million

Inside the CD-7000 phone with the MSM chip was an Intel 80C186 processor. The logical next step was to integrate the two, except … Intel was not in the intellectual property business. Intel denied Qualcomm’s advances at first. Under persistent nagging from its San Diego sales office, Intel’s embedded operation in Chandler, AZ learned all about Qualcomm, CDMA technology, and market opportunities before agreeing to provide an 80C186 core.

Converting the 80C186 design from Intel-ese to a more industry standard design flow proved difficult. Qualcomm had designed the MSM with high-level hardware description language (HDL) techniques that could be resynthesized on different libraries quickly, along with a simulation database and test vectors. It quickly became obvious that it was easier to move the Qualcomm MSM IP into the Intel design flow, and have Intel fab the entire chip. Qualcomm agreed. Intel was about to enter both the mobile business and the foundry business.

On February 1, 1995, Qualcomm announced the Q5257 MSM2 with its Q186 core in a 176-pin QFP, along with the Q5312 integrated Analog Baseband Processor (BBA2) replacing 17 discrete chips in an 80-pin QFP. Those two chips formed most of a CDMA phone – such as the QCP-800 announced the next day. Gearing up for larger volumes, Qualcomm formed a joint venture with Sony to produce the new dual-mode phone that more than doubled battery life to a five-hour talk time. Also announced was the single chip Q5160 Cell Site Modem (CSM) for CDMA base stations, without an integrated processor.

The Q5270 MSM2.2 was introduced in June 1996. Its major enhancement was the 13 Kbps PureVoice vocoder using QCELP, providing higher voice quality without sacrificing power consumption. It was offered in both a 176-pin QFP for production use and a larger 208-pin QFP for enhanced in-circuit debugging.

Reducing power was the objective of the MSM2300, announced in March 1997. Its searcher engine, using a hardwired DSP, ran up to eight times faster than the MSM2.2, reducing the amount of time the microprocessor spent acquiring signals. Its 176-pin QFP was pin compatible, allowing direct upgrades.

Chipset volumes exploded as CDMA deployed around the world. Qualcomm claimed combined shipments of MSM variants – mostly the MSM2 and MSM2.2 fabbed by Intel – reached six million units in June 1997. Intel had also driven its lower power 386EX embedded processors into handheld designs at Nokia and Research in Motion. What could possibly go wrong?

That was probably what Qualcomm asked when Intel balked on providing a roadmap update for an embedded core. In fairness, the degree of difficulty in fabbing a 386EX was substantially higher, and there was more Qualcomm IP to put next to it. Intel probably saw risk in both design and yield that even six million units did not justify.

Qualcomm forced the issue with an RFQ and got a rather perfunctory response without major improvements in CPU performance. (Intel was likely in the midst of settling Alpha litigation with DEC when this request arrived. Had the Qualcomm need for a new core been just a bit later, and if Intel had figured out an IP or foundry business model for StrongARM, the outcome for Intel in mobile may have been very different.) While existing parts still shipped, the Intel phase for next generation parts at Qualcomm was over.

Detour for Better Cores

It was a short search for a higher performance CPU core. Many Qualcomm CDMA licensees, particularly LSI Logic, Lucent Technologies (spun out from AT&T), Samsung, and VLSI Technology, were ARM proponents. Officially, Qualcomm announced its first ARM license in July 1998.

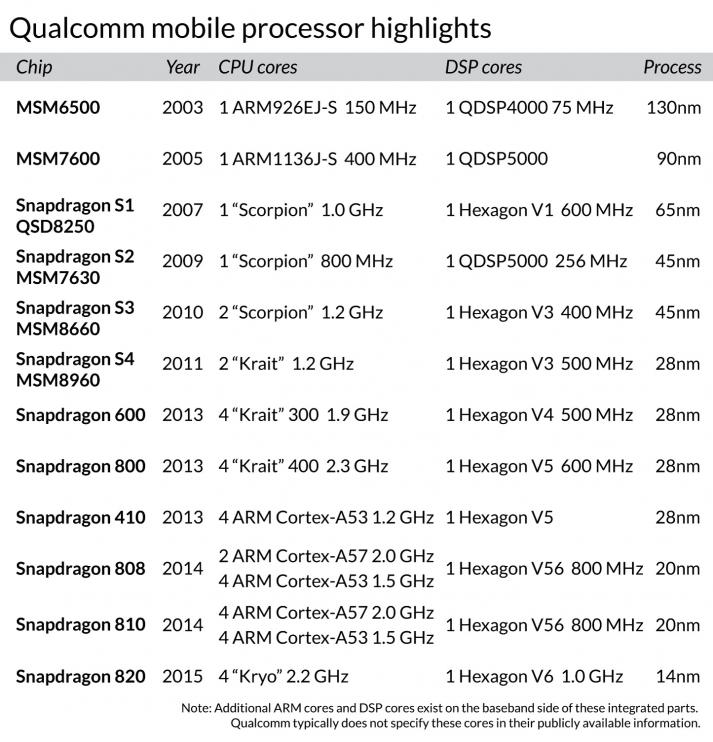

Chipset launches accelerated – quickly making Qualcomm one of the more prolific suppliers of ARM-based chips, used extensively in thousands of mobile devices. What follows is primarily a chip discussion with only highlights of key releases.

Already in progress when the ARM deal became public, the MSM3000 was announced in February 1998 with a core change to ARM7TDMI. It had other enhancements including the SuperFinger demodulator for faster data transfers up to 64 Kbps, and enhanced sleep modes. It was fabricated in a 0.35 micron process. For the first time, Qualcomm parts were coming from TSMC. To avoid confusion since all-new software was required, the same 176-pin QFP had a vastly different pinout.

Another core was also being productized. DSP was a feature of the product line for some time, and in February 1999 the MSM3100 was introduced with both an ARM7TDMI core and a homegrown, programmable QDSP2000 core. The 40-bit execution unit in the QDSP2000 featured a five-deep pipeline with optimized instructions for EVRC and other needs such as echo cancellation.

3G technology made its debut in the MSM5000 supporting the updated cdma2000 specification. Announced in May 1999, and still running on the ARM7TDMI core, the new chip achieved data rates of 153.6 Kbps and further improved searching capabilities. The MSM5000 supported the field trials of cdma2000 the following year and its High Data Rate (HDR) technology would evolve into 1xEV-DO.

A flirtation with Palm and the pdQ CDMA phone in September 1998 led to investigation into smartphone operating systems. In September 1999, Qualcomm tipped plans for iMSM chips targeting Microsoft Windows CE and Symbian, including the dual core iMSM4100 with two ARM720T processors, one for baseband and one for the operating system. With StrongARM and other solutions appearing, the iMSM4100 was ahead in integration but behind in performance when it launched. Qualcomm knew its baseband business, but had much more to learn about application processors.

Image 9-4

By mid-2000, three different families of chips were in development: 2G cdmaOne baseband, 3G cdma2000 baseband, and notional application processors such as the MSP1000 (essentially an iMSM with just one ARM720T processor). With numerous OEMs producing CDMA phones, Qualcomm exited the handset business, selling to Kyocera in February 2000. After years of visionary thinking, Andrew Viterbi announced his retirement in March. In May, Qualcomm announced its cumulative MSM chipset shipments surpassed 100 million.

In February 2001, Qualcomm laid out an ambitious plan. The roadmap for the MSM6xxx family offered a wide range of products, starting at the entry-level MSM6000 on the ARM7TDMI supporting only 3G cdma2000. The Launchpad application suite, on top of the new BREW API, helped OEMs produce software more efficiently. Also added were radioOne for more efficient Zero Intermediate Frequency conversion, and gpsOne for enhanced location services.

At the high end would be the MSM6500 on the ARM926EJ-S with two QDSP4000 cores, supporting 3G cdma2000 1xEV-DO and GSM/GPRS plus AMPS, all on one chip. The MSM6500 finally sampled nearly two years later, fabbed in 0.13 micron, packaged in a 409-pin CSP.

2003 marked the start of leadership change. In January, Don Schrock announced plans to retire as president of Qualcomm CDMA Technologies (QCT), succeeded by Sanjay Jha who had led MSM development teams.

The MSM7xxx family was next on the roadmap, previewed in May 2003 with a similar broad plan for variants from entry-level to high-end. The 90nm version of the MSM7600 would carry an ARM1136J-S at 400 MHz and a QDSP5000 for applications, plus a 274 MHz ARM926EJ-S and a QDSP4000 for multimode baseband. Also on chip was the Q3Dimension GPU from a licensing agreement for IMAGEON with ATI. The MSM7600A would shrink to 65nm in 2006 with a 528 MHz clock. While still under the MSM banner, the MSM7600 and its stable mates indicated the direction for future Qualcomm application processors.

In September 2003, Qualcomm reached the 1 billion-chip milestone for MSM shipments – nine years after its commercial introduction.

“Scorpion”, “Hexagon”, and Gobi

“[Qualcomm has] always been in the semiconductor business,” opened Klein Gilhousen in his presentation at Telecosm 2004. “We have always recognized that the key to feasibility of CDMA was VERY aggressive custom chip design.” Qualcomm’s next moves would test how aggressive they could get.

Irwin Jacobs stepped down as CEO of Qualcomm on July 1, 2005 – the 20th anniversary of its founding – moving into the role of Chairman. His successor was his son, Paul Jacobs, who had been behind development of speech compression algorithms and the launch of the pdQ smartphone as well as BREW and other projects. Steven Altman, who had overseen Qualcomm Technology Licensing, took over as president for the retiring Tony Thornley. Overall, the strategy remained unchanged.

Image 9-5: Paul Jacobs and Irwin Jacobs, circa 2009

Photo credit: Qualcomm

Many ARM licensees expressed immediate support for the new ARM Cortex-A8 core when it launched in October 2005. Rather than go off-the-shelf, Sanjay Jha took out the first ARMv7 architectural license granted by ARM and unveiled a roadmap for the “Scorpion” processor core in November 2005. Media headlines blaring that it was the first 1 GHz ARM core were slightly overstated; Samsung pushed the ARM10 “Halla” design to 1.2 GHz three years earlier. Nonetheless, Qualcomm pushed Scorpion beyond the competition such as the TI OMAP 3 using a tuned Cortex-A8, and beat Intrinsity’s “Hummingbird” core design to market by a bit more than two years.

Their advantage stemmed from the little-known August 2003 acquisition of Xcella, a North Carolina firm started by ex-IBMers including Ron Tessitore and Tom Collopy, who brought a wealth of processor design experience.

Scorpion used a 13-stage load/store pipeline similar to that of the Cortex-A8, but added two integer processing pipelines, one of 10 stages for simple arithmetic, and one of 12 stages for multiply/accumulate. SIMD operations in its VeNum multimedia engine were deeply pipelined and data width doubled to 128 bits. “Clock-do-Mania” clock gating, an enhanced completion buffer, and other power consumption tweaks for TSMC’s 65nm LP process yielded about 40 percent less power compared to the Cortex-A8.

DSP capability received an enhancement as well. The “Hexagon” DSP core, also referred to as QDSP6, was also moving to 65nm. Started in the fall of 2004, Hexagon applied three techniques to deliver performance at low power: Very Long Instruction Word (VLIW), multithreading to reduce L2 cache miss penalties, and a new instruction set to maximize work per packet. Dual 64-bit vector execution units handled up to eight simultaneous 16-bit multiply-accumulate operations in a single cycle. Three threads could launch four instructions every cycle, two on the dual vector execution units, and two on dual load/store units.
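The figure of eight simultaneous 16-bit multiply-accumulates follows from the widths quoted above: a 64-bit vector holds four 16-bit lanes, and there are two vector units. The Python sketch below only illustrates that arithmetic. The lane packing order, the function names, and the single shared accumulator are assumptions made for the example, not a description of the actual Hexagon instruction set.

```python
LANE_BITS = 16
LANES_PER_UNIT = 64 // LANE_BITS  # four 16-bit lanes fit in one 64-bit vector
VECTOR_UNITS = 2                  # the "dual" vector execution units in the text


def pack_lanes(values):
    """Pack four signed 16-bit values into one 64-bit word (lane 0 = low bits)."""
    word = 0
    for i, v in enumerate(values):
        word |= (v & 0xFFFF) << (i * LANE_BITS)
    return word


def unpack_lanes(word64):
    """Split a 64-bit word back into four signed 16-bit lanes."""
    lanes = []
    for i in range(LANES_PER_UNIT):
        raw = (word64 >> (i * LANE_BITS)) & 0xFFFF
        # Reinterpret the 16-bit pattern as a two's-complement signed value.
        lanes.append(raw - 0x10000 if raw & 0x8000 else raw)
    return lanes


def mac_cycle(acc, a_words, b_words):
    """Model one cycle: every lane of every unit does one multiply-accumulate.

    Returns the updated accumulator and the number of MACs performed.
    The accumulator is an unbounded Python int (an assumption; real
    hardware would use fixed-width accumulators).
    """
    macs = 0
    for a, b in zip(a_words, b_words):
        for x, y in zip(unpack_lanes(a), unpack_lanes(b)):
            acc += x * y
            macs += 1
    return acc, macs


# One operand pair per vector unit.
a = [pack_lanes([1, 2, 3, 4]), pack_lanes([5, 6, 7, 8])]
b = [pack_lanes([1, 1, 1, 1]), pack_lanes([-1, -1, -1, -1])]
total, macs = mac_cycle(0, a, b)
print(total, macs)
```

With one operand pair supplied to each of the two units, the modeled cycle performs 2 × 4 = 8 multiply-accumulates, matching the count in the text.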

Both new cores were bound for the new application processor brand: Snapdragon. On November 14, 2007, Qualcomm showed off the new QSD8250 supporting HSPA and the dual mode QSD8650 with CDMA2000 1xEV-DO and HSPA. Each carried a 1 GHz Scorpion processor core and a 600 MHz Hexagon V1 DSP core on the application side. Also on chip was the Adreno 200 GPU (renamed after Qualcomm purchased the ATI mobile graphics assets from AMD in 2009) running at 133 MHz. The multimode baseband core combination of the ARM926EJ-S with a QDSP4000 continued.

Qualcomm was benefitting from the “netbook” craze, and was finding itself more and more in competition with Intel and its Atom processor. WiMAX was Intel’s anointed standard for broadband access from laptops, but required an all-new infrastructure rollout. Taking the opening, Qualcomm introduced the first Gobi chipset in October 2007, with the 65nm MDM1000 for connecting netbooks and similar non-phone devices to the Internet using EV-DO or HSPA over existing 3G cellular networks.

Selling into PC and netbook opportunities made Gobi an immediate hit, where Snapdragon was on a slower build-up. Resources poured into Gobi. The roadmap for the MDM9x00 family announced in February 2008 bumped parts to 45nm and enhanced the modem, later found to be ARM Cortex-A5 based, for LTE support. After Sanjay Jha departed for Motorola in August 2008, Qualcomm promoted Steve Mollenkopf to head of QCT to keep the overall strategy rolling forward.

A big change was about to occur in mobile operating systems that would help Snapdragon. In September 2008, the T-Mobile G1, built by HTC, was the first phone released running Android – on a Qualcomm MSM7201A chip. LG and Samsung were both working on Android phones with Qualcomm chips for 2009 release, and Sony Ericsson was not far behind.

Snapdragon moved forward with a second generation introduced on 45nm in November 2009. The MSM7x30 parts aimed to reduce cost and power, falling back to an 800 MHz Scorpion core with a QDSP5000 at 256 MHz and a shrunk Adreno 205 GPU. Preparing for dual core, the 45nm version of Scorpion received debug features borrowed from the ARM Cortex-A9 and enhancements in L2 cache. In June 2010, the third-generation Snapdragon MSM8260 and MSM8660 featured two Scorpions running at 1.2 GHz paired with the Hexagon V3 at 400 MHz, plus a higher performance Adreno 220 GPU. Packages were getting larger; the MSM8x60 came in a 976-pin, 14x14mm nanoscale package (NSP).

“Krait”, Tiers, and an A/B Strategy

The Qualcomm modus operandi for major introductions is generally to tip the news in a roadmap preview early, followed by product one, two, sometimes three years later. When Mobile World Congress (MWC) convened in February 2011, Qualcomm had two major moves up its sleeve for announcement.

In its first move, Gobi was headed for 28nm in the form of the MDM9x25. The enhancement added support for Category 4 downlink speeds up to 150 Mbps on both LTE FDD and LTE TDD, and support for HSPA+ Release 9. These third-generation parts sampled at the end of 2012.

Its second move had been previewed in part, twice. Two MWCs earlier, Qualcomm had mentioned the MSM8960, a new Snapdragon variant planned for multimode operation including LTE. At an analyst briefing in November 2010, the part was identified as moving to 28nm with a next-generation processor core on a new microarchitecture, along with a faster Adreno GPU. At MWC 2011, the first ARM processor core to be on 28nm had a name: “Krait”.

Krait was announced as the core inside three different chips. At the low end was the dual-core 1.2 GHz Krait MSM8930, with the Adreno 305 GPU. Mid-range was the MSM8960, a dual-core 1.5 GHz Krait with the faster Adreno 225 GPU. High end was the APQ8064 with a quad-core 1.5 GHz Krait with the Adreno 320 GPU.

With cores independent in voltage and frequency, Krait allowed significant power savings of 25-40% compared to on-off SMP approaches such as big.LITTLE with the ARM Cortex-A15, depending on workload. Performance gains came in part from 3-wide instruction decode compared to 2-wide in Scorpion, along with out-of-order execution, 7 execution ports compared to 3, and doubled L2 cache to 1 MB. This raised Krait to 3.3 DMIPS/MHz.
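To put the 3.3 DMIPS/MHz figure in context, a back-of-the-envelope calculation multiplies it by clock speed and core count for the three chips announced at MWC 2011. The Python sketch below uses only the numbers quoted in the text. The helper name `peak_dmips` is made up for the example, and linear scaling across cores is an idealized assumption that real workloads rarely reach.

```python
KRAIT_DMIPS_PER_MHZ = 3.3  # per-core figure quoted in the text

# (chip, cores, clock in MHz) as announced at MWC 2011, per the text
KRAIT_CHIPS = [
    ("MSM8930", 2, 1200),
    ("MSM8960", 2, 1500),
    ("APQ8064", 4, 1500),
]


def peak_dmips(cores, clock_mhz, dmips_per_mhz=KRAIT_DMIPS_PER_MHZ):
    """Idealized peak: assumes every core runs at full clock and scales linearly."""
    return cores * clock_mhz * dmips_per_mhz


for chip, cores, clock_mhz in KRAIT_CHIPS:
    per_core = peak_dmips(1, clock_mhz)
    total = peak_dmips(cores, clock_mhz)
    print(f"{chip}: {per_core:,.0f} DMIPS per core, {total:,.0f} DMIPS peak")
```

Because each Krait core sets its voltage and frequency independently, sustained throughput depends on how many cores are actually clocked up at any moment, so these totals are upper bounds rather than measurements.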

Attempting to sort out the pile they had created, Qualcomm set up tiered branding for Snapdragon parts at its analyst meeting in November 2011. The new Krait based parts in 28nm were Snapdragon S4, separated into S4 Play, S4 Plus, and S4 Pro. The 65nm Scorpion parts were labeled Snapdragon S1, the 45nm single-core Scorpion parts Snapdragon S2, and the 45nm dual-core parts Snapdragon S3.

Sometimes, marketers can get a little too clever; tiering is good, obscure nomenclature that doesn’t translate well outside of English, not so much. The second try at CES 2013 set up today’s Snapdragon number-driven branding.

The flagship Snapdragon 800 for high end phones was announced with quad-core Krait 400 CPUs at 2.3 GHz plus the Hexagon V5 at 600 MHz and an Adreno 330 at 450 MHz, and the LTE “world mode” modem. The Snapdragon 600 had quad Krait 300 CPUs at 1.9 GHz with a Hexagon V4 at 500 MHz and an Adreno 320 GPU at 400 MHz, omitting the modem for cost reasons.

Subsequent launches since CES 2013 fit into the Snapdragon 200 entry level tier for phones, Snapdragon 400 volume tier for phones and tablets, Snapdragon 600 mid-tier, and Snapdragon 800 high-end devices. The Snapdragon 200 lines adopted the ARM Cortex-A7 core to keep costs low.

There was more not-so-clever marketing. Shortly after the unexpected launch of the Apple A7 chip with 64-bit support in September 2013, Qualcomm’s chief marketer Anand Chandrasekher sounded a dismissive tone questioning its actual value to users. After further review (and probably some testy phone calls from ARM), Chandrasekher was placed in the executive penalty box and his statements officially characterized as “inaccurate” a week later.

Crisis was averted but unanswered. At its November 2013 analyst meeting Qualcomm showed the fourth-generation Gobi roadmap moving to 20nm with the 9×35, supporting LTE Category 6 and carrier aggregation. The hasty December 2013 introduction of a quad ARM Cortex-A53 core in the Snapdragon 410 got Qualcomm back in the 64-bit application processor arena.

It might have been coincidental timing, but a few days after the Snapdragon 410 announcement, a major management turnover occurred. Paul Jacobs announced he would step aside as Qualcomm CEO, remaining Chairman, and Steve Mollenkopf was promoted to CEO-elect on December 12, 2013 pending stockholder approval the following March.

Photo credit: Qualcomm

April 2014 saw the preview of the Snapdragon 810 on TSMC 20nm. Its octa-core big.LITTLE setup featured four ARM Cortex-A57 cores at 2 GHz with four Cortex-A53 cores at 1.5 GHz. Also inside was a retuned Hexagon V5 and its dynamic multi-threading at 800 MHz, an Adreno 430 GPU at 600 MHz, and new support for LPDDR4 memory. It also packed a Cat 9 LTE modem, full support for 4K Ultra HD video, and two Image Signal Processors for computational photography. Its sibling, the Snapdragon 808, used two ARM Cortex-A57 cores instead of four, downsized the GPU to an Adreno 418, and supported only LPDDR3.

Fifth-generation Gobi 20nm parts were the main subject of analyst day in November 2014, with LTE Advanced Category 10 support in the Gobi 9×45. These offered download speeds of 450 Mbps using LTE carrier aggregation.

What appears to have developed on the Qualcomm roadmap is an A/B strategy – take ARM’s IP where it exists for expedience, follow it with internally developed cores, and repeat the cycle. This is the only feasible way to compete on a broad swath of four tiers from entry-level to super-high-end. The Snapdragon 200 series is facing a flood of ARM Cortex-A5 based parts from Taiwan and China, while the Snapdragon 800 series and Gobi are up against the behemoths including Apple, Intel, Samsung, and many others.

What Comes After Phones?

The relentless pursuit of better chip designs at Qualcomm took CDMA and Android to astounding levels of success. In a maturing cell phone market settling in with around 11% growth and some 80% market share for Android, Qualcomm faces new challenges unlike any seen before. Instead of celebrating its 30-year anniversary, Qualcomm announced a 15 percent workforce reduction in July 2015. Pundits hung that sad news on the notion that the 64-bit wave started by Apple caught Qualcomm off guard, followed by the Snapdragon 810 overheating scandal at LG and Samsung.

Qualcomm VP of marketing Tim McDonough has his version of the Snapdragon 810 overheating story, saying phone decisions get made 18 months before the public sees them happen – and as we have seen, major chip roadmap decisions get made 18 months before that. The latter is under Qualcomm control. The former may be getting shorter than Qualcomm would like. There are hints in source code that LG may have executed a switch from the Snapdragon 810 to the scaled down Snapdragon 808 – with the same LTE broadband implementation – just a few months before the LG G4 production release. McDonough contended the issues seen were with pre-release Snapdragon 810 silicon (since updated, and reports of overheating are gone), and that vendors adopting the Snapdragon 808 did so because they didn’t need the full end-to-end 4K video experience. The long pole in the tent is carrier qualification of the LTE modem, and it was well along in the process. This would make a switchover – if it did indeed happen at LG – seem quick and less painful. Samsung may have had its own ax to grind in pointing out the problem, with its flagship Exynos 8 Octa launch pending at the time.

Image 9-7

Perhaps recent events are leading to more caution in disclosing roadmaps for public consumption. At MWC 2015 in March, the big reveal was the Snapdragon 820 with “Kryo”, Qualcomm’s new 64-bit ARMv8-A CPU core design. Details are trickling out with a quad-core clock speed of 2.2 GHz (with rumors of even faster speeds) and a new fab partner in Samsung with their 14nm FinFET process. In August, plans for the Adreno 530 GPU and a new Spectra ISP bound for the Snapdragon 820 were shown and a new Hexagon 680 DSP is also in the works.

Qualcomm used its media day on November 10, 2015 to reiterate the Snapdragon 820 consumes 30 percent less power than the Snapdragon 810. They also explored system-level support, with Cat 12 LTE, 802.11ad Wi-Fi, and malware-fighting machine learning technology. Their marketing is moving away from pure IP specsmanship and toward use cases for features, a welcome change.

Kryo creates a possible entry point into the nascent 64-bit ARM server market. Competing with Intel and AMD on their turf could be an adventure. Qualcomm is also chasing the IoT, with technology from acquisitions of Atheros and CSR, plus software development in AllJoyn. How Qualcomm shifts its business model based on licensing sophisticated communication algorithms to what comes next will determine if they remain a leader in fabless semiconductors. Can they develop intellectual property supporting a new application segment, such as drones? (More ahead in Chapter 10.) Is there more work to be done in the 4G LTE cellular arena and will 5G technology coalesce sooner rather than later?

The clamoring from investors to split the firm into an IP licensing business and a chip business seems poorly conceived. While the IP licensing side of the business has a built-in legacy revenue stream from CDMA, the chip operation has thrived from lockstep operation as specifications advanced. Without that synergy, what drives the chip business and prevents it from commoditization?

As long as mobile devices connect wirelessly, Qualcomm should be there. Hard strategy questions lie ahead near term, and that could have a significant ripple effect on foundry strategy and competition in application segments depending on how they proceed.
