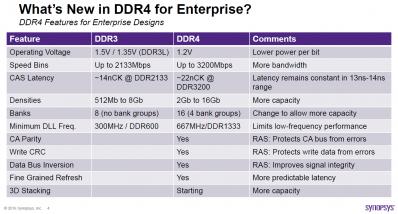

The old one-size-fits-all approach no longer works for DDR4 memory controller IP, especially when addressing enterprise segments and applications such as servers, storage and networking. For mobile or high-end consumer segments, we can easily identify two key factors: price (memory amount or controller footprint) and power consumption. The enterprise-specific requirements are just as clearly defined: the DDR4 memory sub-system has to support very large capacity, provide the highest possible bandwidth with low latency, and comply with stringent Reliability, Availability and Serviceability (RAS) requirements.

Server and storage applications are designed to compute and store large amounts of data. Using DRAM instead of SSD or HDD to build a new generation of servers has been shown to deliver 10x to 100x performance improvements (Apache SPARK, IBM DB2 with BLU, Microsoft In-Memory option, etc.), mostly thanks to the better latency and bandwidth offered by DRAM. To build these efficient database systems, you need to aggregate a large DDR4 DRAM capacity, and we will see the various options available, such as LRDIMM, RDIMM or 3DS architectures. At the DDR4 interface level, new equalization techniques will help support higher speeds. Larger DRAM capacity multiplied by higher bandwidth is the winning recipe for higher performance compute and storage systems.

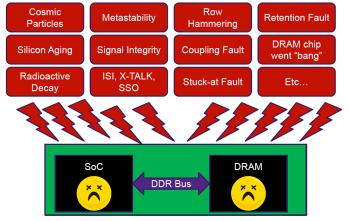

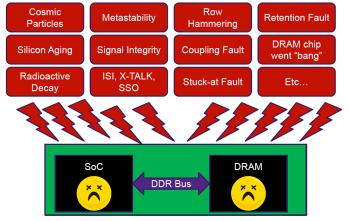

But these advanced electronic systems have to be designed on the latest process technologies, which are more and more sensitive to perturbations such as cosmic particles, meta-stability, signal integrity issues and many more (just take a look at the picture below!). At the same time, these applications are expected to run 24 hours a day, 7 days a week, which translates into the highest possible RAS characteristics.

If we review the various approaches to adding memory capacity, the first is certainly to add DDR4 channels to the CPU die. Current servers already support 4 channels per CPU, with a roadmap to 6 or 8 channels. The limits are quite obvious: available PCB area around the chip, CPU ballout and finally silicon area and beachfront.

Is it possible to extend capacity by plugging more DIMMs into the same channel? In fact, at DDR4 speeds, every wire becomes a transmission line, so adding more DIMMs creates more impedance discontinuities, which in turn create reflections and force a reduction in speed. The typical maximum configuration with unbuffered DIMMs is 32 GB per channel.

A better option, although not the best one, is to add more DRAM ranks with Registered DIMMs (RDIMM), where the address bus is buffered on each DIMM. This requires one RDIMM memory buffer chip per DIMM. In this case a typical DDR4 system is limited to 3 slots (3 DIMMs) with 2 ranks per slot, leading to a typical maximum configuration of 96 GB per channel.

An even better option is to buffer both the address and the data bus on each DIMM, since the number of ranks is limited by the load on the DQ (data) bus. This creates the Load Reduced DIMM (LRDIMM). In this case, the typical maximum capacity increases to 192 GB per channel using 3 quad-rank LRDIMMs built from 8Gb x4 DDR4 devices.
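The per-channel figures quoted above can be cross-checked with a little arithmetic. The sketch below is only an illustration: the function name `channel_capacity_gb` is hypothetical, it assumes a 64-bit data bus without counting ECC devices, and it assumes the RDIMM case also uses 8Gb x4 devices (the text states the device type only for the LRDIMM case).

```python
def channel_capacity_gb(dimms, ranks_per_dimm, device_gbit, device_width, bus_width=64):
    """Data capacity of one DDR4 channel in GB (ECC devices not counted)."""
    # Number of DRAM devices needed to fill the data bus in one rank
    devices_per_rank = bus_width // device_width
    total_gbit = dimms * ranks_per_dimm * devices_per_rank * device_gbit
    return total_gbit // 8  # gigabits -> gigabytes


# RDIMM: 3 DIMMs, 2 ranks each (8Gb x4 devices assumed)
rdimm = channel_capacity_gb(dimms=3, ranks_per_dimm=2, device_gbit=8, device_width=4)
# LRDIMM: 3 quad-rank DIMMs of 8Gb x4 devices
lrdimm = channel_capacity_gb(dimms=3, ranks_per_dimm=4, device_gbit=8, device_width=4)

print(rdimm, lrdimm)  # 96 192
```

Under these assumptions, the doubling from 96 GB to 192 GB comes entirely from going from 2 to 4 ranks per DIMM, which is what buffering the data bus makes possible.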

If you want to increase the capacity above the LRDIMM limit, or integrate a more area-efficient memory structure, you have to add one dimension and move to DDR DRAM dies that are 3D stacked using Through Silicon Vias (TSV). The master die, at the bottom, controls from 2 to 8 dies, so the CPU memory controller PHY only sees one load. The first benefit is obvious, as you directly gain 2x to 8x the capacity of a single die. The system also needs less PCB area and volume (you only use one package for up to 8 dies). Because inter-die loads are better than inter-rank loads, you should benefit from better timing and lower power characteristics. The capability to manufacture 3D stacks with TSV has been demonstrated; the remaining issue is the cost of this advanced solution. We can imagine that for high-end networking, server or storage applications, the cost issue can be solved if the performance improvement justifies higher pricing…

Integrating DDR4 for enterprise applications can be an opportunity to greatly increase DRAM capacity in the system, in such a way that DRAM could replace part of the HDD or SSD capacity, leading to the design of new systems offering 10x to 100x more performance. But we are dealing with the enterprise segment, which means that the system should not fail (Reliability), that if it does fail it can continue operating after the failure (Availability), and that it should be possible to diagnose a failure and even maintain the system without stopping it (Serviceability). In other words, RAS considerations have to be integrated from the DDR4 specification and design stage.

At the device (DDR4 DRAM) level, using ECC or CRC techniques is the most efficient approach, even if it’s not the only one. For basic operation, Hamming codes allow SECDED protection (Single-Error-Correct, Double-Error-Detect), but for advanced operation you have to use block-based codes for SxCyED protection (x-Error-Correct, y-Error-Detect). Implementing block-based codes for DRAM is equivalent to RAID for HDD.
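To make the SECDED idea concrete, here is a minimal sketch of an extended Hamming (8,4) code: 4 data bits, 3 Hamming check bits and 1 overall parity bit. This is a toy illustration only. Real DDR4 ECC subsystems typically protect 64-bit words with wider codes, and the function names `encode` and `decode` are hypothetical.

```python
def encode(nibble):
    """Encode 4 data bits into an 8-bit extended Hamming codeword (list of bits)."""
    d = [(nibble >> i) & 1 for i in range(4)]
    code = [0] * 8  # index 0 = overall parity, indices 1..7 = Hamming positions
    code[3], code[5], code[6], code[7] = d
    code[1] = code[3] ^ code[5] ^ code[7]  # covers positions 1, 3, 5, 7
    code[2] = code[3] ^ code[6] ^ code[7]  # covers positions 2, 3, 6, 7
    code[4] = code[5] ^ code[6] ^ code[7]  # covers positions 4, 5, 6, 7
    code[0] = sum(code[1:]) % 2            # makes the total number of ones even
    return code


def decode(code):
    """Return (status, nibble); status is 'ok', 'corrected' or 'double_error'."""
    code = list(code)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos        # XOR of the positions holding a 1
    overall = sum(code) % 2        # 0 when the overall parity is consistent
    if syndrome == 0 and overall == 0:
        status = "ok"
    elif overall == 1:
        # Single-bit error: the syndrome is its position (0 = overall parity bit)
        code[syndrome] ^= 1
        status = "corrected"
    else:
        # Non-zero syndrome with consistent overall parity: two bits flipped
        return "double_error", None
    nibble = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return status, nibble


word = encode(0b1011)
word[6] ^= 1                 # inject a single-bit error
print(decode(word))          # ('corrected', 11)
```

The overall parity bit is what upgrades a plain single-error-correcting Hamming code to SECDED: it lets the decoder tell a correctable single-bit error apart from an uncorrectable double-bit error.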

These ECC codes are traditionally used for data, but an error occurring on the command/address bus may not be acceptable in enterprise systems. The solution is to integrate parity detection and an alert mechanism on the command/address bus of DDR4 devices or DIMMs.
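The principle of command/address parity can be sketched in a few lines. The example below assumes an even-parity convention (the total number of ones, parity bit included, is even) and uses an arbitrary 7-bit word as a stand-in for the real command/address signals. The names `parity_bit` and `parity_ok` are hypothetical.

```python
def parity_bit(ca_word):
    """Parity bit the controller would send alongside a command/address word."""
    return bin(ca_word).count("1") % 2


def parity_ok(ca_word, par):
    """Receiver-side check: False means an alert should be raised."""
    return parity_bit(ca_word) == par


ca = 0b1011001                 # illustrative command/address pattern
par = parity_bit(ca)
corrupted = ca ^ 0b0000100     # a single bit flipped in flight

print(parity_ok(ca, par))          # True
print(parity_ok(corrupted, par))   # False
```

A single parity bit only detects an odd number of flipped bits and cannot correct anything, which is why it is paired with an alert so that the controller can react, for instance by retrying the command.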

Good design practices can also help increase RAS, such as implementing Data Bus Inversion (DBI) in DDR4, which limits how many data bits can switch the same way at the same time. The physical result is visible: DBI limits the data eye shrinkage caused by simultaneous switching outputs (SSO) or crosstalk, offering a significant margin gain in the system timing budget.
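As a rough illustration of how DBI works on one byte lane, the sketch below inverts a byte whenever more than four of its bits are zero and signals the inversion on a separate active-low flag. This is a simplified model that assumes the DC-balancing flavor of DBI. The names `dbi_encode` and `dbi_decode` are hypothetical.

```python
def dbi_encode(byte):
    """Return (dq, dbi_n): the byte actually driven and the active-low DBI flag."""
    byte &= 0xFF
    zeros = 8 - bin(byte).count("1")
    if zeros > 4:
        return (~byte) & 0xFF, 0   # inverted data, DBI asserted (low)
    return byte, 1                 # data sent as-is, DBI de-asserted


def dbi_decode(dq, dbi_n):
    """Recover the original byte at the receiver."""
    return (~dq) & 0xFF if dbi_n == 0 else dq


dq, dbi_n = dbi_encode(0b00000011)   # six zeros, so the byte gets inverted
print(f"{dq:08b}", dbi_n)            # 11111100 0
```

With this rule, no more than four of the eight data lines are ever driven low in the same transfer, which bounds the worst-case simultaneous switching on the lane.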

DDR4 for enterprise applications offers much more capacity and bandwidth than previous DDR generations, and its RAS capabilities have been greatly enhanced, allowing DRAM to take over part of the role previously played by HDD/SSD. Much higher DRAM capacity has opened the door to higher performance server and storage applications.

By Eric Esteve, IPNEST

Blog post: https://blogs.synopsys.com/committedtomemory/2016/06/08/breaking-down-another-memory-wall/?utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+synopsys%2FptCJ+%28Committed+to+Memory%29

Webinar On-Demand: https://webinar.techonline.com/1878?keycode=CAA1CC
