Part 1 of 2 – Essential Performance Metrics to Validate SoC Performance Analysis

Part 1 provides an overview of the key performance metrics across the three foundational blocks of System-on-Chip (SoC) designs, metrics that are vital for success in the rapidly evolving semiconductor industry. It also presents a holistic approach to optimizing SoC performance, highlighting the need to balance these metrics to meet the demands of cutting-edge applications.

Prolog – SoC Performance Validation: Neglect It, Pay the Price!

In today’s technology-driven world, where electronics and software are deeply intertwined, the ability to estimate performance (as well as power consumption) ahead of tape-out has become a crucial factor in determining a product’s success or failure. Below are some real-world examples of failures that could have been avoided by pre-silicon performance validation:

- A hardware bug in the coherent interconnect fabric of an Android smartphone chip slipped through into the delivered product, causing all caches to flush and forcing the Android system to reboot. This oversight led to a recall and significant financial losses for the developer.

- A hidden firmware bug triggered sudden and random drops in datacenter utilization in a range of 10% to 15%, resulting in sizable financial losses.

- An expert analysis of the October 2, 2023, GM Cruise autonomous vehicle accident in San Francisco revealed that the autonomous driving (AD) controller failed to detect a pedestrian lying on the asphalt after a hit-and-run accident. The failure occurred because latency exceeded the specified limits for detecting and responding to moving targets. As a result, GM suspended driverless operations of its Cruise vehicles for several months and incurred significant penalties.

- After multiple years of unsuccessful attempts to design a high-performance GPU for a mobile chip, a major semiconductor company was forced to adopt a competitor’s solution, incurring substantial costs.

These costly malfunctions and/or missed target specifications could have been avoided through comprehensive pre-silicon performance analysis.

SoC Performance Metrics Critical for Success

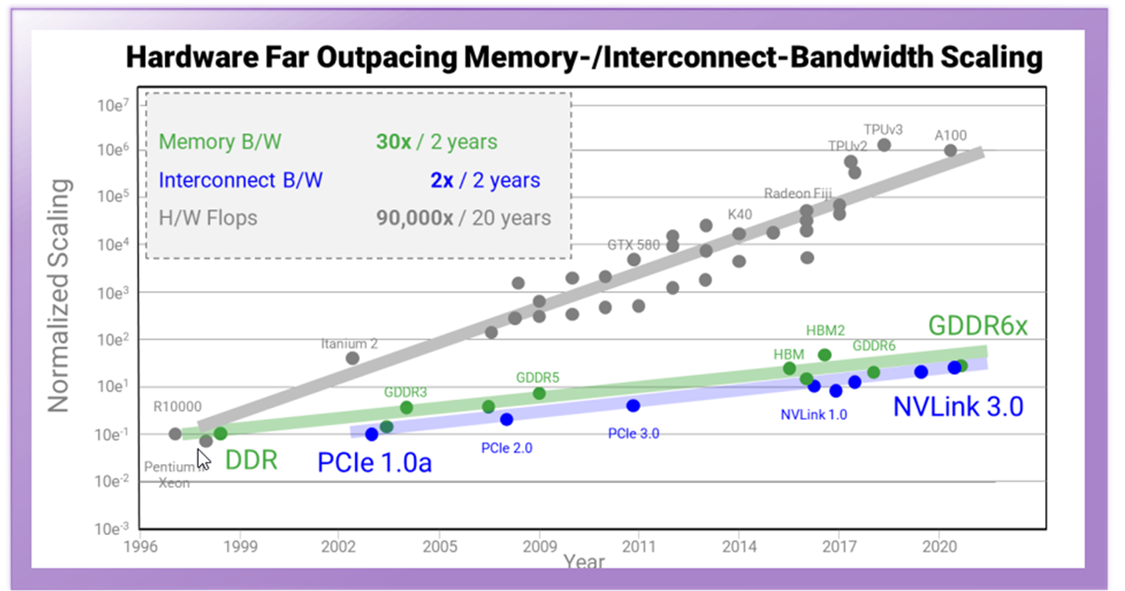

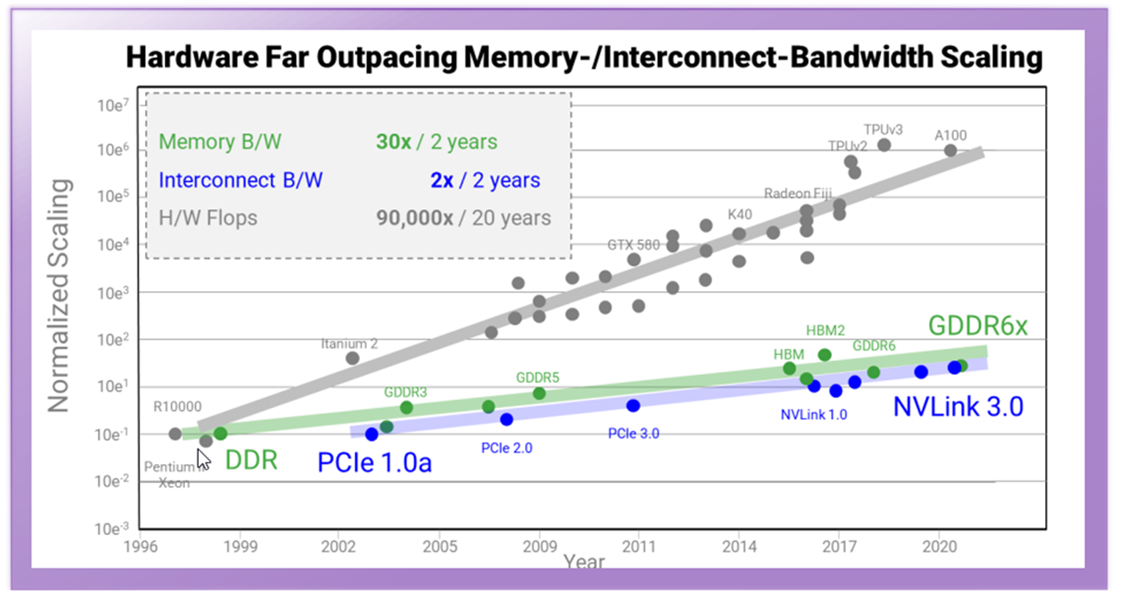

Meeting performance targets in today’s cutting-edge SoC designs often determines whether a product succeeds or fails. Design trends, particularly in AI, show designs running into the memory and interconnect walls, as illustrated in the figures above.

For instance, when evaluating SSD storage, developers focus on two metrics: the read/write speed of the SSD and its total capacity. These figures are crucial for memory companies, especially as the volume of data being transferred continues to grow exponentially. The ability to quickly move data off the chip is vital. In the data center market, memory accounts for roughly 50% of resource usage, acquisition cost, and power consumption. In other words, about half of a data center’s investment goes into purchasing memory, supplying it with sufficient power, and ensuring enough capacity to support parallel memory accesses. As a result, memory performance often becomes a bottleneck.

As another example, performance in the AI market hinges on the rapid processing of algorithms, which is largely determined by how quickly input data can be retrieved from memory and results offloaded back to memory. This is crucial for inference tasks, where real-time decisions depend on quickly retrieving input data and storing results. In automotive applications, for instance, swift data access is essential for split-second decisions like pedestrian detection. Likewise, the faster data offloading occurs, the more efficient data handling becomes, especially in training environments where large datasets must be constantly moved between cores and memory.
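One common way to reason about whether memory or compute limits a workload is the roofline model. The article does not name it, so the following is only an illustrative sketch: the function name and the accelerator figures (peak compute and memory bandwidth) are hypothetical assumptions, not measurements.

```python
def attainable_gflops(peak_gflops: float, mem_bw_gbs: float, flops_per_byte: float) -> float:
    """Roofline bound: throughput is capped by compute or by memory traffic."""
    return min(peak_gflops, mem_bw_gbs * flops_per_byte)


# Hypothetical accelerator (assumed numbers, for illustration only).
PEAK_GFLOPS = 10_000.0   # peak compute in GFLOP/s
MEM_BW_GBS = 800.0       # memory bandwidth in GB/s

for intensity in (1.0, 4.0, 50.0):  # arithmetic intensity in FLOP per byte moved
    perf = attainable_gflops(PEAK_GFLOPS, MEM_BW_GBS, intensity)
    bound = "memory-bound" if perf < PEAK_GFLOPS else "compute-bound"
    print(f"{intensity:5.1f} FLOP/byte -> {perf:8.1f} GFLOP/s ({bound})")
```

Under these assumed numbers, a kernel that performs only a few operations per byte fetched cannot approach peak compute, which is one way to picture the memory wall described above.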

Regardless of the application, ultimately three key metrics define performance in SoC designs:

Latency

In SoC designs, latency refers to the time elapsed between the request of a data transfer or of an operation and the delivery of the data or the completion of the operation.

In any SoC design the architectures of three fundamental functional blocks determine the latency of the entire design:

- Memory Latency: The delay in accessing data in memory. Memory latency is influenced by memory type, memory hierarchy structure, and the number of clock cycles required for access, factors that are typically interdependent.

- Interconnect Latency: The time it takes for data to travel across the SoC’s fabric from one component to another. Critical attributes in an interconnect architecture include the number of hops, sensitivity to congestion, and protocol overhead.

- Interface Protocols Latency: The delay in communicating with external devices through peripheral interfaces. Latency in interface protocols ranges widely from several nanoseconds (PCIe) to microseconds (Ethernet).

High latency in data transfer or response time degrades system performance, especially in real-time or high-performance applications such as AI processing, automotive systems, real-time communications, and high-speed computing.
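As a rough illustration of how the three contributions combine, the sketch below adds them into a single end-to-end budget and reports the dominant term. It assumes a strictly serial path in which the delays simply add (real designs may overlap them), and the class name and nanosecond values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Per-block latency contributions for one transaction, in nanoseconds."""
    memory_ns: float
    interconnect_ns: float
    interface_ns: float

    def total_ns(self) -> float:
        # Assumption: the three delays occur back to back and do not overlap.
        return self.memory_ns + self.interconnect_ns + self.interface_ns

    def dominant(self) -> str:
        parts = {
            "memory": self.memory_ns,
            "interconnect": self.interconnect_ns,
            "interface": self.interface_ns,
        }
        return max(parts, key=parts.get)


# Hypothetical values chosen only to exercise the code.
budget = LatencyBudget(memory_ns=80.0, interconnect_ns=25.0, interface_ns=500.0)
print(f"total = {budget.total_ns()} ns, dominant block = {budget.dominant()}")
```

Knowing which block dominates the budget indicates where optimization effort is likely to pay off first.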

Bandwidth

In SoC designs, bandwidth refers to the maximum data transfer rate between different components within a chip or between a chip and external devices.

As with latency, the architectures of the same three fundamental functional blocks determine the bandwidth of the entire design:

- Memory Bandwidth: The data transfer rate between processing units and memories. It is measured in gigabytes per second (GB/s) and is influenced by factors such as memory type, bus width, and clock speed.

- Interconnect Bandwidth: The data transfer rate between various blocks and subsystems through interconnect fabric like buses or crossbars.

- Interface Protocols Bandwidth: The data transfer rate in the communication channels with external devices through peripheral interfaces.

Low bandwidth in an SoC design leads to data congestion. Conversely, high bandwidth improves performance, particularly for data-intensive tasks such as AI/ML processing. Achieving high bandwidth involves optimizing the architecture of memory subsystems, interconnects, and communication protocols within the SoC.
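The dependence of memory bandwidth on bus width and transfer rate can be made concrete with a short calculation. This is a sketch of theoretical peak bandwidth only; sustained bandwidth is lower in practice. The function name is hypothetical, and the example figures are commonly quoted values (a 16-bit LPDDR5 channel at 6400 MT/s, a 1024-bit HBM3 stack at 6.4 Gb/s per pin) used here as assumptions rather than taken from the article.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfers_per_sec: float) -> float:
    """Theoretical peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * transfers_per_sec / 1e9


# Assumed example configurations (not from the article).
lpddr5_channel = peak_bandwidth_gbs(bus_width_bits=16, transfers_per_sec=6400e6)
hbm3_stack = peak_bandwidth_gbs(bus_width_bits=1024, transfers_per_sec=6.4e9)

print(f"LPDDR5 16-bit channel: {lpddr5_channel:.1f} GB/s")
print(f"HBM3 1024-bit stack:   {hbm3_stack:.1f} GB/s")
```

At the same per-pin rate, the much wider interface accounts for the large difference between the two results.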

Latency optimization is often balanced with bandwidth optimizations to achieve optimal overall performance.
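One way to see why latency and bandwidth must be balanced is Little's law: the bandwidth a requester can sustain is bounded by the amount of data it keeps in flight divided by the round-trip latency. The sketch below applies that relation. The function names and example values are hypothetical, and it assumes fixed-size requests and a constant latency.

```python
import math


def sustained_bandwidth_gbs(outstanding: int, bytes_per_req: int, latency_ns: float) -> float:
    """Upper bound on sustained bandwidth; bytes per nanosecond equals GB/s."""
    return outstanding * bytes_per_req / latency_ns


def required_outstanding(target_gbs: float, bytes_per_req: int, latency_ns: float) -> int:
    """Minimum number of in-flight requests needed to reach a target bandwidth."""
    return math.ceil(target_gbs * latency_ns / bytes_per_req)


# Assumed example: 64-byte requests and 100 ns round-trip latency.
print(sustained_bandwidth_gbs(outstanding=16, bytes_per_req=64, latency_ns=100.0))
print(required_outstanding(target_gbs=50.0, bytes_per_req=64, latency_ns=100.0))
```

Under these assumptions, halving latency doubles the bandwidth achievable with the same number of outstanding requests, while higher latency must be compensated by deeper request queues.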

Accuracy

In SoC designs, data transfer accuracy refers to correctness and reliability of data as it moves between different components within the SoC or between the SoC and external devices.

Data transfer errors can be caused by several factors: congestion, overflow, underflow, incorrect handshakes, noise, interference, crosstalk, signal degradation, or electromagnetic interference (EMI) impacting signal integrity.

Inaccuracies in data transfer can lead to system failures, crashes, loss of data, and incorrect computations. These consequences are especially critical in systems requiring high reliability, such as automotive, medical, or AI-based systems. Ensuring data transfer accuracy is a fundamental aspect of SoC design.
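The article does not say which mechanisms are used to detect transfer errors. A cyclic redundancy check (CRC) is one widely used option, and the sketch below shows the idea in software using Python's standard `zlib.crc32`. The `send` and `receive` helpers and the payload are hypothetical, and real links typically compute such checks in hardware.

```python
import zlib


def send(payload: bytes) -> tuple[bytes, int]:
    """Sender side: attach a CRC-32 computed over the payload."""
    return payload, zlib.crc32(payload)


def receive(payload: bytes, crc: int) -> bool:
    """Receiver side: recompute the CRC and compare it with the one received."""
    return zlib.crc32(payload) == crc


frame, crc = send(b"sensor frame 0001")

# Simulate a single-bit error on the link (for example, from crosstalk).
corrupted = bytearray(frame)
corrupted[3] ^= 0x04

ok_clean = receive(frame, crc)                 # intact transfer passes the check
ok_corrupt = receive(bytes(corrupted), crc)    # corrupted transfer is rejected
print(ok_clean, ok_corrupt)
```

A failed check only detects the error; recovery, such as retransmission, is a separate protocol decision.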

Holistic Approach to SoC Performance Optimization

In today’s highly competitive and demanding SoC design landscape, optimizing the three core attributes that define performance (latency, bandwidth, and accuracy) has become an absolute necessity. This optimization extends beyond hardware alone and includes firmware as well. This is especially true for leading-edge SoC designs, from AI-driven systems to high-performance computing and real-time applications such as self-driving vehicles.

Achieving optimal performance in SoCs requires a holistic approach that balances hardware and software considerations.

Addressing Performance in Memory Architectures

Memory architecture is central to SoC performance, directly affecting both latency and bandwidth. Memory access speeds and capacity are critical for ensuring that processing cores are not starved of data, particularly in high-throughput applications.

Advanced memory architectures are designed to strike a balance between low-latency access and high-bandwidth memory operations, delivering both speed and capacity required for today’s demanding workloads. For example, LPDDR5 (Low Power Double Data Rate 5) and HBM3 (High Bandwidth Memory 3) represent cutting-edge DRAM technologies that have been engineered to maximize performance in power-constrained environments, such as mobile devices, as well as high-performance computing applications.

LPDDR5 offers improvements in power efficiency and data throughput, enabling mobile SoCs and embedded systems to access memory faster while consuming less power. Meanwhile, HBM3 delivers unparalleled bandwidth with stacked memory dies and a wide bus interface, making it ideal for high-performance applications like AI accelerators, GPUs, and data center workloads. By integrating memory closer to the processor and using wide memory buses, HBM reduces the distance that data must travel, minimizing latency while enabling vast amounts of data to be accessed concurrently, and ensures that multiple processing cores or accelerators can access data simultaneously without bottlenecks.

Shared memory architectures enable different processing units—such as CPUs, GPUs, and specialized accelerators—to access the same pool of data without duplicating it across separate memory spaces. This is especially important for heterogeneous computing environments where different types of processors collaborate on a task.

Cache coherency protocols in multi-core systems guarantee that data remains consistent across different cores accessing shared memory. Protocols like MESI (Modified, Exclusive, Shared, Invalid) and MOESI (Modified, Owned, Exclusive, Shared, Invalid) are commonly used to maintain cache coherency, ensuring that when one core updates a piece of data, stale copies held by other cores are invalidated or updated. NVMe (Non-Volatile Memory Express) and UFS (Universal Flash Storage), by contrast, are storage interface protocols rather than coherency protocols.
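To illustrate how the MESI states evolve, the sketch below tracks a single cache line across several cores. It is a simplified textbook model written for illustration: the class names are hypothetical, and write-backs, bus transactions, and timing are not modeled.

```python
from enum import Enum


class State(Enum):
    M = "Modified"
    E = "Exclusive"
    S = "Shared"
    I = "Invalid"


class CacheLine:
    """MESI state of one cache line in each core's private cache (simplified)."""

    def __init__(self, n_cores: int):
        self.states = [State.I] * n_cores

    def read(self, core: int) -> None:
        if self.states[core] is not State.I:
            return  # hit: M, E, and S all allow local reads
        holders = [c for c, s in enumerate(self.states) if c != core and s is not State.I]
        for c in holders:
            self.states[c] = State.S  # M or E holders downgrade to Shared
        self.states[core] = State.S if holders else State.E

    def write(self, core: int) -> None:
        for c in range(len(self.states)):
            if c != core:
                self.states[c] = State.I  # invalidate every other copy
        self.states[core] = State.M


line = CacheLine(n_cores=2)
line.read(0)    # core 0 is the only holder
after_first_read = list(line.states)
line.read(1)    # both cores now share the line
after_second_read = list(line.states)
line.write(1)   # core 1 writes, so core 0's copy is invalidated
after_write = list(line.states)
print(after_first_read, after_second_read, after_write)
```

The write step shows the key property: once one core modifies the line, no other core can keep reading a stale copy.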

Addressing Performance in Interface Protocols Architectures

Interface protocols manage the data traffic between the SoC and its external world, playing a vital role in maintaining SoC performance by directly influencing both latency and bandwidth.

High-performance interface protocols like PCIe and Ethernet are designed to maximize data transfer rates between the SoC and external devices. This prevents data congestion and ensures that high-performance applications can consistently meet their performance requirements without being throttled by communication delays.

Interconnect technologies like CXL (Compute Express Link), an open industry standard, and Infinity Fabric, AMD’s proprietary fabric, are designed to enhance the interconnectivity between heterogeneous computing elements. CXL, for instance, enables high-speed communication between CPUs, GPUs, accelerators, and memory, improving not only data bandwidth but also interconnection efficiency. Infinity Fabric, used extensively in AMD architectures, provides a cohesive framework for connecting CPUs and GPUs, ensuring high-performance data sharing and coordination across computing resources.

In the AI acceleration space, Nvidia currently dominates in part due to its superior interface protocols, such as InfiniBand and NVLink. InfiniBand, known for its low-latency, high-bandwidth performance, is widely used in data centers and high-performance computing (HPC) environments. Nvidia’s NVLink, a proprietary protocol, takes data transfer to the next level by achieving rates of up to 448 gigabits per second. This allows for fast data movement between processors, memory, and accelerators, which is essential for training complex AI models and running real-time inference tasks.

Addressing Performance in Interconnect Networks

Interconnect networks play a pivotal role in SoC designs, acting as the highways that transport data between different components. As SoCs become more complex, with multiple cores, accelerators, and I/O components, the interconnect architecture must be capable of supporting massive parallelism and enabling efficient workload distribution across multiple processors and even distributed systems.

To achieve optimal performance, the interconnect must be designed with throughput maximization and low-latency communication in mind. As data movement becomes increasingly complex, the interconnect network must handle not only the sheer volume of data but also minimize bottlenecks and contention points that can slow down performance.

High-performance interconnect architectures like Network-on-Chip (NoC) and Advanced Microcontroller Bus Architecture (AMBA) have been developed to meet these demands. They reduce contention, minimize communication delays between cores, memory, and peripheral components, and ensure that data is routed efficiently between components. NoCs are designed to scale with the growing complexity of SoCs, offering high levels of parallelism and flexible routing. They also enable a modular design approach in which multiple components can communicate simultaneously without overloading shared buses or memory channels, thereby preventing data congestion and minimizing latency.
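The effect of hop count on NoC latency can be estimated with a simple zero-load model. The sketch below assumes a 2D mesh with dimension-ordered (XY) routing, a topology and routing scheme the article does not specify. The function names and per-hop cycle counts are hypothetical, and congestion is ignored.

```python
def xy_hops(src: tuple[int, int], dst: tuple[int, int]) -> int:
    """Hop count under XY routing on a 2D mesh: the Manhattan distance."""
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])


def zero_load_latency_cycles(
    src: tuple[int, int],
    dst: tuple[int, int],
    router_cycles: int = 2,         # assumed router pipeline depth
    link_cycles: int = 1,           # assumed link traversal time
    serialization_cycles: int = 4,  # assumed cycles to stream the packet body
) -> int:
    """Latency with no contention: per-hop cost times hops, plus serialization."""
    return xy_hops(src, dst) * (router_cycles + link_cycles) + serialization_cycles


hops = xy_hops((0, 0), (3, 2))
latency = zero_load_latency_cycles((0, 0), (3, 2))
print(f"{hops} hops, {latency} cycles at zero load")
```

Under load, queuing delay at congested routers adds to this figure, which is why placing frequently communicating blocks close together matters.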

Similarly, AMBA (Advanced Microcontroller Bus Architecture) has become a standard for connecting processors, memory, and peripherals within an SoC. By incorporating advanced features such as burst transfers, multiple data channels, and arbitration mechanisms, AMBA helps reduce communication delays and ensures that data is routed efficiently across the SoC.
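Arbitration decides which requester gets a shared bus or port in each cycle. The article does not say which policy is used, so the sketch below shows round-robin arbitration purely as one common, fair choice. The class name is hypothetical and the model is behavioral, not a description of any specific AMBA implementation.

```python
from typing import List, Optional


class RoundRobinArbiter:
    """Grants one requester per cycle, rotating priority after each grant."""

    def __init__(self, n_requesters: int):
        self.n = n_requesters
        self.last = n_requesters - 1  # so requester 0 has top priority at first

    def grant(self, requests: List[bool]) -> Optional[int]:
        # Search starting just after the most recently granted requester.
        for offset in range(1, self.n + 1):
            candidate = (self.last + offset) % self.n
            if requests[candidate]:
                self.last = candidate
                return candidate
        return None  # nobody is requesting this cycle


arbiter = RoundRobinArbiter(n_requesters=3)
# Requesters 0 and 1 contend continuously; requester 2 stays idle.
grants = [arbiter.grant([True, True, False]) for _ in range(4)]
print(grants)
```

Because priority rotates, neither contending requester can starve the other, at the cost of not favoring latency-critical traffic.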

In addition to NoC and AMBA, newer interconnect solutions are emerging to address the growing demand for more advanced performance optimization. Coherent interconnects, such as Arm’s Coherent Mesh Network (CMN), allow multiple processors to share data seamlessly and maintain cache coherence across cores, reducing the need for redundant data transfers and improving overall system efficiency.

Addressing Performance in Firmware

Performance tuning goes beyond optimizing the three essential hardware blocks, and it must also encompass the lower layers of the software stack, including bare-metal software and firmware. These software layers are integral to the system’s overall performance, as they interact with the hardware in a tightly coupled, symbiotic relationship. Bare-metal software and firmware act as the interfaces between the hardware and higher-level software applications, enabling efficient resource management, power control, and hardware-specific optimizations. Fine-tuning these layers is crucial because any inefficiencies or bottlenecks at this level can significantly hinder the performance of the entire system, regardless of how well-optimized the hardware may be.

One of the key benefits of firmware optimization is its ability to unlock performance gains without requiring changes to the physical hardware. For instance, firmware updates can be deployed to improve resource allocation, reduce latency, or enhance power efficiency, leading to significant performance improvements with minimal disruption to the system. This is especially critical in embedded systems, IoT devices, and SSDs, where firmware governs how efficiently data is processed and managed.

Beyond storage devices, firmware optimization is also beneficial in domains such as networking equipment, GPUs, and embedded systems.

Conclusions

SoC designs are the backbone of numerous technologies, from smartphones and autonomous vehicles to data centers and IoT devices, each requiring precision-tuned performance to function optimally. Falling short of performance goals can lead to higher costs, delayed time-to-market, and compromised product quality, ultimately affecting a company’s competitiveness in the market. Conversely, hitting these targets means faster, more reliable products that stand out in a crowded tech landscape.

As design cycles shorten and market pressures intensify, achieving performance metrics is no longer optional—it’s a necessity for any successful SoC project.

Read Part 2 of this series – Performance Validation Across Hardware Blocks and Firmware in SoC Designs
