Key Takeaways

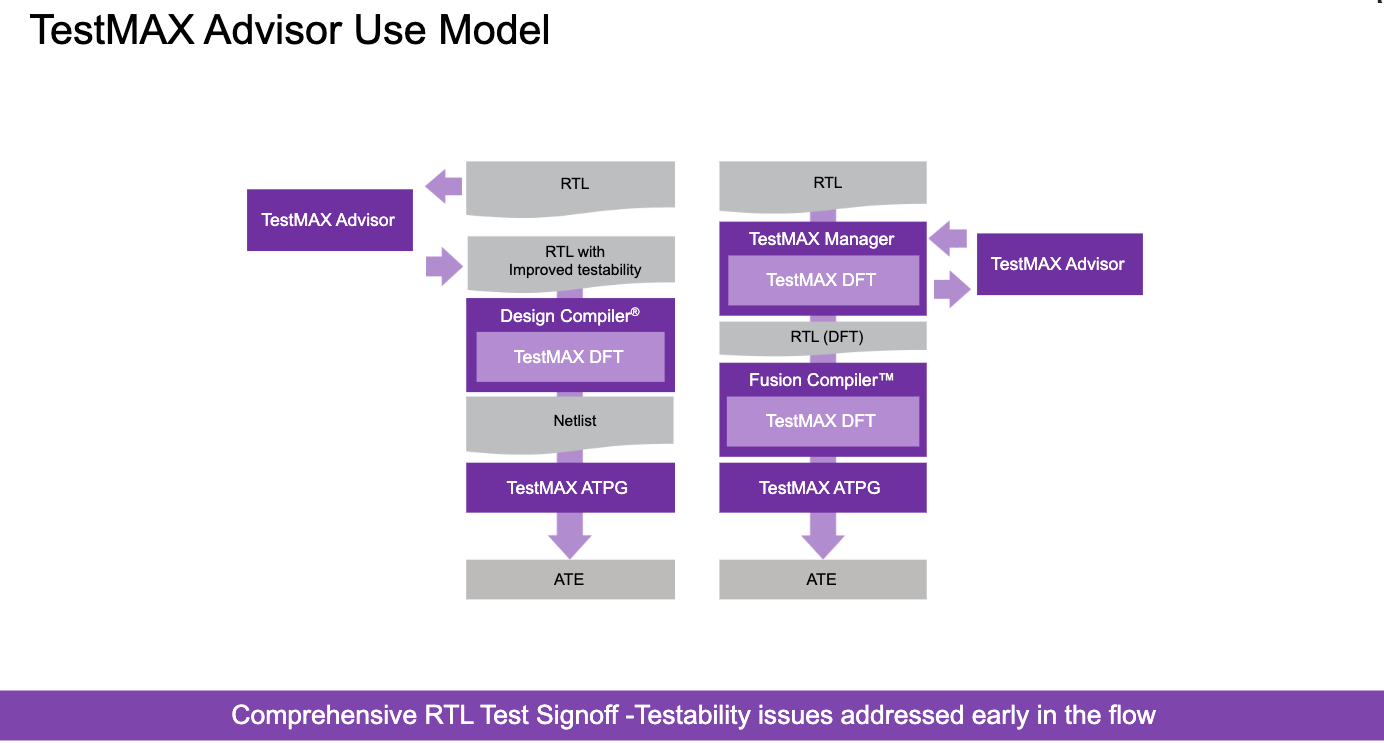

- SpyGlass DFT technology is integrated with Synopsys' TestMAX Advisor to ensure RTL is DFT-clean before test insertion.

- Modern DFT requires support for complex testing methods beyond scan, including boundary scan, MBIST, and LBIST for in-system testing.

- DFT logic must be verified and updated alongside mission mode designs, necessitating efficient connectivity checks to manage resource demands.

Many years ago, not long after we first launched SpyGlass, I was looking around for new areas where we could apply static verification methods and was fortunate to meet Ralph Marlett, a guy (now friend) with extensive experience in DFT. Ralph joined us and went on to build the very capable SpyGlass DFT app. So capable that SpyGlass DFT is now integrated inside Synopsys’ TestMAX Advisor to check that your RTL is DFT-clean prior to test insertion. As a full block or system design stabilizes, you can use TestMAX Advisor technology to insert DFT structures and verify that the modified RTL continues to meet test requirements following insertion. These post-insertion checks are also enabled through the SpyGlass technology. Static analysis proves to be incredibly important in shifting DFT verification left to avoid late-stage schedule surprises.

Modern DFT demands

Test today is much more complex than just scan-based test. We must still support scan but also boundary scan, memory built-in self-test (MBIST), and logic built-in self-test (LBIST). The BIST options are important for in-system testing and, especially now, for on-the-fly self-test in mission-critical applications like cars. On top of these needs, scan test across the many scan chains in a design depends on test compression and decompression logic to feed in test data and control and to read results back.

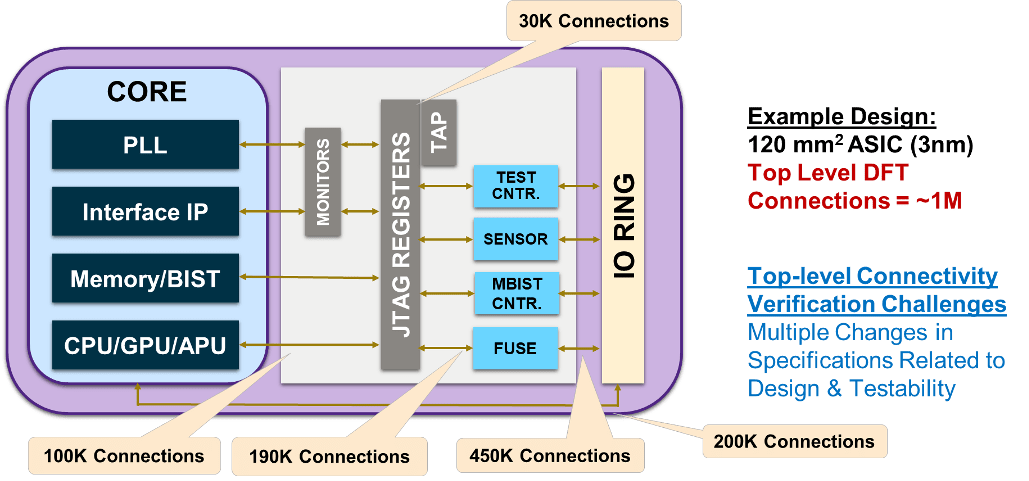

In large SoCs, test infrastructure is commonly built hierarchically: scan chains, compression, MBIST, LBIST and other test logic roll up to test interfaces around IPs and subsystems, which in turn roll up to SoC-level interfaces. Together all this hierarchical test logic, plus its connectivity with the functional elements being tested, becomes a very complex logic overlay on top of the mission mode system.

Figure: SoC Level Connectivity Verification Challenges

OK, a lot of work, but you build it once and you’re ready, apart from some later-stage fine-tuning? Not necessarily. Updates to the mission mode design, whether pre-implementation or late-stage ECOs, commonly require updates to the DFT logic and connectivity.

DFT logic must be verified and regressed like any other logic. We already know that verifying mission mode functionality is very resource- and time-consuming. Using the same dynamic methods to verify DFT logic in addition to mission mode logic would explode schedules and resource demands. Further, dynamic testing can never prove that a path between two points does not exist, which is exactly what some connectivity checks must demonstrate, and formal methods are not effective at these circuit sizes. Some level of dynamic verification is still useful as a double-check but not as the main method.

Fortunately, DFT logic elements such as MBIST and JTAG controllers are pre-verified, so the great majority of verification can be reduced to a limited set of connectivity checks. These can be grouped into value propagation, path existence or non-existence, and conditional connectivity checks. Common examples: in some specified test mode, certain design nodes must be in a specified state or must not be tied off to a specified state, or a sensitizable (or sensitized) path should or should not exist between certain nodes. These concepts can be extended further to test for conditional connectivity, for example that a pin should be tied to a certain state OR should be driven by a certain kind of element.
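To make the first two categories concrete, here is a minimal Python sketch of a value check and a path existence check on a toy netlist. Everything in it is an assumption for illustration: the node names, the `FANOUT` and `TEST_MODE_VALUES` tables, and both helper functions are hypothetical and are not TestMAX commands. It also only looks at structural paths, which is simpler than the sensitizable paths a real tool reasons about.

```python
from collections import deque

# Hypothetical toy netlist: each node maps to the nodes it drives.
FANOUT = {
    "jtag_tdi": ["tap_ctrl"],
    "tap_ctrl": ["mbist_ctrl", "scan_en"],
    "mbist_ctrl": ["sram0"],
    "scan_en": ["core_ff"],
    "func_in": ["core_ff"],
    "core_ff": ["func_out"],
    "sram0": [],
    "func_out": [],
}

# Hypothetical constants seen on nodes once a test mode is applied.
TEST_MODE_VALUES = {"scan_en": 1, "pll_bypass": 1, "pwr_iso": 0}


def path_exists(src, dst, fanout=FANOUT):
    """Breadth-first search: True if a structural path leads from src to dst."""
    seen, queue = {src}, deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for nxt in fanout.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def check_value(node, required, values=TEST_MODE_VALUES):
    """Value check: the node must hold the required constant in test mode."""
    return values.get(node) == required


# A required path, a forbidden path, and a required value.
print(path_exists("jtag_tdi", "sram0"))    # required: should be True
print(path_exists("func_in", "sram0"))     # forbidden: should be False
print(check_value("scan_en", 1))           # required value in test mode
```

Because the search visits every node reachable from the source, a `False` result is an exhaustive statement about this graph. That is the kind of non-existence claim simulation cannot make.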

In short, most of the DFT functionality and its interaction with mission mode functionality can be verified through static connectivity checks. Once appropriate checks are defined, verification regressions should run in times comparable to those for other static checks.

The TestMAX approach

Connectivity checks for TestMAX are steered through Tcl commands, as you might expect. When I first saw sample constraints files in the webinar demos they seemed rather complex, but the webinar hosts, Kiran Vittal (Executive Director Product Management and Markets at Synopsys and fellow Atrenta alum) and Ayush Goyal (Sr. Staff R&D engineer at Synopsys), pointed out that the constraints can easily be constructed to be design independent. No doubt such a constraints file would take some effort to set up the first time, but it could then be reused across many designs.

Here I’m sure I’m going to display how long it has been since I did anything of this nature, but I found the approach intriguing, somewhat similar in principle to SQL-like operations on a database. You build lists of objects for value tests or pairs of objects for path tests, and you define what is required or illegal in such cases. List building is based on name-matching (allowing wildcards). If designs stick to appropriate naming conventions (e.g. a PLL name contains PLL) then the same constraints should work from one design to the next.
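The list-building idea can be sketched in a few lines of Python. This is only an analogy for the query style described above, not the TestMAX Tcl syntax: the node names and the `select` helper are made up for illustration, and the matching uses the standard library's `fnmatch` wildcards.

```python
from fnmatch import fnmatchcase
from itertools import product

# Hypothetical hierarchical node names from a design database.
NODES = [
    "u_core/pll0/clk_out",
    "u_core/PLL1/bypass",
    "u_io/pad_ctrl/oe",
    "u_mem/mbist0/done",
]


def select(pattern, nodes=NODES):
    """Return nodes whose names match a wildcard pattern, ignoring case."""
    lowered = pattern.lower()
    return [n for n in nodes if fnmatchcase(n.lower(), lowered)]


# A list of objects for a value test: anything with PLL in its name.
pll_nodes = select("*pll*")

# Pairs of objects for a path test: every MBIST node against every pad node.
path_pairs = list(product(select("*mbist*"), select("*pad*")))

print(pll_nodes)
print(path_pairs)
```

Nothing in `select("*pll*")` refers to a specific design, which is why constraints written this way can carry over to any design that follows the same naming convention.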

Conditional connectivity checks simply continue this theme. A first check might require a “1” on a certain class of nodes. Whatever cases fail this check are gathered in a second list and checked against another test, for example whether surviving nodes are driven by some node in a different list you have defined. And so on.
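As a rough illustration of that chaining, the Python sketch below runs a first check, collects the failures, and passes only those to a second check. The tables, node names and the `conditional_check` function are all hypothetical; the real checks are expressed as Tcl constraints that this sketch does not attempt to reproduce.

```python
# Hypothetical analysis results: constant value per node (None = not constant)
# and the element driving each node.
NODE_VALUE = {
    "ram0/test_en": 1,
    "ram1/test_en": 0,
    "ram2/test_en": None,
    "ram3/test_en": None,
}
NODE_DRIVER = {
    "ram1/test_en": "tie_lo",
    "ram2/test_en": "mbist_ctrl0/en",
    "ram3/test_en": "func_logic/q",
}
# Hypothetical list of acceptable drivers for nodes not tied to 1.
ALLOWED_DRIVERS = {"mbist_ctrl0/en", "mbist_ctrl1/en"}


def conditional_check(nodes):
    """Pass if a node is tied to 1 OR is driven by an allowed element."""
    # First check: require a constant 1; gather whatever fails.
    not_tied_high = [n for n in nodes if NODE_VALUE.get(n) != 1]
    # Second check, applied only to the failures of the first.
    return [n for n in not_tied_high if NODE_DRIVER.get(n) not in ALLOWED_DRIVERS]


violations = conditional_check(sorted(NODE_VALUE))
print(violations)  # ['ram1/test_en', 'ram3/test_en']
```

Here `ram0` passes the first check, `ram2` is rescued by the second, and the two remaining nodes are reported as violations.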

Easy enough to understand. On performance, Ayush added that he knows of a design with over a billion flat instances and 80 million or more flip-flops in which they were able to run connectivity checks between 100 billion pairs of nodes in less than a day. Dynamic verification would have no chance of competing with that performance or with that completeness of test.
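For a sense of scale, a quick back-of-the-envelope calculation turns those figures into an average rate. The only inputs are the numbers quoted above. Since the run finished in less than a day, the result is a lower bound, not a measured throughput.

```python
# Figures quoted in the webinar: 100 billion node pairs in under one day.
PAIRS_CHECKED = 100_000_000_000
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

# Finishing in less than a day means the average rate is at least this.
min_pairs_per_second = PAIRS_CHECKED / SECONDS_PER_DAY

print(f"at least {min_pairs_per_second:,.0f} pair checks per second")
```

That works out to more than 1.15 million pair checks per second on average.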

Good stuff. You can register to watch the webinar HERE.

