Introduction: The Historical Roots of Hardware-Assisted Verification

Semiconductor innovation continues to follow a relentless trend: exponential growth in transistor density per unit of silicon area. This abundance of silicon fabric has fueled the creativity of design teams, enabling ever more advanced systems-on-chip (SoCs). Yet the very scale that empowers new possibilities also imposes severe challenges on design verification.

As chips grew larger and more complex, the test environment required to validate them expanded proportionally in both scope and sophistication. These test workloads demanded longer execution times to achieve meaningful coverage. The combination of ballooning design sizes and heavier test workloads pushed traditional Hardware Description Language (HDL) simulation environments beyond their limits. In many cases, simulators were forced to swap designs in and out of host memory, creating bottlenecks that drastically slowed execution and reduced verification throughput.

Back in the early 1980s, a few pioneering startups sought alternatives to software simulation. Zycad was among the first to experiment with dedicated hardware-based verification engines. While innovative, these early tools were limited in flexibility and had short lifespans. The breakthrough came shortly thereafter with the rise of reconfigurable platforms built around field-programmable gate arrays (FPGAs). By the mid-1980s, two trailblazing companies, Ikos Systems and Quickturn Design Systems, began developing the first generation of hardware-assisted verification (HAV) tools, including hardware emulators and FPGA-based prototypes. Although the early emulation platforms were large, heavy, unreliable, and expensive to acquire and operate, they introduced a new paradigm in design verification by delivering orders-of-magnitude speedups over simulation alone.

Early Deployment Mode: In-Circuit Emulation

The early adoption of hardware acceleration in the design verification process marked a pivotal shift in how semiconductor designs were tested and validated. The initial deployment mode for HAV was In-Circuit Emulation (ICE). This approach offered two key breakthroughs.

First, the hardware-based verification engine executed the design-under-test (DUT) several orders of magnitude faster than traditional HDL simulation. HDL simulators typically ran, and still run, at effective speeds of tens to hundreds of hertz on large designs. Hardware-assisted platforms, by contrast, could run at megahertz-level speeds, which made it possible to verify much larger and more complex designs within practical timeframes.
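The short Python sketch below puts these rates in perspective by converting a cycle count into wall-clock time. The one-billion-cycle workload and the 100 Hz and 1 MHz rates are illustrative assumptions picked from the ranges above. They are not measurements of any particular tool.

```python
def wall_clock_seconds(cycles: int, clock_hz: float) -> float:
    """Wall-clock time needed to execute `cycles` DUT clock cycles at `clock_hz`."""
    return cycles / clock_hz


# Hypothetical workload: one billion DUT cycles.
CYCLES = 1_000_000_000

# Illustrative effective speeds taken from the ranges quoted in the text.
PLATFORMS = {
    "HDL simulator at 100 Hz": 100.0,
    "Emulator at 1 MHz": 1e6,
}

for name, hz in PLATFORMS.items():
    seconds = wall_clock_seconds(CYCLES, hz)
    print(f"{name}: {seconds:,.0f} s ({seconds / 3600:,.1f} h)")
```

Under these assumptions, the simulator needs about 116 days and the emulator finishes in under 17 minutes.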

Second, ICE made it possible to drive the DUT with real-world traffic rather than relying solely on artificial stimuli such as software-based testbenches or manually crafted test vectors. Engineers plugged the emulator directly into the socket of the actual target system and could then validate the design under realistic operating conditions. This improved the thoroughness of functional verification, because vastly more testing could be executed in the same timeframe. It also improved accuracy, because a real-world testbed offers a fidelity that no virtual testbench could match. Together, thoroughness and accuracy reduced the risk of taping out a faulty design and the massive financial impact that comes with it.

The Devil in the Clock Domains: Need for Speed Adapters

From the outset, a fundamental challenge in ICE deployment became apparent: the inherent clock-speed mismatch between the target system and the DUT hosted on the HAV platform. Target systems—such as processor boards, I/O peripherals, or custom development environments—operate at full production speeds, typically ranging from hundreds of megahertz to several gigahertz. In contrast, the emulated DUT runs at much lower frequencies, often only a few megahertz, constrained by the intrinsic limitations of hardware emulation platforms.

This vast timing disparity, often spanning three or more orders of magnitude, makes cycle-accurate interaction impossible, resulting in potential data loss, synchronization issues, and non-functional behavior. To address this, speed adapters were introduced as an intermediary layer. Conceptually implemented using FIFO buffers, these hardware components were inserted between the emulator’s I/O and the target system to decouple the asynchronous, high-speed nature of the real world from the deterministic, slower execution of the DUT.
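The toy Python model below shows why a plain FIFO can absorb a burst but cannot bridge a sustained rate gap. Time advances in fast-clock ticks: the target pushes one word per tick, and the DUT pops one word per slow cycle. The function name, the 1000:1 ratio (for example, a 1 GHz target against a 1 MHz DUT), the 4096-word depth and the burst length are all hypothetical. The model also assumes the target cannot be paused, so it has no backpressure.

```python
from collections import deque


def simulate_fifo_adapter(burst_words: int, ratio: int, depth: int) -> tuple[int, int]:
    """Toy model of a FIFO-based speed adapter with no flow control.

    Time advances in fast-clock ticks. The target side pushes one word per tick
    for `burst_words` ticks; the DUT side pops one word every `ratio` ticks,
    where ratio = f_target / f_dut. Returns (delivered, dropped).
    """
    fifo: deque[int] = deque()
    delivered = dropped = 0
    for tick in range(burst_words):
        # Fast side: the target cannot be paused, so a full FIFO means a lost word.
        if len(fifo) < depth:
            fifo.append(tick)
        else:
            dropped += 1
        # Slow side: one pop per DUT clock cycle.
        if tick % ratio == ratio - 1 and fifo:
            fifo.popleft()
            delivered += 1
    # Words still buffered after the burst drain at the slow rate without loss.
    delivered += len(fifo)
    return delivered, dropped


# Hypothetical numbers: 1 GHz target vs 1 MHz DUT (ratio 1000), 4096-word FIFO.
delivered, dropped = simulate_fifo_adapter(burst_words=10_000, ratio=1000, depth=4096)
print(f"delivered={delivered} dropped={dropped}")
```

Under these assumptions, the 10,000-word burst loses 5,895 words. The FIFO holds only its depth plus the few words the DUT drains in the meantime. Sustained traffic at a 1000:1 ratio overflows any finite buffer, so buffering alone cannot replace flow control.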

Early Implementations of Speed Adapters (1985-1995)

ICE promised dramatic improvements in verification productivity by virtue of its high performance and realistic test workloads. However, speed adapters brought challenges of their own in flexibility, scalability, reusability, single-user operation, debugging efficiency, remote access, and reliability.

Lack of Flexibility

Speed adapters were protocol-specific hardware implementations, each designed to support a particular interface standard, such as Ethernet and, in later years, USB or PCIe. This made them inflexible and non-generic. Implementing a new adapter for a different protocol often required custom engineering work, firmware development, and precise synchronization logic. Even small variations in protocol versions or signal timing could lead to incompatibilities. As a result, the setup process became complex, error-prone, and time-consuming.

Limited Reusability

Each speed adapter was essentially a one-off solution, tailored for a specific interface and usage scenario. Reusing an adapter across projects, even those with similar hardware, often proved impractical due to slight architectural or timing differences. Furthermore, since these adapters were fixed-function hardware, they did not allow for corner-case testing, protocol tweaking, or exploratory “what-if” analysis. This rigidity hampered their usefulness in iterative or exploratory verification workflows.

Frustrating and Error-prone Design Debug

One of the most serious drawbacks of ICE mode was the difficulty of debugging the DUT. When the target system drove the design, the behavior of the design under verification (DUV) became non-deterministic. A bug might appear in one run and vanish in the next, which made root-cause analysis extremely difficult. This lack of repeatability stemmed from the asynchronous, event-driven interaction between the real system and the slower emulator. Without deterministic control over inputs and timing, capturing and tracing a failure became a frustrating and prolonged process.

Cumbersome Remote Access

In increasingly distributed engineering environments, remote accessibility became a key requirement. ICE mode, however, suffered from a fundamental limitation: someone needed physical access to connect the target system to the emulator or disconnect it. Without someone on-site, remote teams were effectively blocked from initiating or modifying test sessions. This created a bottleneck for globally distributed development teams and undermined continuous integration workflows.

Reliability Risks and Maintenance Overhead

Like any hardware, speed adapters had a finite mean time between failures (MTBF). A malfunctioning adapter might introduce intermittent or misleading behavior, leading verification engineers to chase phantom bugs in the DUT when the issue actually lay in the speed adapter hardware. This could significantly delay debug cycles and erode confidence in the verification platform. As a preventive measure, the adapters required regular maintenance and validation, adding operational overhead and further complicating the test setup.

The Virtual Takeover: The Shift from ICE to Virtual Verification (1995-2015)

Within just a few years, the limitations of the first wave of speed adapters became apparent. The rigidity and simplicity of their designs confined them to little more than passing data between two clock domains. Their inability to handle flow control, packet integrity, and full system synchronization created significant bottlenecks that could not scale with the growing complexity of SoCs. As a result, the EDA industry shifted its focus toward a more sustainable approach: virtualization.

ICE gradually lost its appeal and ceased to be the default method for system verification. It was pushed to the final stages of validation, used mainly for full system testing with real-world traffic just before tape-out.

Meanwhile, a promising approach emerged in the form of transaction-based emulation, often referred to as virtual ICE or transaction-level modeling (TLM). This method did not drive the DUT with physical target systems through physical speed adapters. Instead, it used software-based virtual interfaces, in effect digital twins of the physical speed adapters, to drive and monitor the DUV through high-level transactions.

This shift from bit-signal-accurate physical emulation to protocol-accurate virtual emulation marked a critical turning point in the evolution of HAV platforms. It enabled a new class of verification use modes that were more flexible, broader in application, and better aligned with modern SoC design methodologies.

The advantages of this shift were numerous and transformative:

- Higher Performance: By replacing cycle-accurate pin-level I/O with abstracted transactions, the emulator could operate at significantly higher effective speeds, enabling faster verification cycles.

- Greater Debug Visibility: All communication now occurred in the digital domain, synchronized with the emulator, so the test environment became deterministic. Engineers could log, trace, and debug data flows without intrusive probes or external logic analyzers.

- Simplified Setup: Virtual platforms eliminated the need for complex cabling, custom sockets, or fragile speed adapters, making emulation setups more robust and scalable.

- Remote Accessibility: Engineers could now run emulations from anywhere via remote access to the host workstation, making collaboration easier across geographies.

- Software Co-Verification: Perhaps most importantly, transaction-based emulation enabled tight integration with embedded software environments. Developers could boot operating systems, run production firmware, and validate complete software stacks alongside hardware, long before first silicon became available.
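One way to illustrate the performance argument is to count host-emulator synchronization points, which typically dominate co-simulation run time. The Python sketch below is a deliberately simplified model. Its assumptions (an 8-bit bus, one word per cycle, a 4 KiB maximum message size, and all function names) are hypothetical and are not parameters of any commercial transactor.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for positive b."""
    return -(-a // b)


def syncs_signal_level(payload_bytes: int, bus_width_bits: int = 8) -> int:
    """Signal-level coupling: the host and the emulator exchange pin values
    every clock cycle, and one bus-width word crosses per cycle."""
    return ceil_div(payload_bytes * 8, bus_width_bits)


def syncs_transaction_level(payload_bytes: int, max_message_bytes: int = 4096) -> int:
    """Transaction-level coupling: the host sends whole messages; a transactor
    inside the emulator expands each one into cycle-level pin activity locally."""
    return ceil_div(payload_bytes, max_message_bytes)


PAYLOAD = 1 << 20  # 1 MiB of test traffic (hypothetical)
print("signal-level syncs:     ", syncs_signal_level(PAYLOAD))
print("transaction-level syncs:", syncs_transaction_level(PAYLOAD))
```

Under these assumptions, moving 1 MiB takes 1,048,576 synchronizations at the signal level but only 256 at the transaction level. The emulator therefore spends far more time running and far less time waiting on the host.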

Collectively, these breakthroughs elevated HAV platforms from a specialized tool to an indispensable cornerstone of the verification toolbox. By the late 2000s, no serious SoC development could achieve predictable schedules and quality without leveraging virtualized verification methodologies.

Third Generation of Speed Adapters (2015-Present)

Over the past decade, the EDA industry, recognizing the unique testing capabilities of ICE, revisited the technology and committed significant effort to overcoming the shortcomings of earlier generations of speed adapters.

The focus shifted toward mitigating critical bottlenecks that had long constrained flexibility, scalability, debugging accuracy, and system fidelity. This third generation of speed adapters introduced greater configurability, smarter buffering techniques, and a range of advanced mechanisms designed to bridge the gap between functional verification and true system-level validation. These enhancements paved the way for broader adoption of ICE in the verification of increasingly complex SoCs.

Major Enhancements of Third-Generation Speed Adapters

Enhanced Design Debug

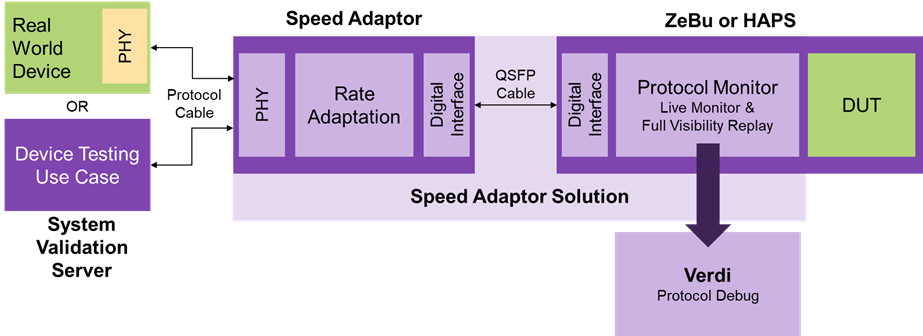

Modern speed adapters have significantly enhanced debug capabilities in ICE environments. Protocol analyzers and monitors are now instantiated inside the adapter on both its high-speed and low-speed sides, enabling bidirectional traffic observation. Correlating activity across these interfaces gives engineers a protocol-aware, non-intrusive, high-level debug environment that was previously unattainable in ICE (see Figure 1).

This approach mirrors what has long been available in virtual platforms. In virtual environments, whether handling Ethernet packets or PCIe transactions, transactors include C-based monitoring code that inspects the traffic. The results are displayed in a protocol-aware viewer, allowing engineers to analyze packet-level activity directly, similar to what an Ethernet packet sniffer would provide, without resorting to low-level waveform dumps. The result is improved debug efficiency and faster root-cause analysis in hardware-assisted verification.
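The Python sketch below gives a rough idea of the decoding such a monitor performs. It turns raw bytes into a one-line, sniffer-style summary of an Ethernet II header. It is not the vendors' C-based monitoring code. The function names are hypothetical, and the sketch ignores VLAN tags, the FCS, and higher-layer parsing.

```python
import struct
from dataclasses import dataclass

ETH_HEADER = struct.Struct("!6s6sH")  # destination MAC, source MAC, EtherType
ETHERTYPES = {0x0800: "IPv4", 0x0806: "ARP", 0x86DD: "IPv6"}


@dataclass
class EthernetHeader:
    dst: str
    src: str
    ethertype: int


def format_mac(raw: bytes) -> str:
    return ":".join(f"{b:02x}" for b in raw)


def decode_header(frame: bytes) -> EthernetHeader:
    """Decode the 14-byte Ethernet II header at the start of `frame`."""
    if len(frame) < ETH_HEADER.size:
        raise ValueError("frame shorter than an Ethernet II header")
    dst, src, ethertype = ETH_HEADER.unpack_from(frame)
    return EthernetHeader(format_mac(dst), format_mac(src), ethertype)


def summarize(frame: bytes) -> str:
    """Protocol-aware one-line summary, similar to a packet-sniffer listing."""
    hdr = decode_header(frame)
    kind = ETHERTYPES.get(hdr.ethertype, f"0x{hdr.ethertype:04x}")
    return f"{hdr.src} -> {hdr.dst} {kind} len={len(frame)}"


# Hypothetical broadcast ARP frame: header followed by a zeroed 28-byte payload.
frame = bytes.fromhex("ffffffffffff" "020000000001" "0806") + bytes(28)
print(summarize(frame))
```

A monitor on each side of the adapter could emit summaries like this. Correlating the two streams would let an engineer check whether a frame that left the target system actually reached the DUT.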

Verification via Real PHY and Interoperability

All modern peripheral interfaces, whether PCI Express, USB, Ethernet, CXL, or others, are built on two fundamental blocks: a controller and a physical layer (PHY).

- The controller is a purely digital block that implements the logic of the communication protocol: encoding, packet handling, flow control, and error detection.

- The PHY is a mixed-signal design, bridging the digital and analog worlds. It manages voltage levels, current drive, impedance, timing margins, and the electrical signaling that allows data to move across pins, connectors, and transmission lines.

In transaction-level flows, the PHY cannot be accurately represented. As a workaround, it is replaced by a simplified or “fake” model that supports basic register programming but omits the critical analog behaviors that dominate real-world operation. Such models cannot uncover issues that manifest only at the physical interface.

To overcome these blind spots, modern speed adapters integrate real PHYs into the hardware-assisted verification flow. By introducing genuine physical interfaces into the loop, design teams can validate their DUT against real-world conditions rather than abstracted models. This capability brings several tangible benefits:

- Accurate link training and initialization – protocols like PCIe, CXL, and USB require precise electrical handshakes to establish communication. These can only be exercised with a real PHY.

- Timing and signal integrity validation – engineers can detect marginal failures, jitter, or power-related issues invisible to fake models.

- Protocol compliance at the electrical layer – ensures interoperability with other vendors’ devices and adherence to industry standards.

- Higher silicon confidence – by testing against true physical interfaces, teams dramatically reduce the risk of surprises in silicon bring-up.

Today’s design teams are confronted with two very different, yet equally critical, challenges. On one hand, they must continue to validate and support legacy interfaces that remain essential in many deployed systems. In these cases, speed adapters can integrate an FPGA that implements a legacy PHY, enabling seamless connection to the external environment and ensuring backward compatibility with established standards.

On the other hand, teams are also working with advanced, next-generation protocols that are still being defined and refined. For these emerging standards, speed adapters incorporate real PHY IP test chips, giving direct access to the real-world analog behaviors that virtual models cannot capture.

Companies like Synopsys, with expertise in both PHY IP and hardware-assisted verification, are at the forefront of this shift. Their solutions allow design teams to test interoperability earlier, accelerate development cycles, and bring new products to market with far greater confidence.

System Validation Server

For protocols such as PCIe, relying on a standard host server as a test environment is not feasible. The limitation stems from the server’s BIOS, which enforces strict timeout settings. These timeouts are easily exceeded by the relatively long response times of an emulation system. Once triggered, the timeouts cause the validation process to stall or hang, preventing meaningful progress.

To achieve a complete ICE solution, this challenge must be addressed head-on. The answer lies in a purpose-built System Validation Server (see Fig. 2) equipped with a modified BIOS. By removing or adjusting the restrictive timeout parameters, the server can operate seamlessly with the slower responses of the emulated design.
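Simple arithmetic shows why the timeouts trip. Every latency measured in DUT clock cycles stretches by the ratio of silicon speed to emulated speed. The sketch below uses hypothetical values for illustration: a 20,000-cycle response, 1 GHz and 1 MHz clocks, and a 10 ms host timeout. Real limits depend on the BIOS and on how the PCIe completion timeout is configured.

```python
def response_time_s(dut_cycles: int, dut_clock_hz: float) -> float:
    """Wall-clock time for a DUT response that takes `dut_cycles` clock cycles."""
    return dut_cycles / dut_clock_hz


def times_out(dut_cycles: int, dut_clock_hz: float, host_timeout_s: float) -> bool:
    """True if the host gives up before the DUT's response arrives."""
    return response_time_s(dut_cycles, dut_clock_hz) > host_timeout_s


def required_timeout_s(dut_cycles: int, dut_clock_hz: float, margin: float = 2.0) -> float:
    """Host timeout that covers the response with a (hypothetical) safety margin."""
    return response_time_s(dut_cycles, dut_clock_hz) * margin


RESPONSE_CYCLES = 20_000  # hypothetical completion latency in DUT cycles
HOST_TIMEOUT_S = 0.010    # hypothetical 10 ms host-side timeout

for label, hz in [("silicon at 1 GHz", 1e9), ("emulator at 1 MHz", 1e6)]:
    print(label, "times out:", times_out(RESPONSE_CYCLES, hz, HOST_TIMEOUT_S))
```

Under these assumptions, a response that arrives in 20 µs on silicon takes 20 ms in emulation. A modified BIOS would therefore need to wait at least that long, and in practice longer.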

This out-of-the-box solution offers immediate, practical benefits. Instead of spending months in back-and-forth iterations with an IT department to obtain and configure a host machine with a customized BIOS, validation teams can deploy a ready-made server that works from day one. The result is a dramatic reduction in setup overhead, faster time-to-validation, and a more reliable environment for exercising advanced protocols like PCIe under real-world conditions.

Ultra-High-Bandwidth Communication Channel

One of the most transformative advances in modern speed adapters is the introduction of ultra-high-bandwidth communication channels between the emulator and the adapter. Today’s leading-edge solutions can sustain up to 100 Gbps over this channel, raising overall validation throughput.

By approaching the communication rates of the real silicon, speed adapters allow engineers to stress-test SoCs under realistic workloads, validate protocol compliance at line rate, and observe system behavior under continuous, heavy traffic conditions.

Moreover, this capability ensures that networking-intensive applications, such as those found in data centers, 5G infrastructure, and high-performance computing systems, can be tested in environments that closely mimic deployment scenarios. The result is a dramatic increase in confidence that designs will not only function but also perform optimally once in the field.
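Computing the maximum frame rate of an Ethernet link makes “line rate” concrete for a stress test. The sketch below uses Ethernet's standard per-frame wire overhead: 8 bytes of preamble and start-of-frame delimiter plus a 12-byte minimum inter-frame gap. It illustrates the traffic a real 100 Gb/s port generates. It is not a model of any vendor's emulator-to-adapter channel, and the function name is hypothetical.

```python
PREAMBLE_SFD_BYTES = 8   # preamble plus start-of-frame delimiter
MIN_IFG_BYTES = 12       # minimum inter-frame gap


def max_frames_per_second(link_bps: float, frame_bytes: int) -> float:
    """Upper bound on frames per second at line rate.

    `frame_bytes` counts the frame from destination MAC through FCS,
    e.g. 64 for a minimum-size frame.
    """
    bits_on_wire = (frame_bytes + PREAMBLE_SFD_BYTES + MIN_IFG_BYTES) * 8
    return link_bps / bits_on_wire


for size in (64, 1518):
    mpps = max_frames_per_second(100e9, size) / 1e6
    print(f"{size:>5}-byte frames at 100 Gb/s: {mpps:.2f} Mpps")
```

At line rate, a 100 Gb/s port carries about 148.81 million minimum-size frames per second, or about 8.13 million maximum-size (1518-byte) frames.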

Multi-user Deployment

A single speed adapter can be logically partitioned to support concurrent multi-user operation. The adapter’s port resources can be allocated in their entirety to a single user, or subdivided into up to three independent partitions, each assigned to a different user.

For instance, a 12-port Ethernet speed adapter may be configured as a monolithic resource (all 12 ports mapped to one user) or segmented into three logical groups of four ports each, enabling three users to access discrete subsets of the adapter in parallel.

The same partitioning capability applies to PCIe interfaces: the adapter can expose up to three independent PCIe links, each operating in isolation and assigned to a separate user. These features (resource partitioning, port multiplexing, and independent link allocation) are natively supported in the speed adapter’s architecture.
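The Python sketch below shows one possible allocation policy for the 12-port example. The function name, the equal and contiguous port groups, and the team names are hypothetical. The article states only that ports can go to a single user or be split into up to three partitions, each owned by a different user.

```python
def partition_ports(num_ports: int, users: list[str],
                    max_partitions: int = 3) -> dict[str, list[int]]:
    """Assign an adapter's ports to 1..max_partitions users.

    Equal, contiguous port groups are an assumption of this sketch.
    """
    if not 1 <= len(users) <= max_partitions:
        raise ValueError(f"need between 1 and {max_partitions} users")
    if len(set(users)) != len(users):
        raise ValueError("each partition must belong to a different user")
    if num_ports % len(users):
        raise ValueError("ports must divide evenly among users in this sketch")
    per_user = num_ports // len(users)
    return {user: list(range(i * per_user, (i + 1) * per_user))
            for i, user in enumerate(users)}


# The 12-port Ethernet example from the text: one owner, or three users.
print(partition_ports(12, ["team_a"]))
print(partition_ports(12, ["team_a", "team_b", "team_c"]))
```

Requiring distinct users per partition mirrors the text. A real adapter may allow uneven splits, which this sketch rejects.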

Advanced Buffering and Flow-Control Techniques

Architectural refinements in buffering and flow control eliminated packet drops and ensured deterministic behavior across the fast and slow clock domains. They also allowed speed adapters to scale with large verification workloads.
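The article does not say which mechanisms vendors use. Credit-based flow control is one widely used technique that eliminates drops, and PCIe uses the same principle for its own flow control. The sender may transmit only while it holds credits, one per free buffer slot, and the receiver returns a credit each time it consumes a word. The toy model below uses hypothetical names and parameters. It shows that the buffer never overflows and every word arrives, with the slow side setting the pace.

```python
def simulate_credit_flow(total_words: int, ratio: int, depth: int) -> tuple[int, int, int]:
    """Credit-based flow control between a fast sender and a slow receiver.

    Time advances in fast-clock ticks; the receiver consumes one word every
    `ratio` ticks. Returns (delivered, peak_occupancy, ticks_elapsed).
    """
    credits = depth          # one credit per free slot in the receiver's buffer
    occupancy = peak = 0
    sent = delivered = 0
    tick = 0
    while delivered < total_words:
        # Fast side: transmit only while holding a credit, so nothing is dropped.
        if sent < total_words and credits > 0:
            credits -= 1
            occupancy += 1
            sent += 1
        peak = max(peak, occupancy)
        # Slow side: consume one word per slow cycle and return its credit.
        if tick % ratio == ratio - 1 and occupancy > 0:
            occupancy -= 1
            delivered += 1
            credits += 1
        tick += 1
    return delivered, peak, tick


# Hypothetical parameters: 10:1 clock ratio, 16-entry buffer, 1,000 words.
print(simulate_credit_flow(total_words=1000, ratio=10, depth=16))
```

The slow side bounds throughput, here at one word per 10 ticks. Unlike a FIFO without backpressure, however, no word is ever lost, and peak occupancy never exceeds the credit count.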

Conclusion

Today’s speed adapters combine flexible multi-user deployment, stable and exceptionally fast high-speed links, deterministic flow control, improved DUT debuggability, and proven resilience under continuous datacenter workloads. With these advances, third-generation speed adapters have moved ICE from a niche verification mode to a mainstream, indispensable tool for system validation.

Emulation platforms equipped with third-generation speed adapters finally bridge the gap between two disciplines. Functional verification validates DUT functionality and I/O protocols, while system-level validation ensures that designs interact correctly with the physical world. This holistic approach closes a long-standing blind spot in hardware-assisted verification, enabling faster debug cycles, higher-quality silicon, and first-pass success in increasingly complex SoCs.
