Part 2 of 2 – Performance Validation Across Hardware Blocks and Firmware in SoC Designs

Part 2 explores the performance validation process across hardware blocks and firmware in System-on-Chip (SoC) designs, emphasizing the critical role of Hardware-Assisted Verification (HAV) platforms. It outlines the validation workflow driven by real-world applications and the best practices for leveraging HAV platforms to ensure comprehensive performance validation and meet evolving design demands.

Performance Validation Models in the SoC Verification Flow

It is crucial to distinguish between performance validation and architectural exploration, as these processes serve different purposes in the design and development cycle. Architectural exploration focuses on selecting the optimal SoC architecture to achieve specific design goals, such as maximizing performance or minimizing silicon area. In contrast, performance validation evaluates the actual performance of an architectural implementation to ensure it meets the intended specifications and operational requirements.

Performance validation is a comprehensive, multi-phase process that begins at the architectural level and progressively refines through the abstraction hierarchy, culminating in post-silicon validation. Each stage ensures the design remains aligned with performance expectations, mitigating risks before final production.

In the pre-silicon phase, performance validation relies on two distinct abstraction models, in contrast to software validation, which can utilize hybrid modeling techniques:

- Approximately Timed (AT) Models: These models are used for early performance estimations, emphasizing functional correctness and coarse-grained timing analysis. Typically written in SystemC, AT models help identify potential bottlenecks and provide an initial understanding of the system’s behavior.

- Cycle-Accurate (CA) Models: Offering detailed, clock-level timing accuracy, CA models are essential for evaluating critical performance metrics such as cycles per second, frames per second, or packets per second. These metrics are key to assessing system efficiency and ensuring the design meets specified requirements. CA models are also implemented in SystemC. When CA models are unavailable, performance validation must be conducted using cycle-accurate RTL simulations.
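The metrics named above are rates derived from a cycle count. As a minimal sketch of that conversion, assuming a hypothetical 1 GHz clock and made-up counts (the function name and all numbers are illustrative, not taken from any real design or tool):

```python
def events_per_second(events: int, cycles: int, clock_hz: float) -> float:
    """Convert an event count observed over a span of clock cycles into a rate."""
    if cycles <= 0:
        raise ValueError("cycles must be positive")
    # Elapsed time is cycles / clock_hz, so rate = events / (cycles / clock_hz).
    return events * clock_hz / cycles


# Hypothetical run: 30 frames completed in 500 million cycles of a 1 GHz clock.
fps = events_per_second(events=30, cycles=500_000_000, clock_hz=1e9)
print(fps)  # 60.0
```

The same function gives packets per second when `events` is a packet count. The result is only as accurate as the cycle count fed into it, which is why the model's timing fidelity matters.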

As the design progresses from abstraction to physical implementation, the final phase of performance validation takes place after the pre-silicon stage, during silicon engineering sample (ES) testing. This stage ensures that the actual silicon meets performance expectations under real-world operational conditions. It serves as the ultimate verification step to confirm that the device functions as intended and satisfies all specified requirements before mass production.

By differentiating these processes and employing appropriate tools at each stage, developers can optimize both the architecture and performance of their designs, reducing time-to-market and improving reliability.

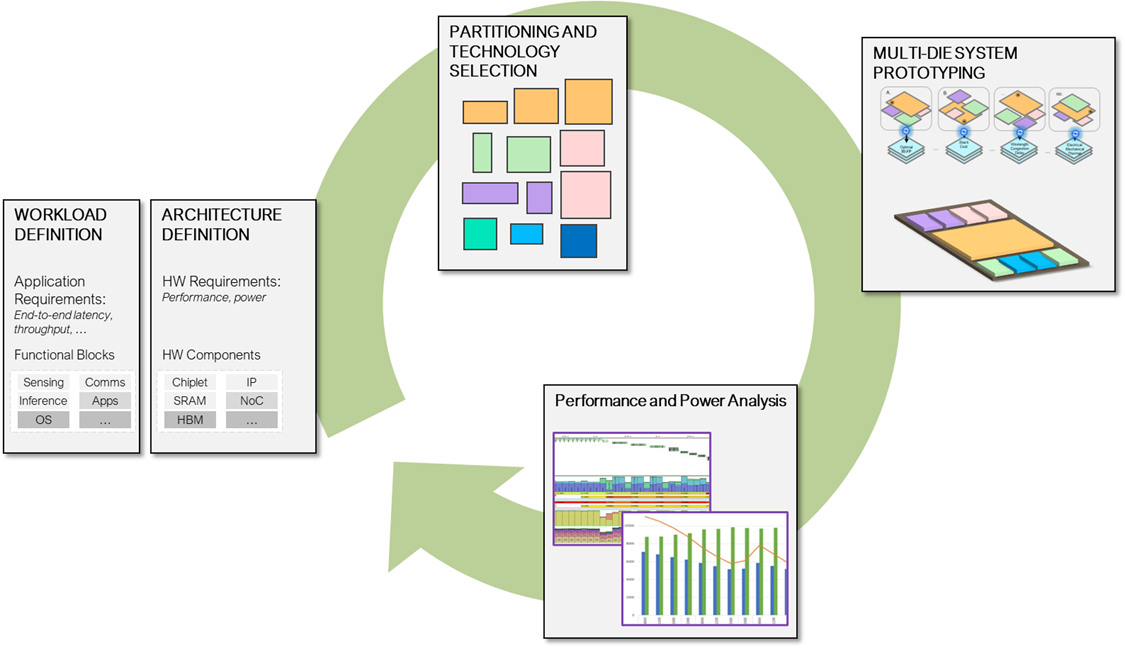

As the figure above shows, the analysis first takes place at the architectural level (AT modeling), where models of the workload and the architecture are used to explore whether a particular architecture can support a given workload performance under the constraints that a multi-die implementation imposes. Later, at the CA level, the exact performance is measured by running the actual software workload on the detailed target architecture.

Performance Validation Methods

Performance validation can be performed at two key points: at the I/O design boundary or within the design itself.

Performance Analysis at the DUT I/O Boundary

This method measures performance externally, at the Design Under Test (DUT) interface. It often involves:

- PCI Monitors: These assess DUT performance at the pin level, enabling precise, cycle-accurate testing. Real applications, such as I/O meter tools, are executed in virtual environments to simulate traffic.

- Cycle-Accurate Data Capture: Monitors at the RTL pin level collect detailed performance metrics, providing insights into the DUT’s behavior under test conditions.

Performance Analysis Inside the DUT

Internal analysis is employed to diagnose issues. This approach involves:

- DPI Calls and Internal Monitors: These tools, combined with post-simulation scripts, help pinpoint performance bottlenecks within the system.

- Performance Tracking: Data is typically logged and managed in spreadsheets, with a framework of test cases designed to evaluate specific performance metrics.
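A post-simulation check over logged results can be sketched as follows. This is an illustration only: the CSV layout, column names, test names, and limits are all hypothetical stand-ins for whatever a team exports from its own tracking spreadsheet.

```python
import csv
import io

# Hypothetical export of a performance-tracking spreadsheet.
# "kind" says whether the limit is an upper bound (max) or a lower bound (min).
CSV_TEXT = """test,metric,measured,limit,kind
dma_read,read_latency_cycles,118,120,max
dma_write,bandwidth_gbps,11.2,12.0,min
"""


def find_failures(csv_text):
    """Return (test, metric, measured, limit) for every row that misses its limit."""
    failures = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        measured = float(row["measured"])
        limit = float(row["limit"])
        if row["kind"] == "max":
            ok = measured <= limit
        elif row["kind"] == "min":
            ok = measured >= limit
        else:
            raise ValueError(f"unknown limit kind: {row['kind']!r}")
        if not ok:
            failures.append((row["test"], row["metric"], measured, limit))
    return failures


print(find_failures(CSV_TEXT))
# [('dma_write', 'bandwidth_gbps', 11.2, 12.0)]
```

Keeping the limit and its direction next to each measurement lets the same script serve every test case in the framework without per-metric code.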

Role of Monitors

Monitors play different roles, with some designed for transaction-level debugging and others focused solely on performance validation. The distinction is crucial.

In performance validation, the design clocks must remain running without interruption. The parameters analyzed are typically few and centered on transaction counts and timing, such as read latency, the gap between two reads, read-after-write timing, and other performance indicators. Monitors check these metrics on the bus to assess performance.

In contrast, transaction-level debugging involves snooping the entire bus and tracking each transaction, often causing delays. For performance validation, the analysis is more streamlined, focusing on gaps between transactions, response latency, bandwidth usage, throttling, and backpressure management.
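The streamlined metrics described above can be computed from a small per-transaction record. The sketch below assumes a hypothetical monitor log that stores only request cycle, response cycle, and byte count for each read. It also assumes that "gap" means request-to-request spacing and that the clock runs at 1 GHz. None of these names or values come from a specific monitor or tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ReadTxn:
    request_cycle: int   # cycle on which the read request was observed
    response_cycle: int  # cycle on which the last data beat was returned
    num_bytes: int       # payload size of the read


@dataclass(frozen=True)
class ReadSummary:
    mean_latency_cycles: float
    max_latency_cycles: int
    mean_gap_cycles: Optional[float]  # None when there is only one read
    bandwidth_bytes_per_s: float


def summarize_reads(txns, clock_hz):
    """Reduce a list of read records to latency, gap, and bandwidth figures."""
    if not txns:
        raise ValueError("need at least one transaction")
    ordered = sorted(txns, key=lambda t: t.request_cycle)
    latencies = [t.response_cycle - t.request_cycle for t in ordered]
    # Gap is taken here as the spacing between consecutive requests (an assumption).
    gaps = [b.request_cycle - a.request_cycle for a, b in zip(ordered, ordered[1:])]
    # Measurement window: first request to last response, in cycles.
    window = max(t.response_cycle for t in ordered) - ordered[0].request_cycle
    if window <= 0:
        raise ValueError("measurement window must span at least one cycle")
    total_bytes = sum(t.num_bytes for t in ordered)
    return ReadSummary(
        mean_latency_cycles=sum(latencies) / len(latencies),
        max_latency_cycles=max(latencies),
        mean_gap_cycles=sum(gaps) / len(gaps) if gaps else None,
        bandwidth_bytes_per_s=total_bytes * clock_hz / window,
    )


# Hypothetical capture: four 64-byte reads.
log = [ReadTxn(0, 20, 64), ReadTxn(10, 30, 64), ReadTxn(30, 54, 64), ReadTxn(60, 80, 64)]
summary = summarize_reads(log, clock_hz=1e9)
print(summary.mean_latency_cycles, summary.mean_gap_cycles, summary.bandwidth_bytes_per_s)
```

Because each record holds only three integers, a monitor of this kind has far less to store and move than a full bus trace, which is consistent with the requirement that the design clocks keep running during measurement.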

Performance Validation: The Role of HAV

Meeting verification requirements under realistic stress conditions necessitates the use of HAV platforms instead of traditional RTL simulation. Unlike RTL simulations, which are slow and impractical for running actual performance traffic, HAV platforms offer an efficient solution for thorough stress testing and performance measurement. HAV makes it possible to measure performance under real-world software and workload conditions.

In addition to supporting software bring-up and system validation, HAV platforms are increasingly driven by the need for precise power and performance analysis. HAV excels in measuring critical metrics like throughput and latency by counting clock cycles with exact precision. Testing these metrics requires real-world application payloads, as simple directed test cases are insufficient. A high-speed system is essential to execute complex application workloads effectively, ensuring meaningful and reliable performance evaluations.

To meet these demands, up to one-third of HAV resources are often dedicated solely to performance measurement. This allocation highlights the pivotal role HAV platforms play in modern performance validation, enabling engineers to address the complexities of today’s high-performance systems.

Performance Validation at the Firmware Layer

HAV plays a crucial role in performance tuning at the firmware level, an area gaining significant attention. For example, SSD providers [1] frequently release updated firmware specifically designed to enhance performance, leveraging HAV to ensure these improvements are both measurable and reliable.

Firmware testing on actual silicon has become a vital part of performance validation frameworks. A main reason for adopting HAV is to enable the execution of firmware, which is not feasible in traditional simulation environments. The faster the validation platform, the better the ability to run real firmware effectively.

Running real firmware requires a PHY (physical layer). While PHY models do not include the full physical/analog properties, they facilitate the optimization of register access and other firmware interactions that impact performance. This early tuning can have a significant effect on overall system performance and efficiency.

The primary purpose of using a PHY model is to optimize firmware performance in conjunction with pre-silicon RTL, not to modify the RTL itself. This approach shifts the focus from measuring and validating the design’s performance to evaluating and enhancing system performance, including firmware, before chip release. This shift-left strategy allows teams to begin performance optimization much earlier in the development cycle.

Getting to the best performance

As outlined above, achieving optimal performance involves two key steps.

First, defining the optimal architecture to meet performance goals. This requires approximately timed models and a simulation and analysis framework that supports modeling and rapid simulation of various architectures and scenarios. Synopsys Platform Architect has become the industry-standard tool for this task, offering robust capabilities for exploring and optimizing system-level architectures.

Second, performance validation through emulation. The Synopsys ZeBu product family is critical for this stage, providing the fastest emulation performance available. Additionally, its extensive support for performance validation solutions enables both internal and external measurements while ensuring the ability to run accurate models of Synopsys PHY IPs. These capabilities are instrumental in delivering high-confidence validation results.

The question isn’t whether design teams should use these advanced tools; it’s how much time and resources they should invest in them. Greater investment in pre-silicon performance analysis and validation significantly increases the likelihood of delivering silicon with highly differentiated capabilities.

Read Part 1 of this series – Essential Performance Metrics to Validate SoC Performance Analysis

References

1: Update the firmware of your Samsung SSD
