Deep learning (DL) has become the oracle of our age: the universal technology we turn to for answers to almost any hard problem. This is not surprising; its strengths in image and speech recognition, language processing and multiple other domains amaze and shock us, to the point that we’re now debating AI singularities. But given a little historical and engineering perspective, we should remember that anything we have ever invented, however useful, has always been incremental and bounded. Only our imagination is unbounded, and it readily asks: if DL can do some things we can do, why should it not be able to do everything we can do and more?

So it should not come as a surprise that DL also has feet of clay. A detailed paper from NYU spells this out in ten carefully elaborated limitations of the method. The author, Gary Marcus, is somewhat uniquely positioned to write this review since he is a professor of psychology and researches both natural and artificial intelligence. Lest you think that means he has only a theoretical understanding of AI, he also founded his own machine learning company, later acquired by Uber. I briefly summarize his points below, noting his caveat that all of them reflect what we know today. Advances can of course reduce or even eliminate some limits, but the overall impression is that the big wins in DL may be behind us.

In his view, the convolutional neural network (CNN) approach is simply too data-hungry, not just in recognition but also in generalization, requiring exponentially increasing amounts of training data to generalize even at relatively basic levels. DL as currently understood is ultimately a statistical modeling method: it can map new data that falls within the bounds of the data it has seen, but it cannot reliably map data outside that set, and in particular it cannot generalize or abstract.
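To make the interpolation-versus-extrapolation point concrete, here is a toy sketch of my own, not taken from Marcus’s paper. As a deliberate simplification, a 1-nearest-neighbour regressor stands in for a trained network, and the hidden rule y = x² and the training range [0, 1] are arbitrary assumptions chosen for illustration. Within the range it has seen the model does well; outside it, the model can only echo the nearest example it remembers.

```python
"""Toy illustration: a purely statistical fit interpolates well but
extrapolates badly. A 1-nearest-neighbour regressor stands in for a
trained network here (an illustrative assumption, not a DL model)."""


def true_fn(x: float) -> float:
    """The hidden rule the model is supposed to learn: y = x**2."""
    return x * x


# Training data covers only the interval [0, 1], in steps of 0.05.
train_x = [i / 20 for i in range(21)]
train_y = [true_fn(x) for x in train_x]


def predict(x: float) -> float:
    """Return the label of the closest training point (1-NN regression)."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]


def abs_error(x: float) -> float:
    """Absolute gap between the model's prediction and the true rule."""
    return abs(predict(x) - true_fn(x))


if __name__ == "__main__":
    for x in (0.52, 0.9, 2.0, 3.0):
        print(f"x={x:4}: predicted={predict(x):.3f} "
              f"true={true_fn(x):.3f} error={abs_error(x):.3f}")
```

Running it gives small errors at x = 0.52 and x = 0.9, but errors of 3 and 8 at x = 2 and x = 3, where the model can only repeat the value it learned at x = 1. A real network extrapolates more smoothly than this caricature, but it is likewise unconstrained by any data outside its training range.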

Despite the name, DL is relatively shallow and brittle. Game-playing examples with Atari Breakout seem to show DL achieving amazing results, such as learning to dig a tunnel through a wall, but further research shows it learned no such thing. Success rates drop dramatically under minor variations to the scenario, such as moving the location of a wall or changing the height of the paddle. DL didn’t infer a general solution, and what it did infer breaks down quickly. On a lighter note, the British police hoped to use AI to detect porn on seized computers, but it kept mistaking sand dunes for nudes, rather a problem since default screensavers often include pictures of deserts. More troubling, there are now numerous reports of how easily DL can be fooled by changing individual pixels.

DL has no obvious way to handle hierarchy, a significant limitation in natural language processing or any other function where understanding requires recognizing sub-components and sub-sub-components and how these fit together in a larger context. Understanding hierarchy is also important for abstraction and generalization. DL’s understanding, however, is flat. Recurrent neural networks (RNNs) have made some improvements, but research last year showed that they fall apart rather quickly when tested against modest deviations from the training set.

DL can’t handle open-ended inference. In reading comprehension, drawing conclusions by combining inferences from multiple sentences is still a research problem. Inference that depends on even basic commonsense knowledge not stated anywhere in the text is harder still. Yet both are fundamental to broader understanding, at least to understanding we would consider useful. He offers a few examples that would present no problem to us but are far outside the capabilities of DL today: Who is taller, Prince William or his baby son Prince George? Can you make a salad out of a polyester shirt? If you stick a pin into a carrot, does it make a hole in the carrot or in the pin?

DL is not sufficiently transparent; this is a well-known problem. When a DL system identifies something, the reason for the match is buried in thousands or perhaps millions of low-level parameters, with no obvious way to extract a high-level chain of reasoning that explains it. That may not matter when matching dog breeds, but when outcomes are critical, as in medical diagnosis, not knowing why isn’t good enough, especially given the brittleness of identification noted earlier. Progress is being made, but it is still at a very early stage, and it is unclear how deterministic such explanations can ultimately be.

Generalizing an earlier point, the DL training approach provides no method to incorporate prior knowledge such as the physics of a situation (and a lot of research apparently even actively avoids doing so). All the work the author is aware of operates on a bounded set of data with no external inputs. If we as a species still worked that way, we would still be banging rocks together. Our intelligence depends heavily on accumulated prior knowledge.

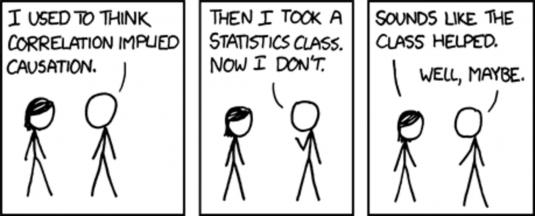

One problem in DL could equally be attributed to humans (and to Big Data, while we’re at it): an inability to distinguish between correlation and causation. DL is a statistical fitting mechanism. If variables are correlated, they are correlated and nothing more. That doesn’t mean one caused the other; the correlation might arise through hidden variables or over-constraints. However, understanding what might be a true cause is fundamental to intelligence, at least if we want AI to do better than some of us.
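A few lines of simulation show how a hidden variable alone can manufacture a strong correlation. This is my own illustrative sketch, not from the paper; the variable names, noise levels and seed are arbitrary assumptions. A hidden cause `z` drives both `x` and `y`, neither of which influences the other. A purely statistical fitter sees a correlation of about 0.8 between `x` and `y`, yet setting `x` ourselves, independently of `z`, leaves `y` essentially uncorrelated with it.

```python
import random
import statistics
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    vx = sum((a - mx) ** 2 for a in xs)
    vy = sum((b - my) ** 2 for b in ys)
    return cov / (vx * vy) ** 0.5


rng = random.Random(42)  # fixed seed so the run is reproducible
N = 10_000

# Assumed model: hidden common cause z; x and y each depend only on z
# plus independent noise. Neither x nor y causes the other.
z = [rng.gauss(0.0, 1.0) for _ in range(N)]
x = [zi + rng.gauss(0.0, 0.5) for zi in z]
y = [zi + rng.gauss(0.0, 0.5) for zi in z]

# Observational correlation: theory gives Var(z) / (Var(z) + 0.5**2) = 0.8.
r_observed = pearson(x, y)

# Intervention: set x ourselves, independently of z. Under this model y
# does not depend on x, so y's values are unchanged by the intervention.
x_set = [rng.gauss(0.0, 1.0) for _ in range(N)]
r_intervened = pearson(x_set, y)

# Removing z's contribution (possible only because we built the data)
# leaves two independent noise terms, so their correlation is near zero.
r_residual = pearson([a - c for a, c in zip(x, z)],
                     [b - c for b, c in zip(y, z)])

if __name__ == "__main__":
    print(f"corr(x, y)           = {r_observed:.3f}")
    print(f"corr(x set by us, y) = {r_intervened:.3f}")
    print(f"corr(x, y) given z   = {r_residual:.3f}")
```

Under these assumptions the first figure comes out near 0.8 and the other two near zero. Observational (x, y) data alone would look much the same if `x` really did cause `y`, which is why a purely statistical fitter cannot settle the question.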

There is no evidence that DL can handle evolving conditions; in fact, there is a cautionary counter-example. Google Flu Trends aimed to predict flu activity from Google searches on flu-related topics. Famously, while it did well for a couple of years, it missed the peak of the 2013 outbreak. Conditions evolve, and viruses evolve. As far as I know, Google Flu Trends didn’t use DL, but there’s no obvious reason why a dataset for a problem in this general (evolving) class would not be used for DL training. Self-training (after bootstrapping from a labeled training set) may help here, but it can’t model disruptive changes without also considering external factors of which even we may be unaware.

Prof. Marcus closes his ten points (which I have compressed) with the assertion that DL still doesn’t rise to what we would normally consider a reliable engineering discipline, in which we construct simple systems with reliable performance guarantees and then build from them more complex systems for which we can also derive reliable performance guarantees. It’s still arguably more art than science: not quite out of the lab in terms of robustness, debuggability and replicability, and not easily transferred to broader application and maintenance by anyone other than experts.

None of which is to say that Prof. Marcus disapproves of DL. In his view it is a solid statistical technique for capturing information from large data sets and drawing approximate inferences about new data within the domain of those sets. Where things go wrong is when we try to over-extend this “intelligence” to meet larger expectations of intelligence. His biggest fear is that AI investment could become trapped in over-reliance on DL, when other, apparently riskier directions should be pursued just as actively. As a physicist, I am reminded of string theory, which became just such a trap for fundamental physics for many years. String theory is still vibrant, as DL will continue to be, but happily physics seems to have broken free of the idea that it must be the foundation for everything. Hopefully we’ll learn that lesson rather more quickly in AI.
