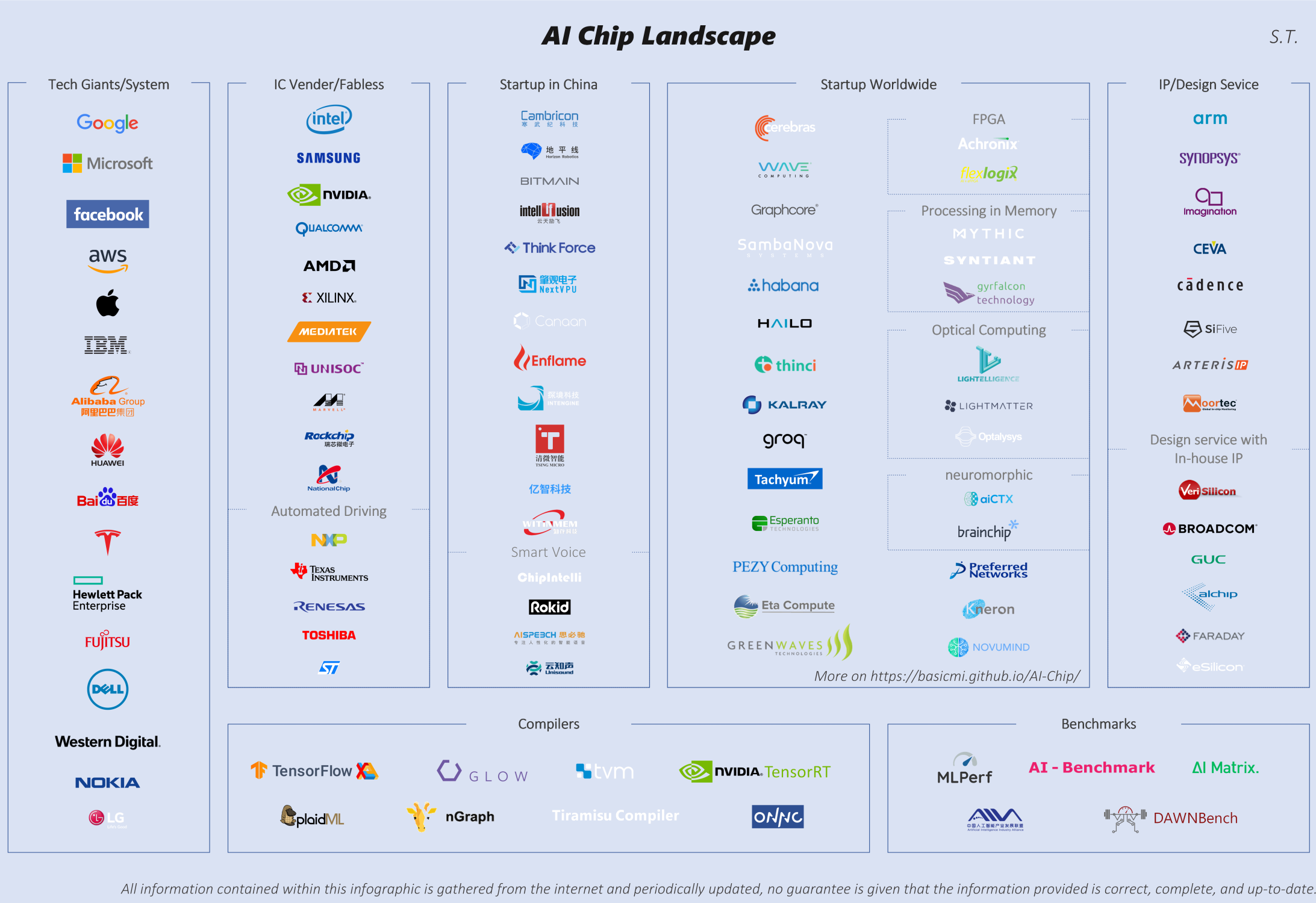

It’s been more than two years since I started the AI chip list. Since then, we have seen a lot of AI chip news from tech giants, IC/IP vendors and a huge number of startups. I now have a new “AI Chip Landscape” infographic and dozens of AI chip related articles (in Chinese, sorry about that :p).

At this moment, I’d like to share some of my observations.

First and foremost, there is no longer any need to argue about the necessity of dedicated AI hardware acceleration. I believe that, in the future, basically all chips will include some AI acceleration design. The only difference is how much die area you devote to AI. That is why almost all of the traditional IC/IP vendors appear in the list.

It has become common practice for non-traditional chip makers to design their own AI chips, and they are showing their special strengths in more and more cases. Tesla’s FSD chip can be highly customized to the company’s own algorithms, which evolve constantly with the “experience” of millions of cars on the road and are reinforced by Tesla’s strong HW/SW system teams. How do others compete with them? Google, Amazon, Facebook, Apple and Microsoft work in a similar way, with real-world requirements, the best understanding of their application scenarios, strong system engineering capabilities, and deep pockets. Their chips naturally have a better chance of succeeding. How do traditional chip makers and chip startups compete with them? I think these are the key questions that will shape the future of the industry.

A huge number of AI chip startups have emerged, outnumbering any other segment at any other time in the IC industry. Although the pace has slowed down a bit, we still hear fundraising news from time to time. The first wave of startups is now moving from showing off architecture innovations to fighting in the real world to win customers with first-generation chips and toolchains. For latecomers, “being different” is getting harder and harder. Companies that use emerging technologies, such as in-memory computing/processing, analog computing, optical computing and neuromorphic computing, get attention more easily, but they have a long way to go before they can productize their concepts. In the list, you can see that even these new areas already have multiple players. Another type of differentiation is to provide a vertical solution instead of just the chip. But if the technical challenge of the vertical application is small, the differentiation advantage is also small; and for more difficult applications, such as autonomous driving, the challenge is that the development requires mobilizing a large amount of resources. Either way, the startups have to fight for their futures. Still, given the potential use of AI almost everywhere, the bet is worth making.

“Hardware is easy, software is much more difficult” is something we all agree on now. The toolchain that comes with the chip is the biggest headache, and it is also where much of the value lies. In their talks, AI chip vendors spend more and more time introducing their software solutions. Moreover, the optimization of software tools is basically endless. Once you have a single-core toolchain, you need to start thinking about multi-core, multi-chip and multi-board solutions; once you have a compiler or library for neural networks, you have to think about how to optimize non-NN algorithms in a heterogeneous system for more complex applications. On the other hand, this is not purely a software problem. You need to find the best tradeoff between software and hardware to get optimized results. Since last year, we have seen more and more people working on frameworks and compilers for optimization, especially on the compiler side. My optimistic estimate is that the compiler for just the NN part will be mature and stable within a year. But the other issues I mentioned above will require continuous effort and a much longer time.

Among the benchmarks for AI hardware, the most solid work we have seen is MLPerf (which recently released its Inference benchmark), with most of the important players joining. The problem with MLPerf is that the deployment effort is not trivial. Only a few large companies have submitted training results. I am looking forward to more results in the coming months. At the same time, AI-Benchmark from ETH Zurich has gained attention. It is similar to a traditional mobile phone benchmark, using the results of running applications to score a chip’s AI capability. Although it is not fully fair and accurate, deployment is simple and it already covers most Android phone chips.

The AI chip race is a major force driving the “Golden Age of Architecture”. Many attempts that failed 20 or 30 years ago now seem worth revisiting. The most successful story is the systolic array, which is used in Google’s TPU. For many of the others, however, we have to be cautious and ask: “Has the problem that caused the failure in the past really gone away today?”
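For readers who have not met the systolic array before, here is a minimal Python sketch of the idea. It is only an illustration under my own assumptions: the function name `systolic_matmul` is made up, the code models an output-stationary wavefront schedule (each processing element keeps one output value and accumulates into it), and it is not a description of how the TPU is actually implemented. It does not model the registers that pass operands between neighbours; it only reproduces which operand pair reaches which processing element on which cycle.

```python
def systolic_matmul(a, b):
    """Multiply a (n x k) by b (k x m) following a systolic wavefront schedule.

    Illustrative model only: processing element (i, j) owns output c[i][j].
    Row i of `a` enters from the left delayed by i cycles, and column j of
    `b` enters from the top delayed by j cycles, so the operand pair
    (a[i][s], b[s][j]) meets at PE (i, j) on cycle t = s + i + j.
    """
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions of a and b do not match")

    acc = [[0] * m for _ in range(n)]  # one accumulator per PE
    cycles = n + m + k - 2             # last pair arrives on cycle n+m+k-3

    for t in range(cycles):
        for i in range(n):
            for j in range(m):
                s = t - i - j          # index of the operand pair arriving now
                if 0 <= s < k:         # otherwise this PE is idle this cycle
                    acc[i][j] += a[i][s] * b[s][j]
    return acc, cycles


if __name__ == "__main__":
    result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(result, cycles)
```

The point of the sketch is that each operand is fetched once and then reused as it moves across the array, which is why the structure suits matrix-heavy neural network workloads.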

As in other segments of the AI industry, the speed at which academic work is adopted by the business world is unprecedented. The good news is that new technologies can get into products faster and researchers can be rewarded more quickly, which could be a great boost to innovation. In most cases, academic research focuses on a breakthrough at a single point. However, implementing a chip and its solutions requires a huge amount of engineering work, so the distance between the two is actually very large. Nowadays, people prefer to talk about innovation at a single point and neglect (maybe intentionally) the “dirty work” required to implement the product. This could be dangerous for both investors and innovators.

Last but not least, one interesting observation is that the AI chip boom is significantly driving the development of related areas, such as EDA/IP, design services, foundries, and many others. Progress in areas like new types of memory, packaging (chiplets) and on-chip/off-chip networks is speeding up, which may eventually lead to the next round of exciting AI chip innovations.
