Overview

High Bandwidth Memory (HBM) is a high-performance RAM interface designed to deliver extremely wide I/O at low power. It is primarily used in high-end GPUs, AI accelerators, supercomputers, and advanced FPGAs. HBM achieves high memory bandwidth by stacking multiple DRAM dies vertically, connecting them with Through-Silicon Vias (TSVs) and microbumps, and placing the stacks in the same package as the processor die, usually side by side on a 2.5D silicon interposer, with 3D stacking on top of logic emerging as an option.

Technology Background

Key Features

- Wide I/O bus: 1024 data bits per stack (2048 in HBM4), far wider than conventional DRAM interfaces.
- Low power per bit transferred: Short TSV and interposer connections, combined with modest per-pin data rates, keep the energy per bit low.
- Small physical footprint: Dies are stacked vertically and placed in-package, reducing PCB real estate.
- 2.5D/3D integration: Mounted next to SoCs on silicon interposers, or bonded directly using hybrid bonding techniques.

Memory Stack Structure

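HBM stacks are built from multiple DRAM dies (typically 4, 8, or 12, with 16-Hi stacks emerging) placed on a base logic die and linked by TSVs. The stack's interface is divided into independent channels (8 in HBM and HBM2, 16 in HBM3, 32 in HBM4), each of which can be further split into pseudo-channels, enabling parallel data access.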

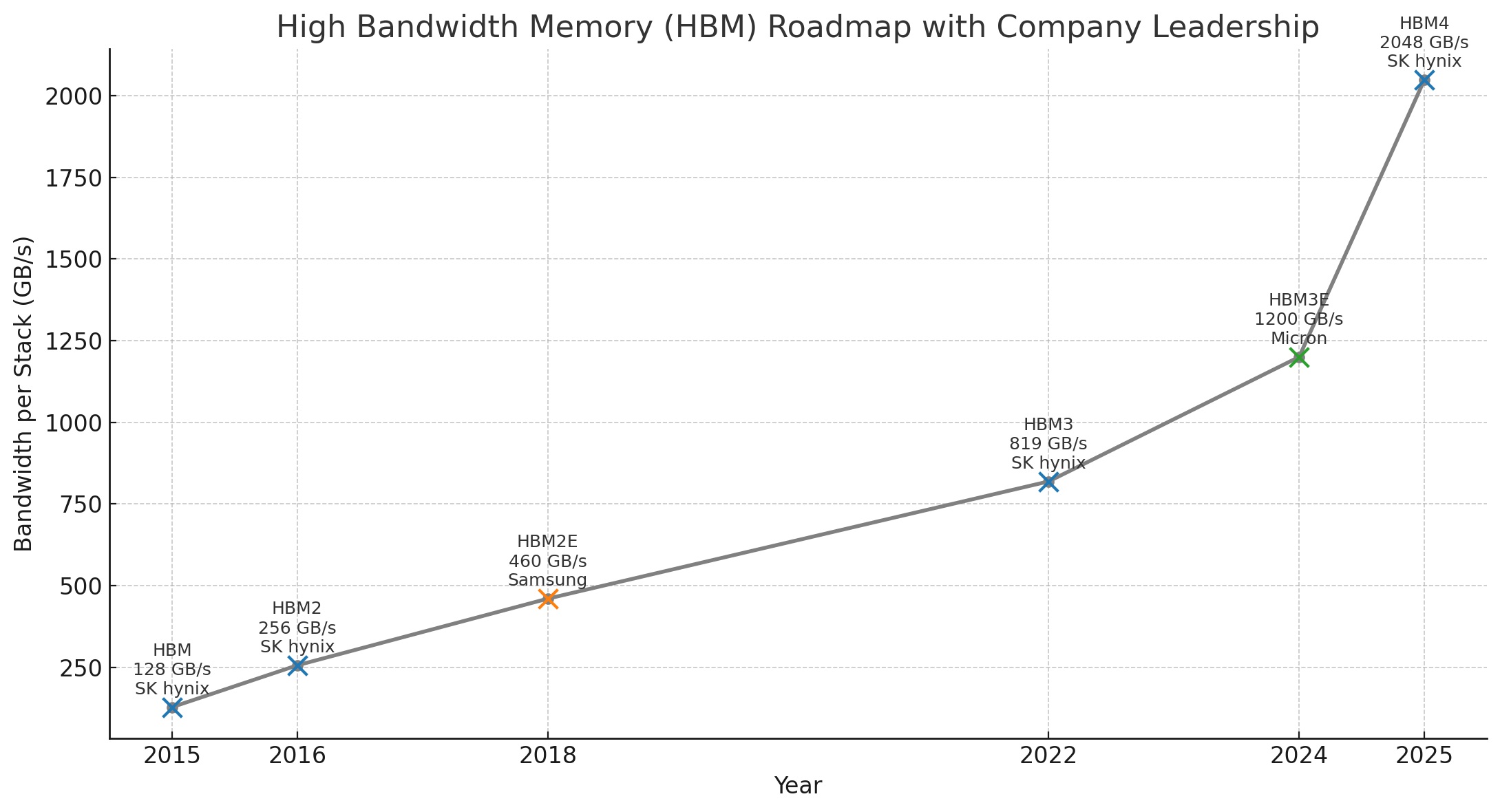
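To make the channel organization concrete, the following minimal sketch uses the commonly cited channel counts (8 for HBM2, 16 for HBM3, 32 for HBM4, each channel split into two pseudo-channels). The `HbmInterface` class, the `channel_for_address` helper, the 256-byte interleave granule, and the plain round-robin mapping are all hypothetical; real memory controllers use their own address hashing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HbmInterface:
    """Channel organization of one HBM stack (commonly cited per-generation figures)."""
    name: str
    io_width_bits: int           # total data I/O width per stack
    channels: int                # independent channels per stack
    pseudo_per_channel: int = 2  # pseudo-channels per channel

    @property
    def channel_width_bits(self) -> int:
        return self.io_width_bits // self.channels

    @property
    def pseudo_channels(self) -> int:
        return self.channels * self.pseudo_per_channel

    @property
    def pseudo_channel_width_bits(self) -> int:
        return self.channel_width_bits // self.pseudo_per_channel


def channel_for_address(addr: int, iface: HbmInterface, granule_bytes: int = 256) -> tuple[int, int]:
    """Map an address to (channel, pseudo-channel) by round-robin interleaving.

    Hypothetical scheme: consecutive 256-byte granules go to consecutive
    pseudo-channels so that sequential traffic spreads across the whole stack.
    """
    pc = (addr // granule_bytes) % iface.pseudo_channels
    return pc // iface.pseudo_per_channel, pc % iface.pseudo_per_channel


HBM2 = HbmInterface("HBM2", 1024, 8)
HBM3 = HbmInterface("HBM3", 1024, 16)
HBM4 = HbmInterface("HBM4", 2048, 32)

for gen in (HBM2, HBM3, HBM4):
    print(f"{gen.name}: {gen.channels} x {gen.channel_width_bits}-bit channels, "
          f"{gen.pseudo_channels} x {gen.pseudo_channel_width_bits}-bit pseudo-channels")

# Sequential 256-byte granules land on different pseudo-channels.
print([channel_for_address(a, HBM3) for a in (0, 256, 512, 768)])
```

Interleaving at a small granule lets a single streaming access keep many channels busy at once, which is where the parallelism of the stack comes from.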

Evolution of HBM Standards

| Generation | Year | Bandwidth/Stack | I/O | Voltage | Max Stack Height (dies) | Notes |
|---|---|---|---|---|---|---|
| HBM (1.0) | 2013–2015 | ~128 GB/s | 1024 bits | 1.2V | 4 | Used in AMD Fury X |
| HBM2 | 2016 | ~256 GB/s | 1024 bits | 1.2V | 8 | Used in NVIDIA V100 |
| HBM2E | 2018–2020 | ~460 GB/s | 1024 bits | 1.2V | 8 | Higher frequency |
| HBM3 | 2022 | ~819 GB/s | 1024 bits | ~1.1V | 12 | Used in NVIDIA H100 |
| HBM3E | 2024 | ~1.2 TB/s+ | 1024 bits | ~1.1V | 12 | Higher per-pin speed (~8–9.2+ Gbps) |
| HBM4 | 2025–2026 | ≥2 TB/s | 2048 bits | ~1.1V | 12+ | Finer 1β/1c DRAM nodes; hybrid bonding expected for taller stacks |
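Each bandwidth figure in the table follows from the interface width multiplied by the per-pin data rate. The sketch below assumes representative per-pin rates (1.0, 2.0, 3.6, 6.4, 9.2, and 8.0 Gb/s); shipping parts vary by vendor and speed bin, and the `GENERATIONS` table and `stack_bandwidth_gbs` function are illustrative names.

```python
# Peak per-stack bandwidth = I/O width (bits) x per-pin rate (Gb/s) / 8 bits per byte.
# Per-pin rates are assumed representative values, not per-product specifications.
GENERATIONS = {
    # name: (io_width_bits, pin_rate_gbps)
    "HBM": (1024, 1.0),
    "HBM2": (1024, 2.0),
    "HBM2E": (1024, 3.6),
    "HBM3": (1024, 6.4),
    "HBM3E": (1024, 9.2),
    "HBM4": (2048, 8.0),
}


def stack_bandwidth_gbs(io_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth of one stack in GB/s (decimal gigabytes)."""
    return io_width_bits * pin_rate_gbps / 8


for name, (width, rate) in GENERATIONS.items():
    print(f"{name:6s} {width:5d} bits x {rate:4.1f} Gb/s -> "
          f"{stack_bandwidth_gbs(width, rate):7.1f} GB/s")
```

These are theoretical peaks that assume every pin transfers data continuously; sustained bandwidth is lower once refresh and command overheads are counted.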

Industry Players

SK hynix

- Market leader in HBM2, HBM3, and first to sample HBM4 to NVIDIA in 2025.
- Supplies HBM to major AI clients (NVIDIA Rubin, Microsoft Azure).
- Strong CoWoS and 2.5D integration experience.

Samsung

- Second-largest supplier, producing HBM2E, HBM3, and HBM3E, and developing 12‑Hi HBM4.
- Focuses on combining 1c DRAM with hybrid bonding for future 3D-ICs.
- Partners with AMD, Broadcom, and others.

Micron Technology

- Entered HBM with HBM2E, gained traction with HBM3E in 2023.
- Sampling 36GB HBM4 (12‑Hi) with 2 TB/s bandwidth as of mid-2025.
- Targeting hyperscale clients with a new Cloud Memory Business Unit.

Advanced Packaging Technologies

HBM typically uses advanced packaging platforms for integration:

- 2.5D Interposer: DRAM stacks and SoC mounted side by side on a silicon interposer.
- CoWoS®: TSMC’s interposer-based solution for AI and HPC.
- SoIC®: TSMC’s 3D hybrid-bonding technology, a potential path to stacking DRAM directly atop the SoC.
- EMIB: Intel’s bridge-based 2.5D interconnect, an alternative to full silicon interposers.

Use Cases & Applications

- AI/ML Accelerators: NVIDIA H100/H200, AMD Instinct MI300, Google TPU, AWS Trainium.
- GPUs: High-end consumer and data center GPUs (e.g., AMD Vega, NVIDIA A100).
- Supercomputing: Systems like Frontier, Aurora, and upcoming exascale projects.
- Networking & FPGAs: Used in high-throughput networking, 5G baseband processing, and smart NICs.
- Hyperscalers: Meta, Microsoft, Amazon, and Google use HBM in custom AI silicon.
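Accelerators reach their headline memory figures by placing several stacks around the compute die, so capacity and bandwidth scale with stack count. The sketch below sizes a hypothetical package of eight HBM3E 12-Hi stacks built from 24 Gbit dies running at 9.2 Gb/s per pin; 12 such dies give 36 GB per stack, the same capacity as the 12-Hi HBM4 parts mentioned above. The function names and package configuration are illustrative, not a description of any specific product.

```python
def stack_capacity_gb(dies: int, die_density_gbit: int) -> float:
    """Capacity of one stack in GB: number of DRAM dies x per-die density (Gbit) / 8."""
    return dies * die_density_gbit / 8


def accelerator_memory(stacks: int, dies: int, die_density_gbit: int,
                       io_width_bits: int, pin_rate_gbps: float) -> tuple[float, float]:
    """Aggregate (capacity in GB, peak bandwidth in TB/s) for identical HBM stacks."""
    capacity_gb = stacks * stack_capacity_gb(dies, die_density_gbit)
    bandwidth_tbs = stacks * io_width_bits * pin_rate_gbps / 8 / 1000
    return capacity_gb, bandwidth_tbs


# Hypothetical package: 8 x HBM3E 12-Hi stacks, 24 Gbit dies, 9.2 Gb/s per pin.
cap, bw = accelerator_memory(stacks=8, dies=12, die_density_gbit=24,
                             io_width_bits=1024, pin_rate_gbps=9.2)
print(f"{cap:.0f} GB, {bw:.2f} TB/s")
```

Shipping products may disable a stack or run below peak pin rates, so real figures are usually somewhat lower than this upper bound.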

Challenges and Trends

1. Cost & Yield

- HBM is significantly more expensive than GDDR due to TSV processing, interposer cost, and assembly complexity.
- Yields are sensitive to die defects, especially at higher stack counts (e.g., 12‑Hi).
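Why taller stacks are harder to yield can be illustrated with a simplified compound-yield model: a stack is good only if every DRAM die and every die-to-die bond is good, so yield falls geometrically with height. This model is an assumption for illustration; it ignores known-good-die testing, repair and redundancy, and the base die, and the per-die and per-bond yields used here are hypothetical.

```python
def stack_yield(die_yield: float, bond_yield: float, dies: int) -> float:
    """Simplified compound yield of one stack.

    Assumes independent failures: all `dies` DRAM dies and all (dies - 1)
    die-to-die bonding interfaces must be defect-free.
    """
    return die_yield ** dies * bond_yield ** (dies - 1)


# Hypothetical yields chosen only to show the trend with stack height.
for height in (4, 8, 12, 16):
    print(f"{height:2d}-Hi: {stack_yield(0.99, 0.995, height):.3f}")
```

Even with high per-die and per-bond yields, the losses compound with every added layer, which is why pre-testing dies and improving bonding processes matter more as stacks grow taller.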

2. Thermal Management

- High bandwidth and dense stacking increase heat density.
- Thermal interface materials (TIM) and top-side cooling are critical.
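A back-of-envelope estimate of heat density divides the power spent moving data by the stack's footprint. The sketch assumes an access energy of about 3.5 pJ/bit and an 11 mm × 11 mm footprint. Both values are illustrative placeholders, not datasheet figures, and in reality part of the interface power is dissipated in the SoC's PHY rather than in the stack.

```python
def interface_power_w(bandwidth_gbs: float, energy_pj_per_bit: float) -> float:
    """Power spent moving data: bits per second x joules per bit."""
    bits_per_s = bandwidth_gbs * 1e9 * 8
    return bits_per_s * energy_pj_per_bit * 1e-12


def power_density_w_per_mm2(power_w: float, footprint_mm2: float) -> float:
    """Average heat flux through the stack footprint."""
    return power_w / footprint_mm2


# Hypothetical inputs: one stack at ~819.2 GB/s, ~3.5 pJ/bit, 11 mm x 11 mm footprint.
p = interface_power_w(bandwidth_gbs=819.2, energy_pj_per_bit=3.5)
print(f"{p:.1f} W, {power_density_w_per_mm2(p, 11 * 11):.3f} W/mm^2")
```

Because the footprint stays fixed while bandwidth and stack height grow, the same energy per bit produces proportionally more heat in the same area, which is what makes TIM and top-side cooling so important.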

3. Hybrid Bonding & 3DIC

- Future versions (HBM4 and beyond) are expected to adopt hybrid bonding to reduce interconnect pitch and power.
- The industry is increasingly moving toward vertical SoC-to-memory stacking (true 3DIC).
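The benefit of a finer interconnect pitch is quadratic: on a square grid, connections per unit area scale with 1/pitch². The sketch compares assumed pitches of roughly 40 µm for microbumps and 10 µm for hybrid bonding; these are illustrative round numbers, not values from a particular process.

```python
def connections_per_mm2(pitch_um: float) -> float:
    """Connections per square millimetre for a square grid at the given pitch (micrometres)."""
    per_mm = 1000.0 / pitch_um  # connections along one millimetre
    return per_mm * per_mm


# Illustrative pitches (assumed): ~40 um microbumps vs ~10 um hybrid bonds.
for label, pitch in (("microbump", 40.0), ("hybrid bond", 10.0)):
    print(f"{label:11s} {pitch:4.0f} um -> {connections_per_mm2(pitch):8.0f} /mm^2")
```

A 4× reduction in pitch yields 16× more connections in the same area, leaving room for wider interfaces or more power and ground connections.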

HBM4 and the Future

- HBM4 represents a major leap: a 2048-bit interface, per-pin speeds of roughly 6–9 Gbps, and over 2 TB/s per stack at the upper end of that range.
- It is expected to power next-gen AI platforms, including NVIDIA Rubin, AMD Instinct MI400, and custom silicon from Google, Amazon, and Microsoft.
- HBM5 is already being discussed, possibly arriving after 2027, with an emphasis on stacked compute-memory and even higher efficiency.
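With a 2048-bit interface, the quoted 6–9 Gbps range corresponds to roughly 1.5–2.3 TB/s per stack. The inverse calculation shows the per-pin rate needed for the 2 TB/s mark. This is peak-rate arithmetic only, and the function names are illustrative.

```python
IO_WIDTH_BITS = 2048  # HBM4 data interface width per stack


def bandwidth_tbs(pin_rate_gbps: float, width_bits: int = IO_WIDTH_BITS) -> float:
    """Peak per-stack bandwidth in TB/s (decimal terabytes)."""
    return width_bits * pin_rate_gbps / 8 / 1000


def pin_rate_for(target_tbs: float, width_bits: int = IO_WIDTH_BITS) -> float:
    """Per-pin rate (Gb/s) needed to reach a target per-stack bandwidth."""
    return target_tbs * 1000 * 8 / width_bits


print(f"6 Gb/s -> {bandwidth_tbs(6.0):.3f} TB/s, 9 Gb/s -> {bandwidth_tbs(9.0):.3f} TB/s")
print(f"2 TB/s needs >= {pin_rate_for(2.0):.4f} Gb/s per pin")
```

Doubling the width lets HBM4 pass 2 TB/s at about 7.8 Gb/s per pin, a rate HBM3E already exceeds over its 1024-bit interface, so most of the gain comes from width rather than signaling speed.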

Moore’s Law Wiki