Summary

-

DDR (DDR5 today): General-purpose system memory for PCs/servers; balances bandwidth, capacity, cost, and upgradability.

-

LPDDR (LPDDR5X → LPDDR6): Mobile/embedded DRAM optimized for energy efficiency with high burst bandwidth; soldered, not upgradeable.

-

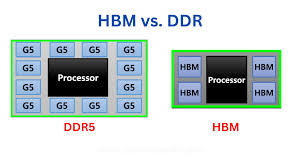

HBM (HBM3/3E → HBM4): 3D-stacked, ultra-wide interfaces for extreme bandwidth per package; used in AI/HPC accelerators with 2.5D/3D packaging.

Quick comparison (2025 snapshot)

| Attribute | DDR5 | LPDDR5X / LPDDR6 | HBM3 / HBM3E / HBM4 |

|---|---|---|---|

| Typical use | Desktops, workstations, servers | Phones, ultra-thin laptops, automotive/edge AI | AI/HPC GPUs & custom accelerators |

| Form factor | Socketed DIMMs (UDIMM/SO-DIMM/RDIMM/LRDIMM) | Soldered BGA; often PoP over the SoC | 3D TSV stacks on a silicon interposer (2.5D/3D) |

| Channeling | 2×32-bit per DIMM (dual 32-bit sub-channels) | Multiple narrow sub-channels for concurrency | Many wide channels per stack (e.g., 16–32) |

| Peak data rate (headline) | ~6.4 → ~9.6 GT/s (ecosystem bins vary) | ~7.5 → “teens” GT/s depending on gen/bin | HBM3 ~6.4 Gb/s/pin; HBM3E ~9+; HBM4 targets similar per-pin but doubles interface width |

| Bandwidth per package | ~50–80+ GB/s per DIMM (rate-dependent) | Tens of GB/s per package | HBM3 ≈0.8 TB/s; HBM3E ≈1.2 TB/s; HBM4 ≈2 TB/s (per stack) |

| Capacity (typical) | High per module; very high per system | Moderate per package; board-area constrained | Moderate per stack; scales by stacking multiple stacks |

| Upgradeability | Yes (socketed) | No (soldered) | No; fixed at manufacture |

| Power focus | Balanced perf/$ | Minimum energy/bit, deep sleep states | Best bandwidth/W at very high throughput |

| Cost & complexity | Lowest packaging complexity | Low-moderate; tight SI/PI on mobile boards | Highest packaging cost/complexity (interposer, TSV) |

What each family optimizes for

DDR (currently DDR5)

-

Goals: Universal main memory with strong capacity per dollar and broad platform compatibility.

-

Architecture highlights:

-

Dual 32-bit channels per DIMM (better parallelism than a single 64-bit).

-

On-DIMM power management (PMIC) for cleaner power at high speeds.

-

On-die ECC to improve internal cell reliability (separate from platform-level ECC).

-

-

Strengths: Inexpensive per GB, easy to upgrade/replace, massive ecosystem.

-

Trade-offs: Higher I/O power than LPDDR in mobile contexts; far lower bandwidth density than HBM.

LPDDR (LPDDR5X → LPDDR6)

-

Goals: Lowest energy/bit with fast entry/exit to deep power states; high burst bandwidth in compact designs.

-

Architecture highlights:

-

Narrow sub-channels to increase concurrency at low I/O swing.

-

Aggressive low-power features (various retention/standby modes, DVFS-style operation).

-

-

Strengths: Excellent perf/W; ideal for battery-powered and thermally constrained designs.

-

Trade-offs: Soldered (no upgrades), smaller capacities per package, absolute bandwidth below HBM.

HBM (HBM3/3E → HBM4)

-

Goals: Maximum bandwidth per package and excellent bandwidth/W via very wide interfaces.

-

Architecture highlights:

-

3D stacks connected with through-silicon vias (TSVs).

-

Mounted beside the processor on a silicon interposer (2.5D) or advanced 3D package.

-

Extremely wide buses (e.g., 1024-bit in HBM3; 2048-bit in HBM4).

-

-

Strengths: Orders-of-magnitude more bandwidth per package; essential for large AI models and memory-bound HPC.

-

Trade-offs: Highest cost and manufacturing complexity; thermal density; not field-upgradeable.

Packaging & integration

-

DDR5: Socketed DIMMs connect over motherboard traces; PMIC and SPD hub sit on the module. Client uses UDIMM/SO-DIMM; servers use RDIMM/LRDIMM.

-

LPDDR: Soldered BGA, often Package-on-Package (PoP) over the SoC to minimize trace length, area, and I/O power.

-

HBM: Multiple DRAM dies plus a base die; the stack is placed adjacent to the GPU/ASIC on an interposer with thousands of micro-bumps.

Performance & power snapshot (rules of thumb)

-

Bandwidth density: HBM ≫ LPDDR ≳ DDR on a per-package basis.

-

DDR5 per DIMM: ~51 GB/s at 6.4 GT/s; ~76.8 GB/s at 9.6 GT/s.

-

LPDDR packages: Typically tens of GB/s, scaling with channel count and data rate.

-

HBM per stack: ~0.8 TB/s (HBM3), ~1.2 TB/s (HBM3E), ~2 TB/s (HBM4).

-

-

Latency: All are DRAM; first-access latency is in the same ballpark across families. HBM is optimized for throughput/concurrency rather than minimum single-access latency.

-

Energy/bit: LPDDR leads in low/idle power and energy per transferred bit; HBM leads in bandwidth per watt at very high throughput; DDR is the cost- and capacity-balanced middle.

Reliability & ECC

-

DDR5: On-die ECC corrects small internal cell errors; platform-level ECC still requires ECC DIMMs and CPU/chipset support.

-

LPDDR (5X/6): Typically includes on-die error-mitigation and self-test features; end-to-end ECC support depends on the SoC/platform.

-

HBM: Enterprise accelerators often implement end-to-end ECC across HBM channels; specific schemes vary by vendor and generation.

Standards timeline (high level)

-

DDR5: Introduced mid-2020s; ecosystem speed bins continue climbing beyond 6400 MT/s toward ~8400–9600 MT/s.

-

LPDDR: LPDDR5X is widely deployed; LPDDR6 brings higher data rates, refined sub-channeling, and additional efficiency features.

-

HBM: HBM3 ramped for AI/HPC; HBM3E increased per-pin rates and stack heights; HBM4 doubled interface width to boost per-stack bandwidth.

Choosing between them

-

Choose DDR5 if you need affordable capacity, decent bandwidth, and field upgrades (PCs, mainstream and many server workloads).

-

Choose LPDDR if battery life, thermals, and board area are paramount (phones, tablets, ultra-thin laptops, automotive/edge systems).

-

Choose HBM if your workload is bandwidth-bound (AI training/inference, CFD, graph analytics) and your design can justify interposer packaging and cost.

Common misconceptions

-

“DDR5 has ECC so I’m fully protected.” On-die ECC ≠ platform ECC; system-level ECC still needs ECC DIMMs and CPU/chipset support.

-

“HBM always makes everything faster.” HBM maximizes throughput, not necessarily lower first-access latency; plus it adds packaging cost/constraints.

-

“LPDDR is slow.” Modern LPDDR reaches very high data rates with excellent perf/W; it’s chosen for efficiency, not because it’s inherently low-performance.

Website Developers May Have Most to Fear From AI