I learn a lot these days through webinars and videos because IC design tools like schematic capture and custom layout are visually oriented. Today I watched a video presentation from Steve Lewis and Stacy Whiteman of Cadence that showed how Virtuoso 6.1.5 is used in a custom IC design flow.

Addressing the Nanometer Custom IC Design Challenges! (Webinars)

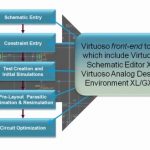

Selectively automating non-critical aspects of custom IC design allows engineers to focus on precision-crafting their designs. Cadence circuit design solutions enable fast and accurate entry of design concepts, which includes managing design intent in a way that flows naturally in the schematic. Using this advanced, parasitic-aware environment, you can abstract and visualize the many interdependencies of an analog, RF, or mixed-signal design to understand and determine their effects on circuit performance.

Watch technical presentations and demonstrations on-demand and learn how to overcome your design challenges with the latest capabilities in Cadence custom/analog design solutions.

Virtuoso 6.1.5 – Front-End Design

Steve Lewis, Product Marketing Director

Highlights of new front-end design tools and features (including a new waveform viewer, Virtuoso Schematic Editor, and Virtuoso Analog Design Environment), and how to identify and analyze parasitic effects early.


Virtuoso Multi-Mode Simulation

John Pierce, Product Marketing Director

Updates on the latest simulation capabilities, including the Virtuoso Accelerated Parallel Simulator (APS) distributed multi-core simulation mode for peak performance; a high-performance EMIR flow; APS RF analyses; and an enhanced reliability analysis flow.


Virtuoso 6.1.5 – Top-Down AMS Design and Verification

John Pierce, Product Marketing Director

Highlights of the latest in advanced mixed-signal verification methodology, checkerboard analysis, assertions, and how to travel seamlessly among all levels of abstraction of the design.

Virtuoso 6.1.5 – Back-End Design

Steve Lewis, Product Marketing Director

Highlights of the latest in constraint-driven design; Virtuoso Layout Suite; links between parasitic-aware design, rapid analog prototyping, and QRC Extraction; and top-down physical design: floorplanning, pin optimization, and chip assembly routing with Virtuoso Space-Based Router.

Virtuoso 6.1.5 – MS Design Implementation

Michael Linnik, Sr. Sales Technical Leader

Highlights of the latest mixed-signal implementation challenges and solutions that link Virtuoso and Encounter technologies on the OpenAccess database, including analog/digital data interoperability, common mixed-signal design intent, advances in design abstraction, concurrent floorplanning, mixed-signal routing, and late-stage ECOs.


What’s New in Signoff

Hitendra Divecha, Sr. Product Marketing Manager

Highlights of standalone and qualified in-design signoff engines for parasitic extraction, physical verification, power-rail integrity analysis, litho hotspot analysis, and chemical-mechanical polishing (CMP) analysis.


Jasper Customer Videos

Increasingly at DAC and other shows, EDA companies such as Jasper are having their customers present their experiences with the products. Everyone has seen marketing people present wonderful visions of the future that turn out not to materialize. But a customer speaking about their own experiences has a credibility that an EDA marketing person (and I speak as one myself) does not. In fact, EDA marketing doesn’t exactly have a great reputation (what’s the difference between an EDA marketing person and a second-hand car salesman? The car salesman knows when he is lying).

At DAC Jasper had 3 companies present their experiences using various facets of formal verification using JasperGold. These are in-depth presentations about exactly what designs were analyzed and how, and what bugs they found using formal versus simulation.

The presentations were videoed and are available here (registration required).

ARM

Alan Hunter from Austin (which based on his accent is a suburb of Glasgow) talked about using JasperGold for validating the Cortex-R7. It turns out that a lot of RTL was available before the simulation testbench environment was set up so designers started to use formal early (for once). They found that it was easier to get formal set up than simulation and, when something failed, the formal results were easier to debug than a simulation fail.

He then discussed verifying the Cortex-A15 L2 cache. They had an abstract model of the cache as well as the actual RTL. As a result, they discovered a couple of errors in the specification using the formal model. They could also use the formal model to work out quickly how to get the design into certain states. And they found one serious hardware error.

With R7, where formal was used early, they focused on bug-chasing and were less concerned about having full proof coverage. But with A15 they were more disciplined and more focused on proving that both the protocol itself and the reference implementation were rock solid under all the corner cases.

NVIDIA

NVIDIA talked about using JasperGold for Sequential Equivalence Checking (SEC). Equivalence Checking (EC), or I suppose I should say Combinational Equivalence Checking to distinguish it from Sequential, is much simpler. It identifies all the registers in the RTL, finds them in the netlist, and then verifies that the rest of the circuit matches the RTL. The basic assumption is that the register behaviour in the RTL is the same as in the netlist. SEC relaxes that because, for example, a register might be clock-gated if its input is the same as its output. Similarly, if a register in a pipeline is not clocked on a clock cycle, the downstream register does not need to be clocked on the next clock cycle since we know the input has not changed. In effect, the RTL has been changed. This type of optimization is common for power reduction, either done manually or by Calypto (who I think have the only automatic solution in the space). Half the bugs found in one design were from simulation but the other half came from FV. About half of the bugs found by FV would eventually have been discovered by simulation…but would have taken another 9 months of verification.
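The pipeline clock-gating case described above can be illustrated with a toy simulation. This is only a sketch under stated assumptions: the two-register pipeline, the `stage_logic` function and the reset values are all hypothetical, and comparing traces over random stimulus is ordinary simulation, not the exhaustive proof a formal SEC tool would produce.

```python
import random

def stage_logic(x):
    # Hypothetical combinational logic between the two pipeline registers.
    return (3 * x + 1) & 0xFF

def simulate(stimulus, gate_downstream):
    """Run a two-register pipeline and return the downstream register
    value after each cycle. `stimulus` is a list of (data_in, enable)."""
    r1, r2 = 0, 0
    # Assumption: treat reset as a cycle in which r1 was clocked, so the
    # downstream register loads on the first cycle in both designs.
    r1_clocked_last_cycle = True
    trace = []
    for data_in, enable in stimulus:
        if gate_downstream and not r1_clocked_last_cycle:
            next_r2 = r2                 # clock gated: r1 did not change
        else:
            next_r2 = stage_logic(r1)    # normal capture
        next_r1 = data_in if enable else r1
        r1, r2 = next_r1, next_r2
        r1_clocked_last_cycle = enable
        trace.append(r2)
    return trace

def traces_match(cycles=1000, seed=0):
    """Compare the ungated and gated designs on the same random stimulus."""
    rng = random.Random(seed)
    stimulus = [(rng.randrange(256), rng.random() < 0.3) for _ in range(cycles)]
    return simulate(stimulus, False) == simulate(stimulus, True)
```

The two designs have different register update behaviour, which is exactly what a combinational equivalence check would reject, yet their outputs agree on every cycle.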

But wait, JasperGold doesn’t do sequential equivalence checking, does it? No, it doesn’t, but it will. NVIDIA is an early customer of the technology and has been using it on some very advanced designs to verify complex clock gating. Watch out for the Jasper SEC App.

STMicroelectronics

ST talked about how they use JasperGold for verification of low power chips. The power policy is captured in CPF and the two real alternatives to verification are power-aware RTL simulation and FV. Power-aware RTL simulation is very slow, still suffers from the well-known X-optimism or X-pessimism problem, along with an explosion of coverage due to the power management alternatives. And, like all simulation, it is incomplete.

Alternatively they can create a power-aware model, in the sense that the appropriate parts of CPF (such as power domains, isolation cells and retention cells) are all included in the model. This way they can debug the power optimization and even analyze power-up and power-down processes, in particular to ensure that no X values are propagated to the rest of the system.

ARM and TSMC Beat Revenue Expectations Signaling Strength in a Weakening Economy?

Fabless semiconductor ecosystem bellwethers TSMC and ARM buck the trend, reporting solid second quarters. Following “TSMC Reports Second Highest Quarterly Profit”, the British ARM Holdings “Outperforms Industry to Beat Forecasts”. Clearly the tabloid press claims of the death of the fabless ecosystem are greatly exaggerated.

“ARM’s royalty revenues continued to outperform the overall semiconductor industry as our customers gained market share within existing markets and launched products which are taking ARM technology into new markets. This quarter we have seen multiple market leaders announce exciting new products including computers and servers from Dell and Microsoft, and embedded applications from Freescale and Toshiba. In addition, ARM and TSMC announced a partnership to optimize next generation ARM processors and physical IP and TSMC’s FinFET process technology.” Warren East, ARM CEO.

- ARM’s Q2 revenues were up 12% on Q1 at £135m with profit up 23% at £66.5m.

- H1 revenues were up 12% on H1 2011 at £268m, with profit up 22% at £128m.

- 23 processor licenses signed across key target markets from microcontrollers to mobile computing

- Two billion chips were shipped into a wide range of applications, up 9% year-on-year compared with industry shipments being down 4%

- Processor royalties grew 14% year-on-year compared with a decline in industry revenues of 7%

- 3 Mali graphics processor licenses were signed in Q2, of which two were with new customers for Mali technology

- 5 physical IP Processor Optimisation Packs were licensed.

ARM enters the second half of 2012 with a record order backlog and a robust opportunity pipeline. Relevant data for the second quarter, being the shipment period for ARM’s Q3 royalties, points to a small sequential increase in industry revenues. Q4 royalties are harder to predict as macroeconomic uncertainty may impact consumer confidence, and some analysts have become less confident in the semiconductor industry outlook in the second half. However, building on our strong performance in the first half, we expect overall Group dollar revenues for full year 2012 to be in line with market expectations.

Even more interesting is the recently announced TSMC / ARM multi-year agreement that extends beyond 20 nm technology (16nm) to enable the production of next-gen ARMv8 processors that use FinFET transistors and leverage ARM’s Physical IP, which currently covers a production process range from 250 nm to 20 nm.

“By working closely with TSMC, we are able to leverage TSMC’s ability to quickly ramp volume production of highly integrated SoCs in advanced silicon process technology,” said Simon Segars, executive vice president and general manager, processor and physical IP divisions, ARM. “The ongoing deep collaboration with TSMC provides customers earlier access to FinFET technology to bring high-performance, power-efficient products to market.”

“This collaboration brings two industry leaders together earlier than ever before to optimize our FinFET process with ARM’s 64-bit processors and physical IP,” said Cliff Hou, vice president, TSMC Research & Development. “We can successfully achieve targets for high speed, low voltage and low leakage, thereby satisfying the requirements of our mutual customers and meeting their time-to-market goals.”

This agreement makes complete sense with 90% of ARM silicon going through TSMC and the PR battle Intel is now waging against both ARM and TSMC. But let’s not forget the Intel Atom / TSMC agreement of March 2009:

“We believe this effort will make it easier for customers with significant design expertise to take advantage of benefits of the Intel Architecture in a manner that allows them to customize the implementation precisely to their needs,” said Paul Otellini, Intel president and CEO. “The combination of the compelling benefits of our Atom processor combined with the experience and technology of TSMC is another step in our long-term strategic relationship.”

Sorry Paul, clearly this was not the case. TSMC is customer driven and Atom had no customers. So there you have it. The agreement was “put on hold” less than a year later:

Intel spokesperson Bill Kircos said no TSMC-manufactured Atoms are on the immediate horizon, though he added that the companies have achieved several hardware and software milestones and said they would continue to work together. “It’s been difficult to find the sweet spot of product, engineering, IP and customer demand to go into production,” Kircos said.

Given that wrong turn, the current Intel strategy is to offer ASIC services rather than the traditional foundry COT (customer owned tooling) model for Atom SoCs, using a CPU-centric 22nm process. This turnkey ASIC service is currently called Intel Foundry Services, about which we have heard plenty but from which we have yet to see any silicon. Just my observation of course.

MemCon Returns

Back before Denali was acquired by Cadence they used to run an annual conference called MemCon. Since Denali was the Switzerland of EDA, friend of everyone and enemy of none, there would be presentations from other memory IP companies and from major EDA companies. For example, in 2010, Bruggeman, then CMO of Cadence, gave the opening keynote but there were also presentations from Synopsys, Rambus, Micron, Mosys, Samsung and lots of others. Plus, of course, several presentations from Denali. The format and time varied, sometimes it was 2 days in June, sometimes 1 day in July.

When Cadence acquired Denali, they folded MemCon into CDNLive, Cadence’s series of user group meetings, the largest of which was in San Jose so there was no standalone MemCon 2011.

But for 2012, MemCon is back again, on Tuesday September 18th at the Santa Clara Convention Center. The full agenda is not yet available (EDIT: yes it is, it is here) but current sponsors and speakers include:

- Agilent

- Cadence

- Discobolus Designs

- Everspin

- Kilopass

- Micron

- Objective Analysis

- Samsung

MemCon is free to attend but the number of places is limited and so you must pre-register and can’t just show up at the last minute. The registration page for MemCon is here.

The Future of Lithography and the End of Moore’s Law!

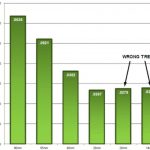

This blog with a chart showing that the cost of given functionality on a chip is no longer going to fall is, I think, one of the most-read I’ve ever written on Semiwiki. It is actually derived from data NVIDIA presented about TSMC, so at some level perhaps it is two alpha males circling each other preparing for a fight. Or, in this case, wafer price negotiations.

However, I also attended the litho morning at Semicon West last week, along with a lot more people than there were chairs (I was smart enough to get there early). I learned a huge amount. But nobody really disputed the fact that double and triple patterning make wafers a lot more expensive than when we only need to use single patterning (28nm and above). The alternatives were 3 new technologies (or perhaps a combination). Extreme ultra-violet lithography, direct write e-beam and directed self assembly. EUV, DWEB or DSA. Pick your acronym.

I wrote about all of them in more detail. Here are links. Collect the whole set.

- Overview of future lithography

- Direct write e-beam

- Extreme ultra-violet (EUV)

- EUV masks

- Lack of a pellicle on EUV masks

- Directed self-assembly

I talked to Gary Smith who had been at an ITRS meeting. He says ITRS are not worried and that they think 450mm (18″) wafers will solve all the cost issues. I haven’t seen any information about the expected cost differences between 300mm and 450mm but for sure it is not negligible. Twice as many die for how much more per wafer? A lot of 450mm equipment needs to be created and purchased too, although presumably in most cases you only need half as much of it for the same number of die.
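The "how much more per wafer?" question can be put into rough numbers. The sketch below uses wafer area alone as a stand-in for die count (ignoring edge loss, yield and die size, which is an assumption made here for illustration), and the wafer cost ratio is a free parameter, not a figure from ITRS or anyone else.

```python
import math

def wafer_area_mm2(diameter_mm):
    """Gross area of a circular wafer."""
    return math.pi * (diameter_mm / 2) ** 2

def area_ratio(new_mm=450, old_mm=300):
    """How many times more gross area the larger wafer offers."""
    return wafer_area_mm2(new_mm) / wafer_area_mm2(old_mm)

def cost_per_die_ratio(wafer_cost_ratio, new_mm=450, old_mm=300):
    """Relative cost per die if the larger wafer costs `wafer_cost_ratio`
    times as much to process; values below 1.0 mean cheaper die."""
    return wafer_cost_ratio / area_ratio(new_mm, old_mm)
```

By area a 450mm wafer offers 2.25 times what a 300mm wafer does, so under these assumptions cost per die only falls if processing a 450mm wafer costs less than 2.25 times as much.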

Gary also had lunch with the ITRS litho people and they still see EUV as the future. After listening to these presentations I’m not so sure. The issue everyone is focused on (see what I did there?) is the lack of a powerful light source for EUV. But the mask blank defect issue and the lack of a pellicle defect issue also look like killer problems.

Maybe I shouldn’t worry. After all, all these people know way more than I do about lithography. I’m a programmer by background, after all.

But I was at the common platform technology forum in March and Lars Liebmann of IBM said: “I worked on X-ray lithography for years and EUV is not as far along as X-ray lithography was when we finally discovered it wasn’t going to work.”

Now that’s scary.

The reason this is so important is that Moore’s law is not really about the number of transistors on a chip, it is about the cost of electronic functionality dropping. If the graph above turns out to be true, it means a million gates will never get any cheaper. That means an iPad is as cheap as it will ever be. An iPhone is never going to just cost $20. We will never have cell-phones that are so cheap that like calculators we can give them away. Yes, we may have new sexy electronic devices. But the cost of the product at introduction is the cost that it will always be. It won’t come down in price if we just wait, as we’ve become used to. Electronics will become like detergent, the same price year after year.

The Total ARM Platform!

In the embedded world that drives much of today’s ASIC innovation, there is no bigger name than ARM. Not to enter the ARM vs. Intel fray, but it’s no exaggeration to say that ARM’s impact on SoCs is as great as Intel’s on the PC. Few cutting edge SoCs are coming to market that do not include some sort of embedded processor. And a disproportionately large number of those processors are from ARM.

It stands to reason then, that the capability to design and implement ARM into ASIC SoCs is paramount and so I used some of my time at DAC to check out what was happening in the ARM design and implementation space. To that end, I was very pleased with what I found at the GUC demonstration.

For those few of you who may not know, GUC, or Global Unichip Corp., is leading the charge into a new space called the Flexible ASIC Model™. The Flexible ASIC Model, according to GUC, allows semiconductor designers to focus on their core competency while providing a flexible handoff point for each company, depending on where their core competency begins and ends. The model accesses foundry design environments to reduce design cycle time, provides IP, platforms and design methodologies to lower entry barriers, and integrates technology availability (design, foundry, assembly, test) for faster time-to-market. In a nutshell, the company should have a great deal of insight into how to integrate ARM based processors into ASIC innovation.

Not surprisingly, GUC has dedicated significant resources to successfully embedding ARM processors into ASIC designs. The service covers a robust and proven hardening flow that targets leading edge manufacturing process technologies, ARM-specific IP and design, successful test chips for ARM926, ARM1176 and the Cortex series, software support and a development platform that includes fast system prototyping.

The ARM hardening process starts with RTL validation that includes specification confirmation and memory integration. The next step, synthesis, covers critical path optimization and timing constraint polishing. Design for test (DFT) includes MBIST integration, scan insertion and compression, at-speed DFT feature integration and test coverage tuning. Place and route services cover floor planning and placement refinement, timing closure, dynamic and leakage power analysis, IR/EM analysis, dynamic IR analysis, design for manufacturing (DFM), and DRC/LVS. Final quality assurance (QA) covers library consistency review, log parsing, report review and checklist item review.

GUC’s ARM core hardening service also includes document deliverables such as application notes and a simulation report. Normally, after receiving customers’ specification requests, GUC provides a preliminary timing model within one month and completes the design kit within two months.

Most importantly, their methodology has been proven. GUC recently broke the one Gigahertz barrier with an ARM Cortex-A9 processor and their history with ARM stretches back over a decade. During that time, the company has successfully run more than 90 ARM core tape outs with proven production at high yields for different applications (high performance, low power) on multiple TSMC process nodes (28nm, 40nm, 65nm, 90nm).

The importance that ARM cores will play as ASIC SoC innovation moves forward is still largely untold. But what is clear is that an ARM hardening process will be required for ASIC success. Given the complexity, this may be a difficult service to move in-house and so finding ASIC companies with critical, proven ARM hardening capabilities will become an increasingly important ingredient in the success formula.

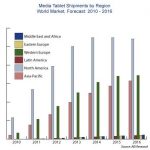

Media Tablet Strategy from Google and Microsoft: illusion about the effective protection of NDAs…

Extracted from an interesting article by Jeff Orr of ABI Research: “We have all heard about leaked company roadmaps that detail a vendor’s product or service plans for the next year or two. Typically, putting one’s plans down in advance of public announcement has two intended audiences: customers who rely on roadmaps to demonstrate that a vendor has “staying power,” and supplier partners who communicate commitment to the supply chain and relay future requirements. These plans generally are well guarded secrets and those getting to view them often have to sign a non-disclosure agreement saying they will not share the information with other parties. But what happens when suppliers decide to enter the market and compete head-on with those vendors that have confided their companies’ futures?”

This article targets the Microsoft and Google strategies, which basically amount to starting to compete with their (probably former?) partners, the Media Tablet manufacturers. In the conclusion, the author doesn’t look overoptimistic about the positive return from such a strategy: if it’s successful, or if the OS vendor can finally reach a level of sales for its tablet comparable to Apple’s, and by the way the same level of profit, as this should be the ultimate goal (!), then “the OS vendors will find it increasingly difficult to rebuild the former trust with the device ecosystem”. And, if the OS vendor fails, “they will have caused irreparable harm to the mobile device markets”. Looks like a superb lose-lose strategy, doesn’t it?

The important information which can also be found in this article, even if it is relatively hidden, is: the protection given by an NDA is an illusion!

In other words, signing an NDA with a partner (who could become a competitor) is absolutely useless. You may protect a technology or some product features with patents. But an NDA has never prevented a so-called partner from stealing your idea or duplicating your product roadmap! I remember one of my customers, at the end of the 1990s, developing an ASSP with our ASIC technology. The device was an ARM-based modem providing Ethernet connection for printers and the like; it was a good business for both of us, generating large volumes, when this customer decided to challenge our prices and submit an RFQ to one of our competitors (based in Korea, with an “S” at the beginning of the name). This customer finally stayed with us, but less than one year later, Sxxx was launching a directly competing product! I am sure that our customer had signed an NDA with this ASIC supplier…

I firmly believe that NDAs are USELESS (except if you love to waste time in additional legal work). I also firmly believe that I will continue to sign NDAs with customers or partners for a long time, just because that’s the way it works!

Eric Esteve from IPnest

How Many Licenses Do You Buy?

An informal survey of RTDA customers reveals that larger companies tend to buy licenses based on peak usage while smaller companies do not have that luxury and have to settle for fewer licenses than they would ideally have and optimize the mix of licenses that they can afford given their budget. Larger companies get better prices (higher volume) but the reality is that often they still have fewer than they would like. Or at least fewer than the engineers who have to use the licenses would like.

Licenses are expensive so obviously having too many of them is costly. But having too few is costly in its own way: schedules slip, expensive engineers are waiting for cheap machines, and so on. Of course licenses are not fungible, in the sense that you cannot use a simulation license for synthesis, so the tradeoff is further complicated by getting the mix of licenses right for a given budget.

There are two normal ways to change the mix of licenses for a typical EDA multi-year contract. One is at contract renewal to change the mixture from the previous contract. But large EDA companies often also have some sort of remix rights, which lets the customer exchange lightly (or never) used licenses for ones which are in short supply. There are even more flexible schemes for handling some peak usage, such as Cadence’s EDAcard.

Another key piece of technology is to have a fast scheduler which can move jobs from submission to execution faster. When one job finishes and gives up its license, that license is not generating value until it is picked up by the next job. Jobs are not independent (in the sense that the input to one job is often the output from another). The complexity of this can be staggering. One RTDA user has a task that requires close to a million jobs and involves 8 million files. As we need to analyze at more and more process corners, these numbers are only going to go up. Efficiently scheduling this can make a huge difference to the license efficiency and to the time that the entire task takes.

There are a number of different ways of investigating whether the number of licenses is adequate and deciding what changes to make.

- Oil the squeaky wheel: some engineers complain loudly enough and the only way to shut them up is to get more licenses.

- Peak demand planning: plan for peak needs. This is clearly costly and results in relatively low average license use for most licenses.

- Denials: look at logs from the license server. But with a good scheduler like NetworkComputer there may be no denials at all, and that is still not a sign that the licenses are adequate.

- Average utilization: this works well for heavily used tools/features whereas for a feature that is only used occasionally the average isn’t very revealing and can mask that more licenses would actually increase throughput

- Vendor queueing: this is when instead of getting a license denial the request is queued by the license server. A good scheduler can take advantage of this by starting a limited number of jobs in the knowledge that a license is not yet available but should soon be. It is a way of “pushing the envelope” on license usage since the queued job has already done some preliminary work and is ready to go the moment a license is available. But as with license denial, vendor queuing may not be very revealing since the job scheduler will ensure that there is only a small amount.

- Unmet demand analysis: this relies on information kept by the job scheduler. It is important to take care to distinguish jobs that are delayed waiting for a license versus jobs that are delayed waiting for some other resource, such as a server. Elevated levels of unmet demand are a strong indicator of the need for more licenses.
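As a rough illustration of the unmet demand analysis in the last item, the sketch below totals the time jobs spent waiting specifically for a license, per feature, and ignores waits caused by other resources. The record layout (`feature`, `wait_reason`, `wait_hours`) is hypothetical and is not the log format of NetworkComputer or any other scheduler.

```python
from collections import defaultdict

def unmet_license_demand(job_records):
    """Sum the hours jobs spent queued for a license, keyed by feature.

    Each record is a dict with hypothetical keys:
      feature     - the license feature the job needs
      wait_reason - "license", "server", or another resource name
      wait_hours  - how long the job waited before starting
    """
    totals = defaultdict(float)
    for rec in job_records:
        # Only license waits count; a job stuck waiting for a server
        # says nothing about whether more licenses are needed.
        if rec["wait_reason"] == "license":
            totals[rec["feature"]] += rec["wait_hours"]
    return dict(totals)

# Example records, invented for illustration only.
sample_jobs = [
    {"feature": "simulation", "wait_reason": "license", "wait_hours": 2.0},
    {"feature": "simulation", "wait_reason": "server", "wait_hours": 5.0},
    {"feature": "synthesis", "wait_reason": "license", "wait_hours": 0.5},
    {"feature": "simulation", "wait_reason": "license", "wait_hours": 1.5},
]
```

A feature whose total keeps climbing from week to week is the candidate for more licenses; a feature absent from the result had no license-related waits in the period.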

A more formal approach can be taken with plots showing various aspects of license use. The precise process will depend on the company, but the types of reports that are required are:

- Feature efficiency report: the number of licenses required to fulfill requests for a specific software feature 95%, 99% and 99.9% of the time

- Feature efficiency histograms: showing the percentage of time each feature is in use over a time frame, and the percentage of time that individual licenses are actually used

- Feature plots: plots over a period of time showing capacity, peak usage and average usage, along with information on license requests, grants and denials
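One way to picture the feature efficiency report is as a percentile over sampled demand. The sketch below assumes demand for one feature has been sampled at regular intervals and that each sample counts licenses in use plus requests still waiting (otherwise the numbers are capped at current capacity). The function name and sample data are invented for illustration and do not describe how RTDA's reports are computed.

```python
def licenses_needed(demand_samples, fraction):
    """Smallest license count that would have covered demand in at
    least `fraction` of the sampled intervals (e.g. 0.95 for 95%)."""
    if not demand_samples:
        raise ValueError("need at least one demand sample")
    ordered = sorted(demand_samples)
    for covered, demand in enumerate(ordered, start=1):
        # `covered` samples have demand at or below `demand`.
        if covered / len(ordered) >= fraction:
            return demand
    return ordered[-1]

# Invented data: mostly idle, some busy periods, one large spike.
samples = [1] * 90 + [5] * 9 + [20]

report = {f: licenses_needed(samples, f) for f in (0.95, 0.99, 0.999)}
```

With this made-up data, 5 licenses cover both the 95% and 99% levels while the 99.9% level needs 20, which is the gap between buying for typical demand and buying for peak.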

These reports can be used to make analyzing the tradeoffs involved in licenses more scientific. Of course it is never going to be an exact science: the jobs running today are probably not exactly the same ones as will run tomorrow. And, over time, the company will evolve: new projects get initiated, new server farms come online, changes are made to methodology.

No matter how many licenses are decided upon, there will be critical times when licenses are not available and projects are blocked. The job scheduling needs to be able to handle these priorities to get licenses to the most critical projects. At the same time, the software tracking usage must be able to provide information on which projects are using the critical licenses to allow engineering management to make decisions. There is little point, for example, in redeploying engineers onto a troubled project without also redeploying licenses for the software that they will need.

Libraries Make a Power Difference in SoC Design

At Intel we used to hand-craft every single transistor size to eke out the ultimate in IC performance for DRAM and graphics chips. Today, there are many libraries that you can choose from for an SoC design in order to reach your power, speed and area trade-offs. I’m going to attend a Synopsys webinar on August 2nd to learn more about this topic and then blog about it.

I met the webinar presenter Ken Brock back in the 80’s at Silicon Compilers, the best-run EDA company that I’ve had the pleasure to work at.

Webinar Overview: Mobile communications, multimedia and consumer SoCs must achieve the highest performance while consuming the minimal amount of energy to achieve longer battery life and fit into lower cost packaging. Logic libraries with a wide variety of voltage thresholds (VTs) and gate channel lengths provide an efficient method for managing energy consumption. Synopsys’ multi-channel logic libraries and Power Optimization Kits take advantage of low-power EDA tool flows and enable SoC designers to achieve timing closure within the constraints of an aggressive power budget.

This webinar will focus on:

- How combining innovative power management techniques using multiple VTs/channel lengths in different SoC logic blocks delivers the optimal tradeoff in SoC watts per gigahertz

- Ways to maximize system performance and minimize cost while slashing power budgets of SoC blocks operating at different clock speeds

Length: 50 minutes + 10 minutes of Q&A

Who should attend: SoC design engineers, system architects, project managers

Ken Brock, Product Marketing Manager for Logic Libraries, Synopsys

Ken Brock is Product Marketing Manager for Logic Libraries at Synopsys and brings 25 years of experience in the field. Prior to Synopsys, Ken held marketing positions at Virage Logic, Simucad, Virtual Silicon, Compass Design Systems and Mentor Graphics. Ken holds a Bachelor’s Degree in Electrical Engineering and an MBA from Fairleigh Dickinson University.