I already talked about how Cadence is splitting Virtuoso into two. Anyway, it is now officially announced. The 6.1 version will continue to be developed as a sort of Virtuoso classic for people doing designs off the bleeding edge that don’t require the new features. Alongside it there is a new Virtuoso 12.1, known as Virtuoso Advanced Node, intended for people doing 20nm and below. I’m going to call it VAN for short, although I don’t think that is any sort of official name for it.

I sat down with Steve Lewis (it’s always odd doing press events with people that used to work for me) to get more details.

Major releases of Virtuoso (the first digit changing from 5 to 6, for example) have involved major incompatibilities in the database and SKILL libraries. This has contributed to a very slow transition in the customer base. But great care has been taken here so that 6.1 and 12.1 use the same OA database and are SKILL compatible. After all, if you are doing 20nm design, you have to transition to 12.1.

Obviously, one thing that VAN does is provide full support for double patterning. I’ve blogged so much about double patterning recently that I’m going to assume everyone already knows about it and about the sort of features that VAN has to have to support it properly. Instead, I’m going to look at two other big issues in 20nm and below: layout dependent effects (LDE) and local interconnect.

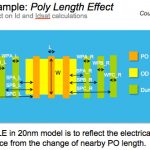

In 20nm, the design rules are much less pass-fail than they used to be. The most dramatic LDE is the well-proximity effect. There is a minimum distance that a transistor must be from a well edge. But if the transistor is not going to be affected electrically by being near the well edge, then it needs to be much further away. Otherwise you have to analyze the effect and make sure everything is still OK, since these are not second- or third-order effects; they have a significant impact.
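To make the "less pass-fail" point concrete, here is a minimal Python sketch of a rule with three outcomes instead of two. The two spacing thresholds and all the names are made up for illustration; they are not from any real process or from VAN itself.

```python
from enum import Enum


class WpeZone(Enum):
    VIOLATION = "violation"            # closer than the hard minimum
    NEEDS_ANALYSIS = "needs_analysis"  # legal, but well proximity matters
    CLEAR = "clear"                    # far enough that the effect is negligible


# Hypothetical numbers for illustration only; not from any real 20nm rule deck.
MIN_WELL_SPACING_NM = 100
WPE_FREE_SPACING_NM = 1000


def classify_well_spacing(distance_nm: float) -> WpeZone:
    """Classify a transistor by its distance from the nearest well edge."""
    if distance_nm < MIN_WELL_SPACING_NM:
        return WpeZone.VIOLATION
    if distance_nm < WPE_FREE_SPACING_NM:
        return WpeZone.NEEDS_ANALYSIS
    return WpeZone.CLEAR
```

The interesting case is the middle band: the layout is legal, but somebody has to simulate the effect before signing off.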

One thing that VAN does is to blur the old distinction between Composer (schematic) and layout. The old model was that the circuit designer would create the schematic and then throw it over the wall (or often the ocean) to the layout designer to implement it. That doesn’t work in 20nm because there are too many LDEs. In VAN it is now possible to put some layout information into the schematic and then do a sort of hybrid analysis, using layout information where it is provided and schematic data where it isn’t. They call this variability-aware design.
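The hybrid idea can be sketched in a few lines of Python: use a layout-derived correction when one exists and fall back to the schematic value when it doesn’t. This is only my illustration of the concept, not how VAN implements it; the `Device` class, its field names and the threshold-voltage numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Device:
    name: str
    schematic_vth_mv: float                      # nominal value from the schematic
    layout_vth_shift_mv: Optional[float] = None  # LDE-derived shift, if layout exists


def effective_vth_mv(dev: Device) -> float:
    """Return the threshold voltage to simulate with.

    Devices with partial layout get the schematic value plus the
    layout-derived shift; devices without layout use the schematic value.
    """
    if dev.layout_vth_shift_mv is None:
        return dev.schematic_vth_mv
    return dev.schematic_vth_mv + dev.layout_vth_shift_mv


# Hypothetical devices: m1 has been placed near a well edge, m2 has no layout yet.
m1 = Device("M1", schematic_vth_mv=350.0, layout_vth_shift_mv=12.0)
m2 = Device("M2", schematic_vth_mv=350.0)
```

Here `effective_vth_mv(m1)` picks up the layout shift while `effective_vth_mv(m2)` stays at the schematic nominal, so two devices that look identical in the schematic can simulate differently.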

In particular, the layout is analyzed including all the LDEs, such as well-proximity. Then when the layout designer finally creates the full design, there is lots of layout data already included at varying levels of detail. It reminds me of synthesis starting to require physical information 10 years ago, and it has some of the same issues. Just as the RTL designers didn’t know much about P&R and vice-versa, the circuit designers don’t know much about layout and the layout people don’t know much about circuit design. But analog design is becoming much more like RF design, where the actual layout has always been the design and there has never been the notion that schematic and layout could be kept completely separate.

Another new thing at 20nm is local interconnect. This is an interconnect layer between the transistor level and metal1. In the fab world, the part of the process that creates the transistors is known as front end of line or FEOL (nothing to do with what EDA calls front-end design). The interconnect and via part of the process is called back end of line or BEOL. So now we have the interesting oxymoron of middle end of line or MEOL. Local interconnect has very strict design rules and, since it is contactless and connects to whatever it passes over, it also has very limited use. But within standard cells and other small designs, it can make a big difference to both area and performance.

This means that at 20nm and below there are new challenges for the router to make use of local interconnect when possible.

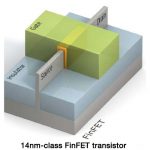

Obviously, one other feature in VAN is support for FinFETs. This mostly affects extraction rather than requiring a sea-change in how layout is done.

There are lots of other little details, like fractured vias (created from multiple layers of local interconnect for example) and support for some of the other complicated 20nm and below design rules.

Download Cadence’s white paper on 20nm custom and analog design here.