A question often raised is how SystemC improves verification time when the design has to go through RTL in any case. A simple answer is that with SystemC, designs can be described at a higher level of abstraction and then automatically synthesized to RTL. When the hands-on design and verification work happens at a higher level, there are fewer opportunities to introduce bugs, and it is much easier to find them before RTL is generated. It's true that at the cycle-accurate level of verification, SystemC and RTL provide the same level of performance. However, because cycle-accurate verification takes so much time, it's wise to reduce those iterations by doing quicker verification at higher levels of abstraction before RTL.

The levels of abstraction in the ESL (Electronic System Level) space are very neatly described in a book published by Cadence, http://www.cadence.com/products/fv/tlm/Pages/tlm.aspx. Further, Cadence has developed a multi-level verification methodology that spans the FVP (Functional Virtual Prototype), HLS (High Level Synthesis) and RTL (Register Transfer Level) levels of abstraction. In mid-April, at the ISCUG conference, Mr. Prashanth G Baddam presented this methodology, which he co-authored with Mr. Yoshinori Watanabe.

Prashanth G Baddam, Yoshinori Watanabe

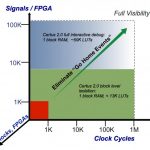

Prashanth concisely described what can be verified in the models at each of these levels in order to optimize the total verification time, since not every item can be verified on every abstract model. Hence, once the verification plan for the hardware under verification has been created, it is important to categorize its verification items according to the abstract models, as shown in the figure.
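To make the idea of categorizing plan items by abstraction level concrete, here is a minimal C++ sketch. It is not Cadence's plan format: `Level`, `VerificationItem`, `itemsFor` and the example item names are hypothetical, chosen only to show one plan being filtered into the subset that each abstract model can check.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Abstraction levels named in the methodology.
enum class Level { FVP, HLS, RTL };

// One entry of a verification plan (hypothetical structure).
struct VerificationItem {
    std::string name;
    std::vector<Level> levels;  // levels at which this item is verified
};

// Returns, in plan order, the names of the items scheduled for a given level.
std::vector<std::string> itemsFor(const std::vector<VerificationItem>& plan,
                                  Level level) {
    std::vector<std::string> result;
    for (const auto& item : plan) {
        if (std::find(item.levels.begin(), item.levels.end(), level) !=
            item.levels.end()) {
            result.push_back(item.name);
        }
    }
    return result;
}

// A hypothetical plan: functional checks start early at FVP, while
// signal-accurate protocol checks only make sense once RTL exists.
std::vector<VerificationItem> examplePlan() {
    return {
        {"packet transform is functionally correct", {Level::FVP, Level::HLS, Level::RTL}},
        {"TLM interface transactions complete",      {Level::FVP, Level::HLS}},
        {"refined interface handshake",              {Level::HLS, Level::RTL}},
        {"signal-accurate bus protocol",             {Level::RTL}},
    };
}
```

Calling `itemsFor(examplePlan(), Level::FVP)` yields only the two items that an untimed functional model can meaningfully check. The signal-level protocol item waits until RTL.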

At the FVP level, the models are written in the SystemC programmer's view, with bus interfaces described in TLM-2.0. A single testbench, written in SystemVerilog or e using the UVM standard, is used at all levels. At the HLS level, the model needs to be synthesizable, with concurrent blocks for functionality and refined TLM for the communication interfaces. The testbench is extended to verify the interfaces and to add more tests for the refined functionality. At RTL, the design is closer to hardware, with microarchitecture details such as complete interface information and state transition models. Interfaces are described at the signal-accurate protocol level. The testbench is further extended to use protocol-specific interface agents and existing VIPs (Verification IP), and more RTL-specific checking is added.

At times it becomes necessary, for white-box monitoring, to probe the values of particular variables that are either private members of a SystemC module or local to functions. As this is tedious to do manually, Cadence provides a library called wb_lib to make the task easy. The library provides APIs for monitoring local and private variables, which can be accessed from the testbench.
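The general idea behind white-box probing can be sketched in plain C++. This is emphatically not the wb_lib API, which is not shown here. `ProbeRegistry`, `Accumulator` and the probe names are hypothetical, and the module is a plain class standing in for an `SC_MODULE` so the sketch compiles without SystemC. The module registers read-only accessors for its private state under string names, and the testbench reads them by name without the members ever being made public.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical registry (not wb_lib): maps a probe name to a reader function.
class ProbeRegistry {
public:
    void add(const std::string& name, std::function<long()> reader) {
        // Each probe name must be unique.
        bool inserted = probes_.emplace(name, std::move(reader)).second;
        assert(inserted && "duplicate probe name");
        (void)inserted;  // avoid an unused-variable warning when NDEBUG is set
    }
    bool has(const std::string& name) const { return probes_.count(name) != 0; }
    long read(const std::string& name) const { return probes_.at(name)(); }

private:
    std::map<std::string, std::function<long()>> probes_;
};

// Stand-in for a SystemC module with private state of interest.
class Accumulator {
public:
    explicit Accumulator(ProbeRegistry& reg) {
        // Expose private members read-only; the lambdas capture `this`, so the
        // module must outlive any use of these probes.
        reg.add("acc.sum", [this] { return sum_; });
        reg.add("acc.last_scaled", [this] { return last_scaled_; });
    }
    void step(long x) {
        long scaled = x * 2;    // function-local value we want to observe
        last_scaled_ = scaled;  // mirrored into a member so it can be probed
        sum_ += scaled;
    }

private:
    long sum_ = 0;
    long last_scaled_ = 0;
};
```

A testbench would construct the registry, pass it to the module, drive some stimulus, and then check `reg.read("acc.sum")`. Locals are handled here by mirroring them into a member. A real library may use a different mechanism.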

Cadence provides a complete metric-driven methodology, with powerful coverage capabilities, for verifying any system from TLM to RTL. It includes a verification plan editor in which verification items can be identified at each level and then refined as the design moves toward RTL.

Verification starts at the design-decision stage, so more of it is covered early in the design cycle, and it is complemented by finer-grained verification at later stages. Together with reuse of the testbench at all these levels, this shortens the verification time considerably: a 30-50% shorter debug cycle, 2X faster verification turnaround and around 10X faster IP reuse.

The methodology enables broad architectural exploration to achieve higher throughput, lower power consumption and smaller size. It also makes it more efficient to explore the best verification strategies. The detailed presentation is posted at http://www.iscug.in/sites/default/files/ISCUG-2013/ppt/day1/3_5_MultilevelVerification_ISCUG_2013.pdf

Cadence is collaborating closely with its key customers to tailor this methodology to their specific production environments. That's encouraging news!