The good news is that the next five DAC events will take place at the Moscone Center in San Francisco! While going to Las Vegas from the Bay Area is an easy trip, coming from Europe makes it a 24+ hour journey… One obvious consequence was poor attendance on the exhibition floor. But let's stay positive and note that the number of small to mid-size IP vendors has grown again.

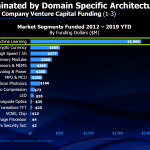

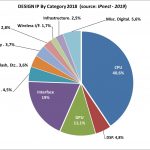

There is no doubt that the future of EDA is IP, which is why it's important to count EDA and IP revenues separately. Doing so shows that the EDA market is still growing, but at the same rate as the semiconductor market, whereas the IP market is growing FASTER than the semi market. IPnest prediction: by 2027-2028, the IP market alone will weigh as much as ALL EDA categories combined (as reported by ESDA)…

Let's move on to the sessions I attended or chaired: two invited-paper sessions and one panel.

“How PAM4 and DSP Enable 112G SerDes Design”

Chair & Organizer: Eric Esteve – IPnest, Marseille, France

I was very proud to chair this session, which featured a very good presentation from Rita Horner (Synopsys) and an excellent one from the vibrant Tony Pialis (Alphawave). I split my time between watching the screen and watching the audience, and I can testify that people were truly fascinated listening to Tony. There is no "best speaker" prize for invited papers, but I would certainly award it to Tony!

He detailed how an analog SerDes works, explaining the various design techniques involved and their associated weaknesses. Don't forget that up to 28 Gbps, SerDes were analog, NRZ-based designs, and they did the job! What made his presentation so lively is that Tony has been designing analog SerDes since the early 2000s, when the state of the art was 2.5 Gbps. It was not a theoretical lesson, but an architect sharing his experience.

The second part of the presentation addressed DSP-based SerDes, showing how SerDes design becomes better and more predictable, since it is no longer as process-sensitive as analog. That's why DSP-based SerDes can now reach 112 Gbps, allowing data centers to support 800G Ethernet (x8 lanes) or chip-to-chip 100G XSR connections.
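For readers less familiar with the NRZ-to-PAM4 transition discussed in both talks, here is a minimal sketch of why PAM4 halves the symbol rate: it maps two bits onto one of four amplitude levels, where NRZ maps one bit onto one of two. The Gray-coded level assignment (-3, -1, +1, +3) is an illustrative textbook choice, not taken from either presentation, and the function names are hypothetical.

```python
# Illustrative sketch: NRZ vs. Gray-coded PAM4 symbol mapping.
# Assumption: levels -3/-1/+1/+3 and this Gray mapping are a textbook choice,
# not the exact mapping used by any product discussed in the session.

# Gray coding: adjacent levels differ by a single bit, so the most likely
# slicer error (picking a neighbouring level) corrupts only one bit.
PAM4_GRAY = {(0, 0): -3, (0, 1): -1, (1, 1): +1, (1, 0): +3}


def nrz_encode(bits):
    """NRZ: one bit per symbol, two levels."""
    return [+1 if b else -1 for b in bits]


def pam4_encode(bits):
    """PAM4: two bits per symbol, four levels."""
    if len(bits) % 2:
        raise ValueError("PAM4 encoding needs an even number of bits")
    return [PAM4_GRAY[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def symbol_rate_gbaud(bit_rate_gbps, bits_per_symbol):
    """Symbol (baud) rate needed to carry a given bit rate."""
    return bit_rate_gbps / bits_per_symbol


if __name__ == "__main__":
    bits = [1, 0, 0, 1, 1, 1, 0, 0]
    print(nrz_encode(bits))   # 8 symbols for 8 bits
    print(pam4_encode(bits))  # 4 symbols for the same 8 bits
    print(symbol_rate_gbaud(28, 1))   # 28 Gbps NRZ lane
    print(symbol_rate_gbaud(112, 2))  # 112 Gbps PAM4 lane
```

The price of packing two bits into each symbol is that the four levels share the same voltage swing, so each of the three PAM4 eyes is roughly one third the height of an NRZ eye. That loss of margin is one reason equalization and data recovery move into the DSP domain at 112G.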

Rita Horner's paper was complementary, as she explained how 56G and 112G PAM4 PHYs can be used to build 400G or 800G Ethernet interconnects at every level of the data center: intra-rack, inter-rack, room-to-room or regional. Thank you, Rita, for making this complex architecture easy to understand for people like me!
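The lane counts behind these 400G and 800G configurations follow from simple division. The sketch below assumes, as a simplification, that a 56G-class PAM4 lane carries about 50 Gb/s of Ethernet payload and a 112G-class lane about 100 Gb/s, the remainder of the nominal rate being coding and FEC overhead; the table and helper name are hypothetical.

```python
import math

# Assumption: approximate Ethernet payload per lane for each nominal SerDes
# class; the nominal 56G/112G rates also include line-coding/FEC overhead.
LANE_PAYLOAD_GBPS = {"56G": 50, "112G": 100}


def lanes_needed(link_gbps, serdes_class):
    """Smallest number of lanes whose combined payload covers the link rate."""
    return math.ceil(link_gbps / LANE_PAYLOAD_GBPS[serdes_class])


if __name__ == "__main__":
    for link in (400, 800):
        for serdes_class in ("56G", "112G"):
            n = lanes_needed(link, serdes_class)
            print(f"{link}G Ethernet over {serdes_class} PAM4: {n} lanes")
```

Under these assumptions, 800G over 112G lanes needs 8 lanes, matching the x8 configuration mentioned for DSP-based SerDes, and doubling the lane rate halves the lane count for a given link speed.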

In conclusion, although the speakers came from two big EDA vendors (Synopsys and Cadence) and one startup (Alphawave), Cadence's acquisition of NuSemi (2017) and Synopsys' acquisition of Silabtech (2018) show that the dynamism of IP startups is key to developing advanced technology like DSP-based PAM4 SerDes and bringing it to the mainstream market!

Wanted: Analog IP Design Methodologies to Catch up with Digital Time-to-market

Chair: Paul Stravers – Synopsys, Inc., Eindhoven, The Netherlands

Co-Chair: Eric Esteve – IPnest

This session was proposed to the DAC IP Committee because SoC development can be penalized by the late integration of analog IP, which takes longer to design than digital functions. To meet SoC time-to-market (TTM) requirements, the chip maker may decide to integrate an older but silicon-proven version of the analog IP. This safe approach can in turn penalize the SoC: larger area, higher power consumption or sub-optimal performance from the old analog IP.

We called for papers showing which new methodologies could remove these barriers and bring SoCs to market with state-of-the-art integrated digital AND analog functions. The session included three invited papers, from STMicro, Movellus and Intento Design.

Stephane Vivien from STMicro shared the lessons learnt from a real industrial case: "How to Resize Imager IP to Improve Productivity". His presentation was not theoretical; it showed the questions to be answered, the tools to be selected and the methodology to be invented to port a specific analog IP, silicon-proven on node n, to a more advanced node (n-1 or n-2). STMicro was satisfied with the new methodology and the selected tools (including ID-Xplore from Intento Design and WiCkeD from MunEDA): using ID-Xplore, the analog IP resizing took 4 weeks instead of 3 months and led to similar or better analog performance.

Jeffrey Fredenburg, co-founder of Movellus, presented "Automated Analog Design from Architecture to Implementation". Because "Analog is always behind", the recently founded start-up Movellus decided to create a new methodology: if you can convert analog components into digitally controlled cells, you can use a digital flow and save time. Jeffrey presented designs ranging from a "400 MHz Digital PLL Oscillator" to a "1.0 to 5.5 GHz PLL targeting GF 14nm", including the characterization results. Apparently, the new methodology works!

To close the session, Ramy Iskander, CEO of Intento Design, introduced the above-mentioned tool, ID-Xplore, a "Cognitive Software for Designing First-Time Right Analog IP". From the first presentation, we already know that this tool works too! Although the presentation was rather theoretical, the conclusion was impressive, as Ramy asserted that "Cognitive EDA will drastically boost design productivity, production quality and time-to-market by at least two order of magnitudes".

The session was successful not only because all the speakers described advanced tools or methodologies, but because they did so in a way that laypeople (like Paul or myself) could clearly understand this complex topic. I must say that attendance was high, and nobody left the room!

Open Source ISAs – Will the IP Industry Find Commercial Success?

Moderator: Eric Dewannain – Samsung Semiconductor, Inc., San Jose, CA

Organizer: Randy Fish – UltraSoC Technologies Ltd., Cambridge, United Kingdom

Panelists:

Jerry Ardizzone – Codasip Ltd., Campbell, CA

Bobe Simovich – Broadcom Corp., San Jose, CA

Emerson Hsiao – Andes Technology Corp., San Jose, CA

Steve Brightfield – Wave Computing, Campbell, CA

Kamakoti Veezhinathan – Indian Institute of Technology Madras, Chennai, India

This panel was well organized: the people invited by Randy were the right ones to discuss the topic, and Eric Dewannain did a great job asking the key questions… But it seems (to me at least) that a 90-minute panel is not the best way to introduce an emerging and interesting topic. Is it too long? Is it because the panelists are there first and foremost to deliver their marketing pitch? Anyway, we must thank the moderator, Eric, for his great work during the panel! No surprise from an engineer who started at Intel (x86 program manager), moved to TI as DSP Marketing Director & GM, then joined Tensilica and Cadence as GM of DSP IP, and is now with Samsung: this guy knows about computer IP!

The DAC 2019 IP sessions I attended were great, and I learnt a lot about complex technologies, from PAM4 SerDes to new methodologies for designing analog IP, to RISC-V. Let's make DAC 2020 in San Francisco, where the IP track will be merged with the Designer track, even better!

Eric Esteve from IPnest