We are in the midst of one of the most transformative periods for data center infrastructure. The explosion of AI, cloud-scale workloads, and hyperscale networking is forcing rapid innovation not only in compute and storage but also in the fabric that connects them. At the recent Hot Chips conference, Pat Fleming, a Senior Principal Engineer at Intel, addressed this topic in a talk on Intel's second-generation Infrastructure Processing Unit (IPU), the E2200.

From CPUs to IPUs: Why Offload Matters

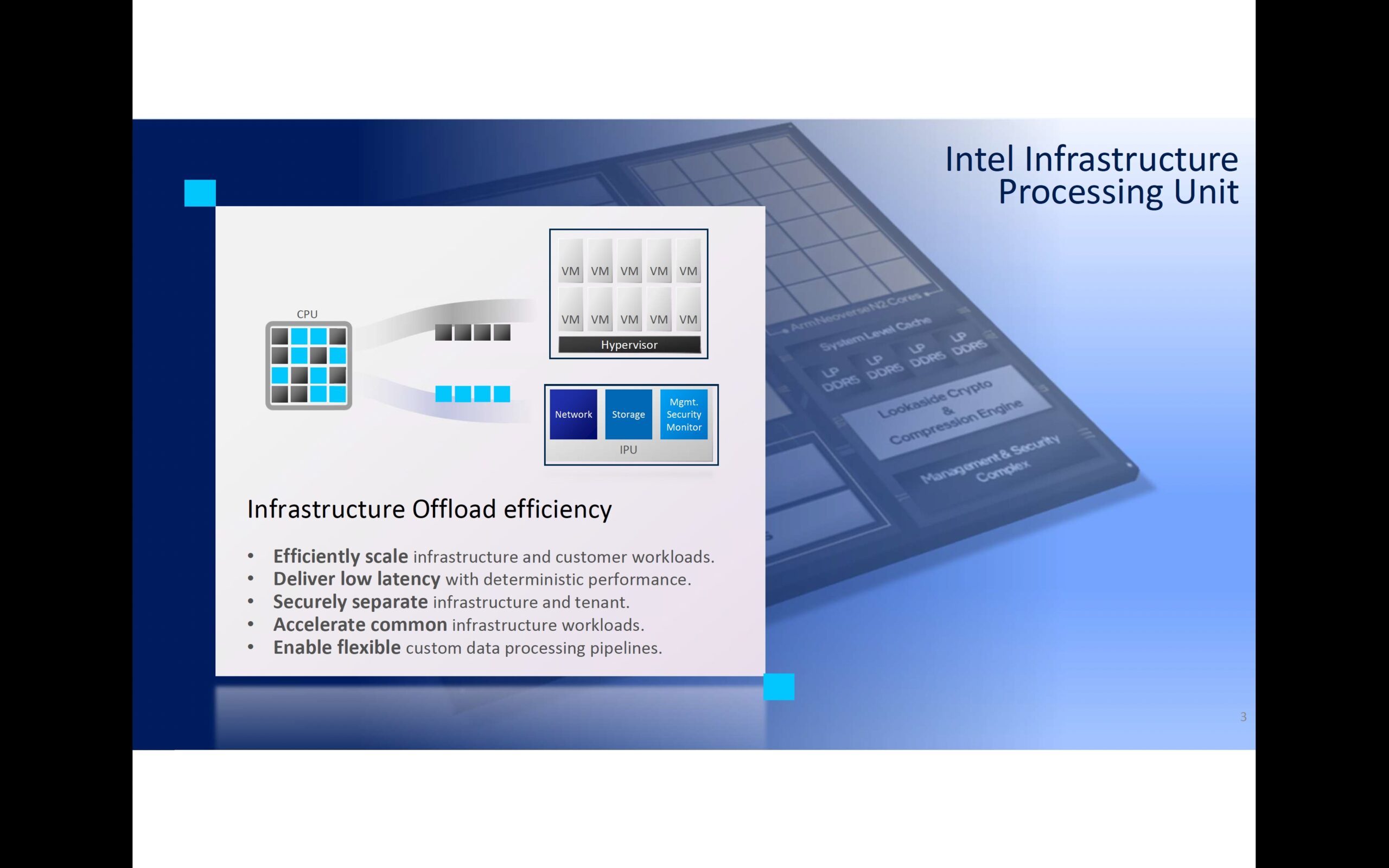

Traditionally, CPUs have shouldered both customer workloads (applications and virtual machines) and infrastructure services such as storage, virtual switching, encryption, and firewalls. As workloads scale, particularly in AI and multi-tenant environments, this model becomes inefficient: infrastructure services consume a growing share of CPU cycles, leaving fewer resources for applications. The IPU addresses this challenge by offloading infrastructure services to a dedicated programmable processor. This separation frees host CPUs for customer workloads, while the IPU handles infrastructure services with higher efficiency, lower latency, and stronger security isolation.

Inside the Intel IPU E2200

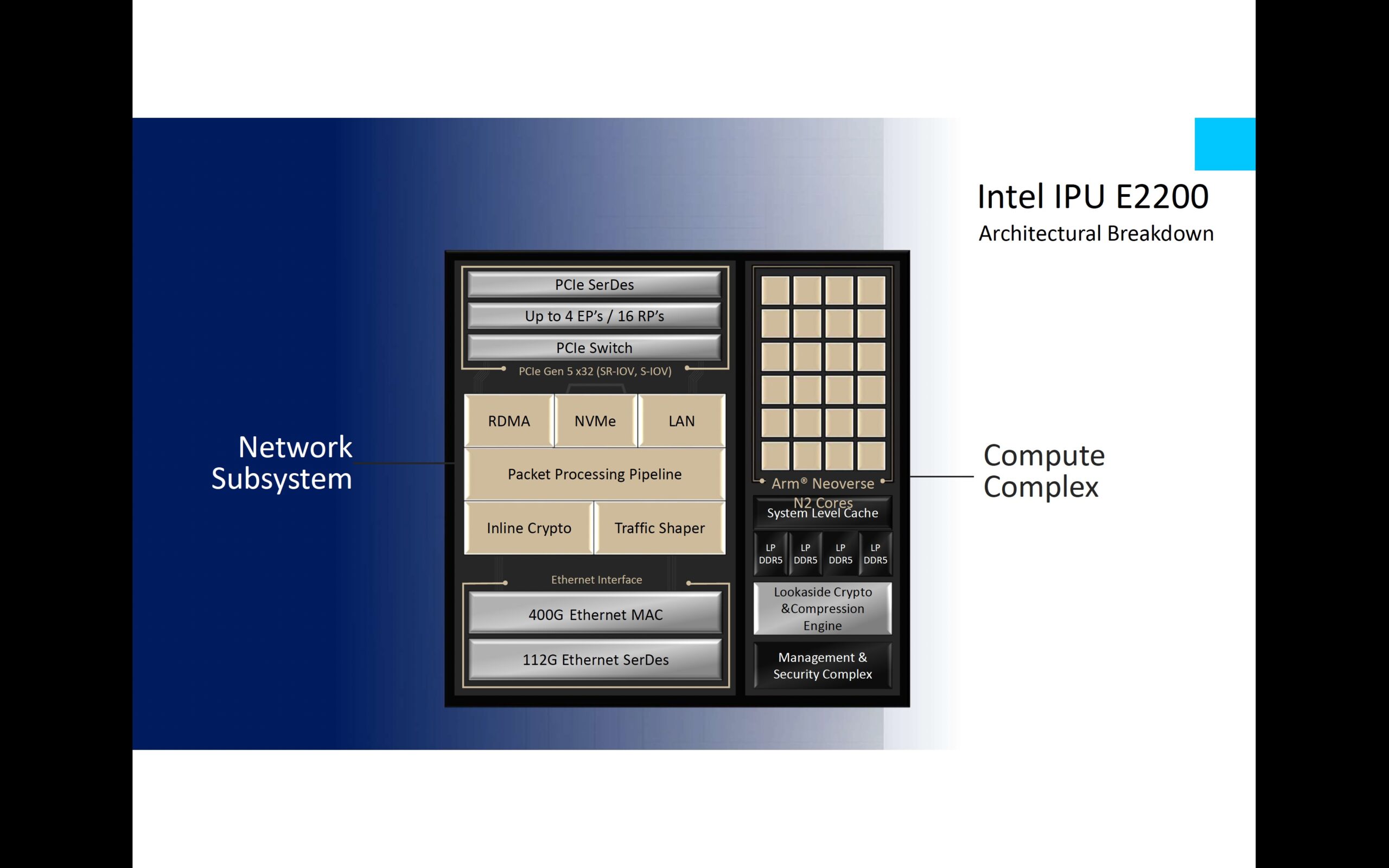

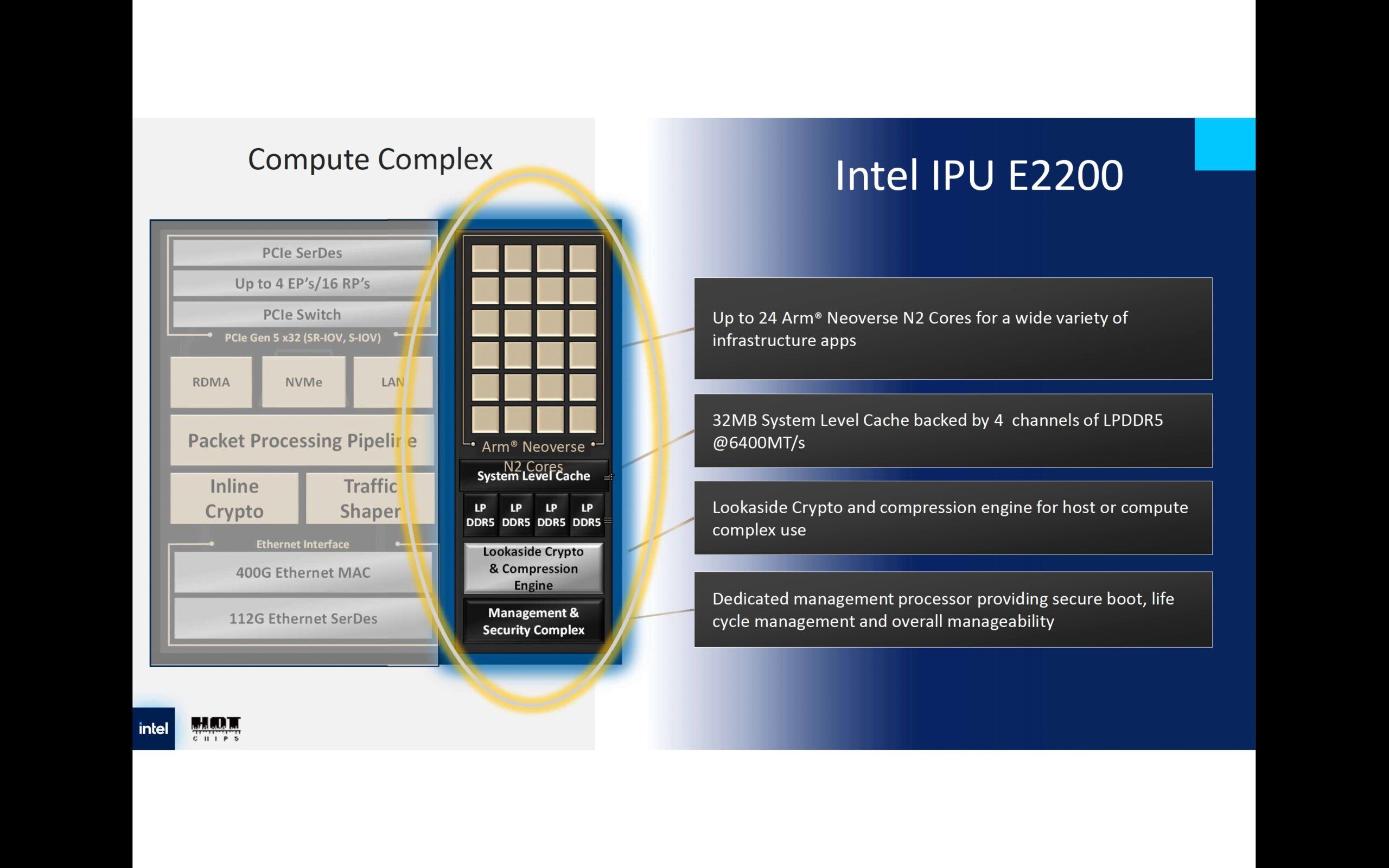

The E2200 builds on the first generation with significant advancements in networking, compute, memory, and programmability. It delivers up to 400 gigabits per second of networking throughput—doubling the performance of its predecessor—while incorporating up to 24 Arm® Neoverse N2 cores, a 32-megabyte system-level cache, and four channels of LPDDR5 memory operating at 6400 MT/s.
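To put the 400 Gb/s figure in perspective, the short Python sketch below computes the worst-case packet rate and per-packet time budget at 200 Gb/s (implied by the "doubling" claim) and 400 Gb/s. It assumes minimum-size 64-byte Ethernet frames plus the standard 20 bytes of preamble, start-of-frame delimiter, and inter-frame gap; the results are theoretical line-rate numbers, not measured E2200 performance.

```python
# Ethernet adds 20 bytes per frame on the wire beyond the frame itself:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def max_frame_rate(line_rate_bps: float, frame_bytes: int) -> float:
    """Theoretical maximum frames per second at a given line rate."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return line_rate_bps / bits_per_frame


for gbps in (200, 400):  # implied predecessor rate vs. E2200 rate
    fps = max_frame_rate(gbps * 1e9, 64)  # 64 bytes = minimum Ethernet frame size
    print(f"{gbps} Gb/s: {fps / 1e6:.1f} Mpps, {1e9 / fps:.2f} ns per frame")
```

At 400 Gb/s this works out to roughly 595 million minimum-size frames per second, or about 1.68 ns per frame, which is why per-packet work at this rate has to happen in a hardware pipeline rather than on general-purpose cores.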

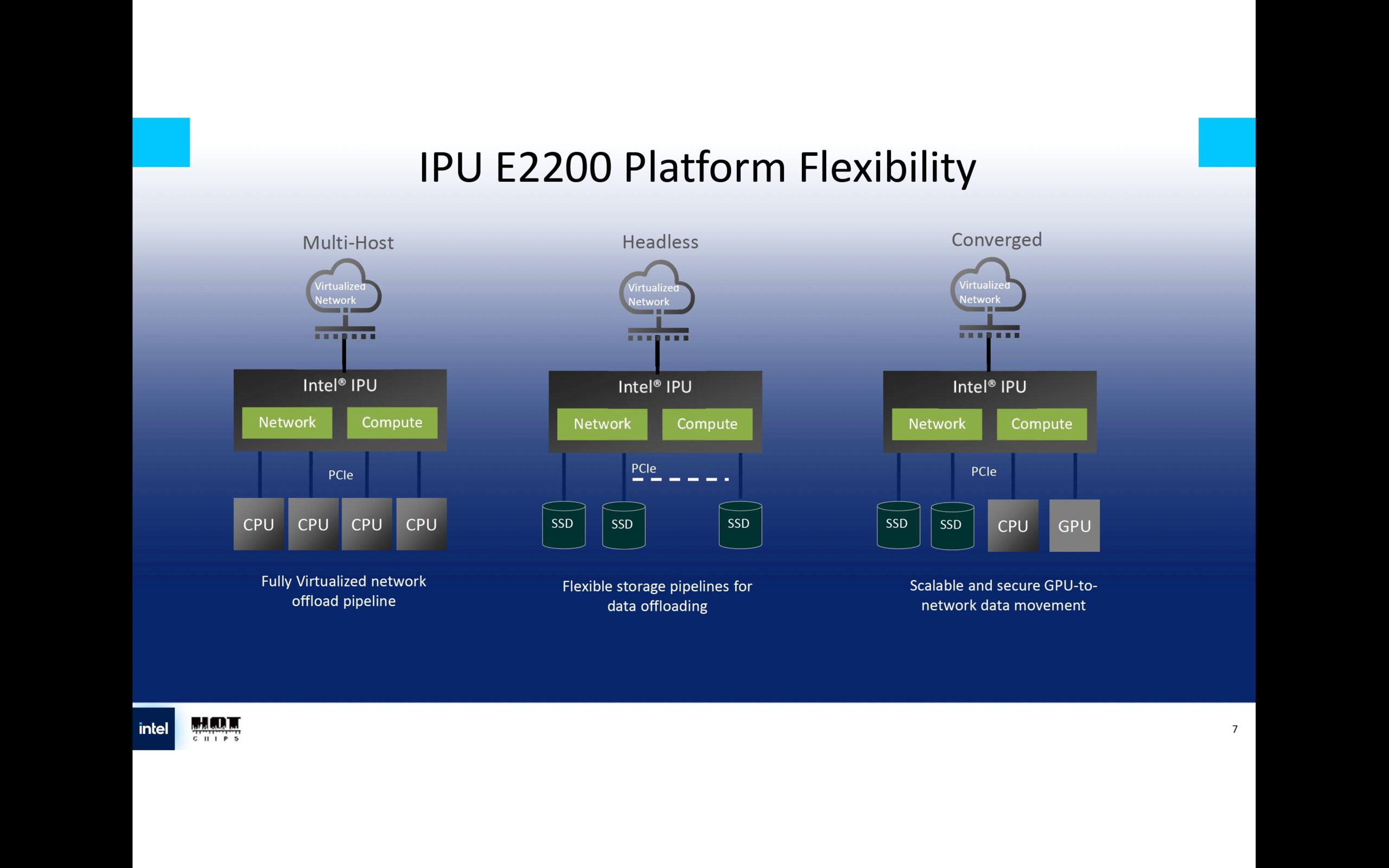

The platform supports PCIe Gen 5 x32 with SR-IOV and S-IOV, enabling up to four endpoints or sixteen root ports. This level of configurability makes it adaptable to diverse deployment scenarios. A defining characteristic of the E2200 is its tightly integrated compute and networking subsystems, linked through the shared cache and accelerators. This design allows the device to deliver custom, high-performance data pipelines across workloads, reinforcing its role as a programmable platform that can evolve with data center demands.
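For a rough sense of host-side headroom, the Python sketch below computes per-direction PCIe Gen 5 bandwidth from the 32 GT/s lane rate and 128b/130b encoding. It ignores transaction- and link-layer packet overhead, so real payload throughput is lower; the x8 and x16 rows are reference points only, since the talk's exact lane split across endpoints is not assumed here.

```python
# PCIe Gen 5 signals at 32 GT/s per lane and, like Gen 3 and Gen 4, uses
# 128b/130b line encoding (128 payload bits per 130 transmitted bits).
GEN5_TRANSFERS_PER_S = 32e9
ENCODING_EFFICIENCY = 128 / 130


def gen5_bandwidth_gbps(lanes: int) -> float:
    """Per-direction bandwidth in Gb/s after line encoding.

    Transaction- and link-layer packet overhead is ignored, so usable
    payload throughput will be lower than this figure.
    """
    return GEN5_TRANSFERS_PER_S * lanes * ENCODING_EFFICIENCY / 1e9


for lanes in (8, 16, 32):
    gbps = gen5_bandwidth_gbps(lanes)
    print(f"x{lanes}: {gbps:.1f} Gb/s ({gbps / 8:.1f} GB/s) per direction")
```

Across all 32 lanes this comes to about 1 Tb/s per direction, comfortably above the 400 Gb/s network rate even after protocol overhead, whereas a single x8 link (about 252 Gb/s) could not keep up with the network on its own.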

Programmability at Scale

At the heart of the E2200 lies a P4-programmable packet pipeline that provides flexibility for a wide range of use cases. The FXP packet processor handles millions of flows with deterministic latency and can be configured for functions such as exact match, wildcard match, longest prefix match, and packet editing. Inline cryptography supports the IPsec and PSP protocols at line rate, with AES-GCM, AES-GMAC, AES-CMAC, and ChaCha20-Poly1305.
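The three match kinds differ in how a lookup key is compared against table entries. The Python sketch below illustrates only those semantics in software, using hypothetical table contents and action names; it is not a model of how the FXP hardware stores or searches its tables.

```python
import ipaddress
from typing import Optional

# Hypothetical table contents and action names, chosen only for illustration.
EXACT_TABLE = {("10.0.0.5", 443): "to_vm_7"}

# Wildcard (ternary) entries: (value, mask, priority, action).
# Bits where the mask is 0 are "don't care"; the highest-priority hit wins.
TERNARY_TABLE = [
    (0x0A000000, 0xFF000000, 10, "internal"),  # 10.x.x.x
    (0x00000000, 0x00000000, 0, "default"),    # matches everything
]

# Longest prefix match: the most specific covering prefix wins.
LPM_TABLE = {
    ipaddress.ip_network("10.0.0.0/8"): "spine",
    ipaddress.ip_network("10.1.0.0/16"): "pod_1",
    ipaddress.ip_network("10.1.2.0/24"): "rack_12",
}


def exact_match(dst: str, port: int) -> Optional[str]:
    """Every key field must be equal, so a hash lookup is enough."""
    return EXACT_TABLE.get((dst, port))


def ternary_match(dst: str) -> Optional[str]:
    """Compare only the bits selected by each entry's mask."""
    key = int(ipaddress.ip_address(dst))
    hits = [(prio, action) for value, mask, prio, action in TERNARY_TABLE
            if (key & mask) == (value & mask)]
    return max(hits, key=lambda hit: hit[0])[1] if hits else None


def lpm_match(dst: str) -> Optional[str]:
    """Among all prefixes containing the address, pick the longest one."""
    addr = ipaddress.ip_address(dst)
    matches = [(net.prefixlen, action) for net, action in LPM_TABLE.items() if addr in net]
    return max(matches, key=lambda m: m[0])[1] if matches else None
```

For example, `10.1.2.9` is covered by all three prefixes, and `lpm_match` returns `rack_12` because the /24 entry is the most specific; `ternary_match("8.8.8.8")` falls through to the match-all `default` entry.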

Networking is built on 112G Ethernet SerDes and a 400G Ethernet MAC, while the transport subsystem implements Falcon, the reliable hardware transport that Google developed and contributed to the Open Compute Project. Falcon supports RDMA and RoCE with tail-latency optimization, hardware congestion control, selective acknowledgment, and multipath capabilities. Traffic shaping is handled by a timing wheel algorithm that provides accurate rate enforcement and supports Data Center Bridging (DCB) arbitration mode, ensuring predictable low-latency performance at scale.
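A timing wheel is a ring of time buckets: each packet is dropped into the bucket matching its earliest allowed departure time, so scheduling costs O(1) per packet instead of maintaining a sorted queue. The Python sketch below is a toy, single-level wheel that paces each flow to a configured rate; the class name, slot size, and per-flow bookkeeping are assumptions for illustration, and it does not model the E2200's hardware shaper or its DCB arbitration.

```python
from collections import deque


class TimingWheelShaper:
    """Toy single-level timing-wheel rate shaper (illustrative, not Intel's design).

    The wheel is a ring of `num_slots` buckets, each covering `slot_ns`
    nanoseconds of time.
    """

    def __init__(self, num_slots: int, slot_ns: int):
        self.slots = [deque() for _ in range(num_slots)]
        self.slot_ns = slot_ns
        self.tick = 0            # absolute index of the slot currently being served
        self.next_free_ns = {}   # flow id -> earliest time the flow may send again

    def enqueue(self, flow: str, size_bytes: int, rate_bps: float) -> int:
        """Schedule one packet for `flow` shaped to `rate_bps`; return its departure time."""
        now_ns = self.tick * self.slot_ns
        depart_ns = max(now_ns, self.next_free_ns.get(flow, 0))
        # The flow may not send again until this packet's serialization time at
        # the configured rate has elapsed; this is what enforces the rate.
        self.next_free_ns[flow] = depart_ns + round(size_bytes * 8 * 1e9 / rate_bps)
        target_tick = depart_ns // self.slot_ns
        if target_tick - self.tick >= len(self.slots):
            raise OverflowError("departure time is beyond the wheel's horizon")
        self.slots[target_tick % len(self.slots)].append((flow, size_bytes, depart_ns))
        return depart_ns

    def advance(self, until_ns: int) -> list:
        """Rotate the wheel up to `until_ns`, releasing every packet that is due."""
        released = []
        while self.tick * self.slot_ns <= until_ns:
            bucket = self.slots[self.tick % len(self.slots)]
            while bucket:
                released.append(bucket.popleft())
            self.tick += 1
        return released


wheel = TimingWheelShaper(num_slots=64, slot_ns=1000)
departures = [wheel.enqueue("tenant_a", 1500, 1e9) for _ in range(3)]  # 1 Gb/s flow
print(departures)
```

Three 1500-byte packets shaped to 1 Gb/s are spaced 12 µs apart (12,000 bits at 1 bit/ns), and `advance` releases each one only when the wheel reaches its slot. Rejecting departures beyond the horizon keeps every bucket limited to a single rotation; real shapers typically handle longer delays with hierarchical wheels.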

Compression and Crypto Capabilities

The E2200 incorporates a lookaside crypto and compression engine (LCE) that extends capabilities beyond the inline dataplane encryption. The LCE supports AES, SHA-1/2/3 with HMAC, RSA, Diffie-Hellman, DSA, ECDH, and ECDSA, as well as compression algorithms including Zstandard, Snappy, and Deflate. Importantly, it features a “Compress + Verify” function that guarantees data recoverability after transforms, a critical requirement for storage workloads.
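The talk did not detail how the LCE performs its verification. As a software analogy only, the Python sketch below applies the same idea with the standard-library `zlib` module (Deflate): compress, immediately decompress, and refuse to return the result unless it reproduces the input exactly. The function name and default level are assumptions; Zstandard and Snappy would need third-party packages, so they are not shown.

```python
import zlib


def compress_and_verify(data: bytes, level: int = 6) -> bytes:
    """Deflate-compress `data`, then check that the result round-trips before returning it."""
    compressed = zlib.compress(data, level)
    # Verify step: decompress right away and compare with the input, so a faulty
    # transform is caught while the original data is still available.
    if zlib.decompress(compressed) != data:
        raise ValueError("compression verification failed; keep the uncompressed data")
    return compressed


payload = b"order_id,status\n" + b"1042,shipped\n" * 5000
blob = compress_and_verify(payload)
print(f"{len(payload)} -> {len(blob)} bytes")
```

The design point is ordering: verification happens before the compressed copy replaces the original, which is what makes the transform safe for storage writes.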

Intel claims that its compression technology leads the industry in both throughput and compression ratio, particularly for database and storage workloads. In addition, the E2200 supports four times as many security associations as its predecessor, made possible by its memory scalability, so many more flows or tenants can be secured with their own keys.

Why Arm Cores?

A question on many people's minds is why Intel chose Arm cores for the E2200 instead of its own x86 CPUs. Fleming explained that the decision was driven by performance, programmability, and time-to-market considerations rather than brand loyalty. For this generation, Arm Neoverse N2 cores proved to be the most suitable option; future products may adopt different architectures depending on requirements.

Deployment Flexibility

The E2200’s architecture is designed to be versatile across different deployment models. It can operate in headless mode, functioning as a standalone switch and offload device, or in multi-host mode, where it securely shares infrastructure across multiple tenants. It also plays a crucial role in AI clusters by providing low-latency, reliable transport between GPUs, storage, and the broader network fabric. Storage acceleration is another major use case, with the IPU handling compression, encryption, and data movement at scale.

Summary

The Intel IPU E2200 is designed to accelerate the industry’s transition toward programmable, isolated, and efficient infrastructure. By separating customer workloads from infrastructure services, enabling advanced programmable pipelines, and integrating leading-edge compression and cryptography, the E2200 positions itself as a cornerstone for next-generation data centers.

Hyperscalers often lead with custom innovations that later filter down to standards bodies and broader adoption. With the E2200, Intel aims to bring that innovation forward, ensuring that programmable infrastructure becomes the norm in meeting the explosive demands of AI, storage, and virtualized services.
