Steven Woo, Fellow and Distinguished Inventor at Rambus, gave a talk on AI in the Era of Connectivity at the just-concluded Linley Spring Processor Conference. As he put it, the world is becoming increasingly connected, and the resulting surge of digital data has made us dependent on that data. Digital data and AI are feeding off each other: more data enables better AI, and better AI creates demand for more data. Consequently, architectures are evolving to capture, secure, move, and process the growing volume of digital data more efficiently.

Data centers are evolving, and data processing is moving to the edge. Data is increasingly valuable, sometimes more so than the infrastructure itself, so securing it is essential. Power efficiency is also a key consideration. The way data is captured, processed and moved is going through an interesting evolution, or even a revolution. AI techniques have been around for decades, so why the sudden resurgence of interest? Faster compute and memory, along with large training sets, have enabled modern AI. With transistor feature size limits being reached, and with performance demands rising alongside energy efficiency mandates, new approaches are clearly needed and are indeed emerging.

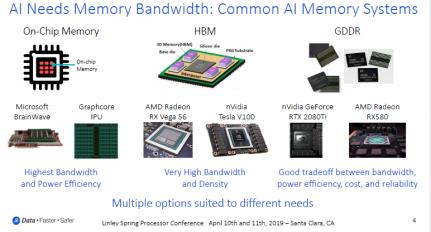

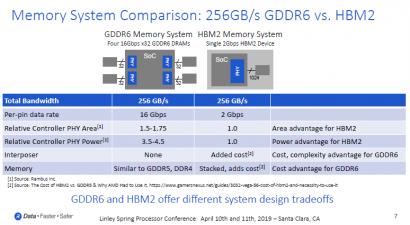

AI based on convolutional neural networks (CNNs) is taking off because its accuracy keeps increasing as the data and the model size grow. To support this evolution, Domain Specific Architectures (DSAs) have emerged: specialized processors targeted at specific tasks rather than general-purpose compute. Memory is critical in these systems, and the options range from on-chip memory to High Bandwidth Memory (HBM) and GDDR. On-chip memory provides the highest bandwidth and power efficiency, HBM offers very high bandwidth and density, and GDDR sits in the middle with a good trade-off between bandwidth, power efficiency, cost and reliability.

With data growing in value, security is challenged by increasingly sophisticated intrusion attempts and exploitation of vulnerabilities. The attack surface is also growing due to infrastructure diversity, pervasiveness and the variety of user interaction, with the spectre of a meltdown foreshadowing, pun notwithstanding, more havoc to come.

Rambus has a new approach called Siloed Execution, which improves security by using physically distinct CPUs to separate secure operations from those that require fast performance. The security CPU can be simplified and hardened to protect secret keys, secure assets and apps, and privileged data, and it remains uncompromised even if the general-purpose CPU is hacked. Rambus offers such a secure CPU, the CryptoManager Root of Trust, which provides secure boot, authentication, run-time integrity and a key vault. It includes a custom RISC-V CPU, secure memory and crypto accelerators such as AES and SHA. With a secure CPU integrated on the chip, you can monitor run-time integrity in real time and make software or hardware adjustments as needed.
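To make the secure boot and run-time integrity idea concrete, here is a minimal sketch of a measurement check: hash an image with SHA-256 and compare it against a trusted reference digest. The function names `measure` and `verify_image` are hypothetical and are not taken from any Rambus product; a real root of trust would do this in dedicated hardware with keys and digests held in secure memory.

```python
import hashlib
import hmac


def measure(image: bytes) -> bytes:
    """Return the SHA-256 digest ("measurement") of a firmware or code image."""
    return hashlib.sha256(image).digest()


def verify_image(image: bytes, reference_digest: bytes) -> bool:
    """Check an image against a trusted reference digest.

    hmac.compare_digest performs a constant-time comparison, so the check
    does not leak how many leading bytes matched.
    """
    return hmac.compare_digest(measure(image), reference_digest)


if __name__ == "__main__":
    firmware = b"example boot image"      # placeholder contents (assumption)
    golden = measure(firmware)            # digest recorded at provisioning time
    print(verify_image(firmware, golden))              # unmodified image
    print(verify_image(firmware + b"\x00", golden))    # tampered image
```

Running the same check periodically against code already loaded in memory, rather than only at boot, is one simple way to picture run-time integrity monitoring.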

This helps AI infrastructure by allowing shared neural network hardware in the cloud to be used by multiple users. Each user can encrypt their training sets and even their models, and the security CPU manages a different set of keys to decrypt that information for each user. Rambus' CryptoManager Root of Trust would allow a multi-root capability: one key decrypts the first user's data and gives access to the model parameters for training and inference, then a second user can have their data decrypted with a separate set of keys and run on the same hardware.
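The per-user key separation described above can be sketched as a toy model. Everything here is an assumption made for illustration: the class `SecurityCpuModel`, its methods, and the blob layout are hypothetical, and a SHA-256 counter-mode keystream stands in for a real cipher such as AES only because the Python standard library has none. It is not how CryptoManager works and it is not safe for real use. The point is only that keys never leave the model and that data sealed for one tenant cannot be opened with another tenant's key.

```python
import hashlib
import hmac
import secrets


class SecurityCpuModel:
    """Toy model of a security CPU holding one secret key per tenant."""

    def __init__(self):
        self._keys = {}  # tenant id -> key; never handed back to callers

    def enroll(self, tenant: str) -> None:
        self._keys[tenant] = secrets.token_bytes(32)

    @staticmethod
    def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
        # Illustrative only: SHA-256 in counter mode as a stand-in for AES.
        out = bytearray()
        counter = 0
        while len(out) < length:
            block = key + nonce + counter.to_bytes(8, "big")
            out += hashlib.sha256(block).digest()
            counter += 1
        return bytes(out[:length])

    def seal(self, tenant: str, plaintext: bytes) -> bytes:
        """Encrypt and tag data under the tenant's key: nonce | tag | body."""
        key = self._keys[tenant]
        nonce = secrets.token_bytes(16)
        stream = self._keystream(key, nonce, len(plaintext))
        body = bytes(a ^ b for a, b in zip(plaintext, stream))
        tag = hmac.new(key, nonce + body, hashlib.sha256).digest()
        return nonce + tag + body

    def open(self, tenant: str, blob: bytes) -> bytes:
        """Verify the tag with the tenant's key, then decrypt."""
        key = self._keys[tenant]
        nonce, tag, body = blob[:16], blob[16:48], blob[48:]
        expected = hmac.new(key, nonce + body, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            raise PermissionError("blob was not sealed for this tenant")
        stream = self._keystream(key, nonce, len(body))
        return bytes(a ^ b for a, b in zip(body, stream))


if __name__ == "__main__":
    cpu = SecurityCpuModel()
    cpu.enroll("user_a")
    cpu.enroll("user_b")
    blob = cpu.seal("user_a", b"model parameters")
    print(cpu.open("user_a", blob))
    try:
        cpu.open("user_b", blob)
    except PermissionError as err:
        print("rejected:", err)
```

In this sketch the accelerator side only ever sees sealed blobs and whatever plaintext the security CPU chooses to release, which mirrors the separation of duties in the siloed approach.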

On the memory side, a wide range of solutions is available, each appropriate for some applications, with no one size fitting all. On the security side, the data itself is becoming in some ways more valuable than the infrastructure. It is important to secure not only the infrastructure but also the training data and models, as they can be your competitive advantage. Over time, users will need simpler ways to describe their models, with compilers transforming those descriptions into something that would be hard for the user to write directly but that runs extremely well on hardware. Users will increasingly need to know how to describe the job and less about how neural networks work, and software will handle the mapping onto whatever the latest available hardware provides.

Dr. Woo stressed that AI is, in a sense, driving a computer architecture renaissance. Memory systems now offer a range of options for AI across data centers, edge computing and endpoints. As the value of data increases and security challenges grow, security by design is imperative, since complexity and data volumes show no sign of slowing down. If you get AI, then get security, and get going with functional integration and task separation, all in one AIdea. Sounds like a good name, get the AIdea?
