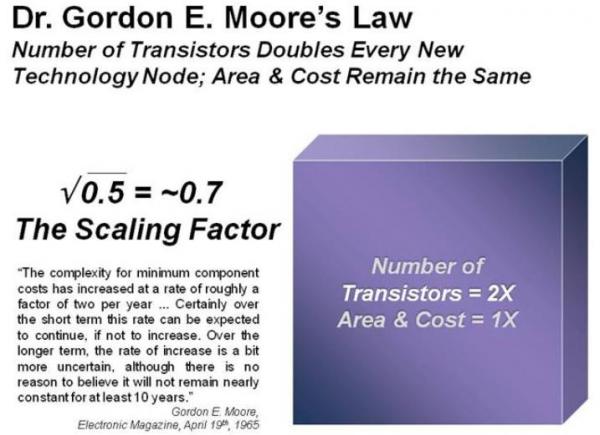

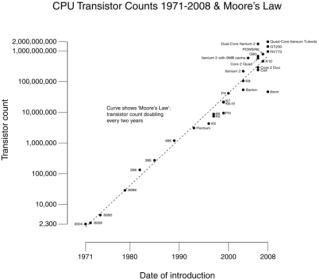

Moore slightly altered the formulation of the law over time, which bolstered the perceived accuracy of Moore’s law in retrospect. Most notably, in 1975 Moore revised his original 1965 projection of a doubling every year to a doubling every two years. Despite a popular misconception, he is adamant that he never predicted a doubling “every 18 months”.
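The difference between “every two years” and “every 18 months” sounds small, but it compounds quickly. Here is a minimal Python sketch of the arithmetic: a count that doubles every T years grows by a factor of 2^(t/T) after t years. The function name `transistor_growth` and the ten-year horizon are illustrative choices of mine, not anything from Moore or Wally.

```python
def transistor_growth(years: float, doubling_period_years: float) -> float:
    """Growth factor after `years` if the count doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Compare Moore's actual 1975 formulation (2 years) with the popular
    # misquote (18 months) over an illustrative ten-year span.
    for period in (2.0, 1.5):
        factor = transistor_growth(10, period)
        print(f"doubling every {period} years -> {factor:.1f}x over a decade")
```

Over a decade, doubling every two years gives a 32x increase, while doubling every 18 months gives roughly 100x, so the two formulations diverge by about a factor of three in just ten years.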

So clearly Moore’s law is more of an observation than a law. Wally Rhines, Mentor Graphics CEO and my favorite presenter, certainly thinks so. In his presentation at the U2U conference (which I mentioned in my previous blog), Wally argued that Moore’s empirical observation has been a useful approximation for the past 40 years because of a basic law of nature: “the learning curve”.

By definition, a learning curve is a graphical representation of the changing rate of learning for a given activity or process. Typically, retention of information increases most sharply after the first few attempts and then gradually levels off, meaning that less and less new information is retained with each repetition. A learning curve can also show at a glance how difficult something is to learn initially and, to an extent, how much remains to be learned after initial familiarity.
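The shape described above can be illustrated with a simple saturating-exponential model, R(n) = 1 − e^(−kn), where R(n) is the fraction of material retained after n repetitions. This model and the rate constant k = 0.5 are my own illustrative assumptions, not something taken from Wally’s presentation; they simply reproduce the qualitative behavior of big gains on the first repetitions followed by diminishing returns.

```python
import math


def retained(n: int, k: float = 0.5) -> float:
    """Fraction of material retained after n repetitions (hypothetical model)."""
    return 1.0 - math.exp(-k * n)


def gain_per_repetition(n: int, k: float = 0.5) -> float:
    """New information retained on the n-th repetition alone."""
    return retained(n, k) - retained(n - 1, k)


if __name__ == "__main__":
    # Total retention climbs toward 1.0 while the per-repetition gain shrinks.
    for n in range(1, 6):
        print(f"repetition {n}: total {retained(n):.2f}, gain {gain_per_repetition(n):.2f}")
```

Any curve that rises quickly and then flattens would illustrate the same point; the exponential form is just the simplest one to write down.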

Amazingly, the presentation is seventy pages long and packed with an incredible amount of data, yet you will never see a better data delivery system than Wally Rhines. It would be impossible to do a Wally presentation justice in a 500-word blog, but here is what I learned that day:

For perspective, consider:

- 100+ billion galaxies in the observable universe

- 100+ billion stars in the Milky Way galaxy

- 100+ billion transistors on a chip by 2025

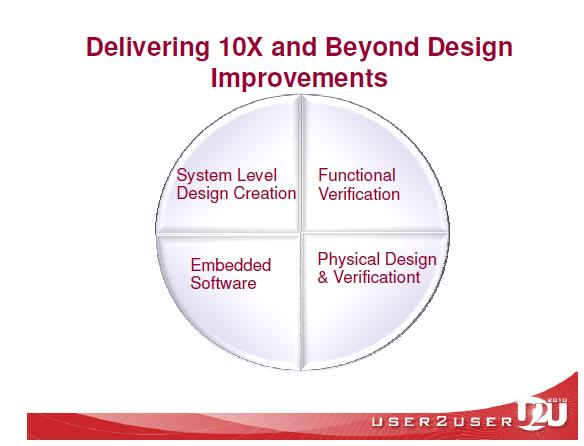

Delivering design improvements of 10X and beyond will require advances in:

- System Level Design

- Functional Verification

- Embedded Software

- Physical Design and Verification

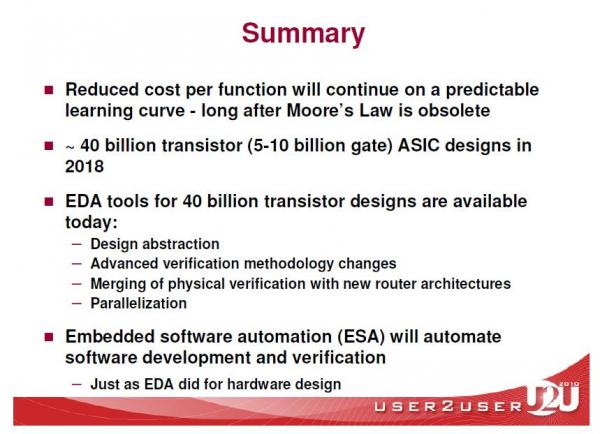

A new level of abstraction will be required for billions of transistors, hundreds of millions of gates, millions of lines of RTL, and hundreds of lines of TLM or C-based code. I remember the transition from schematic-based design to language-based design (synthesis); it was a bloody battle for sure. The poor ESL guys never had a chance! Expect nothing less for System Level Design.

Verification is falling further behind and will need exponential growth in speed and capability to keep pace. Redundant verification must be eliminated. Emulation with transaction-level testbenches and a mix of dynamic and formal verification will be required. Verification is absolutely one of the big challenges I see at the foundries.

Physical Design and Verification will continue to see new routing architectures every two to three process nodes, parallel optimization, and fully parallelized routing. Place and route will be merged with verification to reduce or eliminate ECO routing iterations. This will obviously be a big win for Mentor, since they own the physical verification market and are trying to move up the physical design chain.

Embedded Software is a hot topic for Wally and, of course, an area where Mentor has made significant investments. According to Wally, most of the escalating SoC design cost can be attributed to software development, whose cost will far outpace that of hardware development.

Wally is my favorite EDA CEO. He is humble, brilliant, personable, and a great speaker. It is no coincidence that Mentor Graphics is also my favorite EDA company, since it mirrors Wally. Why Dr. Walden C. Rhines does not have his own wiki page I do not know (too humble?). Rumor has it I may get lunch with Wally soon, which will certainly reinforce the notion that I blog for food.
