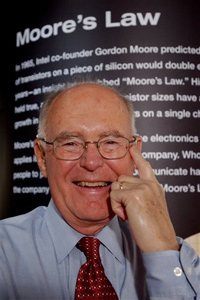

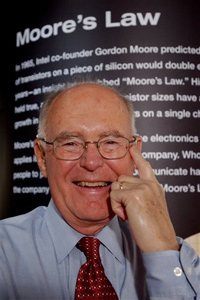

I recently read a news article where the author referred to Moore’s Law as a ‘Law of Science discovered by an Intel engineer’. Readers of SemiWiki would call that Dilbertesque. Gordon Moore was Director of R&D at Fairchild Semiconductor in 1965 when he published his now-famous paper on integrated electronic density trends. The paper doesn’t refer to a law, but rather to the lack of any fundamental restrictions to doubling component density on an annual basis (at least through 1975). In 1975 he revised the trend to a doubling roughly every two years; the often-quoted doubling of performance every 18 months is usually attributed to Intel’s David House. [The term “Moore’s Law” was coined by Carver Mead in 1970.] As co-founder and President of Intel Corporation, Gordon Moore led what is now a 40+ year continuation of that trend.

See “Cramming more components onto integrated circuits”, Electronics Magazine, 1965
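To get a feel for how much the doubling period matters, here is a minimal sketch that compounds a trend over the ten years from 1965 to 1975. The function name `growth_factor` is my own, and the 24-month case is an assumption added for comparison (it is the commonly quoted two-year version); the 12-month and 18-month periods are the ones mentioned above.

```python
def growth_factor(years: float, doubling_period_months: float) -> float:
    """Return the multiplicative growth after `years` for a quantity
    that doubles once every `doubling_period_months`."""
    doublings = years * 12.0 / doubling_period_months
    return 2.0 ** doublings


if __name__ == "__main__":
    # 12 and 18 months come from the text; 24 months is an added comparison.
    for months in (12, 18, 24):
        factor = growth_factor(10, months)
        print(f"doubling every {months} months for 10 years: x{factor:,.0f}")
```

Annual doubling for a decade is ten doublings, or about a thousandfold; stretching the period to two years gives only five doublings over the same span.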

It wasn’t a law of science that drove the semiconductor industry to continuously shrink lithography. At one level it was economics. The market needed new computers with faster processors to keep up with (slower) software, i.e., the Wintel duopoly. As software evolved to enable new applications, computers got faster, cheaper and easier to use. It was an economic win-win for Intel, Silicon Valley, Seattle and America.

At another level, solving difficult problems is in our nature. The physics, chemistry, design and manufacturing challenges presented by the International Technology Roadmap for Semiconductors continue to be met. Moore’s Law is but one phase of an exponential trend that extends back to Gutenberg’s printing press. In fields ranging from computing and communications to geoscience and biotechnology, the growth of technology is not merely following Moore’s Law, it is accelerating.

See Ray Kurzweil’s Law of Accelerating Returns

In the last decade the economic driver has changed – Gigahertz have given way to Nanowatts – to enable portability. And the number of personal computing devices has exploded. Smartphones and tablet computers are audio/video portals connected to a cloud of near-infinite compute cycles and storage. Moore’s Law has made a leap onto a new curve. Here are some of my personal experiences with Moore’s Law.

My Life with Moore’s Law

In 1965 I had not heard of Gordon Moore or Fairchild Semiconductor, but I did learn how to use a slide rule. I owned several in middle and high school, and I started college with the Post Versalog, a high school academic award I still have. In my sophomore year my roommate purchased an HP-35, the first consumer-oriented scientific calculator. It used Reverse Polish Notation, which seemed cool then, though likely politically incorrect today. A slide rule and a CRC were no match for the HP-35, but it cost >$1000 in today’s money. My first scientific calculator was the TI-30 from Texas Instruments. It was priced to obsolete the slide rule, and it did.

The computational analysis for my Ph.D. thesis was done on the school’s CDC Cyber 70, a screaming 25MHz 60b mainframe with 256KB of core memory. I created Fortran 77 code one 72-character line at a time on the IBM 029 keypunch. I carried my program decks in a shoebox with rubber bands around the subroutines. When I started my career at Texas Instruments, these shoeboxes were a common sight. I have a tinge of regret for tossing mine. Coincidentally, my first exposure to circuit design was working with the P-MOS logic group that designed TI’s calculator ICs. In 1982 I was developing TI’s first standard cell library. The CAD system was a TI 16b mini-computer and an IBM 2311 50MB disk. I carried the platter tray around the TI campus like a cake-sized pen drive.

In 1984, I was managing TI’s first ASIC design center in Santa Clara. The center had Daisy Logicians and Mentor Apollo DN workstations. One of my ASIC customers used the Mentor system (based on a Motorola 68K) to design the first 68030 Mac, the Macintosh IIfx. In our industry, we use today’s computers to design tomorrow’s.

We uploaded design files to Dallas for mask generation using a dedicated 9600-baud modem. At home, my connection to the Internet evolved from 12Kbps dial-up to 56Kbps ISDN to 1.5Mbps DSL and now 18Mbps cable. If the cable goes down I get free Wi-Fi at ~2Mbps (a benefit of living in GoogleTown). Inside the home I stream at up to 600Mbps on 802.11n and will upgrade someday to 802.11ad (60GHz band, up to ~7Gbps).

I have used a succession of Intel-based personal computers based on the 8086, 286, 386, 486, 586 (which Intel renamed Pentium), Pentium II, Pentium III, Pentium M, Centrino and Core Duo. My first portable computer was a Compaq III that I lugged around the US and overseas visiting customers for Actel.

The End of Moore’s Law?

When I joined the IEEE forty years ago, I was characterizing transistor S-Parameters as an intern at Collins Radio. One transistor in one package. Today the latest embedded flash technology supports 128GB (8 die stacked into one package).

The application processor in today’s smartphones costs less than a fast food lunch did in 1970, adjusted for inflation, and delivers ~5000 MIPS. The Cyber 70 I used in graduate school delivered <1 MIPS and cost maybe $50M in 2012 dollars. That is twelve orders of magnitude in transistor density and over nine orders of magnitude in performance per dollar.
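The performance-per-dollar comparison can be checked with a few lines of arithmetic. This is only a rough sketch: the MIPS figures and the Cyber 70 cost are the ones quoted above, while the $10 price for the application processor is my own placeholder assumption standing in for “less than a fast food lunch”.

```python
import math

# Figures quoted in the text.
PHONE_MIPS = 5000.0        # ~5000 MIPS for a smartphone application processor
CYBER_MIPS = 1.0           # text says <1 MIPS; 1.0 is used as an upper bound
CYBER_COST_USD = 50e6      # ~$50M in 2012 dollars

# Assumption (not from the text): a placeholder chip price in 2012 dollars.
PHONE_CHIP_COST_USD = 10.0


def perf_per_dollar(mips: float, cost_usd: float) -> float:
    """Return performance per dollar in MIPS/$."""
    return mips / cost_usd


def orders_of_magnitude(ratio: float) -> float:
    """Return the base-10 logarithm of a ratio."""
    return math.log10(ratio)


phone = perf_per_dollar(PHONE_MIPS, PHONE_CHIP_COST_USD)
cyber = perf_per_dollar(CYBER_MIPS, CYBER_COST_USD)
improvement = phone / cyber

if __name__ == "__main__":
    print(f"improvement: {improvement:.2e} "
          f"(~{orders_of_magnitude(improvement):.1f} orders of magnitude)")
```

Under these assumptions the ratio lands a little above ten orders of magnitude, which is consistent with “over nine”. Because the Cyber 70 delivered less than 1 MIPS, the real gap would be somewhat larger.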

Is there an end to Moore’s Law? Yes, if charge-controlled devices in a PCB package are the measure. There are finite limits to the number of electrons that can be manipulated and evaluated. But no, if your measure is the diminishing cost of processing power and the aggregate processing power in the world. Potential vectors include:

- flexible, transparent circuits printed onto virtually everything

- architectures that dynamically merge memory and logic

- quantum computing

- molecular-level and atomic-level storage.

The DNA in your “thumb” contains as much data as all the thumb drives shipped last year.

People debate the end of Moore’s Law while leading IC fabs are in an apparent race to the end (e.g. GlobalFoundries’ recent announcement that it will skip the 20nm node). But the future is just getting started. The growth of human knowledge has no apparent limit, barring Kurzweil’s Singularity. The boundless ability of billions of human brains collaborating through ubiquitous computing is a profound network effect. The growth of knowledge and the rate of technology progress over the next forty years will far eclipse the last forty.
