TinyML is a somewhat whimsical term, but it labels a large and very serious segment of AI and machine learning: deploying machine learning on actual end-user devices (the extreme edge) at very low power. There’s even an industry group focused on the topic. I had the opportunity to preview a compelling webinar about TinyML that explained these topics very clearly and detailed some significant breakthroughs as well.

The webinar will be broadcast on April 21, 2020 at 10AM Pacific time. I strongly urge you to register for Artificial Intelligence in Micro-Watts: How to Make TinyML a Reality here.

The webinar is presented by Eta Compute. The company was founded in 2015 and focuses on ultra-low power microcontroller and SoC technology for IoT. The webinar presentation is given by Semir Haddad, senior director of product marketing at Eta Compute. Semir is a passionate and credible speaker on the topic of AI and machine learning, with 20 years of experience in the field of microprocessors and microcontrollers. Semir also holds four patents. In his own words, “all of my career I have been focused on bringing intelligence in embedded devices.”

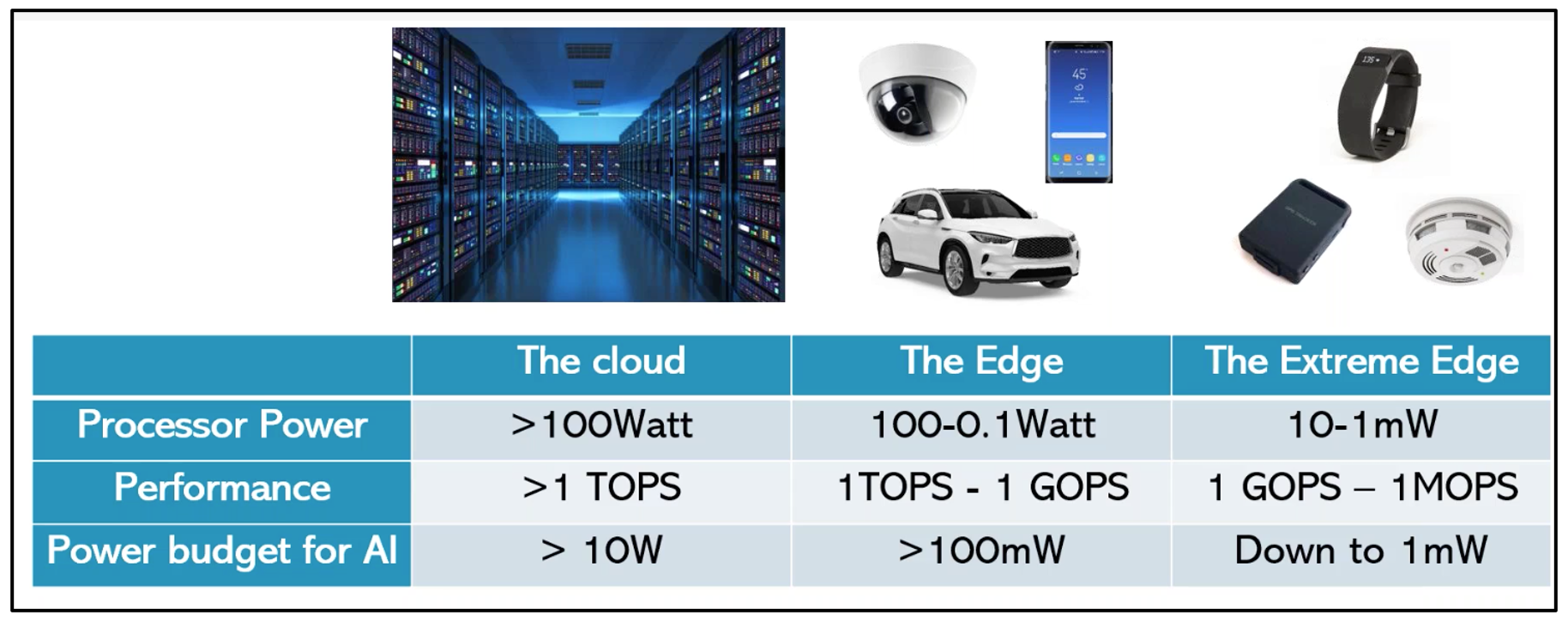

The webinar focuses on the deployment of deep learning algorithms at the extreme edge of IoT and presents an innovative new chip from Eta Compute for this market, the ECM3532. Given the latency, power, privacy and cost issues of moving data to the cloud, there is strong momentum toward bringing deep learning closer to the end application. I’m sure you’ve seen many discussions about AI at the edge. This webinar takes it a step further, to the extreme edge. Think of deep learning in products such as thermostats, washing machines, health monitors, hearing aids, asset tracking technology and industrial networks, to name a few. The figure below does a good job of portraying the spectrum of power and performance for the various processing nodes of IoT.


A power budget of ~1 mW is daunting, and this is where the innovation of Eta Compute and the ECM3532 shines. Semir does a great job explaining the challenges of deploying deep learning at ultra-low power and ultra-low cost. I encourage you to attend the webinar to get the full story. Here is a brief summary to whet your appetite.
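To put milliwatt-level power budgets in perspective, here is a rough battery-life calculation. It is a sketch under my own assumptions, not figures from the webinar: a CR2032-class coin cell of about 225 mAh at a nominal 3.0 V, a constant average power draw, and none of the real-world derating (load current, temperature, self-discharge) that shortens actual runtime.

```python
# Rough battery-life estimate for a device with a constant average power draw.
# Assumption: a CR2032-class coin cell of ~225 mAh at a nominal 3.0 V.
# Real cells deliver less under load and over temperature; this ignores that.

def battery_life_days(capacity_mah: float, voltage_v: float, avg_power_w: float) -> float:
    """Return the estimated runtime in days for a constant average power draw."""
    energy_j = capacity_mah / 1000.0 * voltage_v * 3600.0  # mAh -> Ah -> Wh -> J
    seconds = energy_j / avg_power_w
    return seconds / 86400.0

# Illustrative power levels spanning two orders of magnitude.
for label, power_w in [("100 mW", 100e-3), ("1 mW", 1e-3), ("100 uW", 100e-6)]:
    print(f"{label:>7}: {battery_life_days(225, 3.0, power_w):8.1f} days")
```

Under these assumptions the same cell lasts well under a day at 100 mW, about four weeks at 1 mW and roughly nine months at 100 µW, which is why every factor of ten in power matters at the extreme edge.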

Traditional MCUs and MPUs are synchronous designs, and closing timing on such a design across process, voltage and temperature conditions is quite challenging. Because dynamic power is proportional to the square of the operating voltage, lowering the voltage is an effective way to reduce power. But lower voltage slows the logic, so the operating frequency must also come down to keep timing closed. That makes achieving both low power and high performance an almost impossible balancing act. Dynamic voltage and frequency scaling (DVFS) is one way to address this problem, but because such schemes must still guarantee timing across the whole chip under worst-case conditions, it remains difficult for a synchronous design to reach the optimal balance of power and performance.
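The voltage-squared relationship comes from the standard first-order model of CMOS switching power, P = αCV²f, where α is the activity factor, C the switched capacitance, V the supply voltage and f the clock frequency. The Python sketch below uses placeholder values (not data for any real chip) and ignores leakage. It shows that halving V at a fixed f quarters dynamic power, and that the energy for a task of a fixed number of cycles depends on V but not on f.

```python
# First-order CMOS dynamic (switching) power model: P = alpha * C * V**2 * f
# Leakage power is ignored; all parameter values are illustrative placeholders.

def dynamic_power(alpha: float, c_farads: float, v_volts: float, f_hz: float) -> float:
    """Average switching power in watts."""
    return alpha * c_farads * v_volts ** 2 * f_hz

def task_energy(alpha: float, c_farads: float, v_volts: float, cycles: int) -> float:
    """Switching energy in joules for a task that takes a fixed number of cycles.
    Power * time = (alpha*C*V^2*f) * (cycles/f), so f cancels out."""
    return alpha * c_farads * v_volts ** 2 * cycles

ALPHA, C = 0.1, 1e-9   # hypothetical activity factor and switched capacitance (F)
CYCLES = 1_000_000     # hypothetical workload length in clock cycles

p_full = dynamic_power(ALPHA, C, 1.2, 100e6)
p_half_v = dynamic_power(ALPHA, C, 0.6, 100e6)
print(f"power ratio at half voltage: {p_half_v / p_full:.2f}")   # 0.25

e_full = task_energy(ALPHA, C, 1.2, CYCLES)
e_half_v = task_energy(ALPHA, C, 0.6, CYCLES)
print(f"energy ratio at half voltage: {e_half_v / e_full:.2f}")  # 0.25
```

In this model voltage, not clock rate, is the lever for energy: slowing the clock alone just spreads the same energy over a longer time, while lowering the voltage forces the clock down, which is exactly the tension a synchronous design has to manage.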

Eta Compute approaches the problem differently, with continuous voltage and frequency scaling (CVFS). The company invented this technology and holds seven patents on it covering both hardware and software, with more in the pipeline. The key innovation is a major redesign of the processor architecture that allows self-timed operation on a device-by-device basis. This eases timing closure and delivers higher performance at the same voltage than traditional approaches. Their approach also lets software control frequency and voltage. For example, if the user sets the frequency for a particular workload, the voltage adjusts automatically.
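To illustrate the idea of software choosing a frequency and the voltage following, here is a toy model. It is not Eta Compute's CVFS implementation; it only shows the concept. It assumes the widely used alpha-power-law delay model, in which the maximum clock rate scales roughly as (V − Vth)^a / V, and it uses made-up values for the threshold voltage, the exponent and the calibration point. Given a requested frequency, it bisects for the lowest supply voltage that still meets timing.

```python
# Toy model of "software sets the frequency, the voltage follows".
# Assumptions (hypothetical, for illustration only):
#  - Max clock frequency follows the alpha-power law: f_max(V) = K * (V - VTH)**A / V
#  - VTH, A and the calibration point below are made-up placeholder values.
# This is NOT Eta Compute's CVFS implementation.

VTH = 0.35               # threshold voltage in volts (hypothetical)
A = 1.3                  # alpha-power-law exponent, between 1 and 2 in that model
V_MIN, V_MAX = 0.40, 1.20

# Calibrate K so that f_max(V_MAX) is 100 MHz (hypothetical calibration point).
K = 100e6 * V_MAX / (V_MAX - VTH) ** A

def f_max(v: float) -> float:
    """Highest clock (Hz) the modelled logic can sustain at supply voltage v."""
    return K * (v - VTH) ** A / v

def min_voltage_for(f_target: float, tol: float = 1e-4) -> float:
    """Bisection search for the lowest voltage whose f_max meets f_target.
    Valid because f_max increases monotonically with v for A >= 1 and v > VTH."""
    if f_target > f_max(V_MAX):
        raise ValueError("requested frequency is above what V_MAX supports")
    lo, hi = V_MIN, V_MAX
    if f_max(lo) >= f_target:
        return lo                 # even the minimum voltage is fast enough
    while hi - lo > tol:          # invariant: f_max(lo) < target <= f_max(hi)
        mid = (lo + hi) / 2
        if f_max(mid) >= f_target:
            hi = mid              # fast enough: try a lower voltage
        else:
            lo = mid              # too slow: need more voltage
    return hi

for f in (10e6, 40e6, 80e6):
    v = min_voltage_for(f)
    # Energy per cycle scales with V**2, so report it relative to running at V_MAX.
    print(f"{f / 1e6:5.0f} MHz -> {v:.3f} V, relative energy/cycle {(v / V_MAX) ** 2:.2f}")
```

Because energy per cycle scales with V², running a light workload at a lower frequency and letting the voltage drop with it is where the savings come from in this model.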

The bottom line is a 10X improvement in energy efficiency, which is a game changer. Eta Compute also examined what TinyML needs from an architectural point of view. It turns out that DSPs are better at some parts of deep learning for IoT and CPUs are better at other parts. So, the ECM3532 uses a dual-core architecture with an Arm Cortex-M3 and a dual-MAC DSP on board, each of which can run at its own frequency. The webinar covers this and other topics in much more depth.
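The DSP side of that split is easiest to see in the arithmetic of a neural network layer, which is dominated by multiply-accumulate (MAC) operations. The sketch below is a simplified cycle-count model, assuming one MAC per cycle on a scalar path and two independent MACs per cycle on a dual-MAC DSP. Real cycle counts also depend on memory bandwidth, pipelining and data width, and how the ECM3532 actually maps a TinyML workload onto each core is for the webinar to explain.

```python
# Simplified cycle-count model: single-MAC vs dual-MAC dot product.
# Assumption: each loop step is one cycle; a dual-MAC unit performs two
# independent multiply-accumulates per step into two separate accumulators.

from typing import Sequence, Tuple

def dot_single_mac(x: Sequence[int], w: Sequence[int]) -> Tuple[int, int]:
    """Return (dot product, modelled cycles) with one MAC per cycle."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    acc = 0
    cycles = 0
    for xi, wi in zip(x, w):
        acc += xi * wi
        cycles += 1
    return acc, cycles

def dot_dual_mac(x: Sequence[int], w: Sequence[int]) -> Tuple[int, int]:
    """Return (dot product, modelled cycles) with two MACs per cycle."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    acc0 = acc1 = 0
    cycles = 0
    n = len(x)
    for i in range(0, n - 1, 2):
        acc0 += x[i] * w[i]          # MAC unit 0
        acc1 += x[i + 1] * w[i + 1]  # MAC unit 1, same modelled cycle
        cycles += 1
    if n % 2:                        # odd length: one leftover MAC
        acc0 += x[-1] * w[-1]
        cycles += 1
    return acc0 + acc1, cycles

# One output neuron of a small quantized dense layer (illustrative int8-style values).
inputs = [12, -7, 33, 5, -20, 8, 0, 17, -3]
weights = [3, 1, -2, 4, 0, -1, 6, 2, 5]
print(dot_single_mac(inputs, weights))  # (-6, 9)
print(dot_dual_mac(inputs, weights))    # (-6, 5)
```

The two accumulators are what let the MACs proceed independently. Code that is mostly branching and bookkeeping gains little from a second MAC unit, which is one plausible reading of why a general-purpose core like the Cortex-M3 sits alongside the DSP.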

I will leave you with some information on availability. An ASIC version of the architecture, the ECM3531, and its evaluation board are available now. Samples of the full ECM3532 AI platform and evaluation board will be available in April 2020, with full production in May 2020. Eta Compute is also working on a software environment, called the TENSAI platform, to help move your deep learning application from the bench to the ECM3532 with full access to all of its optimization technologies.

There is a lot more eye-popping power and performance information presented during the webinar. I highly recommend you register and catch this event here.
