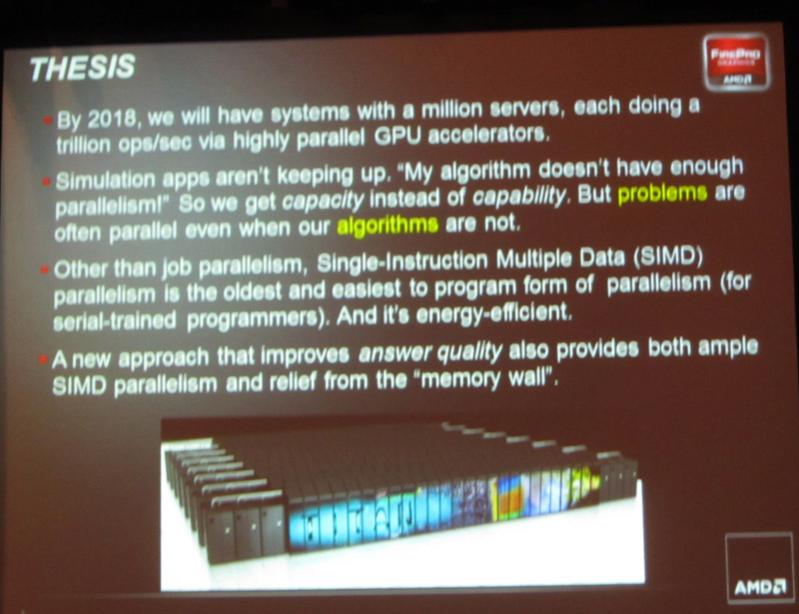

At the keynote for ICCAD this morning, John Gustafson of AMD (where he is Chief Graphics Product Architect as well as a Fellow) talked about parallel algorithms. Like Gene Amdahl, whose law states that parallel algorithms are limited by the part that cannot be parallelized (if 10% is serial, then even if the other part takes place in zero time, the maximum speedup is 10X), Gustafson has a law named after him. It basically says Amdahl is wrong, that there is no limit to the speedup you can get as long as you increase the size of the problem along with the number of cores. So his talk was a look at whether there are embarrassingly serial problems, problems that are not open to being parallelized.
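To make the contrast concrete, here is a minimal sketch of the two laws in their usual textbook forms. The function names are made up for illustration. Note one assumption: in Gustafson's version the serial fraction is measured on the parallel machine with the scaled-up problem, not on the original single-core run.

```python
def amdahl_speedup(serial_fraction, cores):
    """Fixed-size problem: the serial part never shrinks, so speedup saturates."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores)


def gustafson_speedup(serial_fraction, cores):
    """Scaled problem: the parallel work grows with the number of cores."""
    return serial_fraction + (1.0 - serial_fraction) * cores


# Compare the two laws for a 10% serial fraction.
for n in (10, 1000, 1_000_000):
    print(n, round(amdahl_speedup(0.1, n), 2), round(gustafson_speedup(0.1, n), 1))
```

With a 10% serial fraction the Amdahl figure creeps toward 10 but never reaches it, while the Gustafson figure keeps growing roughly in proportion to the core count.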

For example, at first glance, calculating the Fibonacci series looks like one. Each term depends on the previous two, so how can you bring a million servers to bear on the problem? But, as anyone who has done any advanced math knows, there is a closed-form formula (curiously involving the golden ratio), so it is straightforward to calculate as many terms as desired in parallel.
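The formula in question is presumably Binet's formula, which gives the n-th term as the nearest integer to φⁿ/√5, where φ is the golden ratio. A rough sketch follows, with hypothetical helper names. It uses ordinary double-precision floats, so it is only trustworthy for small n (the limit of 60 below is a deliberately conservative assumption). The thread pool is there only to show that each term is independent of the others, not to demonstrate a real speedup.

```python
import math
from concurrent.futures import ThreadPoolExecutor

SQRT5 = math.sqrt(5.0)
PHI = (1.0 + SQRT5) / 2.0  # the golden ratio
MAX_N = 60  # conservative assumption: float64 rounding eventually breaks the formula


def fib_closed_form(n):
    """Return the n-th Fibonacci number without looking at any earlier term."""
    if not 0 <= n <= MAX_N:
        raise ValueError("n is outside the range this float-based sketch supports")
    return round(PHI ** n / SQRT5)


def fib_terms(indices, workers=4):
    """Compute many terms at once; no term waits on another."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fib_closed_form, indices))


print(fib_terms(range(10)))
```

For larger n, exact integer methods (for example, matrix exponentiation or fast doubling) keep the same property that any single term can be computed on its own.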

By 2018 we should have million-server systems, each doing teraflops through highly parallel operations running on GPUs. The big challenge is the memory wall. For operations that involve a high ratio of work to decision making, this sort of SIMD (single instruction, multiple data) can significantly reduce wattage per teraflop.

Throwaway line of the day: with great power comes great responsibility…and some really big heatsinks!

An instruction issue consumes around 30 times more power than a basic multiply-add operation, and a memory access much more power than that. Memory transfers will soon account for half the power consumed, and processors are already power-constrained. Part of the problem is that hardware caches are very wasteful, designed to make programming easy rather than to keep power down. They minimize miss rates at the cost of low utilization (around 20%). Even more surprisingly, only 20% of the data written back out of the cache is ever accessed again, so the rest didn’t really need to be written back at all. John felt that, at least for low-level programmers, we need a programming environment that makes memory placement visible and explicit (as it apparently was on the Cray-2).

There are two ways to associate a SIMD GPU with a processor: on-chip or as a separate off-chip device. On-chip seems to work best for problems where data re-use is 10-100 (such as FFT and sparse-matrix operations), and an off-chip device works best for data re-use in the 1000s, such as dense-matrix and many-body dynamics.

We also need better arithmetic. Most programmers have never studied numerical analysis and so have no idea how many bits of precision their results have or how to calculate it. A specific problem is that accumulating results (by adding) needs much more precision than is used to calculate the numbers being added. Eventually you are adding small numbers to a number that is so large that it doesn’t change. John had a few examples where he was using 8-bit floating point (yes, really: 1 sign bit, 3 bits of exponent and 4 bits of mantissa) but still doing accurate analysis.
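The accumulation problem is easy to reproduce. The sketch below uses Python's ordinary 64-bit floats rather than the 8-bit format from the talk, and the values are chosen purely for illustration. It adds a thousand ones to a large total, first naively and then with the standard library's `math.fsum`, which tracks the lost low-order bits. `math.fsum` is just one way to get a wider accumulator, not necessarily the approach John described.

```python
import math

big = 1e16                    # large running total (illustrative value)
small_terms = [1.0] * 1000    # many small contributions

# At this magnitude adjacent doubles are 2 apart, so adding 1.0 is lost to rounding.
print(big + 1.0 == big)

# Naive accumulation: every small term vanishes individually.
naive = big
for x in small_terms:
    naive += x

# Accumulation with extra internal precision keeps the small terms.
accurate = math.fsum([big] + small_terms)

print(naive - big, accurate - big)
```

The naive total never moves off its starting value, while the more careful sum picks up the full contribution of the small terms.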

John’s final conclusion: if we really cherish every bit moved to and from main RAM then we can get better arithmetic answers (provable bounds) and as a side-effect help the memory wall dilemma and always have a use for massive parallelism.
