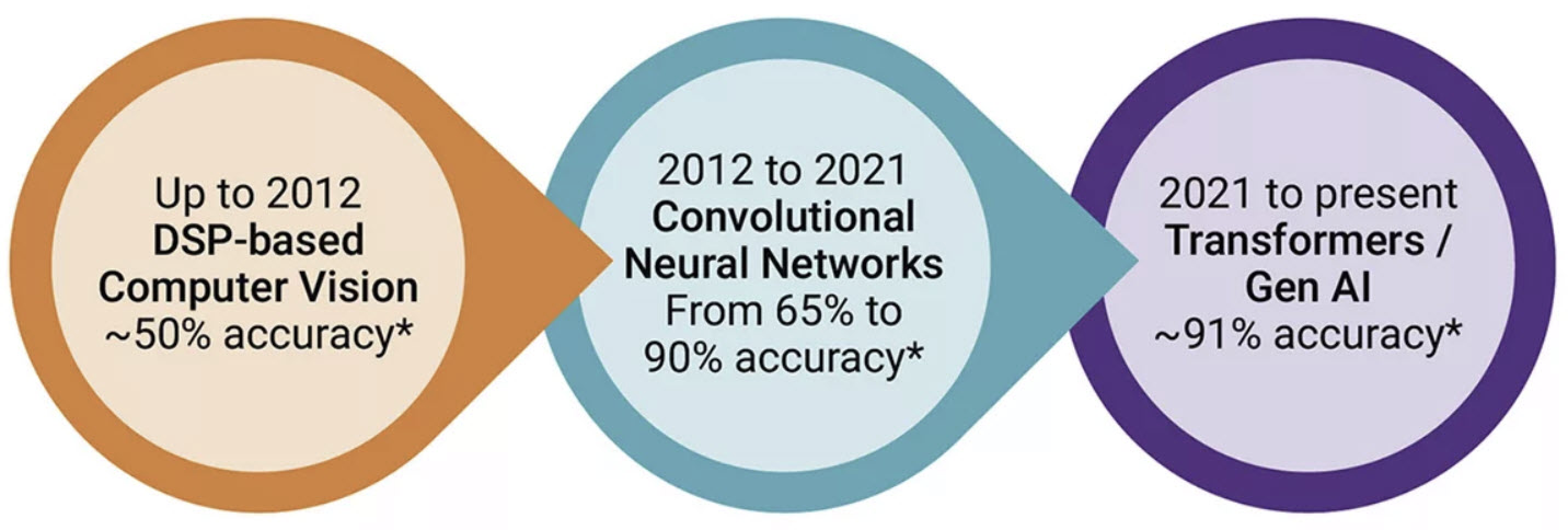

Artificial intelligence and machine learning have undergone incredible changes over the past decade or so. We’ve witnessed the rise of convolutional neural networks and recurrent neural networks and, more recently, the rise of generative AI and transformers. At every step, accuracy has improved, as depicted in the graphic above. These enhancements have also increased the power and “footprint” of AI as the technology moved from automated analysis to automated creation. All the while, the thirst for processing power and massive data manipulation has continued to grow exponentially.

The broader footprint of Gen AI has also created a shift in the host environment. While the cloud offers tremendous processing power, it also creates serious challenges with latency and data privacy. The edge has emerged as a promising place to address these challenges, but the edge can’t always offer the same resources as the cloud. And power can be a scarce resource. Synopsys is developing IP and software to tame these problems. Let’s examine the challenges of Gen AI and how Synopsys enables Gen AI on the edge.

Why Edge-Based Gen AI?

Transformers have enabled Gen AI, which leverages transformer models to generate new data, such as text, images, or even music, based on learned patterns. The ability of transformers to understand and generate complex data has made them the backbone of AI applications such as ChatGPT. These models demand incredible processing power and massive data manipulation. While the cloud offers all of these capabilities, it is not the ideal place to run these technologies.

One of the reasons for this is latency. Applications like autonomous driving, real-time translation, and voice assistants require near-instantaneous responses, which can be hindered by the latency associated with cloud-based processing. Privacy and security also come into play. Sending sensitive data to the cloud for processing introduces risks related to data breaches. By keeping data processing local to the device, privacy can be enhanced and the potential for security vulnerabilities can be reduced.

Limited connectivity is another factor. In remote or underserved areas with unreliable internet access, Gen AI enabled edge devices can operate independently of cloud connectivity, ensuring continuous functionality. This is crucial for applications like disaster response, where reliable communication infrastructure is likely compromised.

The Challenges Posed by Gen AI on the Edge

As they say, there is no free lunch. This certainly applies to Gen AI on the edge. It solves a lot of problems but poses many as well. Much of the difficulty comes from the computational complexity of Gen AI models. Transformers, the backbone of these models, drive that complexity through their attention mechanisms and extensive matrix multiplications. These operations require significant processing power and memory, which can strain the limited computational resources available on edge devices.
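To get a feel for why attention and matrix multiplications strain an edge device, here is a back-of-the-envelope sketch in Python. It is not taken from Synopsys material. The function name `attention_cost`, its parameters, and the example sizes (768-wide model, 12 heads, 2-byte values) are assumptions chosen for illustration. It counts one multiply-accumulate as 2 operations and ignores softmax and the feed-forward layers, so the numbers are a rough lower bound for a single self-attention layer.

```python
def attention_cost(seq_len, d_model, num_heads=1, bytes_per_value=2):
    """Rough cost of one self-attention layer for a single input sequence.

    Hypothetical helper for illustration only: counts a multiply-accumulate
    as 2 operations and ignores softmax, biases and the feed-forward block.
    """
    # Q, K, V and output projections: four (seq_len x d_model) by
    # (d_model x d_model) matrix multiplications.
    projection_ops = 4 * 2 * seq_len * d_model * d_model
    # Q @ K^T and scores @ V, summed over all heads. This term grows with
    # the square of the sequence length.
    score_ops = 2 * 2 * seq_len * seq_len * d_model
    # One (seq_len x seq_len) score matrix per head, if fully materialized.
    score_bytes = num_heads * seq_len * seq_len * bytes_per_value
    return {
        "projection_ops": projection_ops,
        "score_ops": score_ops,
        "score_bytes": score_bytes,
    }


# Example sizes below are assumptions, not figures from the article.
for n in (512, 1024, 2048):
    cost = attention_cost(n, d_model=768, num_heads=12)
    total_gops = (cost["projection_ops"] + cost["score_ops"]) / 1e9
    score_mib = cost["score_bytes"] / 2**20
    print(f"seq_len={n}: ~{total_gops:.1f} G ops, score matrices ~{score_mib:.0f} MiB")
```

Doubling the sequence length doubles the projection work but quadruples both the score computation and the score-matrix storage, which is where memory-constrained edge devices tend to feel the pressure first.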

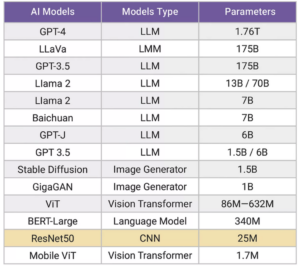

Also, edge devices often need to perform real-time processing, especially in applications like autonomous driving or real-time translation. The associated high computational demands can make it difficult to meet speed and responsiveness requirements. The accompanying figure illustrates the incredible rise in complexity presented by large language models.

Taming the Challenges

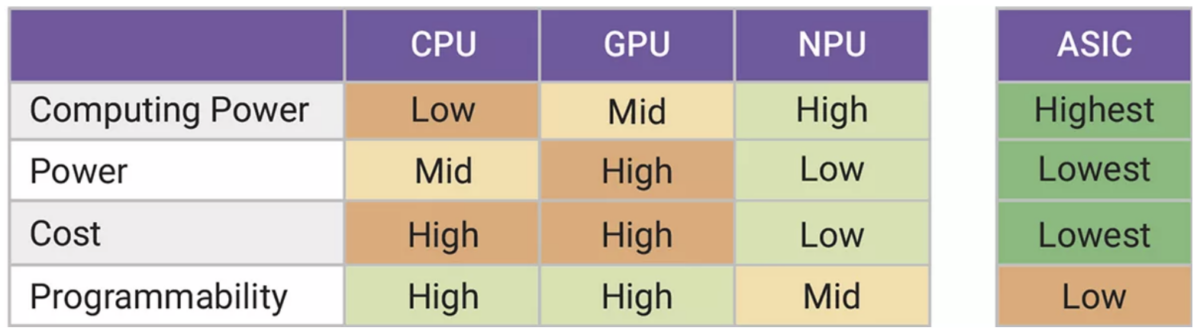

Choosing the right embedded processor for running Gen AI on edge devices helps to overcome these challenges. Computational power, energy efficiency, and flexibility to handle various AI workloads must all be considered. Let’s look at some of the options available.

- GPUs and CPUs deliver flexibility and programmability. This makes them suitable for a wide range of AI applications. They may not be the most power-efficient options for the edge, however. For example, GPUs can consume substantial power, presenting a challenge for battery-operated environments.

- ASICs deliver a hardwired, targeted solution that works well for specific tasks. However, this approach lacks flexibility. Evolving AI models and workloads cannot be easily accommodated.

- Neural Processing Units (NPUs) strike a balance between flexibility and efficiency. NPUs are designed specifically for AI workloads, offering optimized performance for tasks like matrix multiplications and tensor operations, which are essential for running Gen AI models. This approach provides a programmable, power-efficient solution for the edge.

Synopsys ARC NPX NPU IP is designed specifically for AI workloads, creating an effective way to enable Gen AI on the edge. For instance, running a Gen AI model like Stable Diffusion on an NPU can consume as little as 2 Watts, compared to 200 Watts on a GPU. NPUs also support advanced features like mixed-precision arithmetic and memory bandwidth optimization, which are essential for handling the computational demands of Gen AI models. The accompanying figure summarizes the relative benefits of NPUs compared to other approaches for Gen AI on the edge.
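Two quick calculations help put the power and precision points in perspective. The Python sketch below is illustrative only. The 2 W and 200 W figures come from the comparison above, while the 10 Wh battery, the 1-billion-parameter model, and the helper names `runtime_hours` and `weight_footprint_gb` are assumptions made for the example. It also assumes the device draws that power continuously and that weights are stored at a single uniform precision, which real mixed-precision deployments may not do.

```python
# Bytes needed to store one weight at each precision.
BYTES_PER_WEIGHT = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def runtime_hours(battery_wh, power_w):
    """Hours a battery lasts at a constant power draw (hypothetical helper)."""
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return battery_wh / power_w


def weight_footprint_gb(num_params, precision):
    """Memory needed for the model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_WEIGHT[precision] / 1e9


BATTERY_WH = 10.0           # assumed battery capacity, not from the article
NUM_PARAMS = 1_000_000_000  # assumed model size, not from the article

for label, watts in (("NPU", 2.0), ("GPU", 200.0)):
    minutes = runtime_hours(BATTERY_WH, watts) * 60
    print(f"{label} at {watts:.0f} W: about {minutes:.0f} minutes on a {BATTERY_WH:.0f} Wh battery")

for precision in BYTES_PER_WEIGHT:
    gb = weight_footprint_gb(NUM_PARAMS, precision)
    print(f"{precision}: {gb:.1f} GB of weights")
```

Under these assumptions, the same battery that lasts hours at 2 W is drained in minutes at 200 W, and dropping from 32-bit to 8-bit weights cuts the memory that must be stored and moved by a factor of four. That is why lower-precision arithmetic and memory bandwidth matter so much at the edge.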

Beyond the benefits of its ARC NPX NPU IP, Synopsys also provides high-productivity software tools to accelerate application development. The ARC MetaWare MX Development Toolkit includes compilers and a debugger, a neural network software development kit (SDK), virtual platform SDKs, runtimes and libraries, and advanced simulation models.

Designers can automatically partition algorithms across MAC resources for highly efficient processing. For safety-critical automotive applications, the MetaWare MX Development Toolkit for Safety includes a safety manual and a safety guide to help developers meet the ISO 26262 requirements and prepare for ISO 26262 compliance testing.

To Learn More

If Gen AI is finding its way into your product plans, you will soon face the challenges associated with edge-based implementation. The good news is that Synopsys offers world-class IP and development tools that reduce the challenges you will face. There are many good resources that will help you explore these solutions.

- An informative article entitled The Rise of Generative AI on the Edge is available here.

- A short but very informative video from the Embedded Vision Summit is also available. Gordon Cooper, product marketing manager for ARC AI processor IP at Synopsys, provides many useful details about the IP and some unique applications. You can view the video here.

- If you want to explore computer vision and image processing, there is an excellent blog on these topics here.

- And you can visit the Synopsys website to learn about the complete Synopsys ARC NPX NPU IP here.

And that’s how Synopsys enables Gen AI on the edge.
