In the blur of activities at DAC last year I visited the Mentor booth a few times and had just a few minutes to glance at a 3D TV display that didn’t require me to wear any funny glasses. That seemed novel at the time, because I’ve read that the 3D TV market is being hampered by the need for viewers to wear glasses. The design team responsible for implementing an SoC for glasses-free 3D TV is called StreamTV Network, and they just described their journey in a white paper. So, what kind of 3D are we talking about here?

Well, these graphical gurus at StreamTV have figured out how to take existing 2D video content and add their Ultra-D technology layer on top of it, all in real time using their secret sauce (i.e., proprietary real-time algorithms). The team decided to go ahead and create a proof-of-concept SoC, even before they knew critical requirements like:

- Silicon process node

- Interfaces

- Protocols

- Memory

- Bandwidth

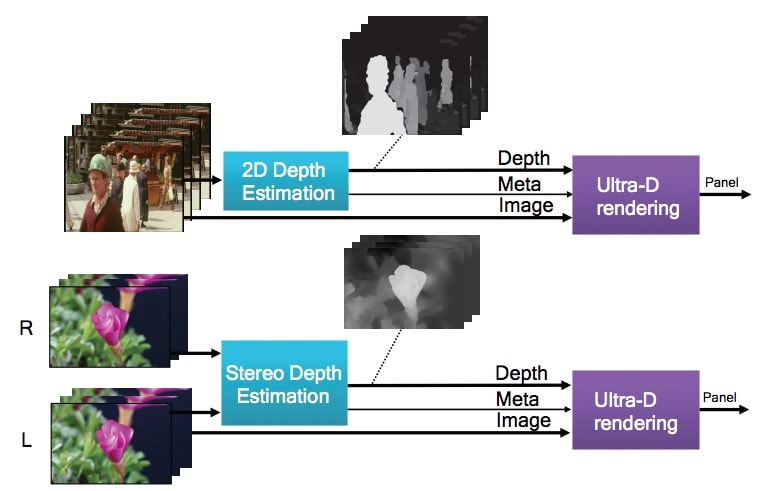

The engineers decided to take a High Level Synthesis (HLS) approach to create their proof of concept, and they used the Catapult HLS tool in the process. Inside of their SoC is a Real-Time Conversion (RTC) IP block that has a 2D depth estimation function that creates a depth map. Then the image and depth map get sent to the Ultra-D block to create a stereo depth map.

The diagram above shows two black-and-white images. These are the depth maps, where a whiter pixel means that part of the image is closer to the viewer and a blacker pixel means it is farther away.

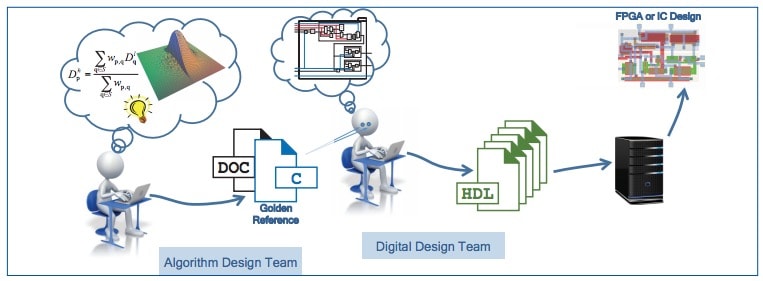

A conventional SoC design flow would have an algorithm design team coding in C++, then a digital design team manually re-coding the design in RTL, an error-prone and time-consuming translation from C++ into RTL code:

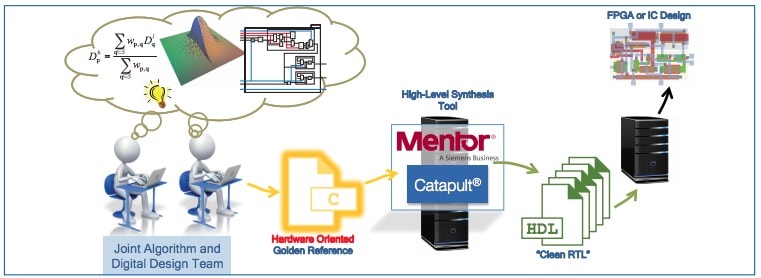

The StreamTV team heard about the HLS approach from an NVIDIA engineer and started talking with Mentor about the possibility of using Catapult, starting out with a portion of their C++ code as a sample. AEs from Mentor spent some time onsite to help push the C++ code through the Catapult tool, and the StreamTV engineers could quickly simulate the RTL code generated by Catapult to verify that it was working correctly. The new flow was more automated than the conventional SoC design flow.

Some of the benefits in using an HLS flow include:

- C++ is the golden source for the algorithm and digital design engineers

- Only one language to be concerned with

- HLS directives control how the hardware gets synthesized
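To make the idea of directives concrete, here is a minimal sketch of how a directive can sit next to ordinary C++. The filter, the names `smooth_row`, `Row` and `kWidth`, and the choice of pragmas are all hypothetical illustrations, not code from the white paper. The pragmas follow the Catapult-style `hls_` naming, but check the tool documentation for exact spelling and placement; a standard C++ compiler ignores them (possibly with an unknown-pragma warning), which is what lets the same source run as software.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical row width, kept tiny for illustration only.
constexpr std::size_t kWidth = 8;
using Row = std::array<std::uint8_t, kWidth>;

// Smooth one row of pixels with a [1 2 1] / 4 kernel, clamping at the edges.
// The pragmas are synthesis hints (assumed Catapult-style spelling); they do
// not change what the function computes when compiled as plain C++.
#pragma hls_design top
void smooth_row(const Row& in, Row& out) {
    // Hint: start a new loop iteration every clock cycle.
    #pragma hls_pipeline_init_interval 1
    for (std::size_t x = 0; x < kWidth; ++x) {
        const std::size_t left  = (x == 0) ? 0 : x - 1;
        const std::size_t right = (x == kWidth - 1) ? x : x + 1;
        const unsigned sum = in[left] + 2u * in[x] + in[right];
        out[x] = static_cast<std::uint8_t>(sum / 4u);
    }
}
```

The point of the sketch is that the algorithm stays readable C++, while decisions such as pipelining are expressed as annotations that can be changed without rewriting the function.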

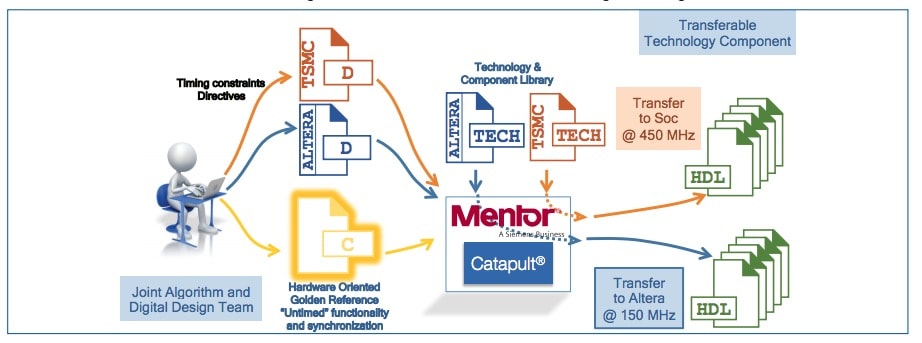

On an FPGA prototype using Altera parts the RTL code runs at 150 MHz, while on a TSMC process node the RTL code runs much faster, at 450 MHz.

During the design process there was block-wise verification: the algorithm engineers wrote C++ functions that were then re-used as a testbench for the generated RTL code, so no manual steps were involved in verification. With C++ as the golden source, all team members share a single language across software, SW test, verification testbench and hardware. Having a unified source helped get the product out quicker than older design flows with multiple abstraction layers and manual steps.
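The testbench re-use described above can be sketched roughly as follows. Everything here is an assumption made for illustration: the block under test (a depth-map inversion), the names `invert_reference`, `invert_dut` and `count_mismatches`, and the random stimulus. In a real flow the `invert_dut` stand-in would be replaced by a wrapper around the simulated RTL; here it is just a second C++ implementation so the example stays self-contained.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <random>
#include <vector>

using Pixel = std::uint8_t;
using Frame = std::vector<Pixel>;
using Block = std::function<Frame(const Frame&)>;

// Hypothetical golden model written by the algorithm engineers:
// invert a grayscale depth map.
Frame invert_reference(const Frame& in) {
    Frame out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i) {
        out[i] = static_cast<Pixel>(255 - in[i]);
    }
    return out;
}

// Stand-in for the design under test. In a real flow this would call into
// the simulated RTL; here it is an independent C++ implementation.
Frame invert_dut(const Frame& in) {
    Frame out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i) {
        out[i] = static_cast<Pixel>(in[i] ^ 0xFF);
    }
    return out;
}

// Drive both blocks with the same pseudo-random frames and count how many
// output pixels differ. A fixed seed keeps the run reproducible.
std::size_t count_mismatches(const Block& golden, const Block& dut,
                             std::size_t frames, std::size_t pixels_per_frame) {
    std::mt19937 rng(12345);
    std::uniform_int_distribution<int> gray(0, 255);
    std::size_t mismatches = 0;
    for (std::size_t f = 0; f < frames; ++f) {
        Frame stimulus(pixels_per_frame);
        for (Pixel& p : stimulus) p = static_cast<Pixel>(gray(rng));
        const Frame expected = golden(stimulus);
        const Frame actual = dut(stimulus);
        if (actual.size() != expected.size()) {
            mismatches += expected.size();  // treat a size mismatch as total failure
            continue;
        }
        for (std::size_t i = 0; i < expected.size(); ++i) {
            if (expected[i] != actual[i]) ++mismatches;
        }
    }
    return mismatches;
}
```

Calling `count_mismatches(invert_reference, invert_dut, 4, 16)` returns zero when the two implementations agree. Because the golden model is an ordinary function, the same stimulus and comparison code can serve the software, test and hardware sides of the team.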

Did the StreamTV team learn their new HLS flow by simply reading the fine manuals? Not really. They instead relied on local AE support to get up to speed with Catapult, learning best practices and avoiding pitfalls along the way. These first-time HLS users reported that they reduced SoC development time by at least 50% compared to previous designs, and that a big plus was being able to make last-minute changes because the time to generate RTL code was so short.

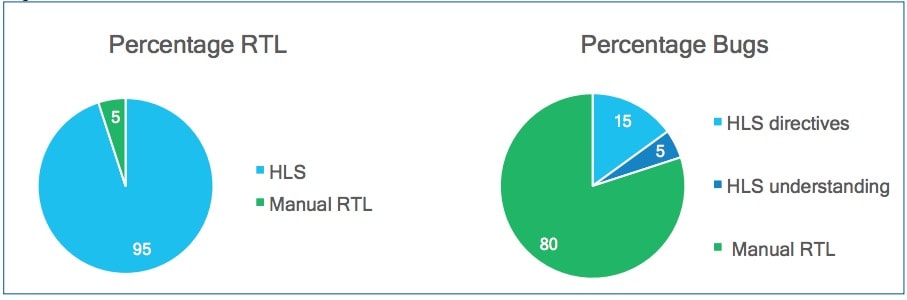

The team even kept track of how much RTL they had to write manually versus how much was HLS-generated, and how that impacted their debugging time.

What surprised me was that although only 5% of their design used manual RTL coding, debugging that code took a disproportionate 80% of all debug time. Learning to place HLS directives accounted for 15% of the total debug time, 3X as much as learning how to code for HLS at 5% of the debug time.
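To put those percentages in perspective, here is a back-of-the-envelope calculation. It assumes that the remaining 95% of the design was HLS-generated and that the two HLS learning categories (15% and 5%) together make up all HLS-related debug time; the names `Bucket`, `debug_density` and `density_ratio` are made up for this sketch.

```cpp
#include <cmath>

// Share of the design and share of total debug time for one category.
struct Bucket {
    double design_share;
    double debug_share;
};

// Debug effort per unit of design: values above 1.0 mean the category
// consumed more than its proportional share of debug time.
constexpr double debug_density(Bucket b) {
    return b.debug_share / b.design_share;
}

// Figures from the article; the 95% HLS share is an assumption (100% - 5%).
constexpr Bucket kManualRtl{0.05, 0.80};
constexpr Bucket kHls{0.95, 0.15 + 0.05};

// How many times more debug effort a unit of manual RTL needed
// compared with a unit of HLS-generated design.
constexpr double density_ratio() {
    return debug_density(kManualRtl) / debug_density(kHls);
}
```

Under those assumptions manual RTL has a debug density of 16 (80% / 5%), the HLS portion about 0.21 (20% / 95%), and the ratio between the two works out to 76. This is only a rough proportion, since it treats every percent of the design as equally complex.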

Now that the StreamTV engineers have learned the new HLS flow and completed their first project with it, they are not returning to their former flow. The Catapult technology proved itself quite capable for these video challenges in 3D TV.

To read the complete 10 page White Paper click here.

