In this article we take an objective view of Virtual Prototyping through the engineering lens of the “quest to find bugs”. Here we discuss the avoidance of bugs in terms of architecting complex ASICs to be “correct by design”.

AI Challenges

It is not surprising to find out that other areas of human endeavour, beyond semiconductor design, struggle with complexity, cost and tricky problem solving…

“One of the main problems in the realization and testing of system is the time and costs associated to the iterative process that starts from the conceptual design, the preliminary (approximated) simulations, the realization of a prototype and eventually the modification of the initial design if some of the performance is not satisfactory”.[1]

This excerpt doesn’t come from a paper about ASIC design challenges. It actually refers to the design of end-effectors (think robotics) on space exploration vehicles and modules! Design for space, or for other planets, brings nasty challenges like lack of gravity, no atmosphere, radiation and zero-fail requirements. The list is long, but the use of Virtual Prototypes is almost a given.

A fabulous example of a device designed for out-of-this-World conditions is NASA’s rover, Perseverance. Due to the long delays communicating with the rover, all of the on-board systems have to run autonomously starting with the landing control, through control of scientific experiments to navigation control in a hostile environment!

Perseverance is the ultimate autonomous driving system and it’s in use on Mars before we have large numbers of cars using it here on Earth.

Running vehicles autonomously is the next big challenge for the automotive industry: avoiding obstacles, judging conditions and taking critical decisions are what prevent serious accidents.

The common thread through both these autonomous systems is control by AI systems[2]. The Perseverance autonomous navigation systems work at speeds far slower than if humans were on board to drive them on the Martian surface, and the challenge is to accelerate the AI systems to do more. Back on Earth, Autonomous Driving Systems (level 3 and above) face a similar data “capture-process-decide” challenge. In both cases, much more data is required to perform correctly, including the processing of huge numbers of images and other sensory information.[3]

The challenge for the semiconductor world is to design the AI ASICs that are able to handle the very demanding data processing, performance and algorithmic targets that autonomous tasks require. And the stakes are very high.

A failed chip on Mars means a failed mission, or science experiment, so making sure that AI ASICs are bug free carries a huge premium. On Earth, failures cost lives…

As we have seen with Perseverance, or an autonomous vehicle travelling down Highway 101, a central challenge is how to deal with very large quantities of data coming from an array of sensors and other inputs. The same is also true in the military domain (warfare), with important decisions being driven by information about surveillance, reconnaissance and personnel. Utilising this data relies on computational algorithms and underlying compute running at a huge scale.

ASICs are one route to managing the computational and data demands, but they tend to cost vast sums of money, are slow to develop and limited to the one task they are designed to be good at. DARPA and other organisations are turning to Software Defined Hardware, which can be defined as domain-specific programmable and configurable SoCs, optimized for a selected set of applications. In other words, “software-defined hardware” applies the principle of “form follows function” to chip design, such that the desired functionality determines a SoC’s architecture.

Correct-by-design analysis

Because of the complexity of modern ASIC designs and the challenges of verification, not all bugs can be found, as explained in “The Dilemmas of Hardware Verification”. We need to understand how to avoid bad architecture decisions through better architecture design practices.

We need a correct-by-design approach.

Traditionally, architectural analysis has been carried out using spreadsheets, a predominantly static approach that is highly limited. However, perhaps we should be deploying more dynamic architecture design analysis approaches to enable a far more complete, measurable and robust analysis of design choices?

So, what do we mean by dynamic? Dynamic effects arise from workloads changing over time, or from multiple applications sharing the same resources. Examples include dynamic scheduling and pre-emption; many-core systems with multiple initiators, which give rise to arbitration on the shared interconnect; and variable memory access latency due to caches and DDR memories, where latency depends on the address pattern. Hence, dynamic effects make it difficult to predict performance with static analysis.
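To make the arbitration point concrete, here is a minimal sketch in Python. It is not a virtual prototype, only a toy cycle-counting model of round-robin arbitration on one shared bus. The initiator names, request times and the 4-cycle service time are all hypothetical. Both traffic patterns load the bus to the same 40% average utilization, which is all a static spreadsheet estimate would see.

```python
from collections import deque

def simulate_shared_bus(arrivals, service_cycles):
    """Toy round-robin arbitration on one shared bus.

    arrivals maps an initiator name to the sorted cycles at which it issues
    requests. Returns per-initiator latencies (completion cycle - issue cycle).
    """
    queues = {name: deque(times) for name, times in arrivals.items()}
    order = list(queues)
    latencies = {name: [] for name in order}
    cycle, next_rr = 0, 0
    while any(queues.values()):
        granted = None
        # Scan initiators in round-robin order for a request that is pending now.
        for i in range(len(order)):
            name = order[(next_rr + i) % len(order)]
            if queues[name] and queues[name][0] <= cycle:
                granted = name
                next_rr = (next_rr + i + 1) % len(order)
                break
        if granted is None:
            # Bus is idle: jump ahead to the next request that will arrive.
            cycle = min(q[0] for q in queues.values() if q)
            continue
        issued = queues[granted].popleft()
        cycle += service_cycles
        latencies[granted].append(cycle - issued)
    return latencies

SERVICE_CYCLES = 4   # hypothetical bus occupancy per request
WINDOW_CYCLES = 80   # observation window used for the static estimate

# Same number of requests in both cases, so the same average load.
smooth = {"cpu": [0, 20, 40, 60], "dma": [10, 30, 50, 70]}
bursty = {"cpu": [0, 1, 2, 3], "dma": [0, 1, 2, 3]}

static_utilization = 8 * SERVICE_CYCLES / WINDOW_CYCLES
smooth_latencies = simulate_shared_bus(smooth, SERVICE_CYCLES)
bursty_latencies = simulate_shared_bus(bursty, SERVICE_CYCLES)

smooth_worst = max(max(v) for v in smooth_latencies.values())
bursty_worst = max(max(v) for v in bursty_latencies.values())
print(f"static utilization: {static_utilization:.0%}")
print(f"worst-case latency, smooth traffic: {smooth_worst} cycles")
print(f"worst-case latency, bursty traffic: {bursty_worst} cycles")
```

With evenly spaced traffic every request sees only the bare service time, while the bursty pattern queues requests behind each other even though the average load is identical. That gap is the kind of behaviour a static average cannot expose.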

In the same way, dynamic residency of workloads on resources leads to dynamic power consumption, making it difficult to estimate average and realistic peak power. Dynamic power management (e.g. DVFS) creates a closed control loop: application workload drives resource utilization, which is in turn influenced by the power management. This makes prediction of power and performance even more difficult, as the elements of the loop become interdependent.
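The closed loop can be illustrated with a small Python sketch. This is a toy governor, not any real DVFS policy: the operating points, thresholds and workload figures are hypothetical, and relative dynamic power is approximated with the usual f·V² proportionality.

```python
# Hypothetical operating points: (frequency in MHz, voltage in V).
OPERATING_POINTS = [(200, 0.7), (400, 0.8), (800, 1.0)]

def run_dvfs(workload, interval_ms=1, up=0.9, down=0.4):
    """Toy utilization-driven DVFS governor.

    workload lists the kilocycles of work arriving in each interval.
    Returns a trace of (freq_mhz, utilization, backlog) and relative energy.
    """
    level = 0
    backlog = 0.0
    energy = 0.0
    trace = []
    for demand in workload:
        freq, volt = OPERATING_POINTS[level]
        capacity = freq * interval_ms      # kilocycles available this interval
        backlog += demand
        done = min(backlog, capacity)
        backlog -= done
        utilization = done / capacity
        # Relative dynamic energy: proportional to activity * f * V^2 * time.
        energy += utilization * freq * volt ** 2 * interval_ms
        trace.append((freq, utilization, backlog))
        # The governor reacts to the utilization it just observed, which
        # changes the capacity (and so the utilization) of the next interval.
        if utilization > up and level < len(OPERATING_POINTS) - 1:
            level += 1
        elif utilization < down and level > 0:
            level -= 1
    return trace, energy

workload = [100, 100, 600, 600, 100, 100]   # kilocycles per 1 ms interval
trace, energy = run_dvfs(workload)
frequencies = [freq for freq, _, _ in trace]
peak_backlog = max(backlog for _, _, backlog in trace)
average_demand = sum(workload) / len(workload)

print("frequency trace (MHz):", frequencies)
print("peak backlog (kilocycles):", peak_backlog)
print(f"average demand: {average_demand:.0f} kilocycles/ms")
```

The average demand is under 270 kilocycles per millisecond, so a static estimate would suggest the 400 MHz point is sufficient. In the simulated loop the governor lags the burst, a backlog builds up, and the design ends up at the highest operating point for two intervals.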

Moving from Mars rovers to Earth-bound ASICs, does architecture design analysis using Virtual Prototyping help to avoid hard-to-find hardware and software bugs that emerge late in the development lifecycle? These bugs are costly to find and fix, or really disastrous if they emerge once the product is deployed and in the field!

Virtual Prototyping mitigates downstream problems by solving architectural design bugs early, before any RTL is written.

So why are these techniques less common in the design of ASICs and what are the compelling reasons for considering them, if they are not in use today? AI design is one of the areas where this is changing. The design task is to make “correct” early architectural decisions that avoid failures to meet product requirements (let’s call them architecture design bugs) in silicon.

So, what are Architecture Design Bugs?

Architecture design bugs are one of the most impactful categories of bugs and can lead to end products that miss performance, power or security goals. If bad architecture design choices do not become apparent until significant RTL design has been implemented, the cost of radical architectural change, and its impact on the schedule, can be perceived as too great. Instead, these architecture issues are worked around. Complexity ensues and technical debt accumulates.

You can end up with a fragile and compromised design where the ratio of bugs to lines-of-code is high, and you may never be satisfied that the design is fully robust.

The design goals may be difficult to meet as the RTL is iterated to achieve power and performance targets which could have been correctly addressed at the architectural stage. These late design specification changes are a common root cause of difficult bugs (see “The Origin of Bugs”).

The objective of Virtual Prototyping is to underpin the development of a clean and stable high-level architecture that has been validated to meet functional, performance and power requirements. Architectural design bugs are avoided early on. This stable high-level design saves time and reduces risk in the later RTL development stages. Implemented RTL can be benchmarked against this model.

Architecture design warrants validation and verification in the same way as the RTL HW and the SW do, in the effort to eliminate architecture design bugs.

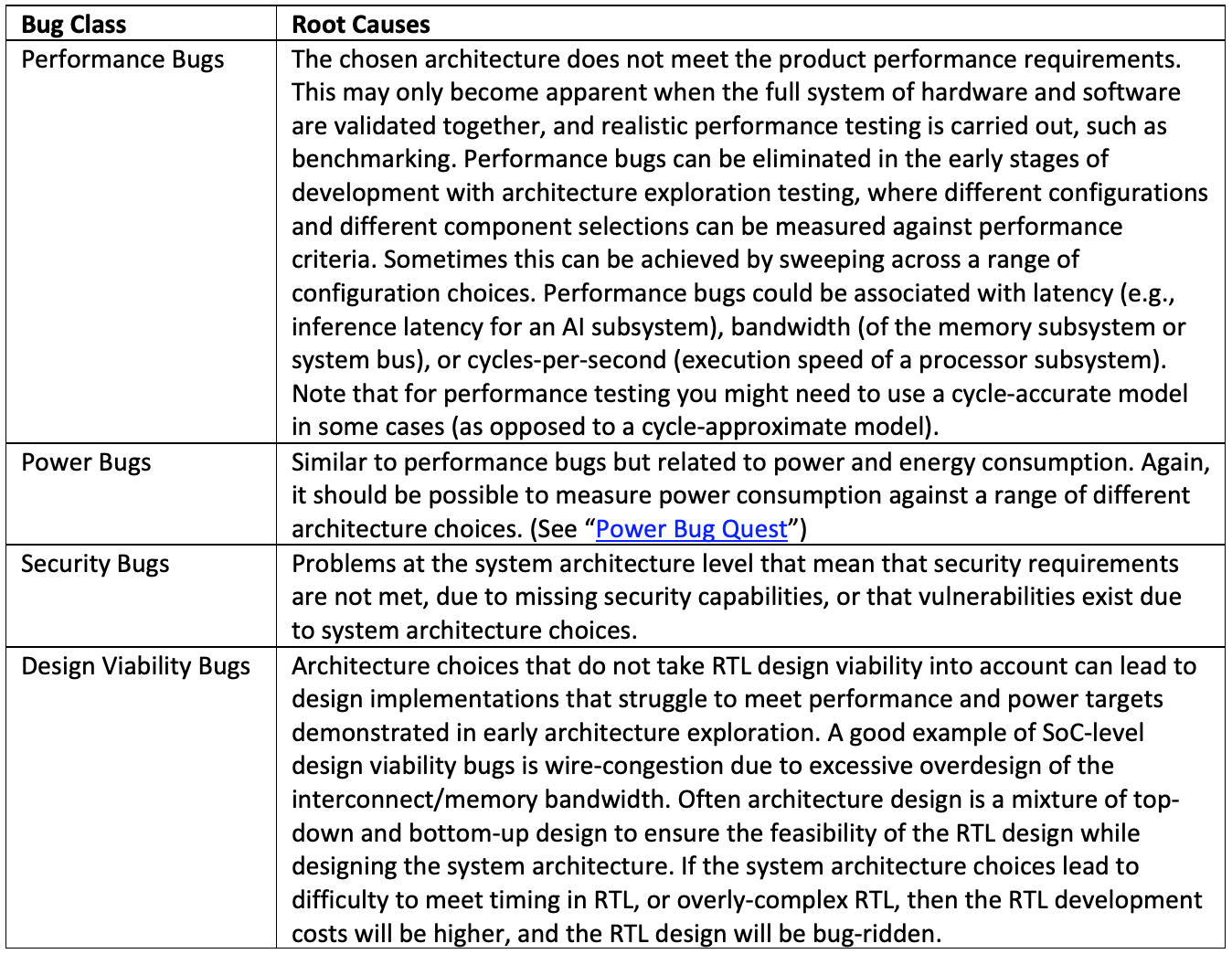

Let’s consider some different classes of architecture design bugs.

Where are HW bugs created, and where are they found?

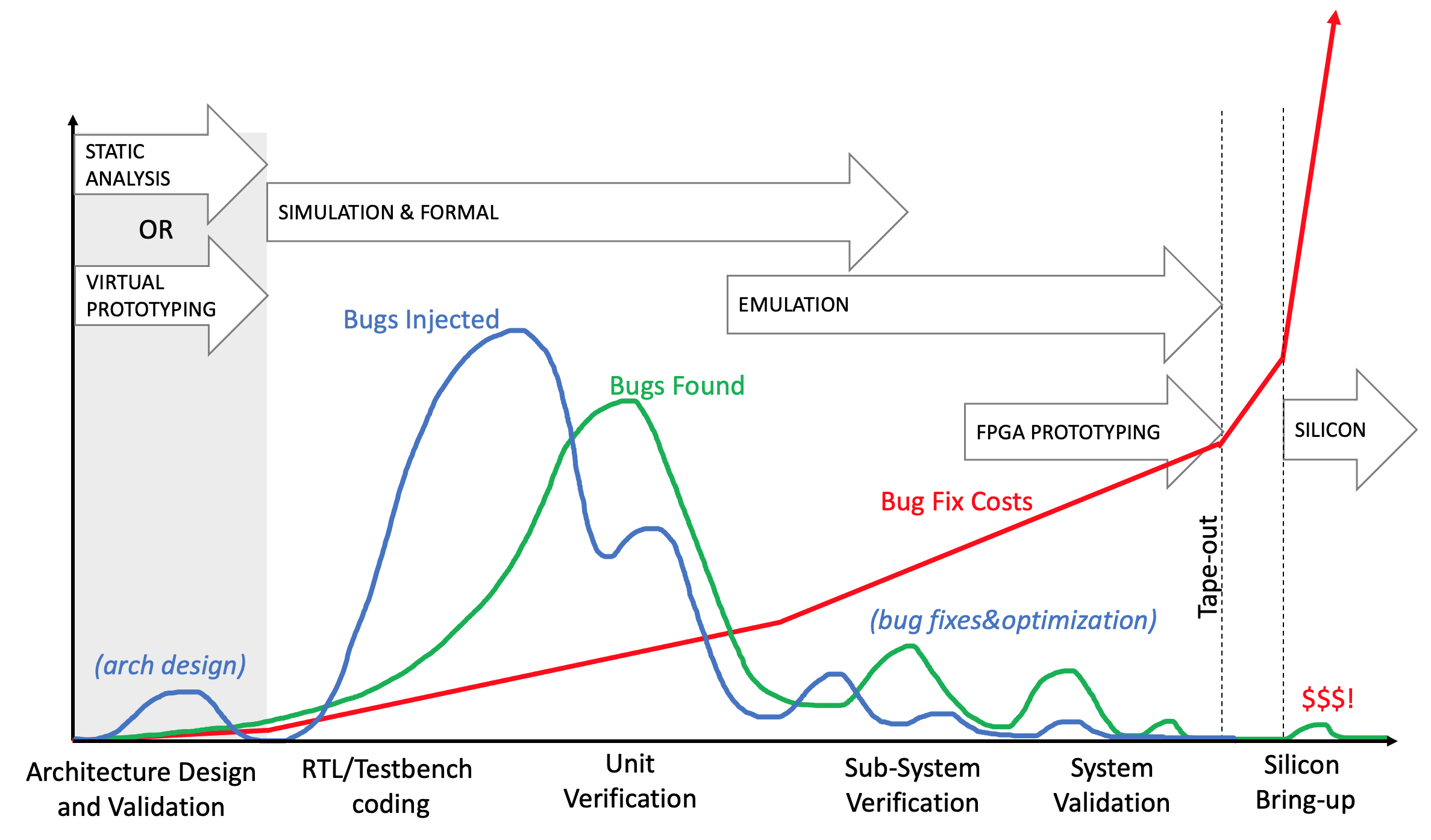

The following figure shows what a typical hardware design flow looks like in terms of where bugs are created and where they are found. Bugs are generally injected in the initial RTL coding phase, and further bugs are injected downstream as the RTL is refined for bug fixing and performance optimizations. This is often seen as a series of diminishing ripples in the RTL codebase and the bug tracking as the verification transitions through the verification abstraction levels. Some architecture design bugs may be injected in the architecture design phase and may not be found with traditional static, spreadsheet-based analysis. Non-functional bugs/flaws in the architecture are typically only discovered in the sub-system/system validation stages. Fixing them at this late stage can then cause rework and risk of further bug injection at the unit stages.

These architecture design bugs can be found and eliminated during architecture development by using a Virtual Prototyping based architecture design and validation strategy.

Eliminating bad architecture decisions at this early stage reduces the code churn and bug injection caused by performance and power optimization at the sub-system/system stages, and with it the risk of bugs at the silicon stage. The cost of fixing a bug is lowest at this early stage of the development lifecycle; both the cost to fix and the cost of the impact escalate exponentially as you move through to hardware acceleration and eventually to prototype silicon. See “The Cost of Bugs”.

Source – Acuerdo and Valytic Consulting

Identify bad decisions…

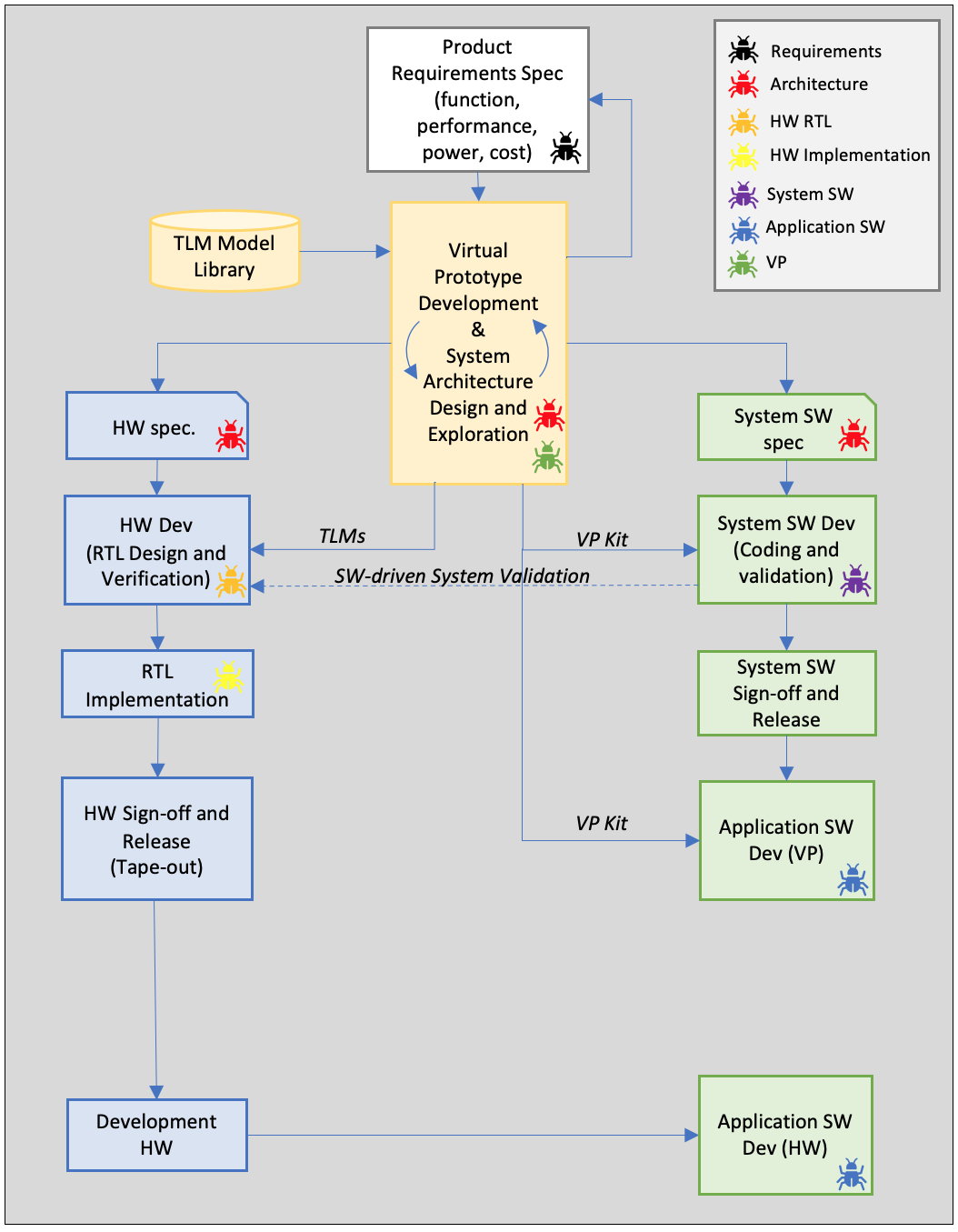

The following flow-chart visualizes a Virtual Prototyping enabled ASIC development flow, where a set of product requirements leads to an overall system architecture design which can be explored using Virtual Prototyping. This in turn drives the development of the hardware and software specifications. At the architecture level, decisions are made about the hardware/software split: what functionality needs to be delivered in hardware, and what functionality should be delivered in system software, to achieve the functional, performance and power goals of the product. Different categories of bugs (requirements, architecture/specification, RTL, implementation, software, and even bugs in the VP models) can originate at multiple points throughout this flow.

Bad decisions made at the system architecture design phase can lead to downstream problems in the hardware and software that may be difficult to fix without major disruption to hardware RTL design, or software that has already been written.

Virtual Prototype enabled ASIC development

Source – Acuerdo and Valytic Consulting

In the workflow above, a Virtual Prototype is developed in the early stages in a loop that iterates around the system architecture exploration. The VP for architecture analysis is developed from a combination of TLM library components with appropriate cycle timing (e.g. processors, bus interconnects, memory systems) plus any custom design components that are developed for the target product (e.g. an AI accelerator). System architecture exploration can also drive iteration of product requirements.
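As a rough illustration of what a timing-annotated component model looks like, the sketch below models a memory whose latency depends on the address pattern. It is plain Python rather than a real TLM library component, and the open-row policy, row size, bank count and cycle counts are hypothetical values chosen only to show the effect.

```python
from dataclasses import dataclass, field

@dataclass
class DramModel:
    """Toy memory model with an open-row policy (all parameters hypothetical)."""
    row_bytes: int = 2048
    banks: int = 4
    hit_cycles: int = 10     # access to the row already open in a bank
    miss_cycles: int = 30    # access that must open a different row first
    open_rows: dict = field(default_factory=dict)

    def access(self, address):
        """Return the latency in cycles of one access and update bank state."""
        row = address // self.row_bytes
        bank = row % self.banks
        if self.open_rows.get(bank) == row:
            return self.hit_cycles
        self.open_rows[bank] = row
        return self.miss_cycles

def total_latency(addresses):
    """Run an address stream through a fresh model and sum the latencies."""
    model = DramModel()
    return sum(model.access(address) for address in addresses)

# Two workloads with the same number of accesses but different patterns.
sequential = [i * 64 for i in range(64)]     # walks through two rows
strided = [i * 8192 for i in range(64)]      # new row in the same bank each time

sequential_cycles = total_latency(sequential)
strided_cycles = total_latency(strided)
print("sequential:", sequential_cycles, "cycles")
print("strided:   ", strided_cycles, "cycles")
```

Both streams issue 64 accesses, so a per-access average latency in a spreadsheet would treat them identically. The model separates them because it carries state between transactions, which is the property that makes such components useful for architecture exploration.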

Virtual Prototyping can also deliver VP Kits which can be deployed easily to shift-left both system software and application software development. Further, elements of the Virtual Prototype or the TLM library (e.g. fast processor models) can be exploited for hardware verification acceleration and other use cases. Software-driven system validation consumes the system software that has already been validated using the VP Kit. We will discuss these use cases further in a forthcoming article.

Consequences

Often, development teams will seek to find workarounds just to avoid major re-writes, and this can compromise the quality of both hardware and software. Technical debt accumulates as patches are applied to patches, complexity increases, and design integrity can decrease. This leads to hard-to-find corner-case bugs that you may (or may not) find pre-silicon and pre-production.

If you wait until beta hardware and software availability (fully functional) to validate the system architecture, then you’ve left it too late. You might have to live with the architecture bugs!

Virtual Prototyping is empowering; it facilitates the exploration of designs in terms of function, performance and power, and enables more deterministic decisions on what configuration of the design topology and hardware/software split is going to deliver the optimum solution. In the process, it helps avoid unforeseen or non-obvious problems with the architecture that might present as Quality-of-Service (QoS) or Denial-of-Service (DoS) issues. You now have a crucial feedback loop between the fast Virtual Prototype model and the architecture design spec. Architecture bugs can be eliminated (avoided!) before they ever make it through to the hardware and software development stages.

Exploring the options

There may be a number of architecture questions that need to be answered in order to arrive at a validated architecture solution.

Solving the architecture up-front saves time and cost, reduces risk, and leads to successful “Correct by Design” hardware and software development.

Consider some of the architecture variables and questions you might need to review: –

- Does the chosen architecture meet the non-functional requirements?

- Which combination of CPUs, GPUs or AI accelerators best balances performance, power and area?

- Do the on-chip bus fabric and bus protocols deliver the product performance requirements (in terms of latency and bandwidth)?

- Which multi-core architecture meets the product performance requirements for the lowest energy consumption?

- Are the caching hierarchy, associated sizes, and algorithms appropriate for the target workloads?

- Which memory controller best fits the latency and bandwidth requirements of the product?

- Which interrupt controller offers the right latency for a real-time design?

- Which architecture is best suited to deliver a secure system?

- Should the team develop key algorithms in software, or map those algorithms to hardware accelerators?

- Which power architecture best meets the low-power targets for the product?

- What software architecture (compilers, system software and application software) is required to meet the product specification?

- Will the architecture behave well under conditions of peak traffic and peak processing load?

There will be many more…

Architecture sweeping

A design-exploration approach is needed to investigate the matrix of options and analyse the resulting function and performance. This analysis must be able to dynamically observe all aspects of behaviour and performance to achieve full insights into the characteristics of the “architecture-under-test”. For example, this might entail observation of the following: –

- Software execution trace

- Task tracing for accelerator units such as a DNN component

- IP block utilization tracing

- Bus throughput tracing (latency, bandwidth, congestion)

- DDR utilization levels

- Relative power tracing

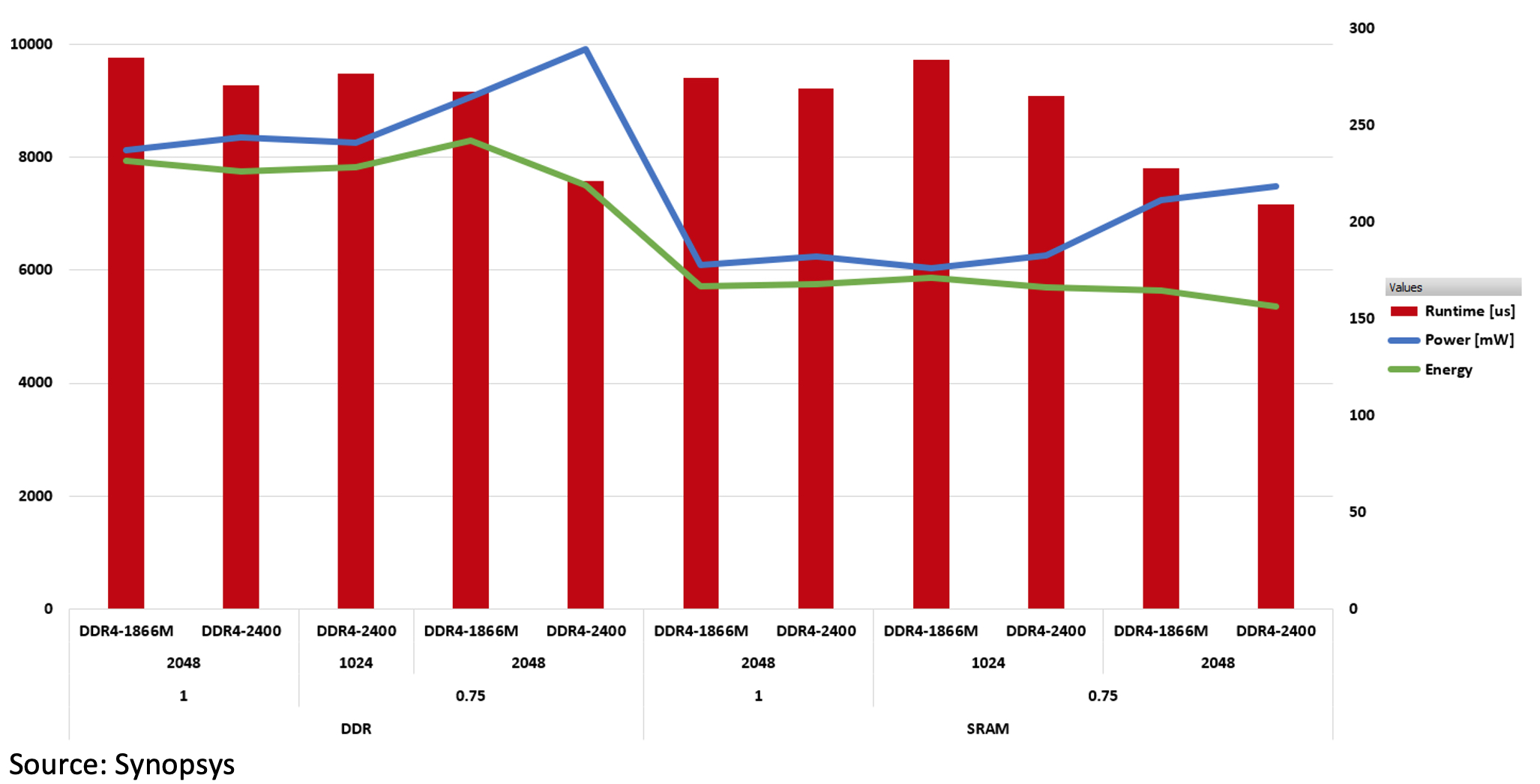

The architecture designer will be able to utilise all of these analyses to debug and reason about which iterations offer the optimum architecture solutions against the product requirements. Some KPIs will determine the overall best fit, such as inference latency for an AI enabled product. These KPIs can be tracked and tabulated by sweeping across the matrix of selected configuration choices.

Source: Synopsys

The figure above shows an example KPI analysis from this architecture sweeping process, showing how different memory system choices impact runtime, power and energy measurements taken from each set of VP analyses.
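The sweep itself is simple to express. The Python sketch below enumerates a small configuration matrix and tabulates KPIs for each point. The `evaluate` function is a hypothetical stand-in for one virtual prototype run (a real flow would launch a simulation and read back measured KPIs), and the core counts, bandwidths, power coefficients and the 20 ms latency target are invented for illustration.

```python
from itertools import product

# Hypothetical design space to sweep.
CORE_COUNTS = [2, 4, 8]
MEMORY_BANDWIDTHS = [8, 16, 32]     # GB/s

LATENCY_TARGET_MS = 20              # hypothetical product requirement

def evaluate(cores, bandwidth):
    """Stand-in for one virtual prototype run; returns a dict of KPIs.

    Runtime is the slower of a compute-bound and a memory-bound estimate.
    Power is a fixed base plus per-core and per-GB/s contributions.
    """
    compute_ms = 80 / cores
    memory_ms = 320 / bandwidth
    runtime_ms = max(compute_ms, memory_ms)
    power_mw = 500 + 400 * cores + 50 * bandwidth
    return {
        "cores": cores,
        "bandwidth": bandwidth,
        "runtime_ms": runtime_ms,
        "power_mw": power_mw,
        "energy_uj": power_mw * runtime_ms,   # mW * ms = microjoules
    }

# Sweep the full matrix and tabulate the KPIs.
results = [evaluate(c, b) for c, b in product(CORE_COUNTS, MEMORY_BANDWIDTHS)]
for r in results:
    print(f"{r['cores']} cores, {r['bandwidth']:>2} GB/s: "
          f"{r['runtime_ms']:5.1f} ms, {r['power_mw']} mW, {r['energy_uj']:.0f} uJ")

# Keep configurations that meet the latency KPI, then pick the lowest energy.
candidates = [r for r in results if r["runtime_ms"] <= LATENCY_TARGET_MS]
best = min(candidates, key=lambda r: r["energy_uj"])
print("best fit:", best["cores"], "cores,", best["bandwidth"], "GB/s")
```

In this toy model the largest configuration wins on energy despite drawing the most power, because it finishes sooner. Results like that are hard to anticipate without running the matrix, which is the argument for sweeping rather than picking a single point design.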

Don’t propagate bad decisions…

Virtual Prototyping is well known for its ability to “shift-left” the software development activity by decoupling it from the hardware RTL development. VPs can further be used to speed up hardware verification environments in simulation, emulation and FPGA, which in turn can shift-left the hardware development. However, we hope we have convinced you that architecture choices also need to be validated, and the earlier the better!

The benefit of doing this up-front, before committing to expensive hardware and software development, is that time will be saved downstream, and the reliability and quality of the end-product will be higher: Correct by Design. Bad architectural decisions should not be allowed to propagate beyond the design exploration stage, and Virtual Prototyping is the ideal methodology to achieve this… especially if planning a trip to Mars.

References

[1] https://scholars.direct/Articles/robotics/jra-1-003.php?jid=robotics

[2] https://www.analyticsinsight.net/artificial-intelligence-iot-sensors-tech-aboard-nasas-perseverance-rover/

[3] https://www.enterpriseai.news/2021/04/01/how-nasa-is-using-ai-to-develop-the-next-generation-of-self-driving-planetary-rovers/
