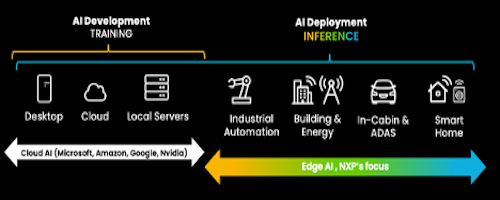

It might seem from popular debate around AI and agentic systems that everything in this field is purely digital, initiated through text or voice prompts, and running in the cloud or on-prem. But that view misses so much. AI is already an everyday experience at the edge: in voice-based control, in object detection and safety-triggered braking and steering responses in cars, in predictive maintenance warnings in factories. These functions are now so commonplace that we may forget AI underlies them. In such use models, which demand real-time response under all conditions, AI must be delivered locally to avoid communication and cloud latencies. Agentic methods are ready to further extend the role of AI at the edge, as I learned in a couple of recent discussions with NXP. For those of us who have been curious about “physical AI”, NXP is very much leaning into this area, in software and in hardware.

The value of agentic at the edge

Imagine an industrial shop floor with banks of constantly running engines, monitored by a small number of human supervisors. At some point an engine overheats and bursts into flame. An agentic system detects the incident and takes corrective action: turning on sprinklers while closing (not locking) open doors to limit the spread of fire. Meanwhile it sends a message to the shop-floor manager, providing details of the event. This is an agentic flow. Sensing: perhaps noise, vibrations, certainly video, temperature. Inferencing: detecting and locating the incident. Actuating: turning on sprinklers, shutting doors, turning off power to nearby systems, and sending alerts through text messages.
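The sense, infer, actuate loop in this scenario can be sketched in a few lines of Python. This is only an illustration of the flow described above, not NXP code: the `Reading` type, the `FIRE_TEMP_C` threshold and the action strings are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical threshold, chosen only for illustration.
FIRE_TEMP_C = 150.0


@dataclass
class Reading:
    """One fused sensor snapshot for a shop-floor zone (sensing)."""
    zone: str
    temperature_c: float
    flame_in_video: bool


def infer_fire(reading: Reading) -> bool:
    """Inferencing: require both video and temperature evidence."""
    return reading.flame_in_video and reading.temperature_c >= FIRE_TEMP_C


def plan_actions(reading: Reading) -> list[str]:
    """Actuating: return the ordered list of actions for this reading."""
    if not infer_fire(reading):
        return []
    zone = reading.zone
    return [
        f"sprinklers_on:{zone}",
        f"close_doors:{zone}",  # closed, not locked
        f"power_off:{zone}",
        f"alert_manager:fire in {zone} at {reading.temperature_c:.0f} C",
    ]


if __name__ == "__main__":
    for action in plan_actions(Reading("bay-3", 240.0, True)):
        print(action)
```

A real deployment would replace the simple threshold with trained models and would drive actual actuators; the point here is only the separation of the three stages.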

There are some differences from compute-centric agentic systems. Input comes in multiple “modalities” such as motion detection, video, audio, etc. Some inputs are analyzed as time series and compared against prior training. Video and audio are similarly compared against pre-training for normal versus anomalous operation. An agentic orchestrator monitors and controls feedback from these agents and can trigger corrective actions as needed.
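As a rough sketch of that arrangement, the Python below models each modality as a small agent that compares a new sample against a baseline learned from normal operation, with an orchestrator that acts only when enough agents agree. The z-score test stands in for whatever trained model a real system would use, and the class name, `orchestrate` function and `min_votes` parameter are assumptions made for this example.

```python
from statistics import mean, stdev


class TimeSeriesAgent:
    """Flags samples that deviate strongly from a baseline of normal data."""

    def __init__(self, name: str, baseline: list[float], z_limit: float = 3.0):
        self.name = name
        self.mu = mean(baseline)
        self.sigma = stdev(baseline)
        self.z_limit = z_limit

    def is_anomalous(self, value: float) -> bool:
        # Simple z-score test; a stand-in for a trained anomaly model.
        return abs(value - self.mu) > self.z_limit * self.sigma


def orchestrate(agents, sample: dict, min_votes: int = 2) -> dict:
    """Collect per-modality verdicts and decide whether to act."""
    flagged = [a.name for a in agents if a.is_anomalous(sample[a.name])]
    return {"flagged": flagged, "act": len(flagged) >= min_votes}


if __name__ == "__main__":
    agents = [
        TimeSeriesAgent("vibration", [1.0, 1.1, 0.9, 1.0, 1.05, 0.95]),
        TimeSeriesAgent("temperature", [70, 71, 69, 70, 72, 68]),
    ]
    print(orchestrate(agents, {"vibration": 3.0, "temperature": 120}))
```

Requiring more than one modality to agree before acting is one simple way to reduce false alarms from a single noisy sensor.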

I talked to Ali Ors (Global Director of AI Strategy and Technologies for Edge Processing at NXP) about their recent announcement of a cloud-based eIQ AI hub and toolkit. This platform supports developing and accelerating agentic AI at the edge for applications like this factory example, or for other areas (automotive, avionics and robotics). eIQ AI targets a range of NXP hardware platforms offering AI support, from MCUs up to S32N7 processors (see the next section) and discrete NPUs including their Ara platform.

eIQ AI builds on established industry standards and emerging standards for agentic systems to provide a lot of functionality right out of the box. Leveraging these capabilities, NXP have been working with ModelCat, who provide support to build custom agentic models in days rather than years. This is incredibly valuable because few companies today have armies of PhD data scientists to build and maintain agentic models from scratch, for problems they need to solve today, not years from now.

There’s another point I consider very important. In general discussion around mainstream digital agents and agentic systems, high accuracy and security still seem to come across in practice as a goal rather than a near-term requirement. That is not good enough for these physical AI applications. While NXP do not themselves build deployed agentic solutions, they provide significant infrastructure (safety, security, multiple AI and non-AI engines able to run in parallel) to support their customers and customers’ customers to meet these much more demanding targets.

NXP S32N7 promotes agentic innovation

The old approach to electronic control in a car, distributing MCUs around engine, body and cabin control functions, is now impractical. Architectures have evolved to more centralized hierarchies, consolidating more capabilities and control in zonal functions. Nicola Concer (Senior Product Manager, Vehicle Control and Networking Solutions, NXP) shared insight into ongoing motivation for consolidation. NXP has a widely established and dominant gateway (automotive networking) product called S32G. Already this gateway touches almost everything within a network-connected car. Given this reality, customers have asked NXP to take the next logical step. Could they integrate motion intelligence, body intelligence and ADAS intelligence into that same platform? Consolidating that hardware and software in the S32N7 increases performance and reduces cost while simplifying design and maintenance.

Which for me prompted a question: will these devices serve as zonal controllers or as zonal and central controllers? Nicola told me I shouldn’t think of an architecture in which everything coalesces into one giant central controller or a central controller with one level of branching to zonal controllers. Think of a more general tree in which some branches may themselves sprout branches. An OEM architects a hierarchy to meet fleet objectives while also standardizing on a family of common controllers. The root device may be something else, say for autonomous driving, but NXP can manage the rest of the tree, offering ample opportunities for innovation.
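To make the tree picture concrete, here is a minimal Python sketch of a controller hierarchy in which a branch can itself have branches. The controller names are invented for illustration and do not correspond to any actual OEM architecture or NXP part assignment.

```python
from dataclasses import dataclass, field


@dataclass
class Controller:
    """A node in a vehicle control hierarchy (names are hypothetical)."""
    name: str
    children: list["Controller"] = field(default_factory=list)

    def add(self, child: "Controller") -> "Controller":
        self.children.append(child)
        return child

    def depth(self) -> int:
        """Number of levels from this node down to its deepest leaf."""
        return 1 + max((c.depth() for c in self.children), default=0)

    def walk(self):
        """Yield controller names in depth-first order."""
        yield self.name
        for child in self.children:
            yield from child.walk()


if __name__ == "__main__":
    root = Controller("central-compute")  # could be a non-NXP device
    root.add(Controller("zone-front"))
    rear = root.add(Controller("zone-rear"))
    rear.add(Controller("door-module-rear-left"))  # a branch off a branch
    print(list(root.walk()), "depth:", root.depth())
```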

The standard way for OEMs/Tier1s to innovate is simply to enhance existing features. But Nicola suggests bigger opportunities to stand out are through inferences across domains. Here’s a simple example: You park, want to open your door, but a car is approaching from behind. Cross-domain inference detects the car through radar, detects you are trying to open your door and sounds an alarm, maybe even resists you opening the door.
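The door example can be sketched as a small decision function that combines a radar input (ADAS domain) with door and parking state (body domain). This is a hedged illustration only: the types, the time-to-contact rule and the two thresholds are assumptions for the example, not values from NXP or any OEM.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class DoorAction(Enum):
    NONE = "none"
    WARN = "warn"      # sound an alarm
    RESIST = "resist"  # alarm plus resist the door opening


@dataclass
class RadarTrack:
    """Rear radar object (ADAS domain)."""
    distance_m: float
    closing_speed_mps: float  # positive means approaching


@dataclass
class BodyState:
    """Door and parking state (body domain)."""
    parked: bool
    door_handle_pulled: bool


def door_exit_decision(radar: Optional[RadarTrack], body: BodyState,
                       warn_ttc_s: float = 4.0,
                       resist_ttc_s: float = 2.0) -> DoorAction:
    """Combine both domains using time-to-contact (hypothetical thresholds)."""
    if not (body.parked and body.door_handle_pulled):
        return DoorAction.NONE
    if radar is None or radar.closing_speed_mps <= 0:
        return DoorAction.NONE
    ttc_s = radar.distance_m / radar.closing_speed_mps
    if ttc_s <= resist_ttc_s:
        return DoorAction.RESIST
    if ttc_s <= warn_ttc_s:
        return DoorAction.WARN
    return DoorAction.NONE


if __name__ == "__main__":
    print(door_exit_decision(RadarTrack(15.0, 10.0), BodyState(True, True)))
```

Neither domain alone can make this call: radar does not know the occupant's intent, and the door module does not know what is approaching.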

There are many other such opportunities, when driving, when stationary, when charging your car, and so on. Sensing, inferencing and actuating in each case. All powered by agentic methods.

The S32N7 together with eIQ enables this innovation. Agentic systems here can run agents on application or real-time cores. They can run models on embedded NPUs within the processor, perhaps to infer tire status from tire pressure time series, or to infer tire noise for active noise cancellation inside the cabin. For more complex inferences, an orchestrator can communicate through PCIe to a discrete Kinara NPU plugged in as an AI expansion card. Multiple paths to an inference also allow for cross checking by comparing answers generated through different paths, an important safety consideration in some cases.
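Cross checking between two inference paths can be illustrated with a very small comparison routine. In this sketch the two inputs stand for the same quantity estimated through different paths (say, an embedded NPU and a discrete NPU); the function name, the tolerance and the averaging policy are assumptions for illustration, not a description of how the S32N7 or eIQ implement it.

```python
def cross_check(primary: float, secondary: float, tolerance: float) -> dict:
    """Accept a result only if two independent paths agree within tolerance."""
    if abs(primary - secondary) <= tolerance:
        # Paths agree: report the average and mark it trusted.
        return {"value": (primary + secondary) / 2, "trusted": True}
    # Paths disagree: withhold the value so the caller can fall back safely.
    return {"value": None, "trusted": False}


if __name__ == "__main__":
    # Hypothetical tire pressure estimates (bar) from two paths.
    print(cross_check(2.30, 2.34, tolerance=0.1))
    print(cross_check(2.30, 1.60, tolerance=0.1))
```

On disagreement the sketch returns no value at all, leaving the caller to choose a safe fallback such as raising a diagnostic or repeating the measurement.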

Very impressive. For me this is an inspiration showing that high-fidelity, high-safety agentic options are a real possibility. Maybe some of these ideas can flow back into cloud-based agents? You can read more about the eIQ platform HERE and the S32N7 family HERE.
