The immediate appeal of large language models (LLMs) is that you can ask any question in natural language, just as you would ask an expert, and get an answer. Unfortunately, that answer may be useful only in simple cases. When posing a question we often implicitly assume significant context and skate over ambiguities. Then we are surprised when the answer the LLM provides completely misses our expectation.

The reason for the miss is that initial guidance was insufficient. Rather than trying to stuff all the necessary context into a new prompt, standard practice is to refine initial guidance through added prompts, as in the following (fake) example. No longer a simple prompt, this looks more like an algorithm, though still expressed in natural language. Welcome to prompt engineering, a new discipline requiring user training and familiarity with a range of prompt engineering techniques in order to craft effective queries for LLM applications.

Techniques in prompt engineering

This domain is still quite new, as seen in Google Scholar, where the great majority of papers date from 2023 onwards. Outside of Google Scholar I have found multiple papers on use of LLMs in support of software debug and to a lesser extent hardware debug, but I have found very little on prompt engineering in these domains. There are also freemium apps to help optimize prompts (I’ll touch on these later), though I’m not sure how much these could help in engineering debug given their more likely business-centric client base.

Lacking direct sources, I will synthesize my view from a variety of sources (listed at the end), extrapolating to how I think these methods might apply in hardware debug. I would love to see responses that disprove or confirm these assumptions.

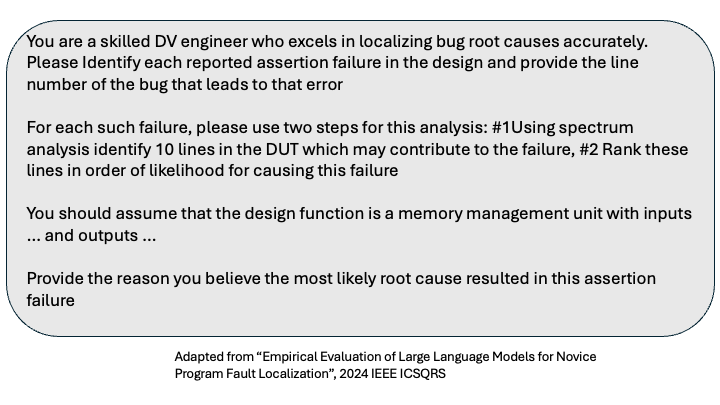

Context is important in debug, even though it is unclear how it might play a role in conventional algorithmic debug. LLM-based debug could in principle help bridge between high-level context and low-level detail, for example by requiring that the LLM answer from an expert engineering viewpoint. Yes, that should be a default, but it isn’t when you start with a general-purpose model trained on a wide spectrum of expertise in many domains. Less obvious is the value of including information about the design function. This might narrow context somewhat within general training, maybe more so through in-house fine-tuning or legacy in-context training. Either way, providing this information might help more than you expect, even though your prompt suggestion may appear very high level.
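To make this concrete, here is a minimal sketch of how a role and a short design description might be placed ahead of the actual question. Everything in it is my own illustration: the function name, the AXI-to-APB bridge, and the question are hypothetical, and the system/user message layout is simply the convention many chat-style LLM interfaces use, not a specific vendor API.

```python
def build_context_prompt(role: str, design_summary: str, question: str) -> list[dict]:
    """Return a chat-style message list that states role and design context first.

    The role/content dictionary layout is an assumed convention, not tied to
    any particular LLM provider.
    """
    system = (
        f"You are {role}. "
        f"Design under debug: {design_summary} "
        "Answer from an expert engineering viewpoint."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


# Hypothetical design and question, for illustration only.
messages = build_context_prompt(
    role="an experienced RTL verification engineer",
    design_summary="an AXI-to-APB bridge with a 4-entry write buffer.",
    question="Why might a write response arrive before the APB transfer completes?",
)

for message in messages:
    print(f"[{message['role']}] {message['content']}")
```

The point of the split is that the high-level context stays fixed across a debug session while the low-level question changes from prompt to prompt.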

Chain of Thought (CoT) prompting, telling the LLM to reason through a question in steps, has proved to be one of the more popular prompting techniques. We ourselves don’t reason through a complex problem in one shot, and LLMs also struggle when a simple question or prompt is aimed at a complex problem. We humans break such a problem down into simpler steps and attack those steps in sequence. The same approach can work with LLMs. For example, in trying to trace a failure to its root cause we might ask the LLM to apply conventional (non-AI) methods to grade possible fault locations, then rank that list based on factors like suspiciousness, and then provide its reasoning for each of the top 5 candidates. One caution is that this method apparently doesn’t work so well on more advanced LLMs like GPT-4o, which seem to prefer to figure out their own CoT reasoning.
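The grade, rank, explain sequence described above could be spelled out in a prompt along the following lines. This is only a sketch under my own assumptions: the function name, the failure description, and the candidate locations are all hypothetical, and the wording of the steps is one of many possible phrasings.

```python
def build_cot_prompt(failure_summary: str, candidates: list[str], top_n: int = 5) -> str:
    """Assemble a step-by-step (chain-of-thought style) fault localization prompt."""
    steps = [
        "Grade each candidate fault location using conventional (non-AI) evidence.",
        "Rank the candidates from most to least suspicious.",
        f"For each of the top {top_n} candidates, explain your reasoning.",
    ]
    lines = [f"Failure: {failure_summary}", "", "Candidate locations:"]
    lines += [f"- {candidate}" for candidate in candidates]
    lines += ["", "Work through these steps in order:"]
    lines += [f"{number}. {step}" for number, step in enumerate(steps, start=1)]
    return "\n".join(lines)


# Hypothetical failure and candidate list, for illustration only.
cot_prompt = build_cot_prompt(
    failure_summary="FIFO read data mismatch after back-to-back writes.",
    candidates=["fifo_ctrl.sv: write pointer update", "fifo_mem.sv: read enable gating"],
)
print(cot_prompt)
```

Given the caution about more advanced models, it may be worth keeping the explicit steps optional so the same question can be tried with and without them.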

Another technique is in-context learning, also called few-shot learning: learning from a few samples provided in a prompt. I see this method mentioned often in code creation applications, but I have yet to find a published example for verification, except for code repair in debugging. However, there is recent work on code summarization driven by few-shot learning, which I think could be a starting point for augmenting prompts with semantic hints in support of fault localization.
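As a rough illustration of few-shot prompting applied to code summarization, the sketch below places a couple of worked code/summary pairs ahead of the fragment to be summarized. The class, function, and Verilog snippets are hypothetical examples of mine and are not taken from the cited work.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked sample shown to the model: a code fragment and its summary."""
    code: str
    summary: str


def build_few_shot_prompt(examples: list[Example], target_code: str) -> str:
    """Place worked examples before the target so the model can imitate them."""
    parts = ["Summarize what each code fragment does in one sentence."]
    for example in examples:
        parts.append(f"Code:\n{example.code}\nSummary: {example.summary}")
    # The final entry leaves the summary blank for the model to complete.
    parts.append(f"Code:\n{target_code}\nSummary:")
    return "\n\n".join(parts)


# Hypothetical samples, for illustration only.
samples = [
    Example("assign full = (count == DEPTH);", "Flags the FIFO as full at maximum depth."),
    Example("assign empty = (count == 0);", "Flags the FIFO as empty when no entries remain."),
]
few_shot_prompt = build_few_shot_prompt(samples, "assign almost_full = (count >= DEPTH - 1);")
print(few_shot_prompt)
```

The summaries returned for fragments near a failure could then be added to a fault localization prompt as semantic hints, which is the extrapolation I have in mind.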

There are other techniques, such as chain-of-tables for reasoning on table-structured data and tree-of-thoughts to explore multiple paths, but in this brief article it is best to move on to methods to automate this newfound complexity.

Automated prompt engineering

A general characteristic of ad hoc prompt engineering seems to be high sensitivity to how prompts are worded/constructed. I can relate. I use a DALL-E 3 tool to generate images for some of my blogs and find that beyond my initial attempt and a few simple changes it is very difficult to tune prompts predictably towards something that better matches my goal.

Leading AI providers now offer prompt generators, such as this one for ChatGPT, which will tell you what essentials you should add to your request and then generate a new and very detailed prompt. This has the added benefit of optimizing to the host model’s preferences (e.g. whether to spell out step-based reasoning or not). I tried this for image generation. It built an impressively complex prompt which unfortunately was too long to be accepted by my free subscriptions to either of two image generators. Google DeepMind’s OPRO has a somewhat similar objective, though as far as I can tell it directly front-ends the LLM, optimizing your input “meta-prompt”, then feeding that into the LLM.

There is also an emerging class of prompt engineering tools, though I wonder how effective these can be given the rapid evolution of LLMs and the generally opaque, still-emerging characteristics of those systems. In prompt engineering the best options may still be those offered by the big model builders, augmented by your own promptware.

Happy prompting!

References

CACM: Tools for Prompt Engineering

Promptware Engineering: Software Engineering for LLM Prompt Development

Prompt Engineering: How to Get Better Results from Large Language Models

Empirical Evaluation of Large Language Models for Novice Program Fault Localization

Automatic Semantic Augmentation of Language Model Prompts (for Code Summarization)
