For decades, speculative execution was a brilliant solution to a fundamental bottleneck: CPUs were fast, but memory access was slow. Rather than wait idly, processors guessed the next instruction or data fetch and executed it ‘just in case.’ Speculative execution traces its lineage back to Robert Tomasulo’s work at IBM in the 1960s. His algorithm—developed for the IBM System/360 Model 91—introduced out-of-order execution and register renaming. This foundational work powered performance gains for over half a century and remains embedded in most high-performance processors today.

But as workloads have shifted—from serial code to massively parallel AI inference—speculation has become more burden than blessing. Today’s data centers dedicate massive silicon and power budgets to hiding memory latency through out-of-order execution, register renaming, deep cache hierarchies, and predictive prefetching. These mechanisms are no longer helping—they’re hurting. The effort to keep speculative engines fed has outpaced the benefit they provide.

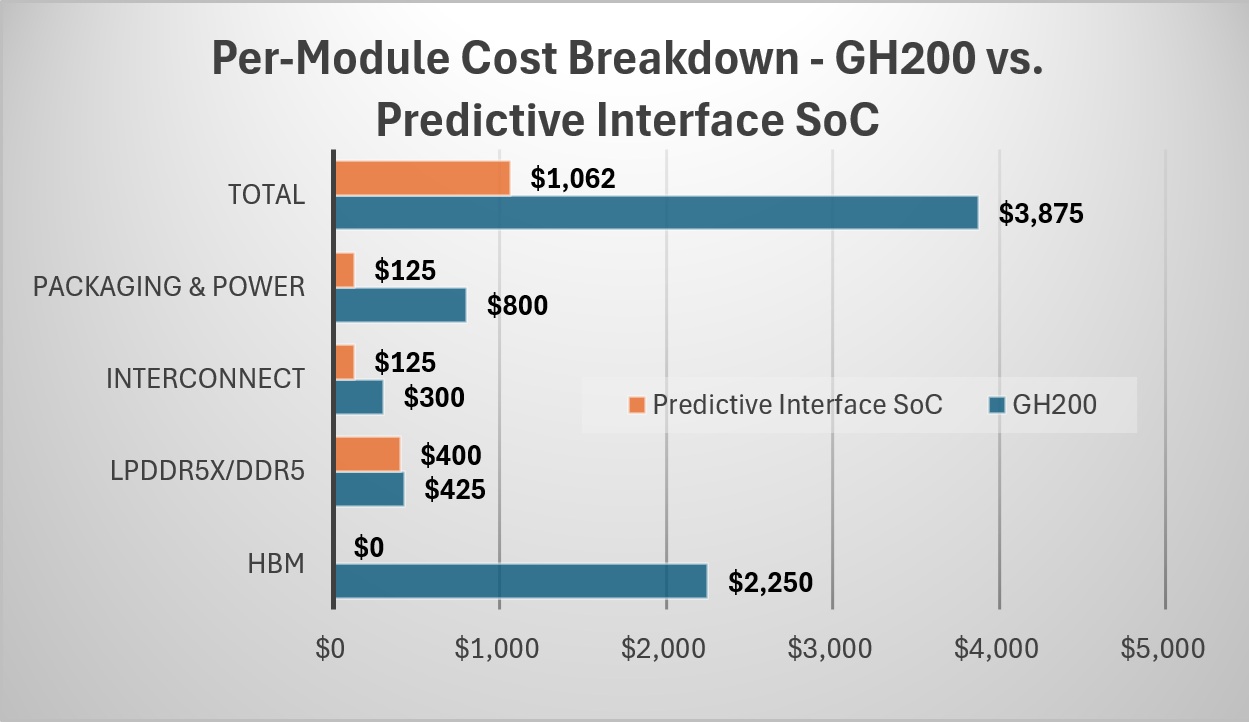

It’s time to rethink the model. This article explores the economic, architectural, and environmental case for moving beyond speculation, and shows how a predictive execution interface can dramatically reduce system cost, complexity, and energy use in AI data centers. Fig. 1 compares integration costs per module side by side: predictive interface SoCs eliminate the need for HBM3 and complex speculative logic, cutting integration cost by more than 3×.

When IBM introduced Tomasulo’s algorithm in the 1960s, “Think” was the company’s unofficial motto, a call to push computing forward. The 21st century calls for a new mindset, one that echoes Apple’s challenge to the status quo: “Think Different.” Tomasulo changed computing for his era. Today, Dr. Thang Tran is picking up that torch with a new architecture that reimagines how CPUs coordinate with accelerators. Predictive execution is more than an improvement; it’s the next inflection point.

Freeway Traffic Analogy: Speculative vs. Predictive Execution

Imagine you’re driving on a crowded freeway during rush hour. Speculative execution is like changing lanes the moment you see a temporary opening—hoping it will be faster. You swerve into that new lane, pass 20 cars… and then hit the brakes. That lane just slowed to a crawl, and you have to switch again, wasting time and fuel with every guess.

Predictive execution gives you a drone’s-eye view of the next 255 car lengths. You can see where slowdowns will happen and where the traffic flow is smooth. With that insight, you plan your lane changes in advance—no jerky swerves, no hard stops. You glide through traffic efficiently, never getting stuck. This is exactly what predictive interfaces bring to chip architectures: fewer stalls, smoother data flow, and far less waste.

Let’s examine the cost of speculative computing in current hyperscaler designs. The NVIDIA Grace Hopper Superchip (GH200) integrates a 72-core Grace CPU with a Hopper GPU via NVLink-C2C, feeding the CPU from LPDDR5x and the GPU from HBM3. While this architecture delivers impressive performance, it also incurs a heavy bill-of-materials (BoM) cost because of its reliance on HBM3 high-bandwidth memory (96–144 GB), CoWoS packaging to integrate the GPU and HBM stacks, deep caches, register renaming, warp scheduling logic, and power delivery for high-performance memory subsystems.

GH200 vs. Predictive Interface: Module Cost Comparison

| GH200 Module Components | Cost | Architecture with Predictive Interface | Cost |
|---|---|---|---|
| HBM3 (GPU-side) | $2,000–$2,500 | DDR5/LPDDR5 memory (shared) | $300–$500 |
| LPDDR5x (CPU-side) | $350–$500 | Interface control fabric (scheduler + memory coordination) | $100–$150 |
| Interconnect & Control Logic (NVLink-C2C + PHYs) | $250–$350 | Standard packaging (no CoWoS) | $250–$400 |
| Packaging & Power Delivery (CoWoS, PMICs) | $600–$1,000 | Simplified power delivery | $100–$150 |
| Total per GH200 module | $3,200–$4,350 | Total cost per module | $750–$1,200 |
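The totals and the “more than 3×” figure can be checked directly against the component rows. The short sketch below sums the low and high estimates separately and compares the two architectures; the dictionary names are illustrative only, and every dollar figure comes from the table.

```python
# Per-module cost ranges in USD, (low, high), copied from the comparison table.
GH200_MODULE = {
    "HBM3 (GPU-side)": (2_000, 2_500),
    "LPDDR5x (CPU-side)": (350, 500),
    "Interconnect & control logic (NVLink-C2C + PHYs)": (250, 350),
    "Packaging & power delivery (CoWoS, PMICs)": (600, 1_000),
}
PREDICTIVE_MODULE = {
    "DDR5/LPDDR5 memory (shared)": (300, 500),
    "Interface control fabric": (100, 150),
    "Standard packaging (no CoWoS)": (250, 400),
    "Simplified power delivery": (100, 150),
}


def total_range(components):
    """Sum the low and the high estimates of every component separately."""
    low = sum(lo for lo, _ in components.values())
    high = sum(hi for _, hi in components.values())
    return low, high


gh_low, gh_high = total_range(GH200_MODULE)
pi_low, pi_high = total_range(PREDICTIVE_MODULE)

# Ratio of range midpoints, plus the most pessimistic pairing for comparison.
mid_ratio = ((gh_low + gh_high) / 2) / ((pi_low + pi_high) / 2)
worst_ratio = gh_low / pi_high

print(f"GH200 module:      ${gh_low:,}-${gh_high:,}")
print(f"Predictive module: ${pi_low:,}-${pi_high:,}")
print(f"Midpoint ratio: {mid_ratio:.2f}x, worst-case ratio: {worst_ratio:.2f}x")
```

The midpoints differ by roughly 3.9×, so the “more than 3×” claim holds at the center of the ranges; pairing the cheapest GH200 estimate with the most expensive predictive-interface estimate narrows the gap to about 2.7×.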

A Cost-Optimized Alternative

An architecture with a predictive interface eliminates speculative execution and instead employs time-scheduled, deterministic coordination between scalar CPUs and vector/matrix accelerators. This approach removes speculative logic (out-of-order execution, warp schedulers), makes memory latency predictable (which reduces cache and bandwidth pressure), enables the use of standard DDR5/LPDDR memory, and requires simpler packaging and power delivery. In the same data center configuration, this would yield a total integration cost of $2.4M–$3.8M, for total estimated savings of $7.8M–$10.1M per deployment.
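The deployment size behind these totals is not stated in this section. As an assumption, about 3,200 modules reproduces the quoted figures from the per-module table; the sketch below shows that arithmetic, pairing low estimates with low and high with high. `MODULES_PER_DEPLOYMENT` is a hypothetical value inferred from the totals, not a number given by the source.

```python
# Hypothetical: inferred so that the per-module table reproduces the
# quoted $2.4M-$3.8M predictive-interface integration cost.
MODULES_PER_DEPLOYMENT = 3_200

GH200_PER_MODULE = (3_200, 4_350)     # USD (low, high), from the table
PREDICTIVE_PER_MODULE = (750, 1_200)  # USD (low, high), from the table


def deployment_cost(per_module, modules=MODULES_PER_DEPLOYMENT):
    """Scale a (low, high) per-module cost range to a whole deployment."""
    low, high = per_module
    return low * modules, high * modules


def millions(usd):
    """Format a dollar amount in millions with one decimal place."""
    return f"${usd / 1e6:.1f}M"


gh = deployment_cost(GH200_PER_MODULE)
pi = deployment_cost(PREDICTIVE_PER_MODULE)
# Low pairs with low and high with high, matching the article's range.
savings = (gh[0] - pi[0], gh[1] - pi[1])

print("GH200:     ", millions(gh[0]), "-", millions(gh[1]))
print("Predictive:", millions(pi[0]), "-", millions(pi[1]))
print("Savings:   ", millions(savings[0]), "-", millions(savings[1]))
```

Under that assumption, the GH200 baseline comes to about $10.2M–$13.9M, and the difference matches the $7.8M–$10.1M savings quoted above.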

While the benefits of predictive execution are substantial, implementing it does not require a complete redesign of a speculative computing system. In most cases, the predictive interface can be retrofitted into the existing instruction execution unit, replacing the speculative logic block with a deterministic scheduler and timing controller. This retrofit eliminates complex out-of-order execution structures, speculative branching, and register renaming, removing approximately 20–25 million gates. In their place, the predictive interface introduces a timing-coordinated execution fabric of 4–5 million gates, for a net reduction of roughly 15–21 million gates. The result is a cleaner, more power-efficient design that accelerates time-to-market and reduces the verification burden.
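The deterministic scheduler is not specified in detail here. Purely as an illustration of time-scheduled issue, the sketch below assumes every latency is known up front, so each instruction can be given a fixed issue cycle at dispatch with no branch guessing, rollback, or register renaming. The `Instr` and `schedule` names, the one-issue-per-unit-per-cycle rule, and the example latencies are hypothetical simplifications, not the predictive interface’s actual design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    name: str
    dest: str
    srcs: tuple
    unit: str     # functional unit, e.g. "alu", "load", "vmac" (hypothetical)
    latency: int  # cycles; assumed fixed and known at dispatch


def schedule(program):
    """Assign every instruction a fixed issue cycle when it is dispatched.

    Simplified model: each register records the cycle its latest value
    becomes readable, each functional unit accepts one instruction per
    cycle, and issue cycles never decrease in program order. Nothing is
    guessed, so nothing is ever flushed or replayed.
    """
    reg_ready = {}       # register -> cycle its latest value is readable
    unit_slots = set()   # (unit, cycle) issue slots already reserved
    plan = []
    last_issue = 0
    for ins in program:
        # Source operands must be ready, and issue order is preserved.
        t = max([last_issue] + [reg_ready.get(r, 0) for r in ins.srcs])
        # An older write to the same register must land first.
        t = max(t, reg_ready.get(ins.dest, 0) - ins.latency + 1)
        # Wait for a free issue slot on the required functional unit.
        while (ins.unit, t) in unit_slots:
            t += 1
        unit_slots.add((ins.unit, t))
        reg_ready[ins.dest] = t + ins.latency
        plan.append((ins.name, t, t + ins.latency))
        last_issue = t
    return plan


prog = [
    Instr("load r1", "r1", (), "load", 4),
    Instr("load r2", "r2", (), "load", 4),
    Instr("mul r3", "r3", ("r1", "r2"), "vmac", 3),
    Instr("add r4", "r4", ("r3",), "alu", 1),
]
for name, issue, ready in schedule(prog):
    print(f"{name:8} issue@{issue:2} ready@{ready:2}")
```

Because every issue and completion cycle is fixed in advance, the hardware only needs counters and a reservation table rather than the reorder buffers and rename tables that speculation requires; that trade is the intuition behind the gate-count reduction described above.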

Is $10M in Savings Meaningful for NVIDIA?

At NVIDIA’s global revenue scale (~$60B in FY2024), a $10M delta is negligible. But for a single data center deployment, it can directly affect total cost of ownership, pricing, and margins. Scaled across 10–20 deployments, savings reach roughly $78M–$202M. As competitive pressure rises from RISC-V and low-cost inference chipmakers, speculative execution becomes a liability. Predictive interfaces offer not just architectural efficiency but a competitive edge.
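A quick check of the scaling, using only the $7.8M–$10.1M per-deployment range quoted earlier. The `scaled_savings` helper is illustrative.

```python
def scaled_savings(deployments, per_deployment=(7.8e6, 10.1e6)):
    """Scale the quoted per-deployment savings range (USD) linearly."""
    low, high = per_deployment
    return deployments * low, deployments * high


for n in (10, 20):
    low, high = scaled_savings(n)
    print(f"{n} deployments: ${low / 1e6:.0f}M-${high / 1e6:.0f}M")
```

Ten deployments yield about $78M–$101M and twenty about $156M–$202M, so the total clears $100M at the top of the ten-deployment range and everywhere in the twenty-deployment range.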

Environmental Impact

Beyond cost and performance, replacing speculative execution with a predictive interface can yield significant environmental benefits. By reducing compute power requirements, eliminating the need for HBM and liquid cooling, and improving overall system efficiency, data centers can significantly lower their carbon footprint.

- Annual energy use is reduced by ~16,240 MWh

- CO₂ emissions drop by ~6,500 metric tons

- Up to 2 million gallons of water saved annually by eliminating liquid cooling
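The energy and emissions figures can be cross-checked against each other. The sketch below uses only the quoted numbers and assumes round-the-clock operation (8,760 hours per year) to convert annual energy into an average power draw.

```python
ENERGY_SAVED_MWH = 16_240  # per year, quoted above
CO2_SAVED_T = 6_500        # metric tons per year, quoted above
HOURS_PER_YEAR = 8_760     # assumption: continuous 24/7 operation

# Grid emission factor implied by the two quoted figures.
implied_t_per_mwh = CO2_SAVED_T / ENERGY_SAVED_MWH
# Average electrical load removed, in megawatts.
avg_power_mw = ENERGY_SAVED_MWH / HOURS_PER_YEAR

print(f"Implied emission factor: {implied_t_per_mwh:.2f} t CO2/MWh")
print(f"Average load removed:    {avg_power_mw:.2f} MW")
```

The two figures are consistent with a grid emission factor of about 0.40 t CO₂ per MWh, and the energy saving corresponds to roughly 1.85 MW of continuous load.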

Conclusion: A Call for Predictable Progress

Speculative execution has long served as the backbone of high-performance computing, but its era is showing cracks—both in cost and efficiency. As AI workloads scale exponentially, the tolerance for waste—whether in power, hardware, or system complexity—shrinks. Predictive execution offers a forward-looking alternative that aligns not only with performance needs but also with business economics and environmental sustainability.

The data presented here makes a compelling case: predictive interface architectures can slash costs, lower emissions, and simplify designs without compromising throughput. For NVIDIA, its peers, and the hyperscalers they supply, the question is no longer whether speculative execution can keep up, but whether it’s time to leap ahead with a smarter, deterministic approach.

As we reach the tipping point of compute demand, predictive execution isn’t just a refinement—it’s a revolution waiting to be adopted.
