Qualcomm is a well-known name for chips in the mobile industry. The company generated $33 billion in revenue in 2021 and continues to march ahead with its innovations. However, Qualcomm doesn’t get the same visibility and mentions as Nvidia and Intel in the world of AI chips. By our estimate, Qualcomm’s contribution to the AI chip market is comparable to that of Intel and Nvidia, given the volume of smartphone shipments and the silicon content dedicated to AI in recent years. Qualcomm has been steadily making progress in key AI chip markets and has perhaps the most diverse and comprehensive portfolio, one that caters to all of them.

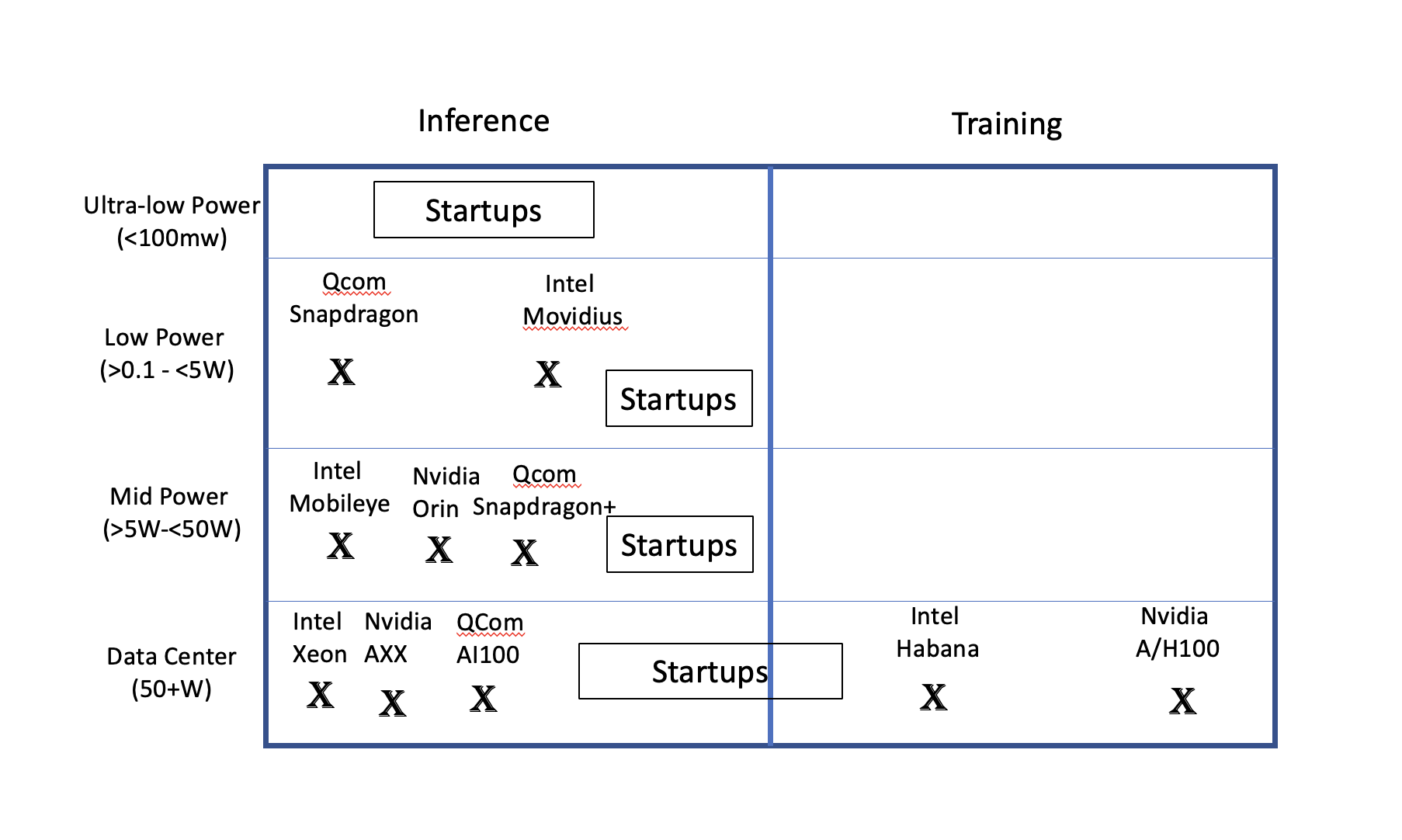

Figure: Segments of the AI chip market and the products in each.

The AI chip market has grown significantly in the past few years, and you can read all about it in JP Data’s latest report on AI chips. According to the analysis, the overall AI chip market is best segmented by power consumption: data center AI chips (50+W), mid power AI chips (5-50W, primarily for automotive and similar markets), low power AI chips (0.1-5W, primarily for mobile and client computing), and ultra-low power AI chips (<0.1W, for always-on applications). There is no sign of a slowdown in AI yet, with enterprises as well as edge device makers eager to test out new solutions. Many use cases and exciting applications continue to emerge. Proof-of-concept applications that are going into production are driving the need for AI inference chips.
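The power-based segmentation described above can be sketched as a simple lookup. This is a minimal illustration, not part of the report: the function name `classify_by_power` and the handling of boundary values (exactly 5W or 50W is assigned to the higher segment) are assumptions, since the ranges quoted above overlap at their edges.

```python
# Segment names and lower bounds (in watts) follow the ranges quoted in the text.
# Assumption: a value that sits exactly on a boundary belongs to the higher segment.
SEGMENTS = [
    ("data center", 50.0),      # 50+W
    ("mid power", 5.0),         # 5-50W, automotive and similar markets
    ("low power", 0.1),         # 0.1-5W, mobile and client computing
    ("ultra-low power", 0.0),   # <0.1W, always-on applications
]


def classify_by_power(watts: float) -> str:
    """Return the AI chip market segment for a given power draw in watts."""
    if watts < 0:
        raise ValueError("power consumption cannot be negative")
    # SEGMENTS is ordered from highest to lowest bound, so the first match wins.
    for name, lower_bound in SEGMENTS:
        if watts >= lower_bound:
            return name
    raise AssertionError("unreachable: the last segment has a lower bound of 0")


if __name__ == "__main__":
    # Hypothetical power figures, chosen only to exercise each segment.
    for watts in (75.0, 15.0, 2.0, 0.05):
        print(f"{watts} W -> {classify_by_power(watts)}")
```

A table-driven lookup keeps the thresholds in one place, so the boundaries can be adjusted if a different segmentation is preferred.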

Qualcomm is poised to play in all of these markets, which sets it apart from other companies. For the data center market, the company has introduced the Cloud AI 100 chip, and the results it submitted to MLPerf compete well with Nvidia’s. Qualcomm touts significantly higher performance per watt than the competition. Qualcomm is actively adapting its Snapdragon product line to support the automotive market and recently claimed design wins at BMW. Qualcomm’s dominance in the low power segment of the mobile world is well known and needs no introduction. The same chips offer an ultra-low power mode for always-on applications, enabling a whole new set of AI use cases for device manufacturers.

Setting training aside, this makes its portfolio even more comprehensive than those of Nvidia and Intel. Neither Nvidia nor Intel, for example, has products in the mobile space, and neither has solutions for the ultra-low power market.

Qualcomm was somewhat late to the party and initially focused on accelerating AI by enhancing its Hexagon DSP and Adreno GPU. The company then acquired Nuvia to create a new AI accelerator. At Microsoft’s 2022 Build conference, Project Volterra was announced: a new device powered by a Snapdragon chip that contains a dedicated AI accelerator, or neural processing unit (NPU). Support for the dedicated accelerator will become part of Microsoft’s Windows 11. With the included SDK for building AI applications, the chip will enable AI usage within a large number of Windows applications and could potentially challenge x86 dominance in the PC world.

Qualcomm has since invested heavily in AI. It announced a $100 million AI fund back in 2018, has aggressively invested in AI R&D, and has released an SDK that lets developers take a model and customize it for mobile, automotive, IoT, robotics, or other markets. While there is no data on the number of active AI developers for Qualcomm, we expect it to be much lower than the developer bases Nvidia and Intel can boast of. In fact, Google Trends shows that searches for Qualcomm AI are far below those for Nvidia AI or Intel AI, suggesting that there’s a lot of catching up to do.

The AI chip market is still emerging. Nvidia has become the de facto standard in training, but the inference market is just starting to ramp up. If Qualcomm is indeed able to offer a consistent software experience across different market segments, it has the potential to become a formidable player in the AI chip market.
