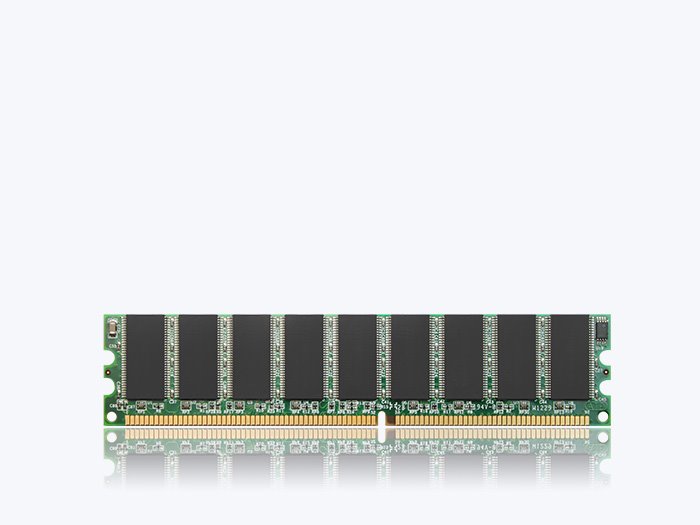

What DDR is

DDR SDRAM is the mainstream dynamic memory architecture for PCs, workstations, and servers. “Double Data Rate” means data transfers occur on both clock edges, doubling throughput without raising the clock frequency. DDR comes in generations (DDR → DDR2 → DDR3 → DDR4 → DDR5) that increase bandwidth, improve efficiency/reliability, and raise practical capacities.

Core ideas & signal scheme

- Double-edge transfers: Data strobes (DQS) latch reads/writes on rising and falling edges.

- Prefetch & bursts: Internally, the DRAM core runs slower than the I/O; data is “prefetched” (e.g., 8n in DDR3/DDR4, 16n in DDR5) and emitted in bursts (DDR4 BL8; DDR5 BL16 for better efficiency).

- Banks & bank groups: Many parallel banks allow multiple open/queued operations; bank groups reduce timing conflicts at high speed.

- On-die termination (ODT): Improves signal integrity on longer motherboard traces typical of socketed modules.
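The burst lengths above can be tied to bus width with a little arithmetic. The sketch below assumes a 64-bit DDR4 channel and a 32-bit DDR5 sub-channel; the function name is illustrative only. It shows why doubling the burst length while halving the width still delivers the same 64 bytes per burst, which matches a typical CPU cache line.

```python
def burst_bytes(burst_length: int, bus_width_bits: int) -> int:
    """Bytes delivered by one burst: beats per burst times bytes per beat."""
    return burst_length * bus_width_bits // 8


# DDR4: BL8 on a 64-bit channel. DDR5: BL16 on a 32-bit sub-channel.
ddr4 = burst_bytes(burst_length=8, bus_width_bits=64)
ddr5 = burst_bytes(burst_length=16, bus_width_bits=32)
print(ddr4, ddr5)  # 64 64
```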

Generational overview (representative JEDEC data rates)

- DDR (DDR1): ~200–400 MT/s per pin

- DDR2: ~400–1066 MT/s

- DDR3: ~800–2133 MT/s

- DDR4: ~1600–3200 MT/s (higher vendor grades exist)

- DDR5: 4800 MT/s baseline, scaling to 6400 MT/s and beyond as official grades advance (enthusiast profiles exceed this)

Actual platform bandwidth depends on channel count, ranks, controller timings, and thermals.
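As a rough guide, theoretical peak bandwidth is the data rate multiplied by the bytes moved per transfer and the number of channels. The sketch below assumes a 64-bit data path per channel and decimal gigabytes; the function and the chosen speed grades are illustrative, and real sustained bandwidth will be lower for the reasons listed above.

```python
def peak_bandwidth_gbs(data_rate_mts: float, bus_width_bits: int = 64,
                       channels: int = 1) -> float:
    """Theoretical peak in GB/s: transfers per second x bytes per transfer x channels."""
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


# One representative speed grade per generation, per 64-bit channel.
for name, rate in [("DDR-400", 400), ("DDR2-800", 800), ("DDR3-1600", 1600),
                   ("DDR4-3200", 3200), ("DDR5-4800", 4800)]:
    print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s per channel")
```

For example, DDR4-3200 works out to 25.6 GB/s per channel, so a dual-channel desktop peaks at 51.2 GB/s on paper.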

Modules & form factors

- UDIMM / SO-DIMM: Unbuffered modules for desktops and laptops.

- RDIMM (Registered DIMM): Adds a register/clock driver to buffer command/address for server stability at high capacities.

- LRDIMM: “Load-reduced” DIMMs that further buffer data lines to support very large memory configurations.

- 3DS (3-D stacked) DIMMs: TSV-stacked dies for higher capacity per module.

- MRDIMM (DDR5): Multiplexed-rank modules that push capacity/bandwidth envelopes in servers.

Channel architecture (client vs. server)

- Channel width: Nominally 64-bit data per channel (client); 72-bit with DIMM-level ECC (server/workstation).

- DDR5 sub-channels: Each DDR5 DIMM (UDIMM or RDIMM) is split into two independent 32-bit sub-channels (wider with ECC), each with its own command/address bus, improving parallelism and efficiency at high data rates.

- Ranks: Single/dual/quad ranks interleave operations and hide DRAM core latencies.

Reliability, availability & serviceability (RAS)

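- On-die ECC (ODECC): Since DDR5, each DRAM die corrects small internal faults to maintain yield/reliability at high speed (not a substitute for system ECC).

- System-level ECC: Servers/workstations add check bits to each channel (72-bit on DDR4 and earlier, built from x8 or x4 devices) to detect and correct bit errors, classically correcting single-bit and detecting double-bit errors; features like patrol scrubbing and chipkill-style resilience (with the right DIMMs/controllers) extend this to multi-bit and whole-device faults and boost availability.

- Link protection: Command/address parity and write-data CRC reduce mis-transfer risk on high-speed buses; data bus inversion (DBI), where supported, lowers switching noise and I/O power rather than detecting errors.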
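The idea behind command/address parity can be sketched in a few lines. This is a minimal illustration of even parity only, not the exact bit coverage or signalling defined by any DDR generation; the 20-bit pattern and the function names are hypothetical.

```python
def even_parity_bit(bits: int) -> int:
    """Return the bit that makes the total count of 1s (payload + parity) even."""
    return bin(bits).count("1") & 1


def parity_ok(bits: int, parity: int) -> bool:
    """Receiver-side check: recompute parity and compare with the received bit."""
    return even_parity_bit(bits) == parity


word = 0b1011_0010_1100_0101_1010   # hypothetical command/address pattern
p = even_parity_bit(word)

assert parity_ok(word, p)                  # clean transfer passes
assert not parity_ok(word ^ (1 << 7), p)   # a single flipped bit is detected
assert parity_ok(word ^ 0b11, p)           # two flips cancel out and go unnoticed
```

Parity only detects an odd number of flipped bits and cannot say which bit is wrong, which is why it complements CRC and ECC rather than replacing them.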

Power & management

- Voltage evolution: I/O/core voltages have dropped each generation (e.g., 1.2 V DDR4 → ~1.1 V DDR5), cutting dynamic power.

- PMIC on DIMM (DDR5): Power management IC moves from motherboard to the module, improving local regulation and transient response.

- Low-power states: Self-refresh, power-down, and fine-grained clock gating; server platforms coordinate refresh, throttling, and thermal headroom at rack scale.
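To see why a small voltage drop matters, recall that CMOS switching power scales, to first order, with capacitance × voltage² × frequency. The sketch below applies that approximation to the DDR4 and DDR5 voltages quoted above. It assumes equal capacitance and activity, ignores static and termination power, and is a back-of-envelope estimate rather than a module power model.

```python
def relative_dynamic_power(v_new: float, v_old: float,
                           freq_ratio: float = 1.0) -> float:
    """First-order estimate: P is proportional to V^2 * f (same capacitance and activity)."""
    return (v_new / v_old) ** 2 * freq_ratio


same_speed = relative_dynamic_power(1.1, 1.2)
print(f"1.2 V -> 1.1 V at equal frequency: {same_speed:.2f}x")  # 0.84x

# Assumed example: DDR5-4800 versus DDR4-3200, i.e. 1.5x the toggle rate.
faster = relative_dynamic_power(1.1, 1.2, freq_ratio=4800 / 3200)
print(f"1.2 V -> 1.1 V at 1.5x frequency: {faster:.2f}x")       # 1.26x
```

Under these assumptions the lower voltage saves about 16% at equal speed, but the saving is outweighed once the data rate rises by half, which is one reason local regulation and low-power states matter.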

Training, calibration & SI considerations

- Link training: Read/write leveling and per-lane timing alignment compensate for trace length/skew.

- Equalization: DDR5 adds receiver equalization (e.g., decision feedback equalization, DFE, in the DRAM data receivers, alongside equalization in the controller) to sustain signal integrity at higher speeds.

- Topology: Fly-by routing for command/address and careful termination help large multi-slot servers keep margins at speed.
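Per-lane timing alignment is often described as sweeping a delay setting, recording which values pass a test pattern, and parking in the middle of the passing window. The sketch below illustrates that idea only. The `passes` callback, the 64-tap range, and the simulated lane are all hypothetical, and real controllers use vendor-specific training sequences.

```python
from typing import Callable, Optional, Tuple


def find_eye_center(passes: Callable[[int], bool],
                    num_taps: int) -> Optional[Tuple[int, int, int]]:
    """Sweep taps 0..num_taps-1 and return (start, end, center) of the widest
    contiguous passing window, or None if no tap passes."""
    best = None
    run_start = None
    # One extra iteration acts as a failing sentinel that closes a trailing run.
    for tap in range(num_taps + 1):
        ok = tap < num_taps and passes(tap)
        if ok and run_start is None:
            run_start = tap
        elif not ok and run_start is not None:
            run_end = tap - 1
            if best is None or (run_end - run_start) > (best[1] - best[0]):
                best = (run_start, run_end)
            run_start = None
    if best is None:
        return None
    return best[0], best[1], (best[0] + best[1]) // 2


def simulated_lane(tap: int) -> bool:
    """Hypothetical lane whose data eye is open for delay taps 23 through 41."""
    return 23 <= tap <= 41


print(find_eye_center(simulated_lane, num_taps=64))  # (23, 41, 32)
```

Choosing the center of the widest window, rather than the first passing tap, leaves margin on both sides for voltage and temperature drift.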

Performance in practice

- Throughput: Peak bandwidth scales with data rate × channel width × channel count; extra ranks do not raise the peak but help keep the bus busy. DDR5’s sub-channels improve utilization under mixed workloads.

- Latency: Raw cycle counts (tCAS, tRCD, tRP) often rise with each generation, while absolute latency in nanoseconds stays roughly flat; under load, effective latency is masked by higher concurrency (more banks/ranks) and smarter memory scheduling.

- Queueing & QoS: Server controllers arbitrate between latency-sensitive and bulk traffic (databases, analytics, AI inference), using row-buffer locality and bank-group awareness to meet SLAs.
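The latency point is easier to judge after converting cycle counts to nanoseconds. Because the clock runs at half the data rate, one cycle lasts 2000 / (MT/s) ns. The speed grades and CAS latencies below are illustrative examples chosen for this sketch, not a statement of what any particular module ships with.

```python
def cas_latency_ns(cl_cycles: int, data_rate_mts: float) -> float:
    """Convert CAS latency from clock cycles to nanoseconds.

    The clock frequency in MHz is half the data rate in MT/s,
    so the cycle time in ns is 2000 / data_rate_mts.
    """
    return cl_cycles * 2000.0 / data_rate_mts


examples = [("DDR3-1600 CL11", 11, 1600),
            ("DDR4-3200 CL22", 22, 3200),
            ("DDR5-4800 CL40", 40, 4800)]

for label, cl, rate in examples:
    print(f"{label}: {cas_latency_ns(cl, rate):.2f} ns")
```

In these examples the cycle count nearly quadruples from DDR3 to DDR5 while the time in nanoseconds moves only from 13.75 to about 16.67.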

BIOS/firmware & profiles

- SPD (Serial Presence Detect): Module-resident configuration data the platform reads to set timings/voltages.

- Vendor profiles: Consumer boards expose XMP/EXPO profiles that push beyond JEDEC baselines; stability depends on silicon quality, board layout, and cooling.

- Server policy: Firmware enforces validated JEDEC bins for uptime; features like Address-Based Page Policy, refresh management, and patrol scrubbing are common.

DDR vs. LPDDR (one-screen comparison)

| Aspect | DDR | LPDDR |
|---|---|---|
| Typical systems | Desktops, servers, workstations | Mobile, ultra-thin PCs, embedded/edge |
| Physical | Socketed DIMMs, long traces | Soldered BGA/PoP, very short routes |
| Channel | 64-bit (72-bit ECC) | Narrow multi-channel (e.g., dual ×16; LPDDR6: 2 × 12-bit sub-channels + metadata) |
| Power | Higher I/O power, platform cooling | Low VDDQ, deep idle states, DVFS |
| Capacity | Very high (multi-DIMM) | Moderate (soldered) |
| Serviceability | Field-replaceable | Not user-replaceable |

(Use DDR for capacity/serviceability; LPDDR for energy/area-constrained designs.)

Common pitfalls & best practices

- Mixed modules fall to the slowest/common timings; avoid combining unmatched DIMMs.

- Populate channels symmetrically (and the recommended slots) for dual/quad-channel bandwidth.

- Thermals matter: High-speed DDR5 can throttle; airflow and heatsinks on dense servers are not optional.

- Validate ECC/RAS paths: Enable scrubbing, monitor correctable error rates, and investigate hotspots early.
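The first pitfall, mixed modules falling back to common settings, can be pictured as taking the lowest supported data rate and the longest minimum timing across all installed modules. The sketch below is a deliberately simplified model: the `ModuleSpd` fields, module names, and timing values are hypothetical, and real firmware also weighs supported CAS latency lists, voltages, and platform limits.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ModuleSpd:
    """Hypothetical, simplified view of one module's SPD data."""
    name: str
    data_rate_mts: int   # highest data rate the module supports
    tcl_ns: float        # minimum CAS latency in ns
    trcd_ns: float       # minimum RAS-to-CAS delay in ns
    trp_ns: float        # minimum row precharge time in ns


def common_settings(modules: List[ModuleSpd]) -> Dict[str, float]:
    """Pick settings every module can meet: slowest rate, longest timings."""
    if not modules:
        raise ValueError("no modules installed")
    return {
        "data_rate_mts": min(m.data_rate_mts for m in modules),
        "tcl_ns": max(m.tcl_ns for m in modules),
        "trcd_ns": max(m.trcd_ns for m in modules),
        "trp_ns": max(m.trp_ns for m in modules),
    }


fast = ModuleSpd("module A (hypothetical)", 5600, 16.43, 16.43, 16.43)
slow = ModuleSpd("module B (hypothetical)", 4800, 16.67, 16.67, 16.67)
print(common_settings([fast, slow]))
```

In this model the faster module gains nothing from being paired with the slower one, which is the practical argument for matched kits.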

Outlook

DDR will continue scaling by raising I/O data rates, increasing bank-level concurrency, and refining module power/regulation. As AI and memory-bound analytics spread across data centers, expect tighter controller QoS, more sophisticated RAS, and new module innovations (higher-capacity stacks, advanced buffering) to keep capacity and bandwidth growing while maintaining reliability at scale.
