Quadric, Inc.

Overview

Quadric is a semiconductor and software company that designs and develops general-purpose neural processing units (NPUs) for edge computing. The company focuses on enabling real-time, AI-powered decision-making directly at the edge, using a processor architecture that runs classical DSP, control, and machine learning workloads on a single unified platform.

Founded

2016

Headquarters

Burlingame, California, United States

Founders

- Veerbhan Kheterpal
- Nigel Drego
- Chetan Khona

Technology

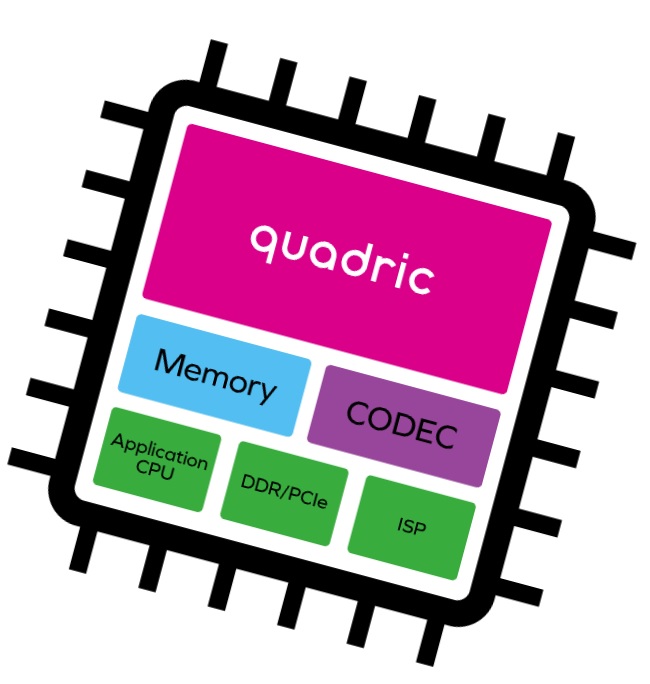

Quadric’s core innovation is the Quadric Processor, based on its proprietary GPNPU™ (General Purpose Neural Processing Unit) architecture. Unlike traditional accelerators, which handle only the specific AI workloads offloaded to them, Quadric’s architecture runs both AI inference and conventional algorithmic tasks natively on the same chip, reducing latency, power consumption, and system complexity.

Quadric’s software stack allows developers to use standard C++ and familiar tools to build applications, eliminating the need for specialized AI programming models.

Key Products

- Quadric Dev Kit – Integrated hardware and software development platform.
- q16 Processor – A 16-core GPNPU offering balanced AI and traditional compute performance.
- Quadric SDK – A complete toolchain for writing and optimizing code for Quadric chips.

Target Applications

- Smart cameras
- Robotics
- Industrial automation
- Drones
- Smart sensors
- Automotive edge systems

Business Model

Quadric licenses its processor IP to semiconductor and systems companies and also offers software tools to integrate and accelerate AI workloads at the edge.

Investors & Funding

Quadric has raised funding from major investors, including:

- Denso
- Leawood Venture Capital
- Pear VC
- Uncork Capital
- Dell Technologies Capital

Mission

To empower edge devices with the ability to think and act in real time by delivering a high-performance, low-power AI compute platform that unifies classic and machine learning workloads.

Website

https://www.quadric.io
