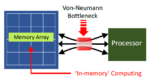

The term "von Neumann bottleneck" denotes the inefficiency of an architecture that separates computational resources from data memory. Transferring data from memory to the CPU contributes substantially to latency and dissipates a significant percentage of the overall energy associated with …

Tag: in memory computing

All-Digital In-Memory Computing

Research into in-memory computing architectures is extremely active. At the recent International Solid-State Circuits Conference (ISSCC 2021), multiple technical sessions were dedicated to novel memory array technologies that support the computational demands of machine learning algorithms.

The inefficiencies associated…

In-Memory Computing for Low-Power Neural Network Inference

“AI is the new electricity,” according to Andrew Ng, Professor at Stanford University. The potential applications for machine learning classification are vast. Yet current ML inference techniques are limited by the high power dissipation associated with traditional architectures. The figure below highlights the …