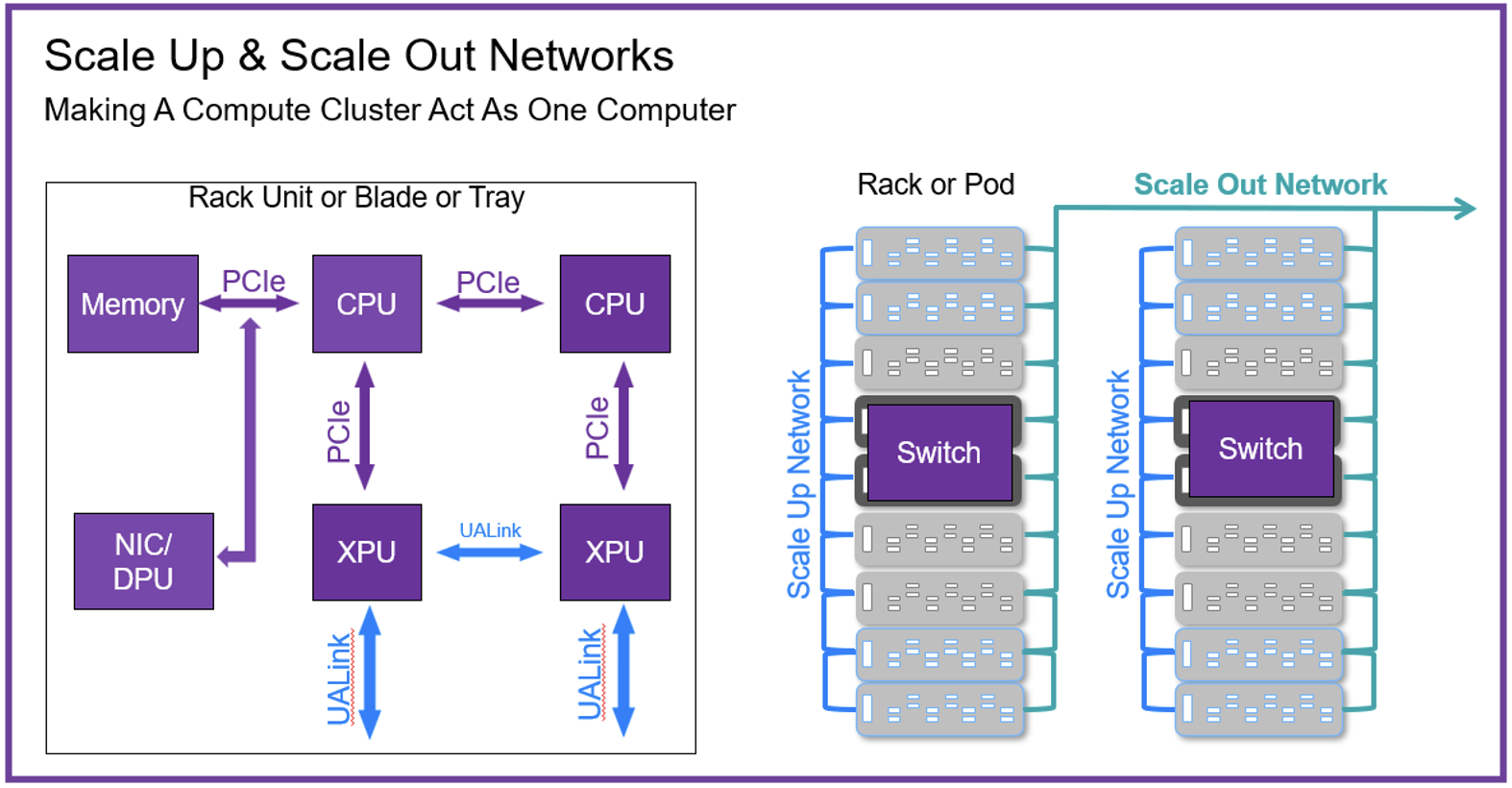

The evolution of hyperscale data center infrastructure to support the processing of trillions of parameters for large language models has created some rather substantial design challenges. These massive processing facilities must scale to hundreds of thousands of accelerators with highly efficient and fast connections. How to deliver fast memory access to all those GPUs and how to efficiently move large amounts of information across the data center are two significant problems to be solved.

It turns out Synopsys has been working on critical enabling IP technology in this area. The company has announced multiple industry-first solutions. Synopsys also co-hosted a very informative discussion on the emerging UALink standard with several industry heavyweights. Links are coming, but first let’s take a big picture view of how Synopsys enables AI advances with UALink.

About the Standards

A bit of background is useful.

Ultra Accelerator Link, or UALink, is an open specification for die-to-die and serial bus communication between AI accelerators. It was co-developed by Alibaba, AMD, Apple, Astera Labs, AWS, Cisco, Google, Hewlett Packard Enterprise, Intel, Meta, Microsoft and Synopsys. The UALink Consortium was officially incorporated in 2024 as an electronics industry consortium to promote and advance UALink. This is another very large ecosystem effort, with over 100 companies participating.

An Informative Discussion

Getting four industry experts together to discuss technical topics can be challenging. Coordinating the discussion so lots of very useful information is conveyed in 25 minutes is truly noteworthy. That’s exactly what Synopsys achieved with AMD, Astera Labs and Moor Insights in a recent discussion posted on LinkedIn.

Entitled Designing the Future of AI Infrastructure with UALink, the discussion features four impressive technologists:

Matthew Kimball, VP & Principal Analyst, Moor Insights & Strategy

Kurtis Bowman, Director of Architecture and Strategy, AMD

Chris Petersen, Fellow of Technology and Ecosystems, Astera Labs

Priyank Shukla, Director of Product Management, Synopsys Inc

Chris Petersen led the discussion. A link is coming so you can watch the entire event. Here are some of the items that are discussed:

- UALink aims to improve bandwidth, latency, and density. How does advanced SerDes fit into these scenarios?

- How do customers perceive the UALink consortium? What are the benefits of this collaboration?

- This is a very broad-based consortium, including many competing entities. How does such a system work? What are the dynamics of the group?

- How does this work optimize memory access for massively parallel systems?

- What are the key design challenges for advanced data centers and how does UALink address them?

- What is the interaction between UALink, OCP, IEEE and UEC?

- What will the timing be for product introductions based on this work?

A couple of comments from Priyank Shukla of Synopsys will give you a flavor of the discussion. Priyank discussed how the IEEE has expanded its communication specification to facilitate the very high performance, low power, and low latency required for advanced AI systems. He explained that high-speed SerDes technology is the foundation of the critical physical-layer link for all communication between any two chips in a system. This includes GPUs, accelerators, and switches, for example.

He went on to describe the massive ecosystem effort across many companies to implement the required standards and supporting technology. He pointed out that, “it takes a whole village to train a machine learning model.” Priyank explained that designing these systems is so challenging, there is no one right answer. He stated that, “when over 100 companies come together, the best idea survives.”

There are many more topics covered. A link is coming.

Industry First UALink IP Solution for Scale-Up Networks

Synopsys announced industry-first IP solutions for both Ultra Ethernet and UALink implementations back in December of 2024. The announcement details advances for both scale-up and scale-out strategies.

The Synopsys UALink IP solution facilitates scaling up AI clusters to 1,024 accelerators. Designers can achieve 200 Gbps per lane and unlock memory sharing across accelerators. The solution also speeds verification with built-in protocol checks.

The Synopsys Ultra Ethernet IP solution facilitates scaling out of networks to 1 million nodes. There is access to silicon-proven Synopsys 224G PHY IP to achieve up to 1.6 Tbps of bandwidth. Designers can now unlock ultra-low latency for real-time processing. You can learn more about the Synopsys 224G PHY IP on SemiWiki here, including characterization data and how the IP fits in these new standards.
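To put the per-lane and aggregate figures above side by side, here is a minimal arithmetic sketch. It assumes an aggregate link is simply a bundle of identical 200 Gbps lanes and ignores encoding and FEC overhead. The announcement as summarized here does not say how the 1.6 Tbps figure is composed, so the lane counts and function names below are illustrative assumptions, not Synopsys specifications.

```python
# Illustrative arithmetic only. The lane counts are assumptions made for
# this sketch; they are not taken from the Synopsys announcement.
LANE_RATE_GBPS = 200  # per-lane rate cited in the article


def aggregate_bandwidth_tbps(lanes: int, lane_rate_gbps: int = LANE_RATE_GBPS) -> float:
    """Raw aggregate bandwidth in Tbps for a link built from `lanes` lanes."""
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    return lanes * lane_rate_gbps / 1000


def lanes_needed(target_gbps: int, lane_rate_gbps: int = LANE_RATE_GBPS) -> int:
    """Smallest lane count whose raw rate meets `target_gbps` (ceiling division)."""
    if target_gbps <= 0:
        raise ValueError("target_gbps must be positive")
    return -(-target_gbps // lane_rate_gbps)


if __name__ == "__main__":
    # Under these assumptions, a 1.6 Tbps (1600 Gbps) link maps to 8 lanes.
    print(lanes_needed(1600))
    print(aggregate_bandwidth_tbps(8))
```

Under these assumptions, eight 200 Gbps lanes account for the 1.6 Tbps figure. Real links carry protocol and error-correction overhead, so usable throughput will be somewhat lower than the raw number.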

There is a lot of useful information in the announcement, including how Synopsys’ industry-leading communication IP has enabled more than 5,000 successful customer tapeouts.

Synopsys is also helping to scale the HPC and AI accelerator ecosystem through collaboration with industry leaders, including AMD, Astera Labs, Juniper Networks, Tenstorrent, and XConn.

There are informative quotes from senior executives at Juniper Networks, AMD, Astera Labs, Tenstorrent, and XConn, as well as Synopsys. The chairperson of the board at the UALink Consortium also weighs in.

To Learn More

You can read the complete product announcement, Synopsys Announces Industry’s First Ultra Ethernet and UALink IP Solutions to Connect Massive AI Accelerator Clusters here. The LinkedIn panel entitled Designing the Future of AI Infrastructure with UALink can be viewed here. You can learn more about the Synopsys Ultra Ethernet IP solution here. And you can learn more about the Synopsys UALink IP solution here. And that’s how Synopsys enables AI advances with UALink.
