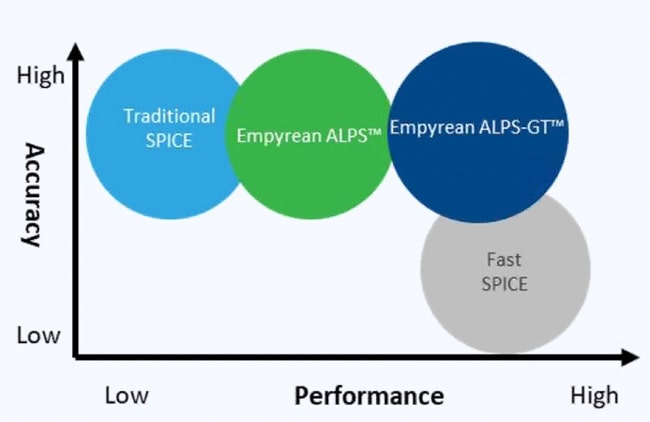

I first used a SPICE circuit simulator in 1978 while at Intel, and I have followed that market ever since. At DAC in 2012 I first heard of a Chinese EDA company called IC Scape with a SPICE circuit simulator called Aeolus, so I blogged about it. Fast forward to 2019, when I heard from Ravi Ravikumar, a former co-worker from my Viewlogic days in the 1990s. He was excited to talk about Empyrean, because they have the tools from IC Scape plus they’ve added even more EDA tools. You’ve probably heard of classic SPICE and the more modern Fast SPICE simulators, so the following chart shows where the Empyrean simulators fit into that space:

The ALPS acronym stands for: Accurate, Large capacity, Parallel, SPICE. The GT suffix stands for: GPU-Turbo. Other companies and universities have attempted to use a GPU to speed up the matrix math used in SPICE, but none of them had commercial success until now. So, why would you employ a GPU for SPICE circuit simulation?

- 10X faster simulation than running on 16 CPU cores

- 7nm IC designs have massive SPICE requirements

Empyrean ALPS-GT runs on the Nvidia Tesla V100, and it simply outperforms a SPICE simulator running on an Intel Xeon 8180, for example. OK, that sounds attractive, but as an engineer I want to know how it operates under the hood. The matrix math library that ships with CUDA could be used for SPICE, but it’s not fast enough or efficient enough, so the team at Empyrean wrote their own matrix solver to run on the Nvidia GPU.
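To see why the matrix solver matters so much, here is a minimal sketch of the kind of linear solve that sits at the core of a SPICE-style simulator: nodal analysis of a two-resistor circuit in plain Python. The circuit, the component values, and the helper names (`stamp_resistor`, `solve`) are my own illustration and have nothing to do with Empyrean's solver. A production simulator builds a far larger sparse system and solves it over and over, which is the work a GPU can speed up.

```python
def stamp_resistor(G, a, b, ohms):
    """Add a resistor between nodes a and b (None means ground) to matrix G."""
    g = 1.0 / ohms
    for n in (a, b):
        if n is not None:
            G[n][n] += g
    if a is not None and b is not None:
        G[a][b] -= g
        G[b][a] -= g


def solve(G, rhs):
    """Solve G x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    A = [row[:] + [rhs[i]] for i, row in enumerate(G)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= f * A[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(A[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (A[r][n] - s) / A[r][r]
    return x


# Hypothetical circuit: 1 mA current source into node 0,
# 1 kOhm from node 0 to node 1, 1 kOhm from node 1 to ground.
G = [[0.0, 0.0], [0.0, 0.0]]
stamp_resistor(G, 0, 1, 1000.0)
stamp_resistor(G, 1, None, 1000.0)
I = [1e-3, 0.0]  # current injected at each node, in amps

v = solve(G, I)
print(v)  # node voltages, roughly 2 V at node 0 and 1 V at node 1
```

The dense elimination here is only for readability; real circuit matrices are sparse, so commercial solvers use sparse factorization instead.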

Comparing the Nvidia Tesla V100 versus an Intel Xeon 8180:

- Cores

  - Xeon 8180: 28 physical cores

  - Tesla V100: 5,276 FP64 cores

- Double precision floating-point performance (FP64)

  - Xeon 8180: 2 TFLOPS

  - Tesla V100: 7 TFLOPS
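As a quick back-of-the-envelope check, the peak FP64 numbers above can be turned into a ratio. This is only a sketch using the two figures quoted in the list; the variable names are mine, and peak throughput says nothing about how well a sparse SPICE matrix actually maps onto either chip.

```python
# Peak FP64 throughput figures quoted in the list above, in TFLOPS.
xeon_8180_tflops = 2.0
tesla_v100_tflops = 7.0

peak_ratio = tesla_v100_tflops / xeon_8180_tflops
print(f"Peak FP64 ratio, one V100 vs one Xeon 8180: {peak_ratio:.1f}X")
# prints "Peak FP64 ratio, one V100 vs one Xeon 8180: 3.5X"
```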

In benchmarks of the Nvidia CUDA matrix solver versus the Smart Matrix Solver from Empyrean, the new approach is 5.8X faster on average. Comparing a CPU with 16 cores versus 8 GPUs, the ALPS-GT simulator was 6.4X to 16.9X faster. That’s how you make your GPU-based SPICE simulator faster than the competition, and the results are impressive.

DAC Designer Track

I hope that you can make it to DAC56 in Las Vegas, but if not I wanted to at least tell you something about a paper authored by Empyrean (An-Jui Shey, Jason Xing) and Nvidia (Eric Hsu, Ting Ku):

Qualib AI: Machine Learning Based Arc Prediction of Timing Model for AMS Design

For AMS timing models you can define timing arcs manually, which is time-consuming and error-prone, or you can apply machine learning to find more timing arcs. Empyrean has a commercial tool called Qualib that is a library/IP QA and debugging platform, and the paper shows how they have extended it for Nvidia to make Qualib AI. Qualib AI is not commercially available yet, so stay tuned.

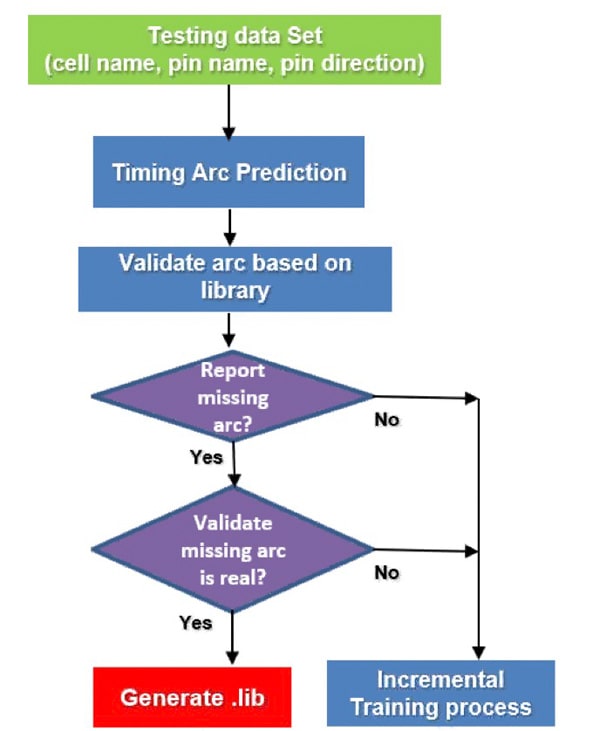

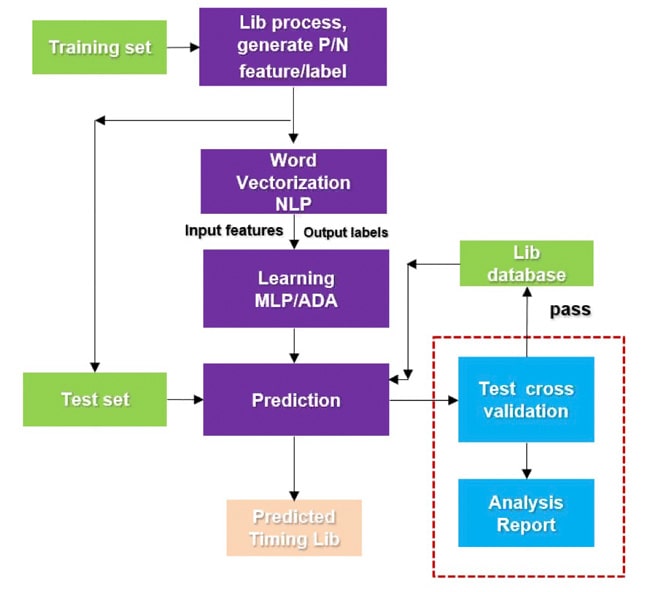

Let me show you a couple of diagrams, the first one is an application flow for timing arc prediction, the second one is timing arc modeling and prediction algorithm:

What Nvidia found in using Qualib AI is that the tool:

- Found a significant number of real, missing arcs in live projects

- Reduced the number of false positive missing arcs

- Ran fast enough to allow interactive prediction

- Used incremental training for better accuracy

- Predicted timing types
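The first two findings come down to comparing the arcs a model predicts against the arcs already defined in the library. Here is a toy sketch of that comparison, with made-up pin names and a hypothetical `find_missing_arcs` helper. It is not the Qualib AI algorithm, just an illustration of what a "missing arc" report means.

```python
def find_missing_arcs(predicted, defined):
    """Return predicted arcs that the library does not define, sorted."""
    return sorted(set(predicted) - set(defined))


# Hypothetical (from_pin, to_pin) timing arcs for one AMS block.
defined_arcs = [("CLK", "Q"), ("EN", "Q")]
predicted_arcs = [("CLK", "Q"), ("EN", "Q"), ("RST", "Q")]

missing = find_missing_arcs(predicted_arcs, defined_arcs)
print(missing)  # prints [('RST', 'Q')]
```

Each reported arc is only a candidate. An engineer still has to confirm whether it is a real missing arc or a false positive.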

Summary

The team at Empyrean has been busy creating a GPU-based SPICE circuit simulator and applying AI to complex IP blocks in order to shorten development times at Nvidia. They are certainly on my radar, because if they can satisfy the leading-edge demands at Nvidia then they are definitely a contender for new EDA tool evaluations. Why use old technology when something newer comes along?
