Why benchmark competition enables breakthrough innovation in AI

Two years ago I inadvertently started a war. And I couldn’t be happier with the outcome. While wars fought on the battlefield of human misery and death never have winners, this “war” is different. It is a competition of human ingenuity to create new technologies that will benefit mankind by accelerating the innovation behind AI solutions for an already massive and still-growing worldwide user base.

It all started two years ago, as I was preparing for the launch of “PowerAI”, a new type of software distribution that would take AI research, in the form of Neural Network training, out of research labs across the world and into the hands of everyday users looking to create AI-powered solutions. PowerAI was designed to be the “Red Hat” of AI: a software distro that curates a rapidly improving technology on the cusp of greatness to provide stability, continuity and support. To mark the launch, I wanted to create a memorable milestone.

Under its original name, “PowerMLDL”, the distribution had been making great strides with an agile release cycle for early adopters, one that would make users forget that IBM, the oldest, most solid (and staid) computer brand, was behind it. I had coined the abbreviation MLDL (for Machine Learning and Deep Learning) because “AI” still carried the bitter taste of defeat it had acquired in the 80s, when “Artificial Intelligence” was overhyped and ultimately failed to deliver on its promise. Then as now, the most promising technology was “Neural Networks”, a computing structure loosely modeled after the human brain as a set of “neurons”: simple, highly interconnected computing units.

But much had changed since Neural Networks, and with them the term “AI”, had fallen into disrepute: advances in computer hardware and the need to process the veritable data deluge created by an ever more connected world answered both the “how” and the “why” of rebooting “Artificial Intelligence”. And, marketing opined, the name AI was quickly rehabilitating itself along with the technical field.

As we prepared to launch our PowerAI distro for Artificial Intelligence together with IBM’s first accelerated AI server, “Minsky” (or “IBM Power 822LC for HPC” in corporate branding), we needed to capture the imagination with what these new products made possible. Their value lay in the integration, ease of use and speed to solution they brought to the technical innovations that researchers from many companies had contributed to open source AI frameworks.

But how to express this benefit? One day, as we were working with our applied AI research colleagues at the IBM TJ Watson Research Center to improve training times for a range of Watson applications, an idea took hold: with the new server and software, we were on the cusp of training a network to recognize images from the most complete image database available to date (“ImageNet”) in less than two hours.

What if AlexNet, a landmark neural network of the day and winner of a prestigious image recognition contest, could be trained in under an hour? We set out to break the one-hour barrier. With a focus on innovation and optimization, we were training AlexNet in under an hour by late summer, and in October 2016 we published our results in a blog post titled “Deep Learning Training in under an hour”.

And so the AI benchmark war started. At IBM, we had started a project to federate many Minsky servers to train a newer, more complex network in even less time, using a technology we called DDL (“Distributed Deep Learning”). But before we could publish our results, Facebook published their own blog about “Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour”.

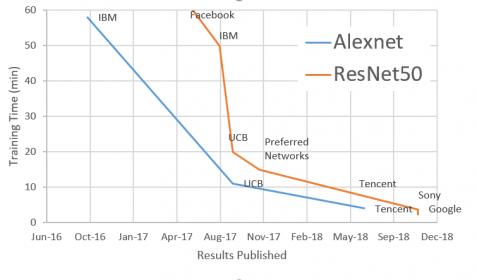

The Facebook team demonstrated many great ideas, but were not able to release their code to the public. Thus, in another first, PowerAI made Distributed Deep Learning available to a broad user community, with even better training performance. Since then, some of the most prestigious names in technology and research have joined the growing list of AI training record holders: UC Berkeley, Tencent, Sony, and Google, to name but a few.

Over the course of this competition, the training time for AlexNet went from 6 hours in 2015 to 4 minutes, and for the much more complex ResNet50 (another winner of the image classification competition) from 29 hours to 2.2 minutes. These advances in training speed matter because they enable AI developers and data scientists to create better solutions. Despite many advances, AI and neural networks are by no means a mature technology, not least because a “constructive theory” of neural networks (that is, a method for constructing a network to accomplish a well-defined task, such as recognizing cancerous tissue in a biopsy sample) has eluded practitioners. Defining a new network for a task is therefore as much an act of artisanship as of engineering. It requires sketches, tests, and trial and error, and making all of that practical requires speed in testing new ideas by training new networks.
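To put these training times in perspective, the speedups can be worked out by converting both times to minutes and taking the ratio. The short sketch below uses only the figures quoted above; the helper name `speedup` is purely illustrative.

```python
# Speedup factors implied by the training times quoted in the text.
# Both times are expressed in minutes so the ratio is unit-free.
def speedup(before_min: float, after_min: float) -> float:
    """Return how many times faster the new training time is."""
    return before_min / after_min

alexnet = speedup(6 * 60, 4)       # AlexNet: 6 hours -> 4 minutes
resnet50 = speedup(29 * 60, 2.2)   # ResNet50: 29 hours -> 2.2 minutes

print(f"AlexNet:  {alexnet:.0f}x faster")   # AlexNet:  90x faster
print(f"ResNet50: {resnet50:.0f}x faster")  # ResNet50: 791x faster
```

In other words, AlexNet training became about 90 times faster and ResNet50 training nearly 800 times faster.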

And so this AI benchmark “war” is a war without victims, but many victors – everybody who is benefiting from advances in AI technology, from enhanced face recognition to secure data on your phone, to better recommendations for movies, books and restaurants, to enhanced security and better data management, and assistive technologies for road safety and medical diagnosis.

As AI evolves, many of us have recognized that, while image recognition has served this competition for creativity and innovation well until now, we need to be more inclusive of the wide range of AI application domains as we push forward the performance and capabilities of AI solutions. With the recent “MLPerf” initiative to create an industry-wide AI performance benchmark standard, we are creating a better competition that will propel human ingenuity even further and expand the boundaries of what is possible with AI.
