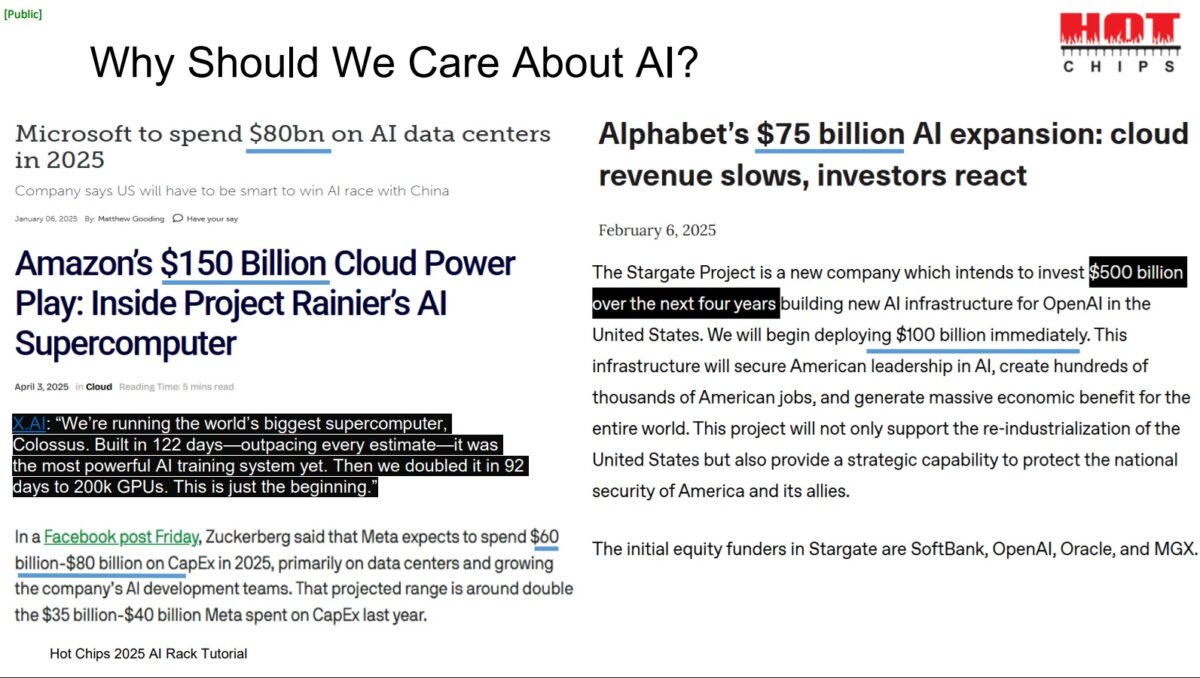

The evolution of AI workloads has profoundly influenced hardware design, shifting from single-GPU systems to massive rack-based clusters optimized for parallelism and efficiency. As outlined in this Hot Chips 2025 tutorial, this transformation began with foundational models like AlexNet in 2012 and continues with today’s multi-trillion-parameter behemoths.

Early AI breakthroughs relied on affordable compute. AlexNet, trained on ImageNet using two NVIDIA GTX 580 GPUs, demonstrated the potential of convolutional neural networks for image classification. Priced at $499, the GTX 580 offered 1.58 TFLOPs of FP32 performance and 192 GB/s of memory bandwidth. Training took five to six days in FP32, whose range and precision, compared with formats like Int8, were necessary for the model to converge.
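To put those GTX 580 figures in perspective, the short sketch below computes an upper bound on the compute available for AlexNet's training run and the card's machine balance (peak FLOPs per byte of memory traffic). It assumes both GPUs ran at 100% of peak FP32 throughput for the entire five to six days, which real training never achieves, so treat the result as a ceiling. The function names are illustrative.

```python
SECONDS_PER_DAY = 86_400

def peak_flop_budget(num_gpus: int, peak_tflops: float, days: float) -> float:
    """Upper bound on FLOPs: every GPU runs at 100% of peak for the whole run."""
    return num_gpus * peak_tflops * 1e12 * days * SECONDS_PER_DAY

def machine_balance(peak_tflops: float, mem_bw_gbs: float) -> float:
    """FLOPs the GPU can execute per byte fetched from DRAM, at peak."""
    return (peak_tflops * 1e12) / (mem_bw_gbs * 1e9)

if __name__ == "__main__":
    # Figures quoted above: two GTX 580s, 1.58 TFLOPs FP32, 192 GB/s, 5-6 days
    low = peak_flop_budget(2, 1.58, 5)
    high = peak_flop_budget(2, 1.58, 6)
    print(f"peak compute budget: {low:.2e} to {high:.2e} FLOPs")
    print(f"GTX 580 balance: {machine_balance(1.58, 192):.1f} FLOPs/byte")
```

The budget lands in the low 10^18 FLOPs range, and a balance of roughly 8 FLOPs per byte means any layer doing less arithmetic per byte loaded was limited by memory bandwidth rather than compute.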

By 2015, ResNet-50 training relied on data parallelism, distributing work across multiple GPUs in systems such as Facebook's “Big Sur,” which held eight K80 cards. Data parallelism gives each GPU a full copy of the model and a different shard of every batch; after each step, an AllReduce operation combines the gradients so that every copy applies the same weight update. Because each GPU processes only its share of the data, training runs faster.
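The tutorial does not say which AllReduce algorithm these systems used. A common choice is the ring algorithm, sketched below as a single-process simulation in which each list stands in for one GPU's gradient buffer. `ring_all_reduce` and `average_gradients` are hypothetical helper names, not a library API.

```python
from typing import List

def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a ring AllReduce (sum) across len(buffers) ranks.

    Each buffer is split into n equal chunks. A reduce-scatter phase (n-1 steps)
    leaves each rank owning one fully summed chunk; an all-gather phase
    (n-1 steps) circulates those chunks so every rank ends with the full sum.
    """
    n = len(buffers)
    size = len(buffers[0])
    assert size % n == 0, "buffer length must be divisible by the number of ranks"
    c = size // n
    bufs = [list(b) for b in buffers]

    def chunk(i: int) -> range:
        return range(i * c, (i + 1) * c)

    # Reduce-scatter: rank r sends chunk (r - step) to rank r+1, which adds it in.
    for step in range(n - 1):
        msgs = [(r, (r - step) % n) for r in range(n)]
        payloads = [[bufs[r][k] for k in chunk(idx)] for r, idx in msgs]
        for (r, idx), data in zip(msgs, payloads):
            dst = (r + 1) % n
            for k, v in zip(chunk(idx), data):
                bufs[dst][k] += v

    # Rank r now owns the fully reduced chunk (r + 1) % n.
    # All-gather: forward completed chunks around the ring, overwriting stale copies.
    for step in range(n - 1):
        msgs = [(r, (r + 1 - step) % n) for r in range(n)]
        payloads = [[bufs[r][k] for k in chunk(idx)] for r, idx in msgs]
        for (r, idx), data in zip(msgs, payloads):
            dst = (r + 1) % n
            for k, v in zip(chunk(idx), data):
                bufs[dst][k] = v
    return bufs

def average_gradients(local_grads: List[List[float]]) -> List[List[float]]:
    """Data-parallel sync: sum gradients with AllReduce, then divide by replica count."""
    n = len(local_grads)
    return [[g / n for g in buf] for buf in ring_all_reduce(local_grads)]

if __name__ == "__main__":
    # Made-up gradients for a 4-parameter model on 4 replicas (one data shard each)
    grads = [[1.0, 2.0, 3.0, 4.0], [0.0, 2.0, 1.0, 0.0],
             [3.0, 2.0, 1.0, 0.0], [0.0, 2.0, 3.0, 4.0]]
    synced = average_gradients(grads)
    print(synced[0])  # → [1.0, 2.0, 2.0, 2.0]; every replica holds the same values
```

The ring variant is popular because each GPU only exchanges data with its neighbor, and the volume each GPU sends stays close to twice the buffer size no matter how many GPUs join the ring.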

The 2018 BERT model marked the arrival of the transformer era in natural language processing. Google's TPUv2 supported BF16, which matches FP32's range at lower precision, enabling faster training. TPUv2 chips were connected in 256-chip pods using a 2D torus topology, and BERT-large was trained in four days on 64 of these ASICs. Reduced-precision formats, BF16 on TPUs and FP16 on the Tensor Cores of NVIDIA's V100 GPUs, balanced accuracy against throughput.
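The range-versus-precision trade-off between BF16 and FP16 shows up when the same two values are rounded into each format. The sketch below uses only Python's `struct` module; it assumes finite inputs within FP32 range and emulates BF16 by rounding away the low 16 bits of an FP32 bit pattern. `to_bf16` and `to_fp16` are illustrative helpers.

```python
import math
import struct

def to_bf16(x: float) -> float:
    """Round a finite float to the nearest bfloat16 value (ties to even).

    BF16 keeps FP32's sign bit and 8 exponent bits but only the top 7 of its
    23 mantissa bits, so it spans FP32's range with far less precision.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    lsb = (bits >> 16) & 1  # lowest mantissa bit that BF16 keeps
    bits = (bits + 0x7FFF + lsb) & 0xFFFF0000  # round, then drop the low 16 bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def to_fp16(x: float) -> float:
    """Round to IEEE FP16 (5 exponent bits, 10 mantissa bits); overflow becomes inf."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)

if __name__ == "__main__":
    big = 3.0e38              # near FP32's maximum (~3.4e38)
    fine = 1.0 + 2.0 ** -10   # needs 10 mantissa bits to represent
    print("bf16:", to_bf16(big), to_bf16(fine))  # keeps the range, loses the fine step
    print("fp16:", to_fp16(big), to_fp16(fine))  # overflows, keeps the fine step
```

A value near 3e38 survives in BF16 but overflows FP16 (whose largest finite value is 65504), while 1 + 2**-10 survives in FP16 but collapses to 1.0 in BF16.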

As models grew, new forms of parallelism emerged. Pipeline parallelism, introduced in GPipe (2018), divides a model's layers across nodes, but it is limited by inter-node bandwidth (e.g., PCIe or Ethernet at ~8 GB/s, far below intra-node links). NVIDIA's DGX-1 V100 systems illustrate the intra-node side, connecting eight GPUs via NVLink for efficient communication.
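GPipe also splits each batch into micro-batches so that pipeline stages can overlap their work. The sketch below builds a forward-only GPipe-style schedule and measures the idle "bubble" while the pipeline fills and drains. It assumes every stage takes exactly one time step per micro-batch; the helper names are hypothetical.

```python
from typing import List, Optional

def gpipe_forward_schedule(num_stages: int, num_microbatches: int) -> List[List[Optional[int]]]:
    """grid[t][s] = micro-batch that pipeline stage s works on at time step t (None = idle).

    Assumes every stage takes one time step per micro-batch (forward pass only).
    """
    steps = num_microbatches + num_stages - 1
    grid = []
    for t in range(steps):
        row = []
        for s in range(num_stages):
            mb = t - s  # micro-batch mb reaches stage s after s steps of latency
            row.append(mb if 0 <= mb < num_microbatches else None)
        grid.append(row)
    return grid

def bubble_fraction(num_stages: int, num_microbatches: int) -> float:
    """Share of stage-time slots left idle while the pipeline fills and drains."""
    grid = gpipe_forward_schedule(num_stages, num_microbatches)
    idle = sum(cell is None for row in grid for cell in row)
    return idle / (len(grid) * num_stages)

if __name__ == "__main__":
    for row in gpipe_forward_schedule(num_stages=4, num_microbatches=4):
        print(" ".join("." if mb is None else str(mb) for mb in row))
    for m in (1, 4, 16, 64):
        # matches the closed form (p - 1) / (m + p - 1) with p = 4 stages
        print(f"4 stages, {m:>2} micro-batches: {bubble_fraction(4, m):.0%} idle")
```

With a single micro-batch, three of four stages sit idle at any moment; raising the micro-batch count shrinks the bubble toward zero, which is what makes splitting layers across slower inter-node links workable.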

Tensor slicing (a form of model parallelism), introduced in Megatron-LM (2019), splits individual matrix operations across the GPUs within a node, offering near-linear scaling. For instance, the 2021 Megatron-Turing NLG (530B parameters) used 280 A100 GPUs per replica: 8-way tensor slicing within each node, pipeline parallelism across 35 nodes, and data parallelism across replicas. Training still took three months, highlighting the need for larger clusters.
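A minimal sketch of tensor slicing, assuming a column-wise split of one weight matrix in the style of Megatron-LM's column-parallel layers, with plain Python lists standing in for GPUs. The `rank_to_coords` helper shows one hypothetical way to number the 280 GPUs of a replica into the 8-way tensor by 35-stage pipeline layout described above; real frameworks may order ranks differently.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain row-by-column matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def split_columns(w: Matrix, parts: int) -> List[Matrix]:
    """Slice a weight matrix into `parts` equal column blocks, one per GPU."""
    cols = len(w[0])
    assert cols % parts == 0, "column count must divide evenly"
    step = cols // parts
    return [[row[p * step:(p + 1) * step] for row in w] for p in range(parts)]

def column_parallel_matmul(x: Matrix, w: Matrix, tp: int) -> Matrix:
    """Each of `tp` simulated GPUs multiplies x by its own column slice of w;
    concatenating the partial outputs (an all-gather) rebuilds x @ w."""
    partials = [matmul(x, shard) for shard in split_columns(w, tp)]
    return [sum((p[i] for p in partials), []) for i in range(len(x))]

def rank_to_coords(rank: int, tp: int, pp: int) -> Tuple[int, int, int]:
    """Map a global GPU rank to (data-parallel, pipeline, tensor) coordinates.

    Hypothetical layout: the tp ranks of one node are consecutive, then the
    pp pipeline stages, then whole replicas.
    """
    return rank // (tp * pp), (rank // tp) % pp, rank % tp

if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]
    w = [[1.0, 0.0, 2.0, -1.0], [0.5, 1.0, 0.0, 3.0]]
    assert column_parallel_matmul(x, w, tp=2) == matmul(x, w)
    # Layout from the text: 8-way tensor x 35-way pipeline = 280 GPUs per replica
    print(rank_to_coords(279, tp=8, pp=35))  # → (0, 34, 7): last GPU of replica 0
    print(rank_to_coords(280, tp=8, pp=35))  # → (1, 0, 0): first GPU of replica 1
```

Keeping the tensor-sliced ranks adjacent reflects why the text confines tensor slicing to a node: its partial results must be exchanged on every layer, so they belong on the fastest links.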

Mixture of Experts (MoE), scaled up in GShard (2020), introduced sparsity. Whereas a dense model uses all of its parameters for every token, an MoE layer activates only a subset of experts per token, reducing computation and power. DeepSeekMoE (2024) pushed this further with fine-grained experts that each fit on a single GPU, minimizing overlap between experts and inter-GPU communication. Keeping an expert's work on one GPU pays off because on-package memory bandwidth (e.g., 3.35 TB/s of HBM on an H100) far exceeds scale-up links such as NVLink (900 GB/s aggregate).
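The routing step that makes MoE sparse can be sketched as a top-k gate: score every expert, run only the k best, and mix their outputs by gate weight. This is a generic top-k gate with renormalized weights, assumed for illustration; GShard and DeepSeekMoE each use their own gating details, and the toy experts below are hypothetical stand-ins for feed-forward networks.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]

def softmax(logits: Vector) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(logits: Vector, k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    probs = softmax(logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_forward(token: Vector, experts: List[Callable[[Vector], Vector]],
                router_logits: Vector, k: int) -> Vector:
    """Only the k selected experts run; the rest do no work for this token."""
    out = [0.0] * len(token)
    for idx, gate in top_k_route(router_logits, k):
        y = experts[idx](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out

if __name__ == "__main__":
    # Four toy "experts" that just scale their input (hypothetical stand-ins for FFNs)
    experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
    logits = [0.1, 2.0, -1.0, 1.5]  # made-up router scores for one token
    print(top_k_route(logits, k=2))  # experts 1 and 3 are selected
    print(moe_forward([1.0, -1.0], experts, logits, k=2))
    print(f"share of expert compute executed: {2 / len(experts):.0%}")
```

With many experts and a small k, the executed share of expert compute per token becomes a small fraction of the total, which is the saving the text describes.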

Hardware adapted accordingly. Scale-up domains such as NVLink or AMD's Infinity Fabric provide high-bandwidth, low-latency connectivity within a rack. NVIDIA's GB200 NVL72 places 72 GPUs in a single rack-scale NVLink domain with 1.8 TB/s of bidirectional bandwidth per GPU, using copper for short reaches and optics for longer ones. AMD's MI300X supports 32 GPUs per rack in Azure deployments.
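Why scale-up bandwidth matters can be estimated with the standard bandwidth-only cost of a ring AllReduce: each GPU moves 2(n-1)/n times the payload, so time is that volume divided by link bandwidth. The sketch ignores latency, congestion, and protocol overhead. The 1 GB payload and both bandwidth figures are placeholders: the 900 GB/s NVLink number quoted earlier is an aggregate figure used only for comparison, and the 50 GB/s scale-out rate assumes a 400 Gb/s NIC.

```python
def ring_all_reduce_seconds(num_gpus: int, payload_bytes: float,
                            link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate for a ring AllReduce.

    Reduce-scatter and all-gather each push (n-1)/n of the payload through
    every GPU's link, so per-GPU traffic is 2*(n-1)/n * payload.
    """
    n = num_gpus
    return 2 * (n - 1) / n * payload_bytes / link_bytes_per_s

if __name__ == "__main__":
    gradients = 1e9  # hypothetical 1 GB of gradients per step
    for name, bw in [("scale-up link, 900 GB/s (placeholder)", 900e9),
                     ("scale-out NIC, 50 GB/s (assumed 400 Gb/s)", 50e9)]:
        t = ring_all_reduce_seconds(72, gradients, bw)
        print(f"{name}: {t * 1e3:.2f} ms")
```

Under these assumptions the same 72-GPU AllReduce takes roughly 18 times longer over the scale-out link, which is why rack-scale designs try to keep the most communication-heavy parallelism inside the scale-up domain.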

Scaling clusters poses several challenges: higher SERDES rates shorten the reach of copper links, driving the adoption of optics; power density (e.g., racks drawing 100 kW or more) mandates liquid cooling; and bandwidth that doubles every two years strains the surrounding infrastructure. A common taxonomy distinguishes scale-up networks (e.g., UALink) from scale-out networks (Ethernet/InfiniBand) and front-end networks.
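The compounding effect of bandwidth doubling every two years is easy to underestimate. This sketch assumes a clean two-year doubling period and uses the 1.8 TB/s per-GPU NVLink figure quoted above purely as a starting point; the helper names are hypothetical.

```python
import math

def projected_bandwidth(today: float, years: float, doubling_years: float = 2.0) -> float:
    """Bandwidth after `years` if it doubles every `doubling_years`."""
    return today * 2 ** (years / doubling_years)

def years_to_reach(today: float, target: float, doubling_years: float = 2.0) -> float:
    """Years until bandwidth grows from `today` to `target` at the same doubling rate."""
    return doubling_years * math.log2(target / today)

if __name__ == "__main__":
    base = 1.8  # TB/s per GPU, used only as a starting point
    for years in (2, 4, 6, 8):
        print(f"+{years} years: {projected_bandwidth(base, years):.1f} TB/s")
    print(f"years to 10x: {years_to_reach(base, 10 * base):.1f}")
```

At this rate bandwidth grows tenfold in under seven years, while copper reach shrinks as per-lane SERDES rates rise to deliver it.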

Future trends include microscaling formats such as FP8 and FP4 for efficiency, while computational throughput keeps soaring: from 1 PFLOP of FP16 in a 2018 DGX V100 system to a projected 100 PFLOPs of FP4 in a single GPU package by 2027. Racks have become the building blocks of AI infrastructure, from Google's TPU tori to Meta's data centers, underscoring the shift toward continent-scale systems.
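Microscaling formats get their efficiency by letting a block of low-precision elements share one power-of-two scale. The sketch below is modeled loosely on MXFP4 from the OCP Microscaling (MX) specification, assuming 32-element blocks, FP4 E2M1 elements, and a shared exponent of floor(log2(max |x|)) minus the element format's maximum exponent. It omits the spec's scale-range limits, special values, and exact rounding rules (ties here go to the smaller magnitude), so it is an illustration, not a conforming implementation.

```python
import math
from typing import List, Tuple

# Magnitudes representable by FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit)
FP4_E2M1 = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
EMAX_ELEM = 2     # exponent of E2M1's largest value (6 = 1.5 * 2**2)
BLOCK_SIZE = 32   # elements sharing one scale

def quantize_fp4(x: float) -> float:
    """Round to the nearest E2M1 magnitude (ties go to the smaller one); saturate at 6."""
    mag = min(FP4_E2M1, key=lambda q: abs(abs(x) - q))
    return math.copysign(mag, x)

def mx_quantize_block(block: List[float]) -> Tuple[int, List[float]]:
    """Return (shared power-of-two exponent, FP4 elements) for one block."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 0, [0.0] * len(block)
    _, e = math.frexp(amax)            # amax = m * 2**e with 0.5 <= m < 1
    shared_exp = (e - 1) - EMAX_ELEM   # floor(log2(amax)) - emax_elem
    scale = 2.0 ** shared_exp
    return shared_exp, [quantize_fp4(v / scale) for v in block]

def mx_dequantize_block(shared_exp: int, elems: List[float]) -> List[float]:
    scale = 2.0 ** shared_exp
    return [q * scale for q in elems]

def mx_quantize(values: List[float]) -> List[Tuple[int, List[float]]]:
    """Split a flattened tensor into blocks and quantize each independently."""
    return [mx_quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]

if __name__ == "__main__":
    exp, elems = mx_quantize_block([1000.0, 1.0, -300.0, 40.0])
    print(exp, elems)                       # one shared exponent, four 4-bit values
    print(mx_dequantize_block(exp, elems))  # large values survive, tiny ones flush to 0
```

Storing one 8-bit scale per 32 elements adds only a quarter bit per value, yet lets each block adapt its range; the cost, visible in the example, is that values far smaller than the block's maximum are lost.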

Bottom line: AI's progression from narrow tasks toward AGI/ASI demands hardware innovations in parallelism, precision, and interconnects that balance compute density against energy constraints. As clusters grow to hundreds of thousands of GPUs, racks are being optimized for these workloads, enabling unprecedented scale.
