Given my SpyGlass background I always keep an eye out for new ideas that might be emerging in static and formal verification. Whatever can be covered through stimulus-free analysis reduces time that needn’t be wasted in dynamic analysis, also adding certainty to coverage across that range. Still, advances don’t come easily. Static analyses are infamously noisy, and formal methods equally infamously demand significant verifier expertise. Apps have made formal proofs more accessible within a bounded set of use-cases, and formal and/or AI complementing static methods have made static analyses more tractable in linting and domain crossing applications. What can be done to broaden the useful scope of static/formal? AI, no surprise, is playing an increasing role in answering that question.

Unifying Stimulus-Free Verification

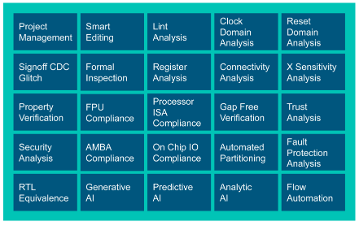

The promise of both static and formal methods is that whatever they can prove, they can do so quite generally without need to define stimulus; whereas dynamic verification can prove correctness only within the bounds of the use-cases you test. The tradeoff is that useful static and formal proofs are quite restricted in application. Siemens aims to loosen those limits through a new product release they call Questa One SFV (stimulus-free verification), integrating static and formal analysis together with AI.

One objective here is to simplify license management — one license pool to draw on whether you are running static checks, formal checks, or AI-operations, which helps simplify access and improve license utilization. This freedom applies equally to parallel usage, again maximizing utilization no matter what licenses are needed for a particular task.

Questa One Stimulus Free Verification

Importantly under this umbrella, as one example, they can combine natural language understanding to parse a user-requested check, and from that generate and verify a property assertion. Similarly, linting checks can be filtered by formal methods to suppress much of the noise common to such static analysis, leaving only truly suspicious cases for DV/design resolution.

In this paper Siemens hints at applying this suite of capabilities to a more challenging requirement. While Questa One already supports a formal VIP library covering a range of needs, including AMBA compliance, it is not uncommon for design houses to adapt protocol standards to their own needs. For example, they might have a modified version of AHB for which they need their own compliance properties suite. Siemens asserts that Questa One SFV could handle this through generative methods, starting from the custom spec as input. They also suggest that partitioning large formal proofs can be automated through SFV, adding scalability to formal methods.

What else might be possible?

Continuing the theme of mixing AI, static, and formal, bug triage of various flavors is already in active deployment, especially around CDC and RDC analysis where I know that unsupervised learning techniques are used for grouping a large number of error reports into likely common root causes.

Another interesting application came up in a recent DAC panel for detecting naming-convention compliance violations (remember those from the Reuse Methodology Manual?). Turns out that adhering to naming conventions is becoming more important in support of equivalence checking and also, I would guess, in AI learning and inference support across design and (formal) testbench file structures. Yet, no surprise, designers aren’t always conscientious in following these rules (my design simulates and synthesizes correctly, who needs naming rules?).

More generally, I am holding out hope that AI can help scale the app concepts to something more flexible. The AHB application mentioned earlier is one example.

Interesting ideas. You can read the white paper HERE.

Share this post via:

Comments

There are no comments yet.

You must register or log in to view/post comments.