Badru Agarwala is the CEO and Co-Founder of Rise Design Automation (RDA), an EDA startup with a mission to drive a fundamental shift-left in semiconductor design, verification, and implementation by raising abstraction beyond RTL. Badru has over 40 years of experience in EDA. Before founding RDA, he served as General Manager of the CSD division at Mentor Graphics (now Siemens EDA), where he spearheaded advancements in high-level design, verification, and power optimization. He has also founded multiple successful startups, including Axiom Design Automation (acquired by Mentor Graphics in 2012), Silicon Automation Systems (now Sasken Communication), and Frontline Design Automation (acquired by Avant! Corporation). His expertise and visionary leadership continue to drive innovation, shaping the future of semiconductor design and verification.

Can you tell us about your company and its mission?

Rise Design Automation (RDA) is a new EDA startup that recently emerged from stealth mode. Our mission is to drive a shift-left in semiconductor design, verification, and implementation by raising abstraction beyond RTL. This approach delivers orders-of-magnitude improvements in productivity while bridging the gap between system and silicon.

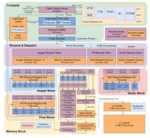

RDA’s innovative tool suite is designed for scalable adoption, enabling multi-level design and verification with high performance. By combining higher abstraction with implementation insight, we help semiconductor teams accelerate development while meeting the demands of modern chip design.

What problems are you solving, and what’s keeping your customers up at night?

The semiconductor industry is experiencing unprecedented growth, driven by increasing intelligence, greater connectivity, and rising design complexity across all market segments. This increased intelligence results in more software and a growing reliance on specialized silicon accelerators to meet compute demands. However, these accelerators must be tailored to specific market requirements, making a one-size-fits-all approach impractical.

Delivering architectural innovation in silicon with predictable resources, costs, and schedules is critical for customers to achieve differentiation. However, traditional RTL design flows are time-consuming and often require multiple iterations due to late-stage issues that emerge during design and implementation. Compounded by a shortage of experienced hardware designers, these inefficiencies extend development cycles and introduce risks that can impact power, performance, and area (PPA) targets. A systematic and scalable approach is essential.

Rise addresses this challenge by enabling early architectural exploration with implementation correlation. This provides early visibility into silicon estimates and trends before committing to an architecture. By integrating front-end exploration with implementation-aware insights, teams can confidently develop innovative, verifiable, and implementable architectures at their target technology node.

By operating at a higher level of abstraction, Rise delivers a 30x to 1000x increase in verification performance over traditional RTL. This speedup enables software and hardware co-simulation very early in the design cycle, allowing teams to verify both functionality and performance in a cohesive environment. By bridging the gap between software and silicon, Rise ensures that architectural decisions are validated holistically, reducing risk and accelerating overall system development.

How have the recent “speed of light” advances in AI and generative AI enhanced what RDA delivers to customers?

The semiconductor industry has increasingly adopted AI across a range of applications to enhance tool and user productivity, efficiency, and results. However, the use of generative AI for RTL code has been met with caution, partly due to concerns about training data, verification, and reliability. As design complexity increases, AI-driven hardware design is becoming essential for reducing costs, improving accessibility, and accelerating innovation while ensuring high-quality, verifiable results.

Rise addresses this challenge by raising design abstraction and applying AI with domain expertise to transform natural-language intent into human-readable, modifiable, and verifiable high-level design code. This reduces manual effort, shortens learning curves, and minimizes late-stage surprises. Leveraging lightweight, deployable models built on pretrained large language models, Rise delivers a shift-left approach at higher abstractions in SystemVerilog, C++, and SystemC.

Rather than relying solely on AI for Quality of Results (QoR), Rise augments human expertise with a high-level toolchain for design, verification, debug, and architectural exploration. This synergy between AI and the Rise toolchain delivers optimized RTL code and unlocks significant productivity gains, while ensuring that AI-driven EDA remains practical, verifiable, and implementable.

Additionally, our AI capabilities continue to evolve. We recently integrated AI into our Design Space Exploration (DSE), enabling intelligent, goal-driven optimizations guided by analysis feedback instead of manual parameter sweeps. This AI-enhanced approach shifts architectural exploration from random searching to quickly converging on the right architecture.

There have been many attempts and tools to raise abstraction in semiconductor design. Why is Rise different?

The EDA market has seen many efforts to raise design abstraction, yet higher-level design tools often lag in innovation. Rise takes a fundamentally different approach, building a new architecture from the ground up with several key advantages.

First, Rise is language- and abstraction-agnostic, supporting the most suitable language for each task. While existing solutions rely on C++ and SystemC, Rise adds untimed and loosely timed SystemVerilog support, easing adoption for engineers in established workflows. Its open and flexible architecture also allows seamless integration of new languages and tools. This native multi-level, multi-language support enables designers to analyze and debug at the same abstraction level in which they design.

Second, Rise delivers 10x–100x faster synthesis and exploration while maintaining predictable, high-quality RTL. This is critical for true architectural exploration, allowing teams to make informed decisions with immediate feedback.

Third, verification is deeply integrated into the Rise architecture. Automated verification methods, reusable components, and adaptable interfaces enable seamless connection with industry best practices, facilitating complete block-to-system verification with minimal effort.

Finally, we have developed a unique generative AI solution for high-level design that is tightly integrated into the Rise toolchain, as discussed in detail earlier.

Which types of markets and users do you target?

We focus on companies developing new designs, new IP blocks, and new silicon. Our solution is particularly valuable for teams engaged in architectural innovation and performance optimization, where early decisions significantly impact final silicon quality.

We see two types of users with Rise. The first consists of traditional RTL and production design teams, who are cautious about adopting new methodologies due to the high cost of failure. For these teams, maintaining high QoR, a short learning curve, and comprehensive verification alongside architectural exploration is essential. Support for SystemVerilog and the ability to plug in existing EDA tools help ease adoption and mitigate risk.

The second group includes researchers, architects, and HW/SW teams focused on early-stage exploration and software-hardware co-design. Rise tools serve this market by providing high-performance simulation and synthesis, enabling teams to efficiently explore trends and validate design choices. By integrating with high-speed, open-source implementation tools, our solution facilitates rapid iteration on architectural decisions, delivering key implementation insights and performance metrics for systems executing both hardware and software.

How do customers normally engage with your company?

We offer multiple ways for customers to engage with our products and team. The process typically begins with a discussion, presentation, or product demonstration, where we collaborate to determine the best next steps based on their needs.

To learn more, customers can visit our website rise-da.com, where we provide on-demand webinars, product videos, and additional resources. They can also contact us directly via email at info@rise-da.com.

For the latest updates, you can follow us on LinkedIn (RDA LinkedIn Page), and I personally welcome direct connections via LinkedIn (Badru Agarwala) or email at badru@rise-da.com.
