Keeping up with competitors in many computing applications today means incorporating AI capability. At the edge, where devices are smaller and consume less power, software-powered GPU architectures become impractical because of size, power-consumption, and cooling constraints. Purpose-built AI inference …


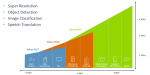

A No-Fudge ML Architecture for Arm

At TechCon I had a one-on-one with Steve Roddy, VP of product marketing in the Machine Learning (ML) Group at Arm. I wanted to learn more about Arm's ML direction because I had felt that, amid a sea of specialized ML architectures from everyone else, the company was somewhat fudging its position in this space. What I heard earlier was that…