An Open ISA, a Closed Mindset — Predictive Execution Charts a New Path

The RISC-V revolution was never just about open instruction sets. It was a rare opportunity to break free from the legacy assumptions embedded in every generation of CPU design. For decades, architectural decisions have been constrained by proprietary patents, locked toolchains, and a culture of cautious iteration. RISC-V, born at UC Berkeley, promised a clean-slate foundation: modular, extensible, and unencumbered. A fertile ground where bold new paradigms could thrive.

XiangShan, perhaps the most ambitious open-source RISC-V project to date, delivers impressively on that vision, at least at first glance. Developed by the Institute of Computing Technology (ICT) under the Chinese Academy of Sciences, XiangShan aggressively targets high performance. Its roadmap spans two microarchitecture generations, Nanhu and Kunminghu, covering mobile and server-class performance brackets. By integrating AI-focused vector enhancements (e.g., dot-product accelerators), high clock speeds, and deep pipelines, XiangShan has established itself as one of the most competitive open-source RISC-V cores in both versatility and throughput.

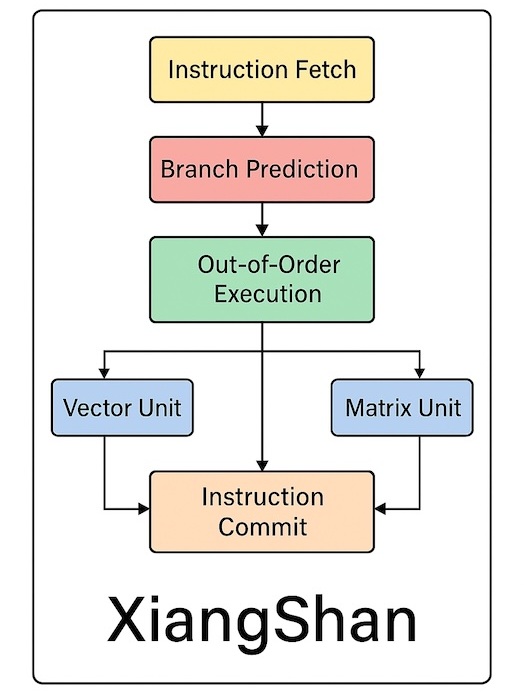

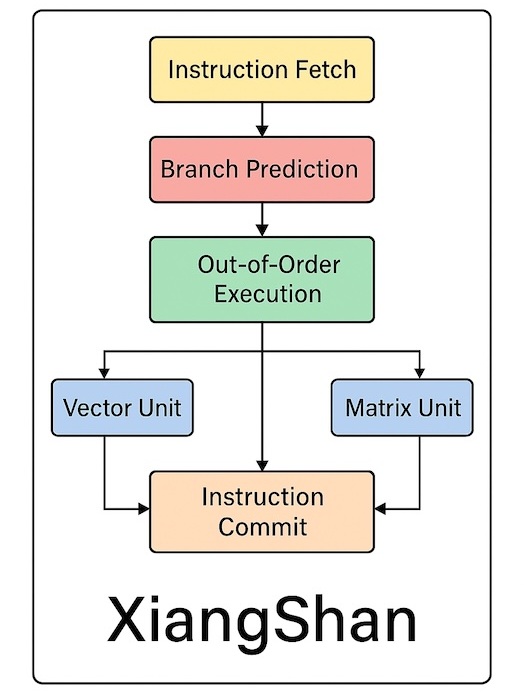

But XiangShan achieves this by doubling down on conventional wisdom. It fully embraces speculative, out-of-order microarchitecture—fetching, predicting, and reordering dynamically to maintain high instruction throughput. Rather than forging a new execution model, it meticulously refines well-known techniques familiar from x86 and ARM. Its design decisions reflect performance pragmatism: deliver ARM-class speed using proven playbooks, made interoperable with an open RISC-V framework.

What truly sets XiangShan apart is not its microarchitecture but its tooling. Built in Chisel, a hardware construction language embedded in Scala, XiangShan prioritizes modularity and rapid iteration. Its open-source development model includes integrated simulators, verification flows, testbenches, and performance monitoring. This makes XiangShan not just a core design, but a scalable research platform. The community can reproduce, modify, and build upon each generation—from Nanhu (targeting Cortex-A76 class) to Kunminghu (approaching Neoverse-class capability).

In this sense, XiangShan is a triumph of open hardware collaboration. But it also highlights a deeper inertia in architecture itself.

Speculative execution has dominated CPU design for decades. From Intel and AMD to ARM, Apple, IBM, and NVIDIA, the industry has invested heavily in branch prediction, out-of-order execution, rollback mechanisms, and speculative loads. Speculation once served as the fuel for ever-increasing IPC (Instructions Per Cycle). But it now carries mounting costs: energy waste, security vulnerabilities (Spectre, Meltdown, PACMAN), and ballooning verification complexity.

Since 2018, when Spectre and Meltdown exposed the architectural liabilities of speculative logic, vendors have shifted focus. Patents today emphasize speculative containment rather than acceleration. Techniques like ghost loads, delay-on-miss, and secure predictors aim to hide speculative side effects rather than boost performance. What was once a tool of speed has become a liability to mitigate. This shift marks a broader detour in CPU innovation, away from maximizing performance and toward patching vulnerabilities.

Most recent patents and innovations in this area now prioritize security mitigation over performance enhancement. While some performance-oriented developments still surface, particularly in cloud and distributed systems, the dominant trend has become defensive. Designs increasingly rely on rollback and verification mechanisms as safeguards. The speculative execution model, once synonymous with speed and efficiency, is now treated as something to be contained and verified.

This is why XiangShan’s adherence to speculation represents a fork in the road. RISC-V’s openness gave the team a chance to rethink not just the ISA, but the core execution model. What if they had walked away from speculation entirely?

Dataflow machines (Groq, Tenstorrent) and the failed promise of VLIW (e.g., Itanium, and later VLIW designs in niche DSP or embedded markets) also broke from speculative architecture. Simplex Micro’s predictive execution model does so with a crucial difference: it aims to preserve general-purpose programmability. Dataflow and VLIW each delivered valuable lessons in deterministic scheduling but struggled to generalize beyond narrow use cases. Each became a developmental cul-de-sac, offering point solutions rather than a unifying compute model.

Simplex’s family of foundational patents describes an architecture that eliminates speculative execution entirely. Dr. Thang Tran, whose earlier vector processor was designed into Meta’s original MTIA chip, has patented a suite of techniques centered on time-based dispatch, latency prediction, and deterministic replay. These techniques coordinate instruction execution by forecasting readiness using cycle counters and hardware scoreboards. In place of a program counter and branch prediction, the architecture uses deterministic, cycle-accurate scheduling, eliminating speculative hazards at the root.
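To make the idea of time-based dispatch concrete, here is a minimal Python sketch. It is not Simplex Micro’s actual design. It assumes fixed, known latencies per operation (the `LATENCY` table is invented for illustration), a single execution port, and unique destination registers so that no write hazards arise. It also omits the replay path a real design would need when a latency forecast turns out to be wrong. The names `Instr`, `schedule`, `ready_at`, and `busy` are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical fixed latencies in cycles; illustrative values only.
LATENCY = {"add": 1, "mul": 3, "load": 4}


@dataclass(frozen=True)
class Instr:
    op: str       # operation name, a key of LATENCY
    dst: str      # destination register (assumed unique per instruction)
    srcs: tuple   # source registers read by the instruction


def schedule(program):
    """Assign each instruction a fixed execution cycle at dispatch time.

    One instruction is dispatched per cycle, in program order. The
    scoreboard (ready_at) records the cycle at which each register's
    value is forecast to be available, so the execution cycle is known
    before the instruction runs and nothing is executed on a guess.
    """
    ready_at = {}  # scoreboard: register -> cycle its value becomes ready
    busy = set()   # cycles already reserved on the single execution port
    plan = []
    for dispatch_cycle, ins in enumerate(program):
        # Earliest cycle at which the instruction is dispatched and
        # every source operand is forecast to be ready.
        t = max([dispatch_cycle] + [ready_at.get(r, 0) for r in ins.srcs])
        # Skip over cycles that earlier instructions have reserved.
        while t in busy:
            t += 1
        busy.add(t)
        ready_at[ins.dst] = t + LATENCY[ins.op]
        plan.append((t, ins.op, ins.dst))
    return plan


program = [
    Instr("load", "r1", ()),
    Instr("load", "r2", ()),
    Instr("mul", "r3", ("r1", "r2")),
    Instr("add", "r4", ("r3", "r1")),
    Instr("add", "r5", ("r1", "r1")),
]

for cycle, op, dst in schedule(program):
    print(f"cycle {cycle}: {op} -> {dst}")
```

For this toy program, the final `add` is assigned cycle 4, ahead of the `mul` at cycle 5, because its operand is forecast to be ready first. The reordering comes from a schedule fixed at dispatch, so there is no mispredicted work to roll back.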

Developers can still write in C or Rust, compiling code through standard RISC-V toolchains with a modified backend scheduler. The complexity shifts to compilation, not programming. This preserves software portability while achieving hardware-level predictability.

XiangShan has proven what open-source hardware can achieve within the boundaries of established paradigms. Simplex Micro challenges us to redraw those boundaries. If the RISC-V movement is to fulfill its original promise—not just to open the ISA, but to reimagine what a CPU can be—then we must explore roads not taken.

And Predictive Execution may be the most compelling of them all: the fast lane no one has yet dared to take.
