NVIDIA reports Q2 EPS $2.70 ex-items

- Reports Q2:
  - Revenue $13.51B vs. FactSet $11.19B

- Q3 Guidance:
  - Revenue $16.00B +/- 2% vs. FactSet $12.59B
  - Non-GAAP gross margin 72.5% +/- 50bp vs. consensus 70.6%
  - Non-GAAP operating expenses $2.00B vs. consensus $2.16B
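To put the headline numbers in perspective, here is a minimal Python sketch that works out the size of the Q2 beat and the Q3 guidance ranges. It uses only the figures quoted above. The helper names `pct_diff` and `guidance_range` are hypothetical and exist just for this illustration. One basis point (bp) is 0.01 percentage point, so "+/- 50bp" means +/- 0.5 points of margin.

```python
def pct_diff(actual: float, estimate: float) -> float:
    """Percentage by which `actual` differs from `estimate` (hypothetical helper)."""
    return (actual / estimate - 1.0) * 100.0


def guidance_range(midpoint: float, pct: float) -> tuple[float, float]:
    """Low/high bounds for a 'midpoint +/- pct%' guide (hypothetical helper)."""
    return midpoint * (1 - pct / 100), midpoint * (1 + pct / 100)


# Q2 revenue vs. FactSet consensus, in $B
q2_beat = pct_diff(13.51, 11.19)

# Q3 revenue guide: $16.00B +/- 2%, compared with FactSet's $12.59B
q3_low, q3_high = guidance_range(16.00, 2.0)
q3_vs_street = pct_diff(16.00, 12.59)

# Q3 non-GAAP gross margin guide: 72.5% +/- 50bp (50bp = 0.50 percentage point)
gm_low, gm_high = 72.5 - 0.50, 72.5 + 0.50

# Q3 non-GAAP opex guide vs. consensus, in $B (negative = below consensus)
opex_vs_street = pct_diff(2.00, 2.16)

print(f"Q2 revenue beat: {q2_beat:.1f}%")
print(f"Q3 revenue range: ${q3_low:.2f}B to ${q3_high:.2f}B")
print(f"Q3 midpoint vs. FactSet: {q3_vs_street:+.1f}%")
print(f"Q3 gross margin range: {gm_low:.1f}% to {gm_high:.1f}%")
print(f"Q3 opex vs. consensus: {opex_vs_street:+.1f}%")
```

Running it shows Q2 revenue came in about 20.7% above FactSet, and the Q3 guide spans $15.68B to $16.32B, with the midpoint roughly 27.1% above the Street. Gross margin is guided to 72.0% to 73.0%, and opex is guided about 7.4% below consensus.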

Impressive. Do you remember the crypto mining bubble a while back? TSMC had a good run with it, but it was short-lived. The AI bubble should have a much longer life, but in my opinion it will still be a bubble of sorts and not sustainable.

VMware was mentioned 15 times in the call!

NVIDIA Corp. (NASDAQ:NVDA) Q2 2024 Earnings Conference Call, August 23, 2023, 5:00 PM ET. Company participants: Simona Jankowski - VP, IR; Colette Kress -...

seekingalpha.com

I had thought Nvidia was constrained by TSMC's CoWoS packaging capacity, but there was no mention of that. I'm guessing TSMC's CoWoS facilities are ramping faster than predicted. It will be interesting to hear TSMC's next call.

Here are Jensen's closing remarks, which are worth a read:

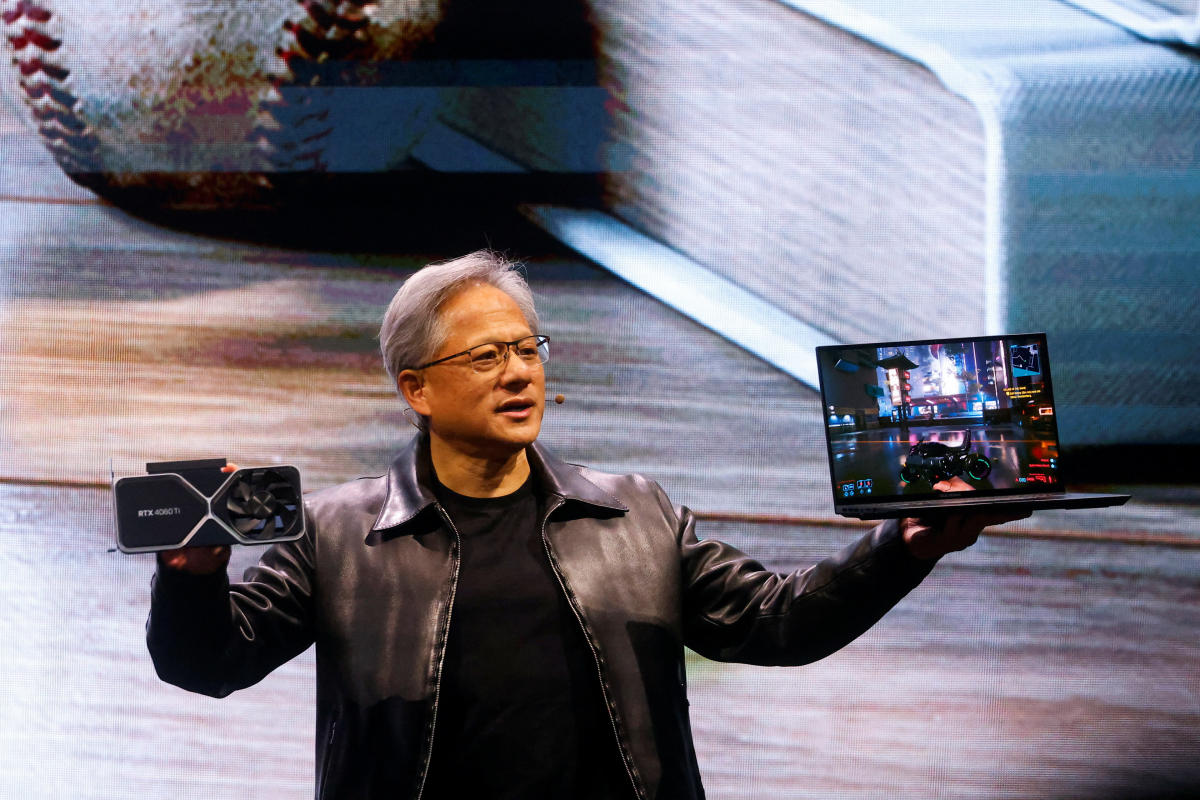

Jensen Huang

A new computing era has begun. The industry is simultaneously going through two platform transitions: accelerated computing and generative AI. Data centers are making a platform shift from general purpose to accelerated computing. The $1 trillion of global data centers will transition to accelerated computing to achieve an order of magnitude better performance, energy efficiency and cost. Accelerated computing enabled generative AI, which is now driving a platform shift in software and enabling new, never-before-possible applications. Together, accelerated computing and generative AI are driving a broad-based computer industry platform shift.

Our demand is tremendous. We are significantly expanding our production capacity. Supply will substantially increase for the rest of this year and next year. NVIDIA has been preparing for this for over two decades and has created a new computing platform that the world’s industries can build upon.

Here is what makes NVIDIA special. One, architecture. NVIDIA accelerates everything from data processing, training, inference, every AI model, real-time speech to computer vision, and giant recommenders to vector databases. The performance and versatility of our architecture translates to the lowest data center TCO and best energy efficiency.

Two, installed base. NVIDIA has hundreds of millions of CUDA-compatible GPUs worldwide. Developers need a large installed base to reach end users and grow their business. NVIDIA is the developer’s preferred platform. More developers create more applications that make NVIDIA more valuable for customers.

Three, reach. NVIDIA is in clouds, enterprise data centers, industrial edge, PCs, workstations, instruments and robotics. Each has fundamentally unique computing models and ecosystems. System suppliers like computer OEMs can confidently invest in NVIDIA because we offer significant market demand and reach.

Four, scale and velocity. NVIDIA has achieved significant scale and is 100% invested in accelerated computing and generative AI. Our ecosystem partners can trust that we have the expertise, focus and scale to deliver a strong road map and reach to help them grow.

We are accelerating because of the additive results of these capabilities. We’re upgrading and adding new products about every six months versus every two years to address the expanding universe of generative AI. While we increased the output of H100 for training and inference of large language models, we’re ramping up our new L40S universal GPU for cloud scale-out and enterprise servers. Spectrum-X, which consists of our Ethernet switch, BlueField-3 Super NIC and software, helps customers who want the best possible AI performance on Ethernet infrastructures. Customers are already working on next-generation accelerated computing and generative AI with our Grace Hopper.

We’re extending NVIDIA AI to the world’s enterprises that demand generative AI but with model privacy, security and sovereignty. Together with the world’s leading enterprise IT companies, Accenture, Adobe, Getty, Hugging Face, Snowflake, ServiceNow, VMware and WPP, and our enterprise system partners, Dell, HPE and Lenovo, we are bringing generative AI to the world’s enterprises. We’re building NVIDIA Omniverse to digitalize and enable the world’s multi-trillion dollar heavy industries to use generative AI to automate how they build and operate physical assets and achieve greater productivity. Generative AI starts in the cloud, but the most significant opportunities are in the world’s largest industries, where companies can realize trillions of dollars of productivity gains. It is an exciting time for NVIDIA, our customers, partners and the entire ecosystem to drive this generational shift in computing. We look forward to updating you on our progress next quarter.

finance.yahoo.com