Meet the mammoth AI supercomputer featuring 100,000 Nvidia H100 GPUs, poised for a significant performance boost.

Elon Musk, co-founder and CEO of Tesla Motors Inc. (right), with Jen-Hsun Huang, CEO of Nvidia Corp. (left), at the GPU Technology Conference in San Jose, California.

On October 4, 2024, the Greater Memphis Chamber approved the expansion of xAI’s AI training facility and supercomputer, Colossus. Launched just a month prior, Colossus quickly gained recognition as the world’s largest GPU supercomputer, initially featuring 100,000 Nvidia GPUs.

Led by Elon Musk, xAI aims to double Colossus’s capacity to 200,000 GPUs, including 50,000 advanced H200s. The expansion, approved just 121 days after the facility’s initial announcement on June 5, reflects the pace and scale typical of Musk’s ventures.
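The interval between the two dates is easy to check. The short sketch below uses only the dates given in the article (June 5 and October 4, 2024); the variable names are illustrative. It shows that the elapsed time is 121 days, and that a count of 122 results only when both endpoint dates are included.

```python
from datetime import date

announced = date(2024, 6, 5)    # initial announcement, per the article
approved = date(2024, 10, 4)    # expansion approval, per the article

# Days elapsed between the two dates (the end date is not counted).
elapsed_days = (approved - announced).days

# Counting both the announcement day and the approval day.
inclusive_days = elapsed_days + 1

print(f"Elapsed days:   {elapsed_days}")
print(f"Inclusive days: {inclusive_days}")
```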

Technological advantages of xAI’s Colossus

Built in Memphis, Tennessee, in just four months, Colossus uses Nvidia’s H100 GPUs, which offer up to nine times the speed of the previous A100 models; each H100 delivers up to 2,000 teraflops of peak performance. The initial setup integrates 100,000 H100 GPUs, with plans to add 50,000 more H100s alongside 50,000 new H200 GPUs. The combined array could theoretically achieve about 497.9 exaflops (497,900,000 teraflops), setting new benchmarks in supercomputing power. While this capacity would far exceed current supercomputing records on paper, real-world performance may be limited by system integration, communication overhead, power consumption, and cooling.
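The headline total can be unpacked with simple arithmetic. The sketch below uses only the figures quoted above; the variable names are illustrative, and the per-H200 figure is not stated in the article but backed out of the quoted total, so it should be read as an inference rather than a sourced number. All values are theoretical peaks, not measured throughput.

```python
# Figures quoted in the article (theoretical peak, not measured throughput).
H100_TFLOPS = 2_000                  # "up to 2,000 teraflops" per H100
H100_COUNT = 100_000 + 50_000        # initial H100s plus the planned extra H100s
H200_COUNT = 50_000                  # planned H200s
QUOTED_TOTAL_TFLOPS = 497_900_000    # "about 497.9 exaflops"

TFLOPS_PER_EXAFLOP = 1_000_000

# Contribution of the 150,000 H100s at the quoted per-GPU figure.
h100_total_tflops = H100_COUNT * H100_TFLOPS

# Whatever remains of the quoted total must be attributed to the H200s.
h200_share_tflops = QUOTED_TOTAL_TFLOPS - h100_total_tflops
implied_h200_tflops = h200_share_tflops / H200_COUNT

# For comparison: all 200,000 GPUs rated at the H100 figure.
uniform_total_tflops = (H100_COUNT + H200_COUNT) * H100_TFLOPS

print(f"H100 share:          {h100_total_tflops / TFLOPS_PER_EXAFLOP:.1f} exaflops")
print(f"Implied per-H200:    {implied_h200_tflops:,.0f} teraflops")
print(f"Uniform 2,000 total: {uniform_total_tflops / TFLOPS_PER_EXAFLOP:.1f} exaflops")
```

On these numbers the 150,000 H100s account for 300 exaflops, so the quoted total implies roughly 3,958 teraflops per H200, about twice the per-H100 figure. Rating all 200,000 GPUs at 2,000 teraflops would instead give 400 exaflops, which suggests the quoted total mixes two different peak ratings.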

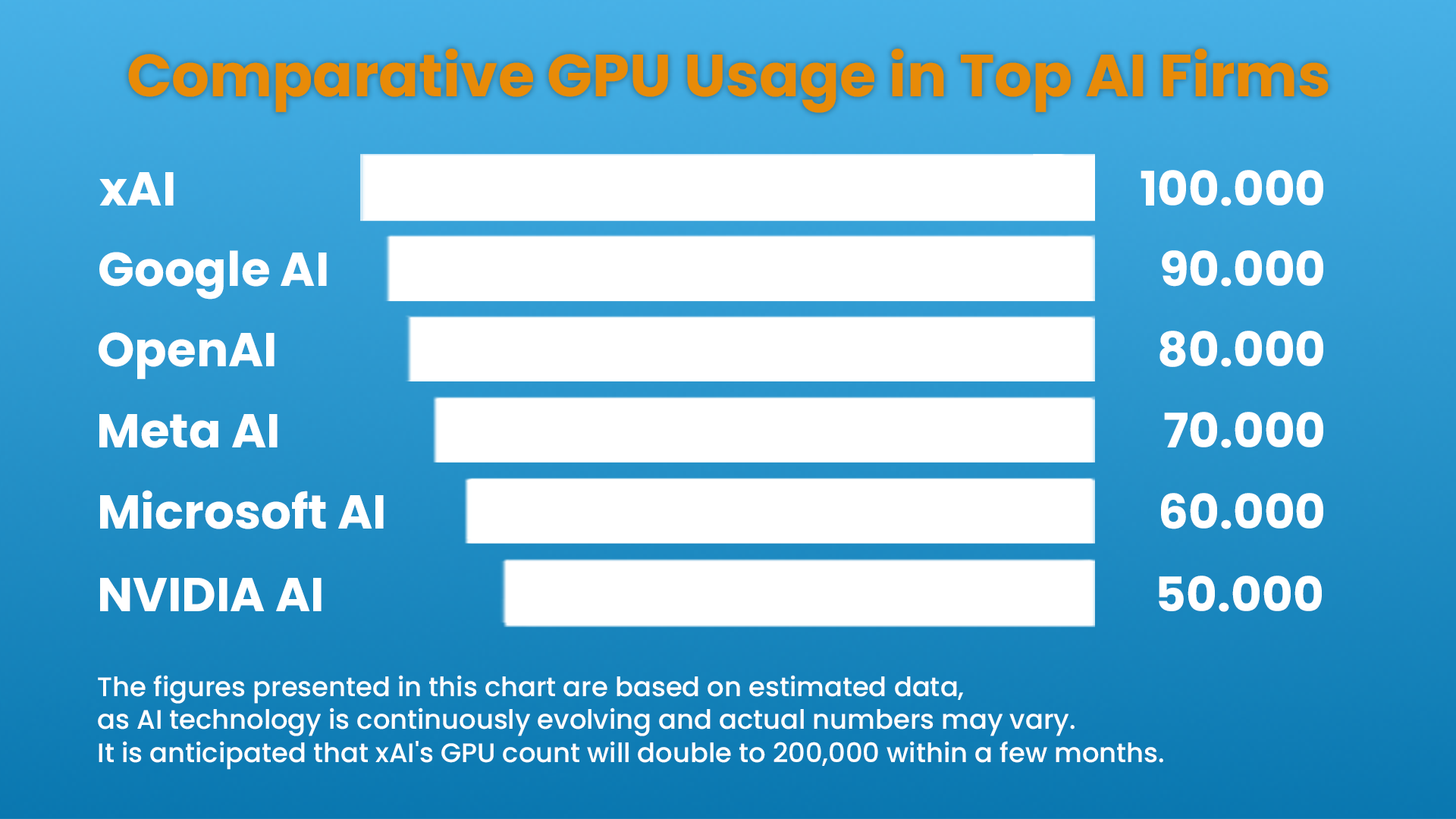

Colossus also surpasses major competitors, with xAI’s planned 200,000 GPUs outstripping Google AI’s 90,000 and Meta AI’s 70,000 GPUs. The expansion aims to boost xAI’s ability to develop and refine AI models like Grok 3, which is set to compete with GPT-5, OpenAI’s highly anticipated breakthrough in language model technology. Grok 3, xAI’s most advanced chatbot, is expected to launch in December 2024.

As Tesla prepares to unveil AI enhancements at its Robotaxi event this month, the collaboration between Tesla’s AI initiatives and xAI’s computational resources becomes increasingly apparent, particularly in autonomous driving technologies.

Environmental impact and sustainability measures

Despite its technological prowess, the Colossus project has raised environmental and resource concerns in the Memphis community. The supercomputer is expected to consume 1.3 million gallons of water daily and draw up to 150 megawatts of power at peak; sustained for a full day, that amounts to 3.6 million kWh. This is roughly the daily electricity use of 120,000 average American households, or about 43.8 million household-days of electricity over a year.
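The energy figures follow from a unit conversion, sketched below. It assumes, as the article's daily total implicitly does, that the facility draws its 150-megawatt peak for all 24 hours, which is an upper bound rather than a typical load. The variable names are illustrative.

```python
PEAK_POWER_MW = 150     # peak draw quoted in the article
HOURS_PER_DAY = 24      # assumption: peak draw sustained all day (upper bound)
HOUSEHOLDS = 120_000    # household equivalent quoted in the article
DAYS_PER_YEAR = 365

# Power (MW) times time (h) gives energy (MWh); 1 MWh = 1,000 kWh.
daily_energy_mwh = PEAK_POWER_MW * HOURS_PER_DAY
daily_energy_kwh = daily_energy_mwh * 1_000

# Per-household daily consumption implied by the article's comparison.
kwh_per_household_day = daily_energy_kwh / HOUSEHOLDS

# The article's 43.8 million figure is household-days per year.
household_days_per_year = HOUSEHOLDS * DAYS_PER_YEAR

print(f"Daily energy:            {daily_energy_kwh:,} kWh")
print(f"Implied per household:   {kwh_per_household_day:.0f} kWh/day")
print(f"Household-days per year: {household_days_per_year:,}")
```

The comparison therefore assumes about 30 kWh per household per day, and the 43.8 million figure is 120,000 households multiplied by 365 days, not a count of households.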

xAI has committed to mitigating these impacts by planning a new power substation and a gray-water processing facility. These steps aim to alleviate the strain on local resources, reflecting xAI’s commitment to balancing innovation with sustainability.
