Moshe Tanach is co-founder and CEO of NeuReality. Prior to founding the company, he held senior engineering leadership roles at Marvell and Intel, where he led complex wireless and networking products from architecture through mass production. He also served as AVP of R&D at DesignArt Networks (later acquired by Qualcomm), where he led development of 4G base station technologies.

Tell us about your company. What problems are you solving?

NeuReality was established by industry veterans from Nvidia-Mellanox, Intel, and Marvell, united by a vision to transform datacenter infrastructure for the AI era. As computational focus shifts from CPUs to GPUs and specialized AI processors, we recognized that general-purpose legacy CPU and NIC architectures had become bottlenecks, limiting high-end GPU performance and efficiency. Our mission is to redefine these system components, prioritizing efficiency and cost-effectiveness for next-generation AI infrastructure.

We address a critical challenge in today’s AI datacenters: underutilized GPUs idling while waiting for data. Whether in distributed training of large language models or in disaggregated inference pipelines, the network connecting these GPUs is increasingly vital in both bandwidth and latency. The core challenge is to move large volumes of data between GPUs fast enough to keep computation continuous. Failure to do so results in significant cost inefficiencies and undermines the profitability of AI applications.

Our purpose-built heterogeneous compute architecture, advanced AI networking, and software-first philosophy led to the launch of our NR1 product. NR1 integrates an embedded AI-NIC and is delivered with comprehensive inference-serving and networking software stacks. These are natively integrated with MLOps, orchestration tools, AI frameworks, and xCCL libraries, ensuring rapid innovation and optimal GPU utilization. We are now developing our second generation of products, starting with the NR2 AI-SuperNIC, focused exclusively on GPU-direct, east-west communication for large-scale AI factories.

What is the biggest pain point that you are solving today for customers?

The paradigm in datacenter design has shifted from optimizing individual server nodes to architecting entire server racks and clusters, scaling up to hundreds or thousands of GPUs. The interconnect between these GPU nodes and racks must match the performance of in-node connectivity, delivering maximum bandwidth and minimal latency.

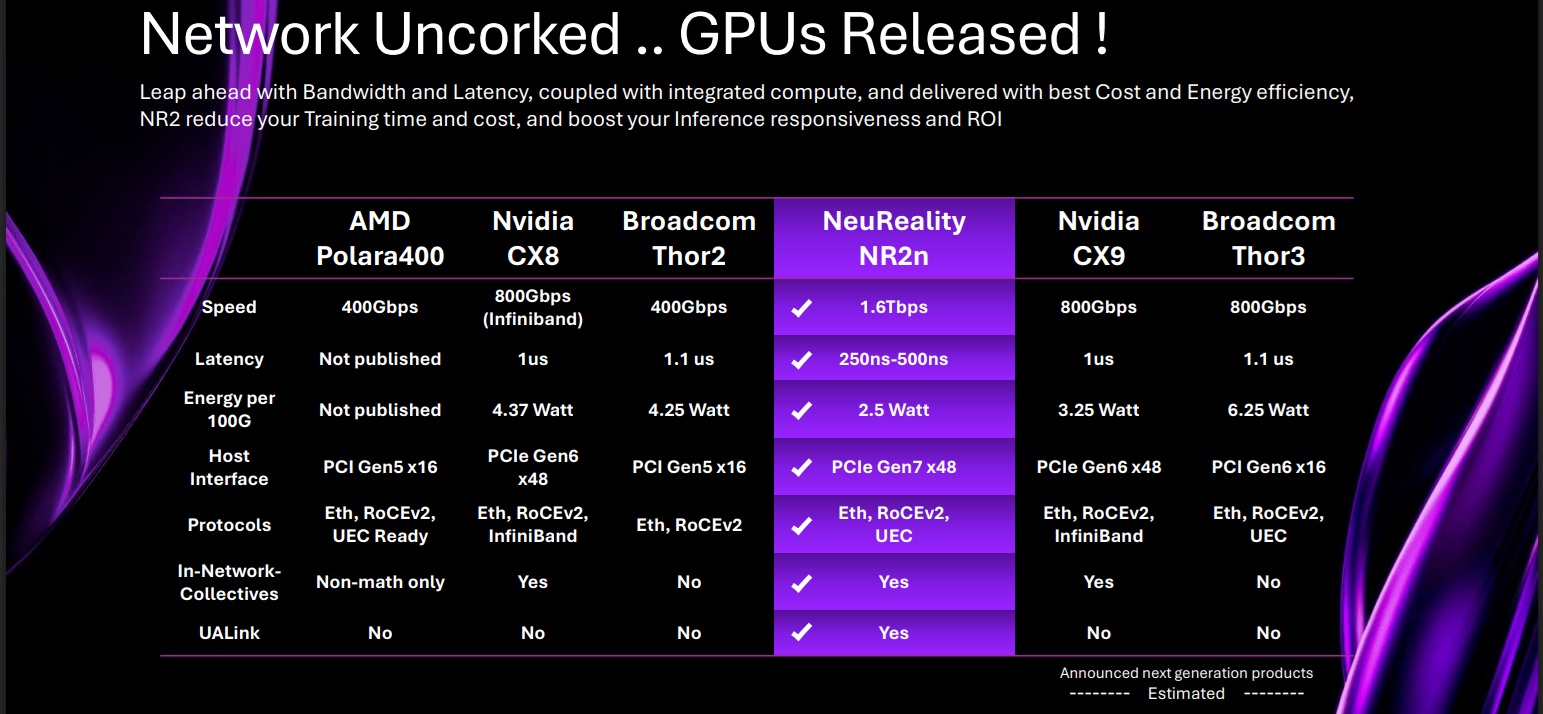

Our customers’ primary pain point is that current networking solutions, such as those from Nvidia and Broadcom, are neither wide nor fast enough, resulting in wasted GPU resources and increased operational costs due to power inefficiencies. To address this, we developed the NR2 AI-SuperNIC, purpose-built for scale-out AI systems. Free from legacy constraints, NR2 offers 1.6Tbps bandwidth, sub-500ns latency, and native support for GPU-direct interfaces over RoCE and UET. A flexible control plane and full hardware offload to the data plane support all distributed collective libraries, orchestration, and MLOps protocols. By eliminating unnecessary overhead, NR2 achieves industry-leading power efficiency, a critical advantage as the number of NIC ports and wire speeds continue to rise.

Once you secure the best GPUs and XPUs for AI, network performance and integration into AI workflows become the ultimate differentiators for AI datacenters and multi-site “AI brains.”

What keeps your customers up at night?

Our customers are focused on three core challenges:

- Maximizing the ROI of their GPU investments

- Managing AI infrastructure growth in a cost-effective, sustainable manner

- Avoiding lock-in to proprietary, closed solutions

From the outset, we addressed these concerns with a software-first, open-standards approach. This gives customers the flexibility to mix accelerators, adapt architectures, and scale without overhauling their entire system, while leveraging the power of developer communities. Customers recognize that superior hardware alone is insufficient. Robust, open software that leverages community-driven innovation and supports new algorithms and deployment models is essential to unlocking the full value of their infrastructure. Our software-first strategy has earned significant trust and respect from customers using our NR1 AI-NIC with our Inference Serving Stack (NR-ISS) and our Scale-out Networking Stack (NR-SONS), and from those preparing to adopt the NR2 AI-SuperNIC.

What does the competitive landscape look like and how do you differentiate? What new features/technology are you working on?

The competitive landscape is dominated by Nvidia’s ConnectX and Broadcom’s Thor general-purpose NIC products. While these solutions are advancing in bandwidth, their latency remains above 1 microsecond, which becomes a significant bottleneck as speeds increase to 800G and 1.6T. Hyperscalers and other leading customers are demanding faster, more efficient networking to pair with Nvidia GPUs and their own custom XPUs. Without such solutions, they are compelled to develop their own NICs to overcome current limitations, which has proven to be a long and complex undertaking.
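To see why a fixed latency matters more as links get faster, here is a rough back-of-the-envelope sketch. It assumes a simple model in which transfer time is NIC latency plus the time to serialize the message onto the wire; the 4 KB message size and the function names are hypothetical choices for illustration, not figures from NeuReality.

```python
def serialization_ns(message_bytes: int, link_gbps: float) -> float:
    """Time to put a message on the wire, in nanoseconds.

    bits / (Gbit/s) gives nanoseconds directly.
    """
    return message_bytes * 8 / link_gbps


def latency_share(message_bytes: int, link_gbps: float, latency_ns: float) -> float:
    """Fraction of total transfer time spent on fixed latency (simplified model)."""
    total_ns = latency_ns + serialization_ns(message_bytes, link_gbps)
    return latency_ns / total_ns


MESSAGE_BYTES = 4096   # hypothetical small message, for illustration only
LATENCY_NS = 1000.0    # the "above 1 microsecond" figure quoted in the text

for link_gbps in (400, 800, 1600):
    wire_ns = serialization_ns(MESSAGE_BYTES, link_gbps)
    share = latency_share(MESSAGE_BYTES, link_gbps, LATENCY_NS)
    print(f"{link_gbps}G: wire time {wire_ns:.2f} ns, latency share {share:.1%}")
```

Under these assumptions, the wire time halves each time the link speed doubles while the fixed latency stays put, so latency accounts for a growing share of every small transfer.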

NeuReality differentiates itself by delivering double the bandwidth and less than half the latency of competing products. We then deliver exclusive AI features in the core network engines, such as the packet processors and the hardened transport layers, and the integrated system functions, such as PCIe switch and peripheral interfaces.

We defined and designed the NR2 AI-SuperNIC die, package, and board in collaboration with market leaders to accommodate diverse system topologies. Features include:

- Integrated UALink for high-performance in-node connectivity between CPUs and GPUs, bridging scale-up and scale-out networks

- Embedded PCIe switch for flexible system architectures

- xCCL acceleration for both mathematical and non-mathematical collectives, a unique capability

- Exceptional power efficiency of 2.5W per 100G, setting a new industry benchmark

- Comprehensive, open-source software stack with native support for all major AI frameworks and libraries
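As a quick sanity check on the power figure in the list above, the arithmetic can be sketched as follows. It assumes the 2.5W per 100G figure scales linearly with link speed, which the interview does not state explicitly; the port count is a hypothetical value chosen only to illustrate fleet-level impact.

```python
WATTS_PER_100G = 2.5  # efficiency figure quoted in the feature list


def nic_power_watts(link_gbps: float, watts_per_100g: float = WATTS_PER_100G) -> float:
    """Estimated NIC power at a given link speed, assuming linear scaling."""
    return link_gbps / 100 * watts_per_100g


per_port_w = nic_power_watts(1600)   # one 1.6Tbps port
HYPOTHETICAL_PORTS = 10_000          # illustrative cluster size, not from the source
fleet_kw = per_port_w * HYPOTHETICAL_PORTS / 1000

print(f"Per 1.6T port: {per_port_w} W")
print(f"Across {HYPOTHETICAL_PORTS} ports: {fleet_kw} kW")
```

Under the linear-scaling assumption, a 1.6Tbps port would draw about 40 W, which is why per-port efficiency adds up quickly as port counts grow.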

Looking at this table, you can clearly see the advantage of NR2 AI-SuperNIC compared to today’s solutions and to future roadmap solutions from our competition:

How do customers normally engage with your company?

We work directly with hyperscalers, neocloud customers, and enterprises, providing support both directly and through system integrators and OEMs. Our engineering team invests in understanding each customer’s unique needs, collaborating closely to deliver tailored solutions. Most customers approach us not simply seeking a new networking solution but aiming to maximize the value of their GPU investments.

Engagements often begin with proof-of-concept (POC) projects. With our NR1 AI-CPU product, we established a robust ecosystem of partners, channels, and lead customers to ensure early product validation and customer satisfaction. For NR2, we are inviting partners to join the AI-SuperNIC Partnership and validate interoperability with their hardware, software stacks, and communication libraries well before full-scale deployment.

What is next in the evolution of AI infrastructure?

Looking ahead, we anticipate two key trends will shape customer focus and industry direction.

First, as AI workloads become increasingly dynamic and distributed, customers will demand even greater flexibility and automation in their infrastructure. This will drive the adoption of intelligent orchestration platforms that can optimize resource allocation in real time, ensuring maximum efficiency and responsiveness across diverse environments. To me, it’s crystal clear that rack-scale design is not enough. Scale-out must evolve together with scale-up so that deployment depends less on where GPUs sit in the node, server, rack, or cluster of racks.

Second, we expect sustainability and energy efficiency to become central decision factors for enterprises building or using large-scale AI infrastructure. Organizations will seek solutions that not only deliver top-tier performance but also minimize environmental impact and operational costs. As a result, power-efficient networking and hardware offload will become critical differentiators in the market.
