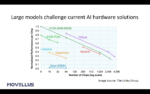

Neural network models are advancing rapidly and becoming more complex. Application developers using these new models need faster AI inference but typically can’t afford more power, space, or cooling. Researchers have proposed various strategies to wring more performance out of AI inference architectures,…

Tag: Scalable AI

Advantages of Large-Scale Synchronous Clocking Domains in AI Chip Designs

We are currently in the hockey-stick growth phase of artificial intelligence (AI). Advances are happening at a lightning pace, and while the rate of adoption is exploding, so is model size. Over the past couple of years, we’ve gone from about two billion parameters to Google Brain’s recently announced trillion-parameter…