What if your smart device could empathize with you? The evolving field known as affective computing is likely to make it happen soon. Scientists and engineers are developing systems and devices that can recognize, interpret, process, and simulate human affects or emotions. It is an interdisciplinary field spanning computer science, psychology, and cognitive science. While its origins can be traced to longstanding philosophical inquiries into emotion, a 1995 paper on affective computing by Rosalind Picard catalyzed modern progress.

The more smart devices we have in our lives, the more we are going to want them to behave politely and be socially smart. We don’t want them to bother us with unimportant information or overload us with too much information. That kind of common-sense reasoning requires an understanding of our emotional state. We’re starting to see such systems perform specific, predefined functions, like changing in real time how you are presented with the questions in a quiz, or recommending a set of videos in an educational program to fit the changing mood of students.

How can we make a device that responds appropriately to your emotional state? Researchers are using sensors, microphones, and cameras combined with software logic. A device that can detect and appropriately respond to a user’s emotions and other stimuli could gather cues from a variety of sources. Facial expressions, posture, gestures, speech, the force or rhythm of keystrokes, and the temperature changes of a hand on a mouse can all potentially signify emotional changes that a computer can detect and interpret. A built-in camera, for example, may capture images of a user. Speech, gesture, and facial recognition technologies are being explored for affective computing applications.

Just looking at speech alone, a computer can observe innumerable variables that may indicate emotional reaction and variation. Among these are a person’s rate of speaking, accent, pitch, pitch range, final lowering, stress frequency, breathlessness, brilliance, loudness, and discontinuities in the pattern of pauses or pitch.
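To make a few of these variables concrete, here is a minimal sketch of how loudness, pitch, and the share of pauses might be measured from raw audio samples. It is an illustration only, not how any particular product works: the function names, the 75 to 400 Hz pitch search range, and the silence threshold are all assumptions chosen for the example, and the input is a synthetic tone rather than real speech.

```python
import math


def rms_loudness(samples):
    """Root-mean-square amplitude, a rough proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch_hz(samples, sample_rate, min_hz=75.0, max_hz=400.0):
    """Estimate pitch as the lag with the strongest autocorrelation.

    The 75-400 Hz search range is an assumed range for speech.
    """
    min_lag = int(sample_rate / max_hz)
    max_lag = int(sample_rate / min_hz)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


def pause_ratio(samples, frame_size, silence_threshold):
    """Fraction of fixed-size frames whose loudness is below a threshold."""
    frames = [samples[i:i + frame_size]
              for i in range(0, len(samples) - frame_size + 1, frame_size)]
    quiet = sum(1 for f in frames if rms_loudness(f) < silence_threshold)
    return quiet / len(frames)


def synthetic_tone(freq_hz, sample_rate, n_samples, amplitude=0.5):
    """Generate a sine wave to stand in for a voiced sound (test input only)."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]


if __name__ == "__main__":
    tone = synthetic_tone(200.0, 8000, 800)
    print("loudness:", rms_loudness(tone))
    print("pitch (Hz):", estimate_pitch_hz(tone, 8000))
    print("pause ratio:", pause_ratio(tone + [0.0] * 800, 80, 0.05))
```

Real speech is far messier than a pure tone, so a practical system would work on short overlapping windows and track how these values change over time rather than reading a single number.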

Gestures can also be used to detect emotional states, especially when used in conjunction with speech and face recognition. Such gestures might include simple reflexive responses, like lifting your shoulders when you don’t know the answer to a question. Or they could be complex and meaningful, as when communicating with sign language.

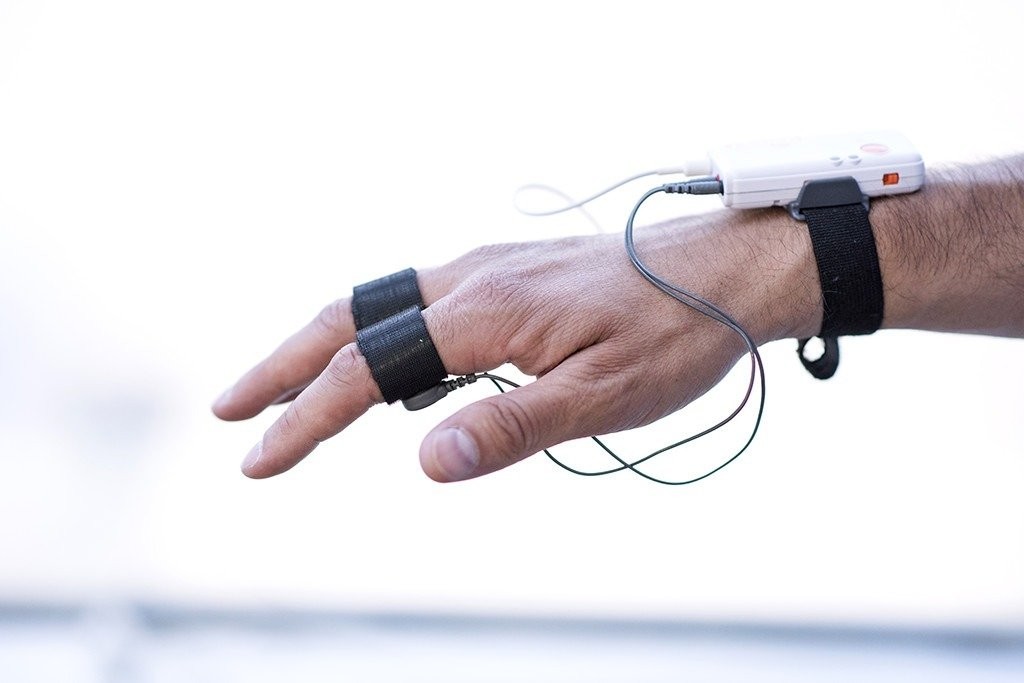

A third approach is the monitoring of physiological signs. These might include heart rate or minute contractions of facial muscles. Pulses in blood volume can be monitored, as can what’s known as galvanic skin response. This area of research is still relatively new, but it is gaining momentum, and we are starting to see real products that implement the techniques.

Source: galvanic skin response, Explorer Research

Recognizing emotional information requires the extraction of meaningful patterns from the gathered data. Some researchers are using machine learning techniques to detect such patterns.
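As one simple illustration of pattern detection, the sketch below trains a nearest-centroid classifier: it averages the feature vectors seen for each emotion label, then assigns a new observation to the closest average. This is just one basic technique picked for brevity, not the method any specific research group uses. The feature meanings, the labels, and every number in the training data are made up for the example.

```python
import math
from collections import defaultdict


def train_centroids(examples):
    """Average the feature vectors for each label.

    `examples` is a list of (features, label) pairs.
    """
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in total]
            for label, total in sums.items()}


def classify(centroids, features):
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids,
               key=lambda label: math.dist(centroids[label], features))


# Hypothetical, hand-made data. Each vector is assumed to hold
# (speaking rate, pitch range, loudness), each scaled to 0..1.
TRAINING = [
    ((0.9, 0.8, 0.7), "agitated"),
    ((0.8, 0.9, 0.8), "agitated"),
    ((0.3, 0.2, 0.3), "calm"),
    ((0.2, 0.3, 0.2), "calm"),
]

if __name__ == "__main__":
    model = train_centroids(TRAINING)
    print(classify(model, (0.7, 0.75, 0.9)))
    print(classify(model, (0.1, 0.2, 0.3)))
```

In practice the hard part is collecting reliably labeled examples, since people express the same emotion in very different ways.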

Detecting emotion in people is one thing. But work is also going into computers that themselves show what appear to be emotions. Already in use are systems that simulate emotions in automated telephone and online conversation agents to facilitate interactivity between human and machine.

There are many applications for affective computing. One is in education. Such systems can help address one of the major drawbacks of online learning versus in-classroom learning: the difficulty teachers face in adapting their teaching to the emotional state of their students. In e-learning applications, affective computing can adjust the presentation style of a computerized tutor when a learner is bored, interested, frustrated, or pleased. Psychological health services also benefit from affective computing applications that can determine a client’s emotional state.
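The tutoring idea can be pictured as a simple lookup from a detected learner state to a change in presentation. The sketch below assumes the emotion has already been detected by some other component. The four states come from the paragraph above, while the specific adjustments and the names `TUTOR_ADJUSTMENTS` and `adjust_presentation` are hypothetical.

```python
# Hypothetical mapping from a detected learner state to a tutor action.
DEFAULT_ADJUSTMENT = "keep the current pace and format"

TUTOR_ADJUSTMENTS = {
    "bored": "switch to a more challenging or interactive exercise",
    "frustrated": "slow down and offer a worked hint",
    "interested": DEFAULT_ADJUSTMENT,
    "pleased": "introduce the next topic",
}


def adjust_presentation(learner_state):
    """Pick a presentation change; unknown states leave things as they are."""
    return TUTOR_ADJUSTMENTS.get(learner_state, DEFAULT_ADJUSTMENT)


if __name__ == "__main__":
    for state in ("bored", "frustrated", "confused"):
        print(state, "->", adjust_presentation(state))
```

Falling back to "no change" for an unrecognized state is a deliberate choice here: a tutor that reacts to a misread emotion may be more disruptive than one that does nothing.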

Robotic systems capable of processing affective information can offer more functionality alongside human workers in uncertain or complex environments. Companion devices, such as digital pets, can use affective computing abilities to enhance realism and display a higher degree of autonomy.

Other potential applications can be found in social monitoring. For example, a car might monitor the emotions of all occupants and invoke additional safety measures, potentially alerting other vehicles if it detects that the driver is angry. Affective computing also has potential applications in human-computer interaction, such as affective “mirrors” that let users see how they perform. One example might be warning signals that tell drivers if they are sleepy or going too fast or too slow. A system might even call relatives if the driver is sick or drunk (though one can imagine mixed reactions on the part of the driver to such developments). Emotion-monitoring agents might issue a warning before one sends an angry email, or a music player could select tracks based on your mood. Companies may even be able to use affective computing to infer whether their products will be well received by the market by detecting facial or speech changes in potential customers when they read an ad or first use the product. Affective computing is also starting to be applied to the development of communicative technologies for use by people with autism.

Many universities have done extensive work on affective computing. The resulting projects include the galvactivator, which was a good starting point. It’s a glove-like wearable device that senses the wearer’s skin conductivity and maps the values to a bright LED display. Increases in skin conductivity across the palm tend to indicate physiological arousal, so the display glows more brightly as arousal rises. This has many potentially useful purposes, including self-feedback for stress management, facilitation of conversation between two people, or visualizing aspects of attention while learning. Along with the revolution in wearable computing technology, affective computing is poised to become more widely accepted, with applications in many aspects of life.
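The core idea behind a device like the galvactivator, mapping a skin conductivity reading to LED brightness, can be sketched in a few lines. This is not the device's actual firmware or calibration. The function name, the baseline and ceiling values in microsiemens, and the 0 to 255 brightness scale are all assumptions for illustration.

```python
def led_brightness(conductance_us, baseline_us=2.0, ceiling_us=20.0, levels=255):
    """Map skin conductance (microsiemens) to an LED level from 0 to `levels`.

    Readings at or below the assumed baseline give 0 (LED off); readings at
    or above the assumed ceiling give full brightness; values in between
    scale linearly.
    """
    if ceiling_us <= baseline_us:
        raise ValueError("ceiling_us must be greater than baseline_us")
    fraction = (conductance_us - baseline_us) / (ceiling_us - baseline_us)
    fraction = max(0.0, min(1.0, fraction))  # clamp to the 0..1 range
    return round(fraction * levels)


if __name__ == "__main__":
    for reading in (1.0, 5.0, 12.0, 25.0):
        print(reading, "uS ->", led_brightness(reading))
```

Because resting skin conductivity differs from person to person, a real wearable would likely need per-user calibration rather than fixed limits like the ones assumed here.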

One future application will be the use of affective computing in the Metaverse, which will humanize the avatar and add emotion as a fifth dimension, opening limitless possibilities. But all these advances, racing to make machines more human, will come with challenges, namely SSP (Security, Safety, Privacy), the three pillars of the online user. We need to make sure all three pillars are protected and well defined. It’s easier said than done, but clear guidelines on what data is collected, where it goes, and who will use it will speed acceptance of affective computing hardware and software, without replacing physical pain with the mental pain of fearing for the privacy, security, and safety of our data.

