Arthur Hanson


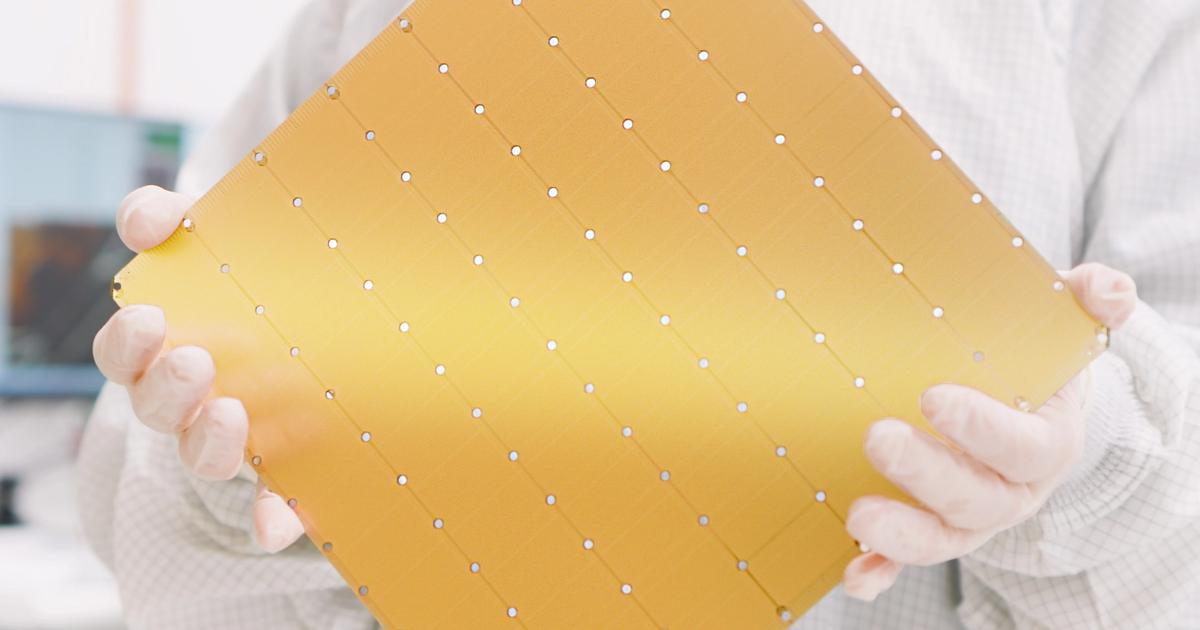

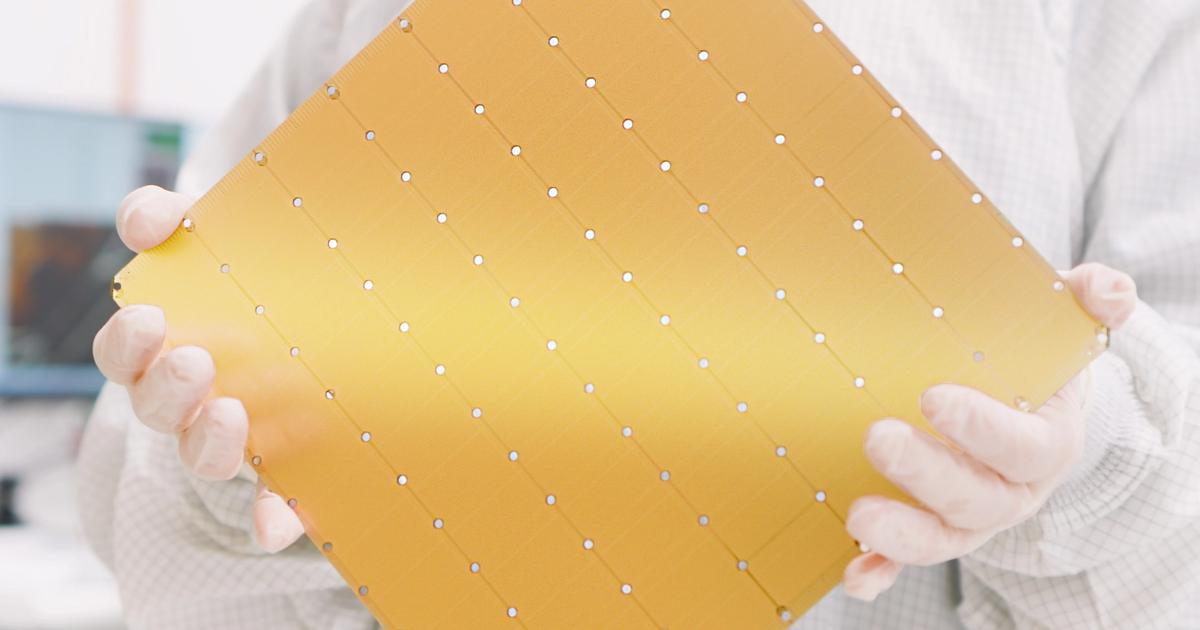

Cerebras maintains that having everything on one giant chip saves power and provides superior results to a board full of separate chips. Any thoughts or comments on this would be appreciated.

www.cerebras.ai

Cerebras Systems Unveils World’s Fastest AI Chip with Whopping 4 Trillion Transistors - Cerebras

Third Generation 5nm Wafer Scale Engine (WSE-3) Powers Industry’s Most Scalable AI Supercomputers, Up To 256 exaFLOPs via 2048 Nodes
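For scale, the headline figures work out to about 125 petaFLOPs of peak compute per node. This assumes that the 256 exaFLOPs is divided evenly across all 2048 nodes and that both numbers are peak values. The announcement title doesn't state either point, so treat this as a rough check rather than a measured result:

```python
# Figures quoted in the WSE-3 announcement headline.
total_exaflops = 256  # peak cluster compute, in exaFLOPs
nodes = 2048          # maximum number of nodes in the cluster

# Assumption: compute is split evenly across nodes.
# Convert exaFLOPs to petaFLOPs (1 exaFLOP = 1000 petaFLOPs), then divide per node.
per_node_petaflops = total_exaflops * 1000 / nodes
print(f"{per_node_petaflops:g} petaFLOPs per node")  # prints "125 petaFLOPs per node"
```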

Cerebras Raises $1.1 Billion at $8.1 Billion Valuation

Cerebras is the go-to platform for fast and effortless AI training. Learn more at cerebras.ai.