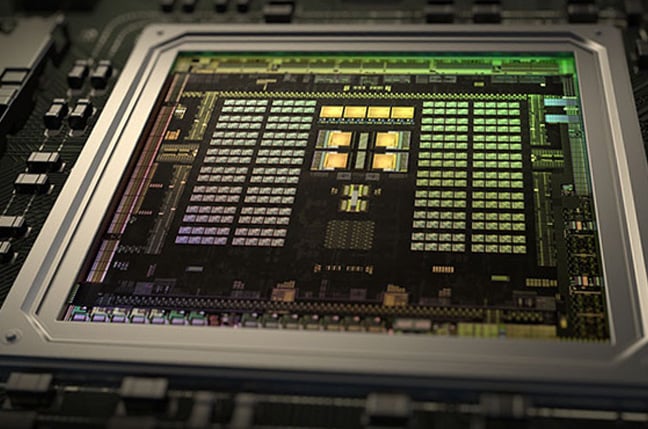

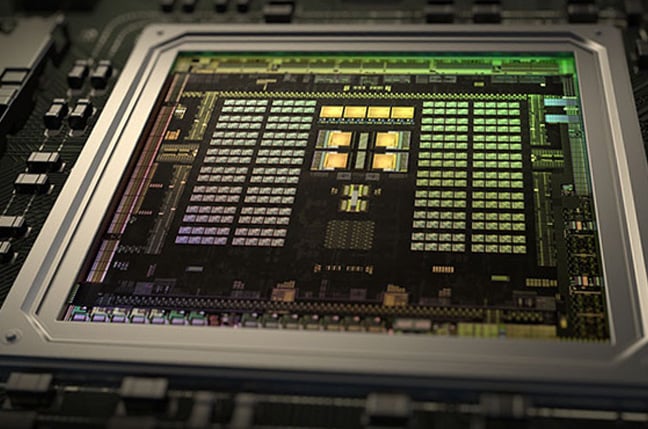

Analysis: Nvidia is facing its stiffest competition in years with new accelerators from Intel and AMD that challenge its best chips on memory capacity, performance, and price.

However, it's not enough just to build a competitive part: you also need software that can harness all those FLOPS – something Nvidia has spent the better part of two decades building with its CUDA runtime.

Nvidia is well established within the developer community. A lot of codebases have been written and optimized for its specific brand of hardware, while competing frameworks for low-level GPU programming are far less mature. This early momentum is often referred to as "the CUDA moat."

But just how deep is this moat in reality?

www.theregister.com

Nvidia's CUDA moat may not be as impenetrable as you think

Not as impenetrable as you might think, but still more than Intel or AMD would like