NVIDIA’s once-dominant position in China’s AI GPU market has effectively collapsed after new U.S. export restrictions put its advanced chips off-limits to Chinese customers. CEO Jensen Huang confirmed that NVIDIA’s market share in China has fallen from 95% to zero, a dramatic reversal for a region that previously contributed 20–25% of its data center revenue. This loss highlights how geopolitics, rather than competition, reshaped one of NVIDIA’s most profitable markets.

In the short term, the company is offsetting the revenue hit with explosive demand from U.S. and international cloud providers building AI infrastructure. Global hyperscalers, sovereign AI projects, and enterprise adoption of generative AI continue to drive orders for the H100, H200, and newer Blackwell GPUs. However, the long-term implications are significant. China is accelerating efforts to achieve semiconductor self-sufficiency, with Huawei’s Ascend 910B and startups like Biren Technology and Moore Threads filling the vacuum left by NVIDIA. AMD and Intel may capture some global share, but neither can sell unrestricted advanced AI chips to China either.

NVIDIA’s pivot toward software, AI ecosystems, and custom data center solutions underscores its strategy to remain indispensable — even as one of its largest regional markets slips permanently out of reach.

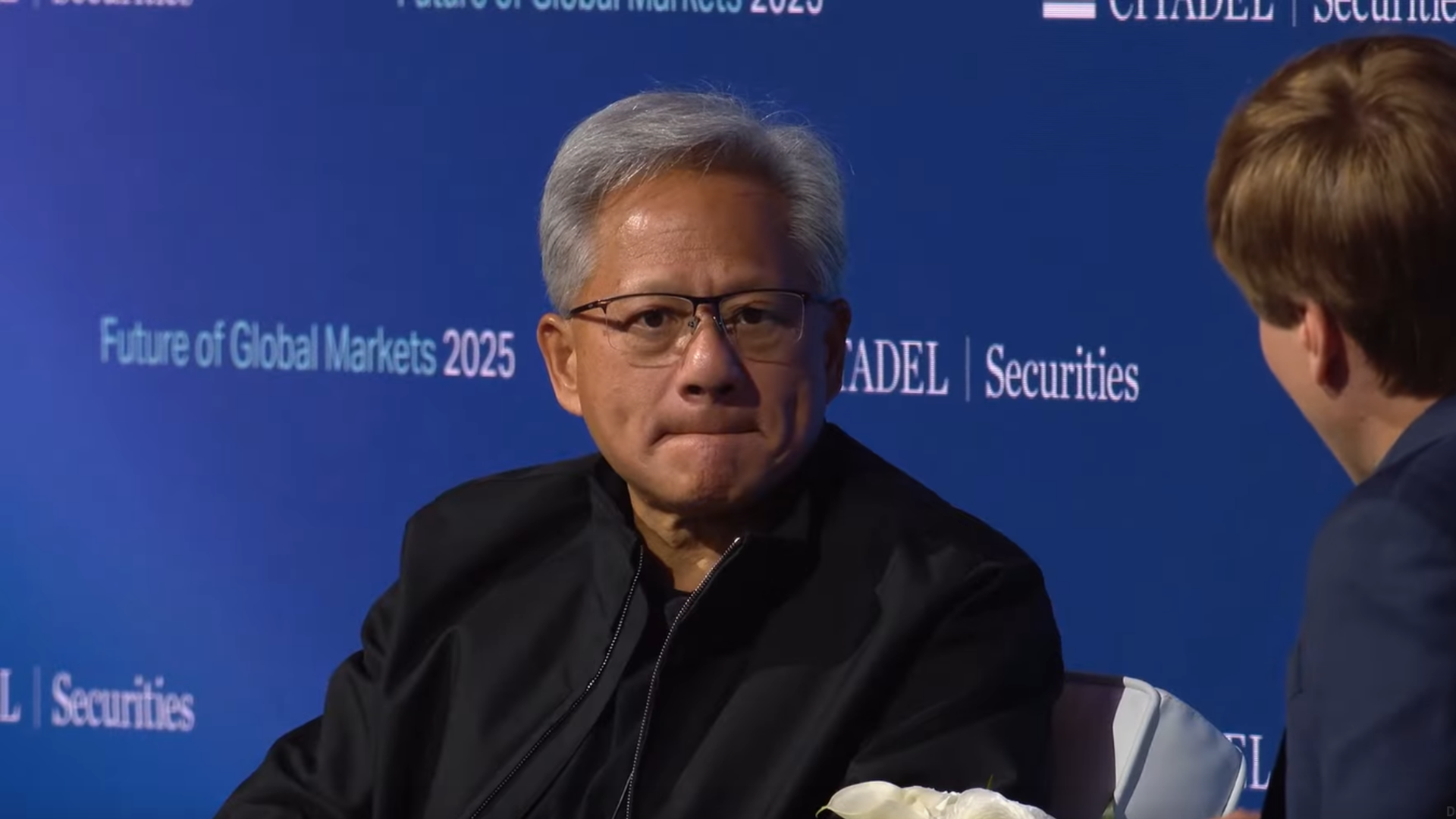

Jensen says Nvidia’s China AI GPU market share has plummeted from 95% to zero — the Chinese market previously amounted to 20% to 25% of the chipmaker's data center revenue

Speaking at a Citadel Securities event on October 6, the Nvidia CEO blamed U.S. export controls and said the company now assumes no revenue from China in its forecasts.