Arthur Hanson

Any thoughts on the WSE-3 chip from Cerebras that has four trillion transistors with 900,000 optimized cores? Is this a game changer or a curiosity?

> Any thoughts on the WSE-3 chip from Cerebras that has four trillion transistors with 900,000 optimized cores? Is this a game changer or a curiosity?

It's a game changer for AI. Nothing touches it performance-wise, especially for inference. There are numerous challenges for a wafer-scale processor, especially in applications. They need to keep the cores small, so that a small defect doesn't cost a lot of expensive die area, as it would with relatively large cores. But AI-specific cores are naturally small. The packaging needs to be completely custom, and the cooling system is a work of art. No standard high-volume rack chassis for Cerebras. Clustering the modules is a networking challenge too, but Cerebras uses its own internal networking architecture, with 100Gb Ethernet for external communications and a custom gateway for translation to their internal message-passing architecture.
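To put rough numbers on the small-core argument, here is a minimal sketch using the textbook Poisson yield model, where the chance that a block of area A contains at least one defect is 1 - exp(-D * A). The defect density and both core sizes are made-up illustrative values, not Cerebras or foundry figures, and the function name is hypothetical.

```python
import math


def fraction_of_area_lost(core_area_mm2: float, defects_per_mm2: float) -> float:
    """Expected fraction of silicon disabled if any defect kills its whole core.

    Poisson yield model: P(core has at least one defect) = 1 - exp(-D * A).
    """
    return 1.0 - math.exp(-defects_per_mm2 * core_area_mm2)


# Assumed, illustrative inputs (not Cerebras or foundry data).
DEFECT_DENSITY = 0.001   # defects per mm^2, i.e. 0.1 per cm^2
SMALL_CORE_MM2 = 0.05    # hypothetical tiny AI core
LARGE_CORE_MM2 = 600.0   # hypothetical GPU-sized block

for name, area in [("small core", SMALL_CORE_MM2), ("large block", LARGE_CORE_MM2)]:
    lost = fraction_of_area_lost(area, DEFECT_DENSITY)
    print(f"{name:11s} {area:8.2f} mm^2 -> {lost:.4%} of area lost")
```

Under these assumptions the lost fraction grows with core area, which is the reason a wafer-scale part wants many tiny cores plus spares rather than a few big ones.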

> It's a game changer for AI. Nothing touches it, performance-wise, especially for inference.

Does outright performance really matter here though?

> Does outright performance really matter here though?

Of course, it depends on the application, but performance matters in the usual metrics: latency (time to first token) and throughput (tokens per second). With thousands or tens of thousands of concurrent users, inference performance can be critical to customer application success.
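As a rough illustration of how those two metrics combine from one user's point of view, here is a small sketch. It assumes the simplest possible model: wait for the first token, then receive the rest at a constant per-user rate. The function name and all numbers are hypothetical and are not measurements of any vendor's system.

```python
def response_time_s(ttft_s: float, output_tokens: int, tokens_per_s: float) -> float:
    """Seconds until one user has the full reply.

    Simplified model: time to first token, then the remaining tokens
    stream at a constant per-user rate.
    """
    if output_tokens < 1 or tokens_per_s <= 0:
        raise ValueError("need at least one output token and a positive rate")
    return ttft_s + (output_tokens - 1) / tokens_per_s


# Hypothetical comparison: same time to first token, different streaming rates.
for rate in (100.0, 1000.0):
    total = response_time_s(ttft_s=0.5, output_tokens=501, tokens_per_s=rate)
    print(f"{rate:6.0f} tokens/s -> {total:.2f} s for a 501-token reply")
```

For short replies the time to first token dominates; for long replies (or multi-step agent chains) the streaming rate does.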

> AI is already a highly risky investment for any major firm, and if you're spending billions on something already highly risky -- would you further add to the risk by trying Cerebras's products instead of just buying from Nvidia?

I think Cerebras products are largely still in the investigation, research, and proof-of-concept phases for user applications. I agree, Nvidia clusters are the low-risk approach. I think, but don't have data, that cloud company AI chips and systems are mostly targeted at internal applications.

> I agree the tech is pretty fantastic, but I think they're just waiting for a buyout from an AMD, Nvidia, or similar at this point?

I doubt it. Cerebras was a unicorn a few years ago, and has had a plan to go public. As with so many unicorns, I think they're just waiting for the exact right time to saddle themselves with the complexity of being a public company in exchange for the huge payoff of going public.

> I think they're amazing.

I agree - they have found a way to build wafer-scale chips, something that has killed many companies before them, and to harness the WSE-3 / CS-3 on one of the most relevant problems of the times, LLM inference. Their on-chip static memory and associated bandwidth give them pretty much unrivaled performance on LLM benchmarks, plus the short on-chip interconnect gives them a power advantage on a per-compute basis. Where I think they fall down a bit is on their capital cost per million tokens in a many-user environment.

> Where I think they fall down a bit is on their capital cost per million tokens in a many-user environment.

I don't know how to answer this. Cerebras offers their own cloud services for their systems, and the pricing is publicly advertised. I'm not ambitious enough to do the rigorous analysis, but it is claimed to be competitive with competing cloud services. If you want to actually buy a Cerebras system, their business terms are confidential, and pricing isn't published.

> I agree - they have found a way to build wafer-scale chips, something that has killed many companies before them, and to harness the WSE-3 / CS-3 on one of the most relevant problems of the times, LLM inference. Their on-chip static memory and associated bandwidth give them pretty much unrivaled performance on LLM benchmarks, plus the short on-chip interconnect gives them a power advantage on a per-compute basis. Where I think they fall down a bit is on their capital cost per million tokens in a many-user environment.

In theory their tech should give them the best perf/watt -- which should let them scale down cost per token in a large environment further than Nvidia's offerings.
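For anyone who wants to play with the capital-cost versus perf/watt trade-off, here is a back-of-envelope sketch. It assumes cost per million tokens is just amortized purchase price plus electricity, divided by the tokens actually served. Every input is a placeholder: real Cerebras and Nvidia system prices are not published in this thread, and the model ignores cooling, networking, staff, and facility costs.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
HOURS_PER_YEAR = 365 * 24


def cost_per_million_tokens(
    capex_usd: float,
    lifetime_years: float,
    power_kw: float,
    usd_per_kwh: float,
    aggregate_tokens_per_s: float,
    utilization: float = 1.0,
) -> float:
    """Amortized capex plus energy, in USD per one million tokens served."""
    tokens_per_year = aggregate_tokens_per_s * utilization * SECONDS_PER_YEAR
    if tokens_per_year <= 0:
        raise ValueError("throughput and utilization must be positive")
    capex_per_year = capex_usd / lifetime_years
    energy_per_year = power_kw * HOURS_PER_YEAR * usd_per_kwh
    return (capex_per_year + energy_per_year) / tokens_per_year * 1e6


# Placeholder systems: "A" is pricier but faster, "B" is cheaper but slower.
for name, capex, kw, tps in [("A", 2_000_000, 25.0, 50_000), ("B", 400_000, 10.0, 8_000)]:
    usd = cost_per_million_tokens(capex, 4.0, kw, 0.10, tps, utilization=0.5)
    print(f"system {name}: ${usd:.3f} per million tokens")
```

The point of the sketch is only that a perf/watt advantage shows up in the energy term, while the capital-cost concern shows up in the amortization term, and which one dominates depends on aggregate throughput and utilization.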

They have a facility called MemoryX units, which are connected via their proprietary inter-WSE interconnect called SwarmX. This article describes the off-chip distributed DRAM memory at a high level:Iirc their largest stakeholder (70+%?) is in the Middle East.

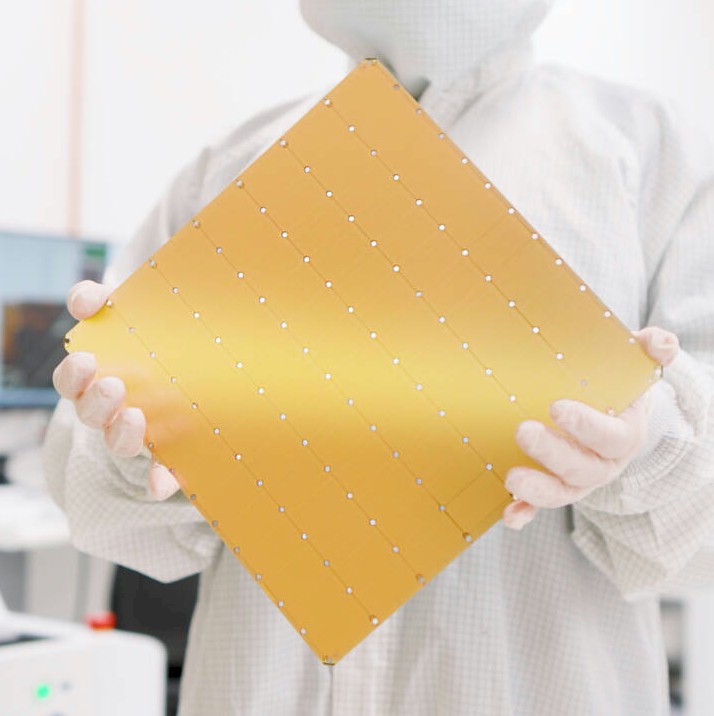

I like their approach, I think it's a clever way to work with what we have today. From what I glean of their documents, they take the whole wafer without dicing, and route redistribution (?) layers over the reticle boundaries. Please correct me if I'm wrong. If true, this technique would make for fascinating reading all by itself.

A question: is all the memory in the wafer itself, or do they add more atop the wafer somewhat like AMD's 3Dcache products?

www.nextplatform.com

> I don't know how to answer this. Cerebras offers their own cloud services for their systems, and the pricing is publicly advertised. I'm not ambitious enough to do the rigorous analysis, but it is claimed to be competitive with competing cloud services. If you want to actually buy a Cerebras system, their business terms are confidential, and pricing isn't published.

Ah, but they are benchmarked for performance on specific models in terms of speed (MTokens/s), time to first token, and cost per million tokens by guys like Artificial Analysis.

> Ah, but they are benchmarked for performance on specific models in terms of speed (MTokens/s), time to first token, and cost per million tokens by guys like Artificial Analysis.

I admit, I'm biased. I hate benchmarks. I used to be a DBMS development guy, and given the complexity of systems, software architecture, and data structures, you could often design a specific benchmark your DBMS was better at or cheaper at than the competition. The sales and marketing people always wanted to win at something, especially something the customers thought was important. I hated those discussions. The really important capability was the whole weighted portfolio of capabilities. But I digress.

But benchmarks vary greatly based on other parameters like batch size and number of concurrent end users. NVIDIA was trolling Cerebras a week or so ago with one particular case where Cerebras endpoint performance was inferior to one of their smallish DGX8 boxes (which therefore had a huge cost advantage).