Arthur Hanson

Well-known member

Will this technology help the Chinese replace some of the HBM required for AI or is it just a small step?

At its Data Storage AI SSD launch event, Huawei presented the OceanDisk EX 560, OceanDisk SP 560, and OceanDisk LC 560 drives.

The company described the launches as a response to the “memory wall” and “capacity wall” problems that currently limit artificial intelligence workloads.

The OceanDisk EX 560 is described as an “extreme performance drive” with write performance of up to 1,500K IOPS, latency below 7µs, and endurance of 60 drive writes per day (DWPD).

Huawei says the drive can increase the number of fine-tunable model parameters on a single machine sixfold, potentially making it useful for LLM fine-tuning.

The SP 560 takes a more cost-focused approach, with 600K IOPS and lower durability of 1 DWPD.

It targets inference scenarios, with claims of reducing first-token latency by 75% while doubling throughput.
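To put the endurance figures for the EX 560 and SP 560 in perspective: DWPD converts to total data written as DWPD × capacity × days in the warranty period. The sketch below assumes a hypothetical 1 TB capacity and a 5-year warranty, neither of which is stated in the article, so only the ratio between the two drives is meaningful.

```python
def total_writes_tb(dwpd: float, capacity_tb: float, warranty_years: float) -> float:
    """Total data that can be written over the warranty period, in TB."""
    return dwpd * capacity_tb * warranty_years * 365

# Hypothetical values: the article gives neither capacity nor warranty length.
ASSUMED_CAPACITY_TB = 1.0
ASSUMED_WARRANTY_YEARS = 5.0

ex_560 = total_writes_tb(60, ASSUMED_CAPACITY_TB, ASSUMED_WARRANTY_YEARS)
sp_560 = total_writes_tb(1, ASSUMED_CAPACITY_TB, ASSUMED_WARRANTY_YEARS)

print(f"EX 560 (60 DWPD): {ex_560:,.0f} TB written")
print(f"SP 560 (1 DWPD):  {sp_560:,.0f} TB written")
print(f"Ratio: {ex_560 / sp_560:.0f}x")
```

Whatever the real capacity and warranty turn out to be, the EX 560's rated write budget per terabyte is 60 times that of the SP 560, which fits the write-heavy fine-tuning versus read-heavy inference positioning.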

The LC 560 is the highest-capacity SSD in the series, with a stated single-drive capacity of 245TB and read bandwidth of 14.7GB/s.
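As a rough sense of scale, the two stated LC 560 figures give the time needed to read the whole drive once. This is a back-of-the-envelope sketch that assumes decimal units (1 TB = 1000 GB) and that the stated bandwidth could be sustained for the entire read, which the article does not claim.

```python
CAPACITY_TB = 245       # stated single-drive capacity
READ_GB_PER_S = 14.7    # stated read bandwidth

def full_read_seconds(capacity_tb: float, bandwidth_gb_s: float) -> float:
    """Seconds to read the entire drive once (assumes 1 TB = 1000 GB)."""
    return capacity_tb * 1000 / bandwidth_gb_s

seconds = full_read_seconds(CAPACITY_TB, READ_GB_PER_S)
print(f"Full-drive read: {seconds:,.0f} s ({seconds / 3600:.2f} hours)")
```

Under those assumptions a single pass over the drive takes a bit over four and a half hours, so for cluster training the drive is a capacity tier, not something that gets scanned end to end on every step.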

Huawei promotes this model as well-suited for handling massive multimodal datasets in cluster training, although practical adoption at scale will depend on integration with existing systems.

Beyond hardware, Huawei also introduced DiskBooster, a driver said to expand pooled memory capacity twentyfold by coordinating AI SSDs with both HBM and DDR.

The company also highlighted multi-stream technology aimed at reducing write amplification, which could extend drive longevity.
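Write amplification is usually expressed as a factor (WAF): bytes physically written to NAND divided by bytes the host asked to write. Multi-stream approaches generally try to lower it by grouping data with similar lifetimes so garbage collection has less valid data to relocate. The sketch below shows why a lower WAF extends drive life; the endurance budget and both WAF values are hypothetical and are not Huawei figures.

```python
def write_amplification(nand_bytes: float, host_bytes: float) -> float:
    """WAF = bytes written to NAND / bytes written by the host."""
    return nand_bytes / host_bytes

def host_write_budget_tb(nand_endurance_tb: float, waf: float) -> float:
    """Host-visible write budget for a fixed NAND endurance budget."""
    return nand_endurance_tb / waf

# Hypothetical numbers chosen only to illustrate the relationship.
NAND_ENDURANCE_TB = 10_000
scenarios = [("mixed streams", 3.0), ("separated streams", 1.5)]

for label, waf in scenarios:
    budget = host_write_budget_tb(NAND_ENDURANCE_TB, waf)
    print(f"{label}: WAF {waf} -> {budget:,.0f} TB of host writes")
```

Halving the WAF doubles the amount of host data the same NAND can absorb, which is the mechanism behind the "could extend drive longevity" claim.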

These software-driven optimizations may provide more flexibility for AI workloads, but actual gains will depend on how well applications adopt and support them.

Huawei’s latest SSDs are an attempt to mitigate the impact of the United States’ continued tightening of controls on advanced HBM chips, which has left Chinese firms with limited access.

The company aims to reduce China’s dependence on imported HBM chips by emphasizing domestic NAND flash and shifting value toward SSD technology.

Still, whether these new drives can truly offset HBM constraints in LLM training remains uncertain.

Huawei’s solution does not replace HBM; it is only a partial alternative. The company argues, however, that high-capacity solid-state storage with specialized software can reduce demand for large amounts of costly memory.

This claim remains to be fully tested, but the approach suggests a shift toward “system supplementing single points,” where different layers of storage balance out limitations in HBM.

Huawei released an AI SSD which uses a secret sauce to reduce the need for large amounts of expensive HBM

- Huawei shifts focus from HBM toward SSD capacity to manage AI loads

- The OceanDisk LC 560 boasts 245TB, the largest SSD ever made

- Huawei promotes DiskBooster software as a memory pooling solution across HBM and SSD

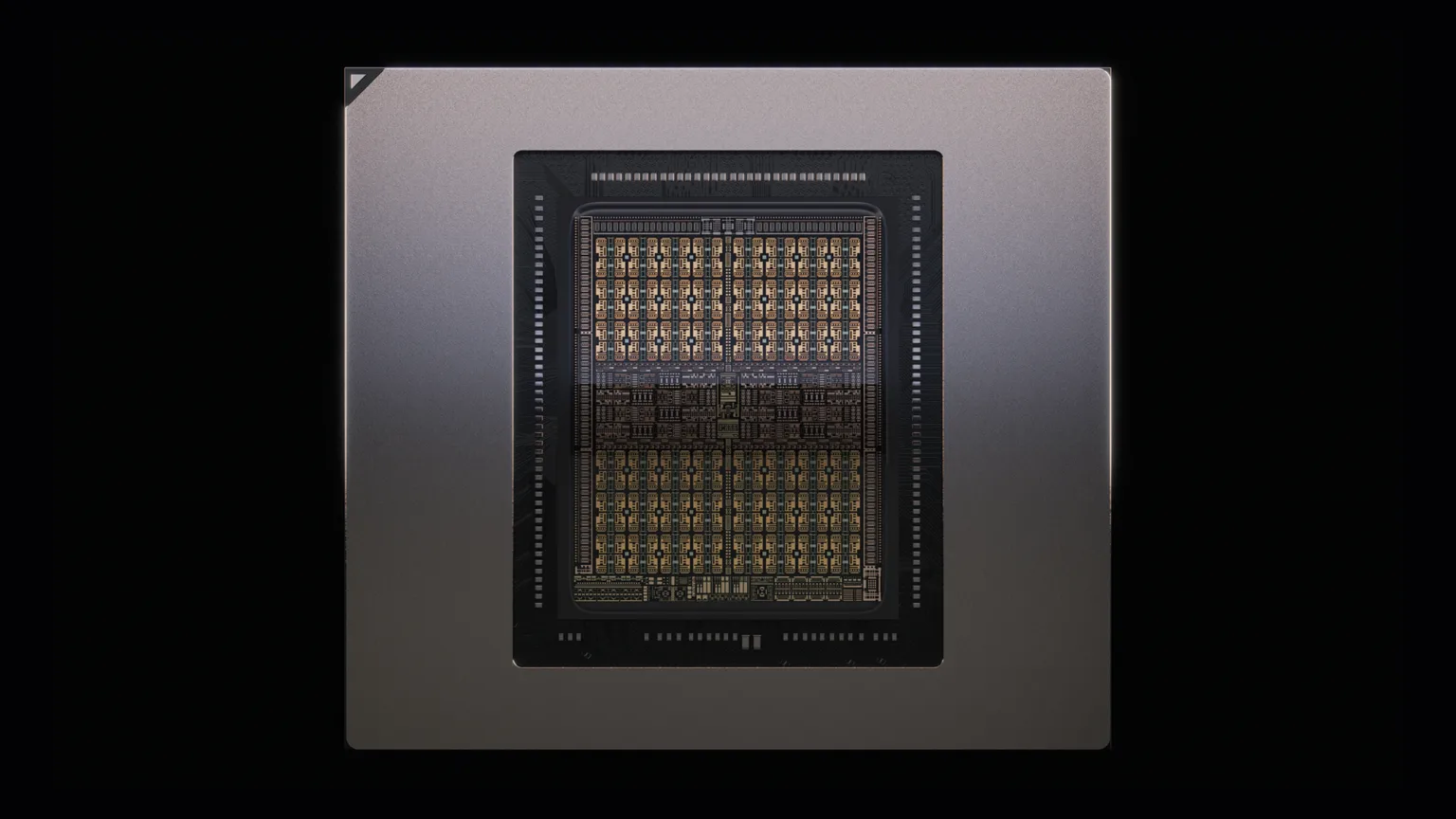

Huawei OceanDisk EX, SP, and LC 560 drives

"The increasingly severe 'memory wall' and 'capacity wall' have become key bottlenecks to AI training efficiency and user experience. This creates challenges for the performance and cost of IT infrastructure, affecting the positive AI business cycle," said Zhou Yuefeng, vice-president and head of Huawei's data storage product line.
