Electricity accounts 80% of BTC mining cost.Beyond a given point, lowering voltage further increases power consumption per operation.

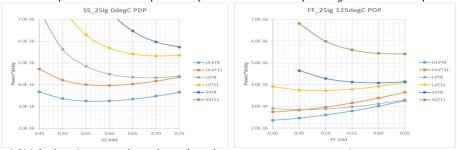

If you look at power-delay product, this tells you the energy needed to do something, Dynamic power (CV^2) drops with lower voltages (leakage less so) but so does clock speed, more rapidly as you get closer to the threshold voltages. For a given gate type (e.g. ELVT, ULVT, LVT, SVT) and clock speed (and activity percentage) there is a supply voltage where PDP reaches a minimum, and this where the power consumption is also a minimum -- as VDD drops you have to run slower but with more parallel circuits, which works for many things but not all. And if you're really bothered about power efficiency, you also need to vary VDD with process corner and temperature, and also circuit activity and clock speed.

For the circuits we've looked at in N3 and N2 which are relatively high activity (e.g. DSP, FEC...) the lowest PDP is usually with ELVT, but has never been as low as 0.225V -- for lower activity circuits where ELVT leakage is too high compared to dynamic power, ULVT can be better. But there's no single "best" answer (transistor type, voltage, frequency), it all depends on what the circuits are doing... ;-)

You can try a better solution and there's a lot of money to be made .

Intel was once a wantobe player.