Can somebody explain the major differences between 16nm and 10nm (other than the 6nm difference in the node name)?

I am told they are both double patterned. True or false?

Is there more interconnect available (tighter pitches at M3 and above, more upper routing layers, etc.)?

Higher GM?

Lower leakage?

More SRAM density?

New exotic memory?

Lower interconnect capacitance or resistance?

From this article:

https://semiengineering.com/new-patterning-options-emerging/

It seems that the major change is contacting the poly gate directly above the diffusion, which allows the PMOS and NMOS devices to sit closer together. In theory this makes the standard cells smaller, but will that translate into a denser P&R block? Won't the user need to lower the placement utilization % to route the standard cells together? How much smaller does the P&R block actually get?
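One rough way to frame the utilization question: if you assume block area is simply total standard-cell area divided by placement utilization (ignoring macros, IO, and other fixed overhead), then the net block shrink is the cell shrink multiplied by old utilization over new utilization. The Python sketch below is only an illustration under that assumption; the function names and the input numbers are hypothetical, not measured 16nm/10nm data.

```python
def block_area(cell_area: float, utilization: float) -> float:
    """Placed-block area under the simplifying assumption area = cell area / utilization."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return cell_area / utilization


def effective_block_scaling(cell_scale: float, util_old: float, util_new: float) -> float:
    """Ratio of new block area to old block area.

    cell_scale: new total cell area / old total cell area (0.6 means cells shrink 40%).
    util_old, util_new: placement utilization before and after the shrink.
    """
    return block_area(cell_scale, util_new) / block_area(1.0, util_old)


if __name__ == "__main__":
    # Hypothetical inputs: cells shrink to 60% of their old area, but utilization
    # drops from 80% to 70% because the denser cells are harder to route.
    ratio = effective_block_scaling(0.6, 0.80, 0.70)
    print(f"block area ratio: {ratio:.3f}")
```

With those made-up inputs it prints a ratio of about 0.686, so a 40% cell-level shrink becomes roughly a 31% block-level shrink. The real answer depends on the router, the cell library, and the design itself.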

I assume the big savings is in SRAM?

Is there an exotic RAM involved that gets the huge size benefit?

Is Altera's FPGA fabric much tighter?

Is there a cost-per-FET benefit?

Was the change worth it?

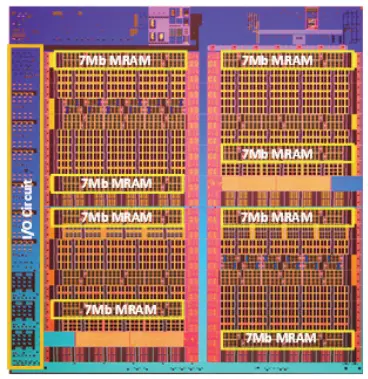